A Large Arabic Broadcast News Speech Data Collection

Total pages: 16

File Type:pdf, Size:1020Kb


Recommended publications


Satellite Systems

Chapter 18: REST-OF-WORLD (ROW) SATELLITE SYSTEMS

For the longest time, space exploration was an exclusive club comprised of only two members, the United States and the former Soviet Union. That has now changed due to a number of factors, among the more dominant being economics, advanced and improved technologies and national imperatives. Today, the number of nations with space programs has risen to over 40 and will continue to grow as the costs of spacelift and technology continue to decrease.

RUSSIAN SATELLITE SYSTEMS

The satellite section of the Russian space program continues to be predominantly government in character, with most satellites dedicated either to civil/military applications (such as communications and meteorology) or exclusive military missions (such as reconnaissance and targeting). A large portion of the Russian space program is kept running by launch services, boosters and launch sites, paid for by foreign commercial companies. The most obvious change in Russian space activity in recent years has been the decrease in space launches and corresponding payloads. Many of these launches are for foreign payloads, not Russian.

In the post-Soviet era, Russia continues its efforts to improve both its military and commercial space capabilities. These enhancements encompass both orbital assets and ground-based space support facilities. Russia has done some restructuring of its operating principles regarding space. While these efforts have attempted not to detract from space-based support to military missions, economic issues and costs have led to a lowering of Russian space-based capabilities in both orbital assets and ground station capabilities. The influence of Glasnost on Russia's space programs has been significant, but public announcements regarding space

1. INTRODUCTION 2. EASY INSTALLATION GUIDE 8. Explain How to Download S/W by USB and How to Upload and Download 9. HOW to DOWNLO

1. INTRODUCTION: Overview; Main Features
2. EASY INSTALLATION GUIDE
3. SAFETY INSTRUCTIONS
4. CHECK POINTS BEFORE USE: Accessories; Satellite Dish
5. CONTROLS/FUNCTIONS: Front/Rear Panel; Remote Controller; Front Display
6. EQUIPMENT CONNECTION: Connection with Antenna / TV Set / A/V System
7. OPERATION: Getting Started; System Settings; Edit Channels; EPG; CAM (Common Interface Module) Only; CAS (Conditional Access System); USB Menu; PVR Menu
8. How to download software by USB and how to upload and download channels by USB
9. HOW TO DOWNLOAD SOFTWARE FROM PC TO RECEIVER
10. Troubleshooting
11. Specifications
12. Glossary of Terms

1. INTRODUCTION

OVERVIEW

This combo receiver is designed for both free-to-air and encrypted channel reception. Enjoy the rich choice of more than 20,000 different channels, broadcasting a large range of culture, sports, cinema, news, events, etc. This receiver is a technical masterpiece, assembled from the highest-quality electronic parts.

MAIN FEATURES

• High Definition Tuners: DVB-S/DVB-S2 Satellite & DVB-T Terrestrial Compliant
• DVB-S/DVB-S2 Satellite Compliant (MPEG-II/MPEG-IV/H.264)

1998 Year in Review

Associate Administrator for Commercial Space Transportation (AST), January 1999

COMMERCIAL SPACE TRANSPORTATION: 1998 YEAR IN REVIEW

Cover Photo Credits (from left):

International Launch Services (1998). Image is of the Atlas 2AS launch on June 18, 1998, from Cape Canaveral Air Station. It successfully orbited the Intelsat 805 communications satellite for Intelsat.

Boeing Corporation (1998). Image is of the Delta 2 7920 launch on September 8, 1998, from Vandenberg Air Force Base. It successfully orbited five Iridium communications satellites for Iridium LLP.

Lockheed Martin Corporation (1998). Image is of the Athena 2 awaiting its maiden launch on January 6, 1998, from Spaceport Florida. It successfully deployed the NASA Lunar Prospector.

Orbital Sciences Corporation (1998). Image is of the Taurus 1 launch from Vandenberg Air Force Base on February 10, 1998. It successfully orbited the Geosat Follow-On 1 military remote sensing satellite for the Department of Defense, two Orbcomm satellites and the Celestis 2 funerary payload for Celestis Corporation.

Orbital Sciences Corporation (1998). Image is of the Pegasus XL launch on December 5, 1998, from Vandenberg Air Force Base. It successfully orbited the Sub-millimeter Wave Astronomy Satellite for the Smithsonian Astrophysical Observatory.

INTRODUCTION

In 1998, U.S. launch service providers conducted 22 launches licensed by the Federal Aviation Administration (FAA), an increase of 29 percent over the 17 launches conducted in 1997. Of these 22, 17 were for commercial or international customers, resulting in a 47 percent share of the ...

In addition, 1998 saw continuing demand for launches to deploy the world's first low Earth orbit (LEO) communication systems. In 1998, there were 17 commercial launches to LEO, 14 of which were for the Iridium, Globalstar, and Orbcomm LEO communications constellations.

Annette Froehlich ·André Siebrits Volume 1: a Primary Needs

Studies in Space Policy

Annette Froehlich · André Siebrits

Space Supporting Africa, Volume 1: A Primary Needs Approach and Africa's Emerging Space Middle Powers

Studies in Space Policy, Volume 20

Series Editor: European Space Policy Institute, Vienna, Austria

Editorial Advisory Board: Genevieve Fioraso, Gerd Gruppe, Pavel Kabat, Sergio Marchisio, Dominique Tilmans, Ene Ergma, Ingolf Schädler, Gilles Maquet, Jaime Silva

Edited by: European Space Policy Institute, Vienna, Austria. Director: Jean-Jacques Tortora

The use of outer space is of growing strategic and technological relevance. The development of robotic exploration to distant planets and bodies across the solar system, as well as pioneering human space exploration in earth orbit and of the moon, paved the way for ambitious long-term space exploration. Today, space exploration goes far beyond a merely technological endeavour, as its further development will have a tremendous social, cultural and economic impact. Space activities are entering an era in which contributions of the humanities (history, philosophy, anthropology), the arts, and the social sciences (political science, economics, law) will become crucial for the future of space exploration. Space policy thus will gain in visibility and relevance. The series Studies in Space Policy shall become the European reference compilation edited by the leading institute in the field, the European Space Policy Institute. It will contain both monographs and collections dealing with their subjects in a transdisciplinary way. More information about this

Classification of Geosynchronous Objects

esoc, European Space Operations Centre, Robert-Bosch-Strasse 5, D-64293 Darmstadt, Germany. T +49 (0)6151 900. www.esa.int

CLASSIFICATION OF GEOSYNCHRONOUS OBJECTS
Produced with the DISCOS Database
Prepared by: T. Flohrer & S. Frey
Reference: GEN-DB-LOG-00195-OPS-GR
Issue 18, Revision 0
Date of Issue: 3 June 2016
Status: ISSUED
Document Type: TN
European Space Agency / Agence spatiale européenne

Abstract

This is a status report on geosynchronous objects as of 1 January 2016. Based on orbital data in ESA's DISCOS database and on orbital data provided by KIAM the situation near the geostationary ring is analysed. From 1434 objects for which orbital data are available (of which 2 are outdated, i.e. the last available state dates back to 180 or more days before the reference date), 471 are actively controlled, 747 are drifting above, below or through GEO, 190 are in a libration orbit and 15 are in a highly inclined orbit. For 11 objects the status could not be determined. Furthermore, there are 50 uncontrolled objects without orbital data (of which 44 have not been catalogued). Thus the total number of known objects in the geostationary region is 1484. In issue 18 the previously used definition of "near the geostationary ring" has been slightly adapted.

If you detect any error or if you have any comment or question please contact: Tim Flohrer, PhD, European Space Agency, European Space Operations Center, Space Debris Office (OPS-GR), Robert-Bosch-Str. 5, 64293 Darmstadt, Germany. Tel.: +49-6151-903058. E-mail: tim.fl[email protected]

Table of contents
1 Introduction
2 Sources
2.1 USSTRATCOM Two-Line Elements (TLEs)

Trends in Space Commerce

Foreword from the Secretary of Commerce

As the United States seeks opportunities to expand our economy, commercial use of space resources continues to increase in importance. The use of space as a platform for increasing the benefits of our technological evolution continues to increase in a way that profoundly affects us all. Whether we use these resources to synchronize communications networks, to improve agriculture through precision farming assisted by imagery and positioning data from satellites, or to receive entertainment from direct-to-home satellite transmissions, commercial space is an increasingly large and important part of our economy and our information infrastructure. Once dominated by government investment, commercial interests play an increasing role in the space industry.

As the voice of industry within the U.S. Government, the Department of Commerce plays a critical role in commercial space. Through the National Oceanic and Atmospheric Administration, the Department of Commerce licenses the operation of commercial remote sensing satellites. Through the International Trade Administration, the Department of Commerce seeks to improve U.S. industrial exports in the global space market. Through the National Telecommunications and Information Administration, the Department of Commerce assists in the coordination of the radio spectrum used by satellites. And, through the Technology Administration's Office of Space Commercialization, the Department of Commerce plays a central role in the management of the Global Positioning System and advocates the views of industry within U.S. Government policy making processes.

I am pleased to commend for your review the Office of Space Commercialization's most recent publication, Trends in Space Commerce. The report presents a snapshot of U.S.

2013 Commercial Space Transportation Forecasts

Federal Aviation Administration

2013 Commercial Space Transportation Forecasts

May 2013

FAA Commercial Space Transportation (AST) and the Commercial Space Transportation Advisory Committee (COMSTAC)

About the FAA Office of Commercial Space Transportation

The Federal Aviation Administration's Office of Commercial Space Transportation (FAA AST) licenses and regulates U.S. commercial space launch and reentry activity, as well as the operation of non-federal launch and reentry sites, as authorized by Executive Order 12465 and Title 51 United States Code, Subtitle V, Chapter 509 (formerly the Commercial Space Launch Act). FAA AST's mission is to ensure public health and safety and the safety of property while protecting the national security and foreign policy interests of the United States during commercial launch and reentry operations. In addition, FAA AST is directed to encourage, facilitate, and promote commercial space launches and reentries. Additional information concerning commercial space transportation can be found on FAA AST's website: http://www.faa.gov/go/ast

Cover: The Orbital Sciences Corporation's Antares rocket is seen as it launches from Pad-0A of the Mid-Atlantic Regional Spaceport at the NASA Wallops Flight Facility in Virginia, Sunday, April 21, 2013. Image Credit: NASA/Bill Ingalls

NOTICE: Use of trade names or names of manufacturers in this document does not constitute an official endorsement of such products or manufacturers, either expressed or implied, by the Federal Aviation Administration.

Table of Contents
EXECUTIVE SUMMARY
COMSTAC 2013 COMMERCIAL GEOSYNCHRONOUS ORBIT LAUNCH DEMAND FORECAST

Changes to the Database for May 1, 2021 Release This Version of the Database Includes Launches Through April 30, 2021

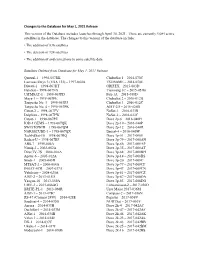

Changes to the Database for May 1, 2021 Release

This version of the Database includes launches through April 30, 2021. There are currently 4,084 active satellites in the database. The changes to this version of the database include:

• The addition of 836 satellites
• The deletion of 124 satellites
• The addition of and corrections to some satellite data

Satellites Deleted from Database for May 1, 2021 Release

Quetzal-1 – 1998-057RK | ChubuSat 1 – 2014-070C
Lacrosse/Onyx 3 (USA 133) – 1997-064A | TSUBAME – 2014-070E
Diwata-1 – 1998-067HT | GRIFEX – 2015-003D
HaloSat – 1998-067NX | Tianwang 1C – 2015-051B
UiTMSAT-1 – 1998-067PD | Fox-1A – 2015-058D
Maya-1 – 1998-067PE | ChubuSat 2 – 2016-012B
Tanyusha No. 3 – 1998-067PJ | ChubuSat 3 – 2016-012C
Tanyusha No. 4 – 1998-067PK | AIST-2D – 2016-026B
Catsat-2 – 1998-067PV | ÑuSat-1 – 2016-033B
Delphini – 1998-067PW | ÑuSat-2 – 2016-033C
Catsat-1 – 1998-067PZ | Dove 2p-6 – 2016-040H
IOD-1 GEMS – 1998-067QK | Dove 2p-10 – 2016-040P
SWIATOWID – 1998-067QM | Dove 2p-12 – 2016-040R
NARSSCUBE-1 – 1998-067QX | Beesat-4 – 2016-040W
TechEdSat-10 – 1998-067RQ | Dove 3p-51 – 2017-008E
Radsat-U – 1998-067RF | Dove 3p-79 – 2017-008AN
ABS-7 – 1999-046A | Dove 3p-86 – 2017-008AP
Nimiq-2 – 2002-062A | Dove 3p-35 – 2017-008AT
DirecTV-7S – 2004-016A | Dove 3p-68 – 2017-008BH
Apstar-6 – 2005-012A | Dove 3p-14 – 2017-008BS
Sinah-1 – 2005-043D | Dove 3p-20 – 2017-008C
MTSAT-2 – 2006-004A | Dove 3p-77 – 2017-008CF
INSAT-4CR – 2007-037A | Dove 3p-47 – 2017-008CN
Yubileiny – 2008-025A | Dove 3p-81 – 2017-008CZ
AIST-2 – 2013-015D | Dove 3p-87 – 2017-008DA
Yaogan-18

Abbreviations Bibliography Cur

The international trade in launch services: the effects of U.S. laws, policies and practices on its development
Fenema, H.P. van

Citation: Fenema, H. P. van. (1999, September 30). The international trade in launch services: the effects of U.S. laws, policies and practices on its development. H.P. van Fenema, Leiden. Retrieved from https://hdl.handle.net/1887/44957
Version: Not Applicable (or Unknown)
License: Licence agreement concerning inclusion of doctoral thesis in the Institutional Repository of the University of Leiden
Downloaded from: https://hdl.handle.net/1887/44957
Note: To cite this publication please use the final published version (if applicable).

Cover Page
The handle http://hdl.handle.net/1887/44957 holds various files of this Leiden University dissertation.
Author: Fenema, H.P. van
Title: The international trade in launch services: the effects of U.S. laws, policies and practices on its development
Issue Date: 1999-09-30

ABBREVIATIONS AND ACRONYMS

a fortiori = with greater reason
a l'Americaine = American-style
a.o. = among other (things)
AADC = Alaska Aerospace Development Corporation
ABM (Treaty) = Anti-Ballistic Missile (Treaty)
ACDA = Arms Control and Disarmament Agency
ad hoc = concerned with a particular end/purpose or formed/used for a specific or immediate problem/need
AECA = Arms Export Control Act
AFB = Air Force Base
AIA(A) = Aerospace Industries Association (of America)
ANPRM = Advance Notice of Proposed Rulemaking
APMT = Asia-Pacific Mobile Telecommunications
art. = article
artt. = articles
AST = Associate Administrator of Commercial Space Transportation
AW/ST = Aviation Week & Space Technology
bona fide = in good faith
BXA = Bureau of Export Administration
c.q.

Improving Access to Education Via Satellites in Africa: a Primer

Improving access to education via satellites in Africa: A primer (an overview of the opportunities afforded by satellite and other technologies in meeting educational and development needs)
Mathy Vanbuel

To cite this version: Mathy Vanbuel. Improving access to education via satellites in Africa: A primer (an overview of the opportunities afforded by satellite and other technologies in meeting educational and development needs). 2003. hal-00190216

HAL Id: hal-00190216
https://telearn.archives-ouvertes.fr/hal-00190216
Submitted on 23 Nov 2007

HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers.

Improving Access to Education via Satellites in Africa: A Primer

IMFUNDO (im-fun-doe) n. The acquisition of knowledge: the process of becoming educated. (From the Nguni languages of southern Africa.)

An overview of the opportunities afforded by satellite and other technologies in meeting educational and development needs

IMFUNDO Partnership for IT in Education, KnowledgeBank paper 1

Table of Contents
1.0 Executive Summary
2.0 Introduction
2.1.

This Primer was commissioned by Imfundo: Partnership in IT

Nilesat and Eutelsat Sign a New Strategic Agreement to Develop the 7° West Video Neighbourhood for the Middle East and North Africa

PR/16/09

NILESAT AND EUTELSAT SIGN A NEW STRATEGIC AGREEMENT TO DEVELOP THE 7° WEST VIDEO NEIGHBOURHOOD FOR THE MIDDLE EAST AND NORTH AFRICA

Paris, 24 March 2009

Nilesat, the Egyptian satellite company, and Eutelsat Communications (Euronext Paris: ETL) today announced the signature of a new strategic agreement to pursue the development of the 7 degrees West orbital position, which is used by both companies for satellite broadcasting across the Middle East, the Gulf countries and North Africa. This new phase of collaboration between Nilesat and Eutelsat follows the recent successful launch of Eutelsat's HOT BIRD™ 10 satellite, which will first be commercialised at 7 degrees West under the name ATLANTIC BIRD™ 4A.

Eutelsat has been partnering with Nilesat at 7 degrees West since July 2006, when its ATLANTIC BIRD™ 4 satellite was copositioned with the Nilesat 101 and Nilesat 102 satellites. This constellation of three satellites at a single neighbourhood currently comprises 39 transponders, which transmit more than 450 television channels to an audience of over 38 million homes.

The development of the shared neighbourhood will move into a new phase with the commercial entry into service later this month of ATLANTIC BIRD™ 4A at 7 degrees West, enabling Nilesat to boost through to 2019 the number of transponders it leases from Eutelsat. The collaboration between both operators will move into a third phase with the deployment in mid-2010 of the Nilesat 201 satellite procured by Nilesat. This will be followed in 2011 by the arrival of ATLANTIC BIRD™ 4R, which Eutelsat recently confirmed it will shortly procure.

In the name of God, the Most Gracious, the Most Merciful. Satellite Communications

In the name of God, the Most Gracious, the Most Merciful

SATELLITE COMMUNICATIONS - SERVICES AND APPLICATIONS
LECTURER: Dr. Lotfi Laadhar
Course for college staff, SATCOM - Services & Applications, June 2006

I INTRODUCTION
II SATCOM - PRINCIPLE
III TVSAT & VSAT
IV LINK DESIGN – SatMaster Pro

SATELLITE COMMUNICATION SYSTEMS
[1] Denis Roddy, Satellite Communications, 1996
[2] Donald C. Mead, Direct Broadcast Satellite Communications, 2000
[3] Tri T. Ha, Digital Satellite Communications, 1990

HISTORY
1957 – Launch of the first satellite, Sputnik 1
1962 – AT&T launched Telstar
1964, August 20 – International Telecommunications Satellite Organization (INTELSAT)
1976 – The ARABSAT organization was created
1977 – EUTELSAT was created
1979 – The International Maritime Organization sponsored the establishment of the International Maritime Satellite Organization (INMARSAT)
1984, 1985, 1988 – The French TELECOM satellites were launched (first generation)
1995 – HOTBIRD I was launched
1996 – The second generation of ARABSAT was launched
1999 – BADR-3 was launched to the 26° East orbital position, the same position as Arabsat 2A
2006, February 28 – Proton rocket fails in the ARABSAT 4A launch

INTELSAT FLEET

EUTELSAT FLEET
Satellite | Date | Duration
Eutelsat-1 F1, F2, F3, F4, F5 | 1981-1988 | 7 years
Eutelsat-2