Non-Uniform Time-Scaling of Carnatic Music Transients


Recommended publications

Comparative Analysis of the Melody in the Kṛti Śhri

Comparative analysis of the melody in the Kṛti Śri Mahāgaṇapatiravatumām with references to Saṅgīta Sampradāya Pradarśini of Subbarāma Dīkṣitar and Dīkṣita Kīrtana Mālā of A. Sundaram Ayyar. Pradeesh Balan, PhD-JRF Scholar (full-time), Department of Music, Queen Mary's College, Chennai – 600 004. Introduction: One of the indelible aspects of music, when it comes to passing it on to posterity, is the variation in renditions, mainly because the sources contain not only texts of songs and notations but also an oral inheritance. This can be broadly witnessed in the compositions of the composers of Carnatic Music. Variations generally occur in the text of the song, rāga, tāḷa, arrangement of the words within the tāḷa cycle and melodic framework, graha, melodic phrases and musical prosody [1]. The scholars who have written and published on Muttusvāmi Dīkṣitar and his accounts are named in chronological order as follows: Subbarāma Dīkṣitar; Kallidaikuricci Ananta Krishṇa Ayyar; Natarāja Sundaram Pillai; T. L. Venkatarāma Ayyar; Dr. V. Rāghavan; A. Sundaram Ayyar; R. Rangarāmānuja Ayyengār; Prof. P. Sāmbamūrthy. Dr. N. Rāmanāthan writes about Śri Māhādēva Ayyar of Kallidaikuricci thus: He is a student of Kallidaikuricci Vēdānta Bhāgavatar and, along with A. Anantakṛṣṇayyar (well known as Calcutta Anantakṛṣṇa Ayyar) and A. Sundaram Ayyar (of Mayilāpūr), has been instrumental in learning the compositions of Dīkṣitar from Śri Ambi Dīkṣita, son of Subbarāma Dīkṣita, and propagating them to the next generation [2]. Dr. V. Rāghavan (pp. 76-92), in his book Muttuswāmi Dīkṣitar, gives an index of Dīkṣitar's compositions which is divided into two sections. The former section lists the kṛti-s as notated in Saṅgīta Sampradāya Pradarśini of Subbarāma Dīkṣitar, while the other one lists almost an equal number of another set [1] Rāmanāthan N.

B.A. Western Music, School of Music and Fine Arts

B.A. Western Music Curriculum and Syllabus (Based on Choice Based Credit System), effective from the Academic Year 2018-2019. School of Music and Fine Arts. PROGRAM EDUCATIONAL OBJECTIVES (PEO) PEO1: Learn the fundamentals of the performance aspect of Western Classical Music from the basics to an advanced level in a gradual manner. PEO2: Learn the theoretical concepts of Western Classical music simultaneously along with honing practical skill. PEO3: Understand the historical evolution of Western Classical music through the various eras. PEO4: Develop an inquisitive mind to pursue further higher study and research in the field of Classical Art and publish research findings and innovations in seminars and journals. PEO5: Develop analytical, critical and innovative thinking skills, leadership qualities, and a good attitude, well prepared for lifelong learning and service to World Culture and Heritage. PROGRAM OUTCOME (PO) PO1: Understanding essentials of a performing art: Learning the rudiments of a Classical art and the various elements that go into the presentation of such an art. PO2: Developing theoretical knowledge: Learning the theory behind the practice of a performing art helps the learner become a holistic practitioner. PO3: Learning History and Culture: The contribution and patronage of various establishments, the background and evolution of Art. PO4: Allied Art forms: An overview of allied fields of art and exposure to World Music. PO5: Modern trends: Understanding the modern trends in Classical Arts and the contribution of revolutionaries of this century. PO6: Contribution to society: Applying knowledge learnt to teach students of future generations. PO7: Research and Further study: Encouraging further study and research into the field of Classical Art with focus on interdisciplinary study impacting society at large.

Transcription and Analysis of Ravi Shankar's Morning Love For

Louisiana State University LSU Digital Commons LSU Doctoral Dissertations Graduate School 2013 Transcription and analysis of Ravi Shankar's Morning Love for Western flute, sitar, tabla and tanpura Bethany Padgett Louisiana State University and Agricultural and Mechanical College, [email protected] Follow this and additional works at: https://digitalcommons.lsu.edu/gradschool_dissertations Part of the Music Commons Recommended Citation Padgett, Bethany, "Transcription and analysis of Ravi Shankar's Morning Love for Western flute, sitar, tabla and tanpura" (2013). LSU Doctoral Dissertations. 511. https://digitalcommons.lsu.edu/gradschool_dissertations/511 This Dissertation is brought to you for free and open access by the Graduate School at LSU Digital Commons. It has been accepted for inclusion in LSU Doctoral Dissertations by an authorized graduate school editor of LSU Digital Commons. For more information, please [email protected]. TRANSCRIPTION AND ANALYSIS OF RAVI SHANKAR’S MORNING LOVE FOR WESTERN FLUTE, SITAR, TABLA AND TANPURA A Written Document Submitted to the Graduate Faculty of the Louisiana State University and Agricultural and Mechanical College in partial fulfillment of the requirements for the degree of Doctor of Musical Arts in The School of Music by Bethany Padgett B.M., Western Michigan University, 2007 M.M., Illinois State University, 2010 August 2013 ACKNOWLEDGEMENTS I am entirely indebted to many individuals who have encouraged my musical endeavors and research and made this project and my degree possible. I would first and foremost like to thank Dr. Katherine Kemler, professor of flute at Louisiana State University. She has been more than I could have ever hoped for in an advisor and mentor for the past three years. -

301763 Communityconnections

White Paper, p. 2 White Paper The Connected Community: Local Governments as Partners in Citizen Engagement and Community Building James H. Svara and Janet Denhardt, Editors Arizona State University October 15, 2010 Reprinting or reuse of this document is subject to the “Fair Use” principal. Reproduction of any substantial portion of the document should include credit to the Alliance for Innovation. Cover Image, Barn Raising, Paul Pitt Used with permission of Beverly Kaye Gallery Art.Brut.com White Paper, p. 3 Table of Contents The Connected Community, James Svara and Janet Denhardt Preface, p. 4 Overview: Citizen Engagement, Why and How?, p. 5 Citizen Engagement Strategies, Approaches, and Examples, p. 28 References, p. 49 Essays General Discussions Cheryl Simrell King, “Citizen Engagement and Sustainability.” p. 52 John Clayton Thomas, “Citizen, Customer, Partner: Thinking about Local Governance with and for the Public.” p. 57 Mark D. Robbins and Bill Simonsen, “Citizen Participation: Goals and Methods.” p. 62 Utilizing the Internet and Social Media Thomas Bryer, “Across the Great Divide: Social Media and Networking for Citizen Engagement.” p. 73 Tina Nabatchi and Ines Mergel, “Participation 2.0: Using Internet and Social Media: Technologies to Promote Distributed Democracy and Create Digital Neighborhoods.” - p. 80 Nancy C. Roberts, “Open-Source, Web-Based Platforms for Public Engagement during Disasters.” p. 88 Service Delivery and Performance Measurement Kathe Callahan, “Next Wave of Performance Measurement: Citizen Engagement,” p. 95 Janet Woolum, “Citizen-Government Dialogue in Performance Measurement Cycle: Cases from Local Government.” p. 102 Arts Applications Arlene Goldbard, “The Art of Engagement: Creativity in the Service of Citizenship.” p. -

9753 Y21 Sy Music H2 Level for 2021

Music Singapore-Cambridge General Certificate of Education Advanced Level Higher 2 (2021) (Syllabus 9753) CONTENTS Page INTRODUCTION 2 AIMS 2 FRAMEWORK 2 WEIGHTING AND ASSESSMENT OF COMPONENTS 3 ASSESSMENT OBJECTIVES 3 DESCRIPTION OF COMPONENTS 4 DETAILS OF TOPICS 10 ASSESSMENT CRITERIA 14 NOTES FOR GUIDANCE 22 Singapore Examinations and Assessment Board MOE & UCLES 2019 1 9753 MUSIC GCE ADVANCED LEVEL H2 SYLLABUS (2021) INTRODUCTION This syllabus is designed to engage students in music listening, performing and composing, and recognises that each is an individual with his/her own musical inclinations. This syllabus is also underpinned by the understanding that an appreciation of the social, cultural and historical contexts of music is vital in giving meaning to its study, and developing an open and informed mind towards the multiplicities of musical practices. It aims to nurture students’ thinking skills and musical creativity by providing opportunities to discuss music-related issues, transfer learning and to make music. It provides a foundation for further study in music while endeavouring to develop a life-long interest in music. AIMS The aims of the syllabus are to: • Develop critical thinking and musical creativity • Develop advanced skills in communication, interpretation and perception in music • Deepen understanding of different musical traditions in their social, cultural and historical contexts • Provide the basis for an informed and life-long appreciation of music FRAMEWORK This syllabus approaches the study of Music through Music Studies and Music Making. It is designed for the music student who has a background in musical performance and theory. Music Studies cover a range of works from the Western Music tradition as well as prescribed topics from the Asian Music tradition. -

Computational Analysis of Audio Recordings and Music Scores for the Description and Discovery of Ottoman-Turkish Makam Music

Computational Analysis of Audio Recordings and Music Scores for the Description and Discovery of Ottoman-Turkish Makam Music. Sertan Şentürk. TESI DOCTORAL UPF / 2016. Director de la tesi: Dr. Xavier Serra Casals, Music Technology Group, Department of Information and Communication Technologies. Copyright © 2016 by Sertan Şentürk. http://compmusic.upf.edu/senturk2016thesis http://www.sertansenturk.com/phd-thesis Dissertation submitted to the Department of Information and Communication Technologies of Universitat Pompeu Fabra in partial fulfillment of the requirements for the degree of DOCTOR PER LA UNIVERSITAT POMPEU FABRA, with the mention of European Doctor. Licensed under Creative Commons Attribution - NonCommercial - NoDerivatives 4.0. You are free to share – to copy and redistribute the material in any medium or format under the following conditions: • Attribution – You must give appropriate credit, provide a link to the license, and indicate if changes were made. You may do so in any reasonable manner, but not in any way that suggests the licensor endorses you or your use. • Non-commercial – You may not use the material for commercial purposes. • No Derivative Works – If you remix, transform, or build upon the material, you may not distribute the modified material. Music Technology Group (http://mtg.upf.edu/), Department of Information and Communication Technologies (http://www.upf.edu/etic), Universitat Pompeu Fabra (http://www.upf.edu), Barcelona, Spain. The doctoral defense was held on .................. 2017 at Universitat Pompeu Fabra and scored as .................. Dr. Xavier Serra Casals, Thesis Supervisor, Universitat Pompeu Fabra (UPF), Barcelona, Spain. Dr. Gerhard Widmer, Thesis Committee Member, Johannes Kepler University, Linz, Austria. Dr.

The Microtones of Bharata's Natyashastra

The Microtones of Bharata’s Natyashastra. John Stephens. In about 200 CE, Bharata and Dattila penned closely related Sanskrit treatises on music in the Natyashastra and Dattilam, respectively (Ghosh 1951, lxv). Inside, they outline the essential intervals and scales of Gandharva music, a precursor to the Hindustani and Carnatic traditions. Their texts are the first known documents from south Asia that attempt to systematically describe a theory of intonation for musical instruments. At the core of their tonal structure is a series of twenty-two microtones, known as sruti-s [1]. The precise musical definition of the twenty-two sruti-s has been a topic of debate over the ages. In part, this is because Bharata does not explain his system in acoustically verifiable terms, a hurdle that all interpretations of his text ultimately confront. Some authors, such as Nazir Ali Jairazbhoy and Emmie te Nijenhuis, suggest that Bharata believed the sruti-s were even (twenty-two tone equal temperament, or 22-TET), though they were not in practice (Jairazbhoy 1975, 44; Nijenhuis 1974, 14–16). Jairazbhoy concludes that it is impossible to determine the exact nature of certain intervals in Bharata’s system, such as major thirds. Nijenhuis (1974, 16–19) takes a different approach, suggesting that the ancient scales were constructed using interval ratios 7:4 and 11:10, so as to closely approximate the neutral seconds and quarter tones produced by 22-TET. Prabhakar R. Bhandarkar (1912, 257–58) argues that Bharata’s 22-TET system was approximate and still implied the use of 3:2 perfect fifths and 5:4 major thirds.

An Overview of Hindustani Music in the Context of Computational Musicology

AN OVERVIEW OF HINDUSTANI MUSIC IN THE CONTEXT OF COMPUTATIONAL MUSICOLOGY. Suvarnalata Rao* and Preeti RaoŦ. * National Centre for the Performing Arts, Mumbai 400021, India. Ŧ Department of Electrical Engineering, Indian Institute of Technology Bombay, India. [email protected], [email protected] [This is an Author’s Original Manuscript of an Article whose final and definitive form, the Version of Record, has been published in the Journal of New Music Research, Volume 43, Issue 1, 31 Mar 2014, available online at: http://dx.doi.org/10.1080/09298215.2013.831109] ABSTRACT: With its origin in the Samveda, composed between 1500 - 900 BC, the art music of India has evolved through ages and come to be regarded as one of the oldest surviving music systems in the world today. This paper aims to provide an overview of the fundamentals governing Hindustani music (also known as North Indian music) as practiced today. The deliberation will mainly focus on the melodic aspect of music making and will attempt to provide a musicological base for the main features associated [...] …ludes any key changes, which are so characteristic of Western music. Indian film music is of course an exception to this norm as it freely uses Western instruments and techniques including harmonization, chords etc. Art music (sometimes inappropriately described as classical music) is one of the six categories of music that have flourished side by side: primitive, folk, religious, art, popular and confluence. The patently aesthetic intention of the art music sets it apart from other categories. It is governed by two main elements: raga and tala.

The Oral in Writing: Early Indian Musical Notations Richard Widdess

The Oral in Writing: Early Indian Musical Notations. Richard Widdess. Early Music, Vol. 24, No. 3, Early Music from Around the World (Aug., 1996), pp. 391-402+405. Stable URL: http://links.jstor.org/sici?sici=0306-1078%28199608%2924%3A3%3C391%3ATOIWEI%3E2.0.CO%3B2-8 Early Music is currently published by Oxford University Press. Your use of the JSTOR archive indicates your acceptance of JSTOR's Terms and Conditions of Use, available at http://www.jstor.org/about/terms.html. JSTOR's Terms and Conditions of Use provides, in part, that unless you have obtained prior permission, you may not download an entire issue of a journal or multiple copies of articles, and you may use content in the JSTOR archive only for your personal, non-commercial use. Please contact the publisher regarding any further use of this work. Publisher contact information may be obtained at http://www.jstor.org/journals/oup.html. Each copy of any part of a JSTOR transmission must contain the same copyright notice that appears on the screen or printed page of such transmission. The JSTOR Archive is a trusted digital repository providing for long-term preservation and access to leading academic journals and scholarly literature from around the world. The Archive is supported by libraries, scholarly societies, publishers, and foundations. It is an initiative of JSTOR, a not-for-profit organization with a mission to help the scholarly community take advantage of advances in technology. For more information regarding JSTOR, please contact [email protected]. http://www.jstor.org It is generally agreed that written musical notation plays a relatively insignificant part in the history and practice of music in South Asia.

The Classical Indian Just Intonation Tuning System

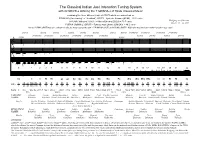

The Classical Indian Just Intonation Tuning System with 22 SRUTI-s defining the 7 SWARA-s of Hindu Classical Music, combining the three different kinds of SRUTI. Wolfgang von Schweinitz, October 20 - 22, 2006. The three kinds of SRUTI are understood as: PRAMANA ("measuring" or "standard") SRUTI = Syntonic Comma (81/80) = 21.5 cents; NYUNA ("deficient") SRUTI = Minor Chroma (25/24) = 70.7 cents; PURNA ("fulfilling") SRUTI = Pythagorean Limma (256/243) = 90.2 cents (where PURNA SRUTI may also, enharmonically, be interpreted as the sum of PRAMANA SRUTI and NYUNA SRUTI = Major Chroma (135/128 = 81/80 * 25/24) = 92.2 cents). [Chart: the sequence of purna, nyuna and pramana sruti steps across positions 0 to 22, labeled Sa ri ri Ri Ri ga ga Ga Ga ma ma Ma Ma Pa dha dha Dha Dha ni ni Ni Ni Sa, with a row of frequency ratios for each position; the staff notation and the ratio values are not recoverable from the extracted text.]
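
The sruti sizes quoted in this excerpt can be checked with a short calculation. The sketch below assumes only the standard definition of the cent (1200 times the base-2 logarithm of a frequency ratio); the function and constant names are illustrative and do not come from the source chart.

```python
import math
from fractions import Fraction


def cents(ratio):
    """Return the size of a frequency ratio in cents (1200 cents per octave)."""
    return 1200 * math.log2(float(ratio))


# Ratios as quoted in the excerpt; the names are illustrative labels only.
PRAMANA = Fraction(81, 80)     # syntonic comma
NYUNA = Fraction(25, 24)       # minor chroma
PURNA = Fraction(256, 243)     # Pythagorean limma
MAJOR_CHROMA = PRAMANA * NYUNA  # exact product of the two smaller sruti-s

for name, ratio in [
    ("pramana", PRAMANA),
    ("nyuna", NYUNA),
    ("purna", PURNA),
    ("major chroma", MAJOR_CHROMA),
]:
    print(f"{name}: {ratio} = {cents(ratio):.1f} cents")
```

Using exact fractions for the product confirms that 81/80 * 25/24 reduces to 135/128, and the roughly 2-cent gap between the limma and the major chroma is what the excerpt's "enharmonic" reading of the purna sruti glosses over.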

Classification of Melodic Motifs in Raga Music with Time-Series Matching

Classification of Melodic Motifs in Raga Music with Time-series Matching. Preeti Rao*, Joe Cheri Ross*, Kaustuv Kanti Ganguli*, Vedhas Pandit*, Vignesh Ishwar#, Ashwin Bellur#, Hema Murthy#. * Indian Institute of Technology Bombay. # Indian Institute of Technology Madras. This is an Author’s Original Manuscript of an Article whose final and definitive form, the Version of Record, has been published in the Journal of New Music Research, Volume 43, Issue 1, 31 Mar 2014, available online at: http://dx.doi.org/10.1080/09298215.2013.831109 Abstract: Ragas are characterized by their melodic motifs or catch phrases that constitute strong cues to the raga identity for both the performer and the listener, and therefore are of great interest in music retrieval and automatic transcription. While the characteristic phrases, or pakads, appear in written notation as a sequence of notes, musicological rules for interpretation of the phrase in performance in a manner that allows considerable creative expression, while not transgressing raga grammar, are not explicitly defined. In this work, machine learning methods are used on labeled databases of Hindustani and Carnatic vocal audio concerts to obtain phrase classification on manually segmented audio. Dynamic time warping and HMM based classification are applied on time series of detected pitch values used for the melodic representation of a phrase. Retrieval experiments on raga-characteristic phrases show promising results while providing interesting insights on the nature of variation in the surface realization of raga-characteristic motifs within and across concerts. Keywords: raga-characteristic phrases, melodic motifs, machine learning. 1 Introduction: Indian music is essentially melodic and monophonic (or more accurately, heterophonic) in nature.
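
As a rough illustration of the dynamic time warping mentioned in this abstract, the sketch below aligns two pitch contours and labels a query phrase by its nearest template. It is a textbook DTW with an absolute-difference local cost, not the authors' implementation; the function names, the template labels and the pitch values (in cents) are all hypothetical.

```python
def dtw_distance(a, b):
    """Classic DTW cost between two sequences, using |x - y| as local cost."""
    n, m = len(a), len(b)
    inf = float("inf")
    # cost[i][j] = best cumulative cost aligning a[:i] with b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            local = abs(a[i - 1] - b[j - 1])
            cost[i][j] = local + min(
                cost[i - 1][j],      # repeat b[j-1] against another a sample
                cost[i][j - 1],      # repeat a[i-1] against another b sample
                cost[i - 1][j - 1],  # advance both sequences
            )
    return cost[n][m]


def classify(query, templates):
    """Return the label of the template with the smallest DTW cost to query."""
    return min(templates, key=lambda label: dtw_distance(query, templates[label]))


# Hypothetical pitch contours in cents relative to the tonic.
templates = {
    "phrase_A": [0, 200, 400, 200],
    "phrase_B": [700, 500, 400, 500],
}
# A slower rendition of phrase_A: same shape, different timing.
query = [0, 0, 200, 200, 400, 400, 200]
print(classify(query, templates))
```

Because DTW lets one sample align with several samples of the other sequence, the stretched query still matches its template at zero cost, which is why the method suits phrases sung at varying speeds.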

A Historic Overview of Oriental Solmisation Systems Followed by an Inquiry Into the Current Use of Solmisation in Aural Training at South African Universities

A historic overview of oriental solmisation systems followed by an inquiry into the current use of solmisation in aural training at South African universities by Theunis Gabriël Louw (Student number: 13428330) Thesis presented in fulfilment of the requirements for the degree Magister Musicae at Stellenbosch University Department of Music Faculty of Arts and Social Sciences Supervisor: Mr Theo Herbst Co-supervisor: Prof. Hans Roosenschoon Date: December 2010 DECLARATION By submitting this thesis electronically, I declare that the entirety of the work contained therein is my own, original work, that I am the owner of the copyright thereof (unless to the extent explicitly otherwise stated) and that I have not previously in its entirety or in part submitted it for obtaining any qualification. Date: 1 November 2010 Copyright © 2010 Stellenbosch University All rights reserved i ABSTRACT Title: A historic overview of oriental solmisation systems followed by an inquiry into the current use of solmisation in aural training at South African universities Description: The purpose of the present study is twofold: I. In the first instance, it is aimed at promoting a better acquaintance with and a deeper understanding of the generally less well-known solmisation systems that have emerged within the oriental music sphere. In this regard a general definition of solmisation is provided, followed by a historic overview of indigenous solmisation systems that have been developed in China, Korea, Japan, India, Indonesia and the Arab world, thereby also confirming the status of solmisation as a truly global phenomenon. II. The second objective of the study was to investigate the current use of solmisation, and the Tonic Sol-fa system in particular, in aural training at South African universities.