Book of Proceedings

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Ethnicity, Education and Equality in Nepal

HIMALAYA, the Journal of the Association for Nepal and Himalayan Studies, Volume 36, Number 2, Article 6, December 2016. New Languages of Schooling: Ethnicity, Education and Equality in Nepal. Uma Pradhan, University of Oxford, [email protected]. Follow this and additional works at: https://digitalcommons.macalester.edu/himalaya

Recommended Citation: Pradhan, Uma. 2016. New Languages of Schooling: Ethnicity, Education and Equality in Nepal. HIMALAYA 36(2). Available at: https://digitalcommons.macalester.edu/himalaya/vol36/iss2/6

This work is licensed under a Creative Commons Attribution-Noncommercial 4.0 License. This Research Article is brought to you for free and open access by the DigitalCommons@Macalester College at DigitalCommons@Macalester College. It has been accepted for inclusion in HIMALAYA, the Journal of the Association for Nepal and Himalayan Studies by an authorized administrator of DigitalCommons@Macalester College. For more information, please contact [email protected].

Mother tongue education has remained a controversial issue in Nepal. Scholars, activists, and policy-makers have favored mother tongue education from the standpoint of social justice. Against these views, others have identified this effort as predominantly groupist in its orientation and not helpful in imagining a unified national community. Taking this contention as a point of inquiry, this paper explores the contested space of mother tongue education to understand the [...] this attempt to seek membership into multiple groups and display of apparently contradictory dynamics. On the one hand, the practices in these schools display inward-looking characteristics through the everyday use of mother tongue, the construction of unified ethnic identity, and cultural practices. On the other hand, outward-looking dynamics of making claims in the universal spaces of national education and public places could also be seen.

The Tatoeba Translation Challenge--Realistic Data Sets For

The Tatoeba Translation Challenge – Realistic Data Sets for Low Resource and Multilingual MT. Jörg Tiedemann, University of Helsinki, [email protected]. https://github.com/Helsinki-NLP/Tatoeba-Challenge

Abstract: This paper describes the development of a new benchmark for machine translation that provides training and test data for thousands of language pairs covering over 500 languages and tools for creating state-of-the-art translation models from that collection. The main goal is to trigger the development of open translation tools and models with a much broader coverage of the World’s languages. Using the package it is possible to work on realistic low-resource scenarios avoiding artificially reduced setups that are common when demonstrating zero-shot or few-shot learning. For the first time, this package provides a comprehensive collection of diverse data sets [...]

[...] most important point is to get away from artificial setups that only simulate low-resource scenarios or zero-shot translations. A lot of research is tested with multi-parallel data sets and high resource languages using data sets such as WIT3 (Cettolo et al., 2012) or Europarl (Koehn, 2005) simply reducing or taking away one language pair for arguing about the capabilities of learning translation with little or without explicit training data for the language pair in question (see, e.g., Firat et al. (2016a,b); Ha et al. (2016); Lakew et al. (2018)). Such a setup is, however, not realistic and most probably over-estimates the ability of transfer learning making claims that do not necessarily carry over towards real-world tasks.

Odisha Review, Vol. LXX No. 8, March 2014

ODISHA REVIEW, VOL. LXX NO. 8, MARCH - 2014. PRADEEP KUMAR JENA, I.A.S., Principal Secretary. PRAMOD KUMAR DAS, O.A.S.(SAG), Director. DR. LENIN MOHANTY, Editor. Editorial Assistance / Production Assistance: Bibhu Chandra Mishra, Debasis Pattnaik, Bikram Maharana, Sadhana Mishra. Cover Design & Illustration / D.T.P. & Design: Manas Ranjan Nayak, Hemanta Kumar Sahoo. Photo: Raju Singh, Manoranjan Mohanty.

The Odisha Review aims at disseminating knowledge and information concerning Odisha’s socio-economic development, art and culture. Views, records, statistics and information published in the Odisha Review are not necessarily those of the Government of Odisha. Published by Information & Public Relations Department, Government of Odisha, Bhubaneswar - 751001 and Printed at Odisha Government Press, Cuttack - 753010. For subscription and trade inquiry, please contact: Manager, Publications, Information & Public Relations Department, Loksampark Bhawan, Bhubaneswar - 751001. Five Rupees / Copy. E-mail: [email protected] Visit: http://odisha.gov.in Contact: 9937057528(M)

CONTENTS
Sri Krsna - Jagannath Consciousness : Vyasa - Jayadeva - Sarala Dasa (Dr. Satyabrata Das)
Good Governance
Classical Language : Odia (Subrat Kumar Prusty)
Language and Language Policy in India (Prof. Surya Narayan Misra)
Rise of the Odia Novel : 1897-1930 (Jitendra Narayan Patnaik)
Gangadhar Literature : A Bird’s Eye View (Jagabandhu Panda)
Medieval Odia Literature and Bhanja Dynasty (Dr. Sarat Chandra Rath)
The Evolution of Odia Language : An Introspection (Dr. Jyotirmati Samantaray)
Biju - The Greatest Odia in Living Memory (Rajkishore Mishra)
Binode Kanungo (1912-1990) - A Versatile Genius
Role of Maharaja Sriram Chandra Bhanj Deo in the Odia Language Movement (Harapriya Das Swain)
Odissi Vocal : A Unique Classical School (Kirtan Narayan Parhi)

The Journal of the Music Academy Madras Devoted to the Advancement of the Science and Art of Music

The Journal of Music Academy Madras, ISSN. 0970-3101. The Journal of The Music Academy Madras, Devoted to the Advancement of the Science and Art of Music. Vol. 89, 2018.

नाहं वसामि वैकुण्ठे न योगिहृदये रवौ । मद्भक्ताः यत्र गायन्ति तत्र तिष्ठामि नारद ॥

“I dwell not in Vaikunta, nor in the hearts of Yogins, not in the Sun; (but) where my Bhaktas sing, there be I, Narada !” Narada Bhakti Sutra

EDITOR: Sriram V.

Publication by THE MUSIC ACADEMY MADRAS: Sangita Sampradaya Pradarsini of Subbarama Dikshitar (Tamil): Part I, II & III each 150.00, Part – IV 50.00, Part – V 180.00; Sangita Sampradaya Pradarsini of Subbarama Dikshitar (English): Volume – I 750.00, Volume – II 900.00, Volume – III 900.00, Volume – IV 650.00, Volume – V 750.00, Appendix (A & B); Veena Seshannavin Uruppadigal (in Tamil) 250.00; Ragas of Sangita Saramrta – T.V. Subba Rao & Dr. S.R. Janakiraman (in English) 50.00; Lakshana Gitas – Dr. S.R. Janakiraman 50.00; The Chaturdandi Prakasika of Venkatamakhin (Sanskrit Text with supplement) 50.00; E Krishna Iyer Centenary Issue 25.00; Professor Sambamoorthy, the Visionary Musicologist 150.00 By Brahma; Raga Lakshanangal – Dr. S.R. Janakiraman (in Tamil) Volume – I, II & III each 150.00.

COMPUPRINT • 2811 6768. Published by N. Murali on behalf The Music Academy Madras at New No. 168, TTK Road, Royapettah, Chennai 600 014 and Printed by N. Subramanian at Sudarsan Graphics Offset Press, 14, Neelakanta Metha Street, T. Nagar, Chennai 600 014. Editor : V. Sriram.

Map by Steve Huffman; Data from World Language Mapping System

[Map label residue: country names and language names spilled from the map image, ranging from Svalbard, Greenland and Iceland across Europe to Kazakhstan and Mongolia (for example Norwegian, Swedish, Scottish Gaelic, Irish Gaelic, Standard German, Polish, Ukrainian, Russian). The labels do not form running text.]

The Maulana Who Loved Krishna

SPECIAL ARTICLE. The Maulana Who Loved Krishna. C M Naim.

This article reproduces, with English translations, the devotional poems written to the god Krishna by a maulana who was an active participant in the cultural, political and theological life of late colonial north India. Through this, the article gives a glimpse of an Islamicate literary and spiritual world which revelled in syncretism with its surrounding Hindu worlds; and which is under threat of obliteration, even as a memory, in the singular world of globalised Islam of the 21st century.

He was a true maverick. In 1908, when he was 20, he published an anonymous article in his modest Urdu journal Urdū-i-Mu’allā (Aligarh) – circulation 500 – which severely criticised the British colonial policy in Egypt regarding public education. The Indian authorities promptly charged him with “sedition”, and demanded the disclosure of the author’s name. He, however, took sole responsibility for what appeared in his journal and, consequently, spent a little over one year in rigorous imprisonment – held as a “C” class prisoner he had to hand-grind, jointly with another prisoner, one maund (37.3 kgs) of corn every day. The authorities also confiscated his printing press and his lovingly put together library that contained many precious manuscripts. In 1920, when the first Indian Communist Conference was held at Kanpur, he was one of the organising hosts and presented the welcome address. Some believe that it was on that occasion he gave India the slogan Inqilāb Zindabād as the equivalent to the international war cry of radicals: “Vive la Revolution” (Long Live The Revolution).

The Forms of Seeking Accepting and Denying Permissions in English and Awadhi Language

THE FORMS OF SEEKING ACCEPTING AND DENYING PERMISSIONS IN ENGLISH AND AWADHI LANGUAGE. A Thesis Submitted to the Department of English Education In Partial Fulfilment for the Masters of Education in English. Submitted by Jyoti Kaushal. Faculty of Education, Tribhuwan University, Kirtipur, Kathmandu, Nepal, 2018.

T.U. Reg. No.: 9-2-540-164-2010. Fourth Semester Examination Exam Roll No.: 28710094/072. Date of Approval Thesis Proposal: 18/12/2017. Date of Submission: 30/05/2018.

DECLARATION: I hereby declare that to the best of my knowledge this thesis is original; no part of it was earlier submitted for the candidate of research degree to any university. Date: ..…………………… Jyoti Kaushal

RECOMMENDATION FOR ACCEPTANCE: This is to certify that Miss Jyoti Kaushal has prepared this thesis entitled The Forms of Seeking, Accepting and Denying Permissions in English and Awadhi Language under my guidance and supervision. I recommend this thesis for acceptance. Date: ………………………… Mr. Raj Narayan Yadav, Reader, Department of English Education, Faculty of Education, TU, Kirtipur, Kathmandu, Nepal

APPROVAL FOR THE RESEARCH: This thesis has been recommended for evaluation from the following Research Guidance Committee: Dr. Prem Phyak, Lecturer & Head, Department of English Education, University Campus, T.U., Kirtipur (Chairperson); Mr. Raj Narayan Yadav (Supervisor), Reader, Department of English Education, University Campus, T.U., Kirtipur (Member); Mr. [...]

Library Stock.Pdf

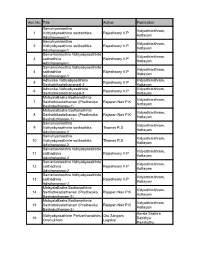

| Acc.No. | Title | Author | Publication |
|---|---|---|---|
| 1 | Samuhyashasthra Vidhyabyasathinte saithadhika Adisthanangal-1 | Rajeshwary V.P | Vidyarthimithram, Kottayam |
| 2 | Samuhyashasthra Vidhyabyasathinte saithadhika Adisthanangal-1 | Rajeshwary V.P | Vidyarthimithram, Kottayam |
| 3 | Samaniashasthra Vidhyabyasathinte saithadhika Adisthanangal-1 | Rajeshwary V.P | Vidyarthimithram, Kottayam |
| 4 | Samaniashasthra Vidhyabyasathinte saithadhika Adisthanangal-1 | Rajeshwary V.P | Vidyarthimithram, Kottayam |
| 5 | Adhunika Vidhyabyasathinte Saithathikadisthanangal-2 | Rajeshwary V.P | Vidyarthimithram, Kottayam |
| 6 | Adhunika Vidhyabyasathinte Saithathikadisthanangal-2 | Rajeshwary V.P | Vidyarthimithram, Kottayam |
| 7 | MalayalaBasha Bodhanathinte Saithathikadisthanam (Pradhesika Bashabothanam-1) | Rajapan Nair P.K | Vidyarthimithram, Kottayam |
| 8 | MalayalaBasha Bodhanathinte Saithathikadisthanam (Pradhesika Bashabothanam-1) | Rajapan Nair P.K | Vidyarthimithram, Kottayam |
| 9 | Samuhyashasthra Vidhyabyasathinte saithadhika Adisthanangal-2 | Thomas R.S | Vidyarthimithram, Kottayam |
| 10 | Samuhyashasthra Vidhyabyasathinte saithadhika Adisthanangal-2 | Thomas R.S | Vidyarthimithram, Kottayam |
| 11 | Samaniashasthra Vidhyabyasathinte saithadhika Adisthanangal-2 | Rajeshwary V.P | Vidyarthimithram, Kottayam |
| 12 | Samaniashasthra Vidhyabyasathinte saithadhika Adisthanangal-2 | Rajeshwary V.P | Vidyarthimithram, Kottayam |
| 13 | Samaniashasthra Vidhyabyasathinte saithadhika Adisthanangal-2 | Rajeshwary V.P | Vidyarthimithram, Kottayam |
| 14 | MalayalaBasha Bodhanathinte Saithathikadisthanam (Pradhesika Bashabothanam-2) | Rajapan Nair P.K | Vidyarthimithram, Kottayam |
| | MalayalaBasha [...] | | |

Brahmi Word Recognition by Supervised Techniques

Preprints (www.preprints.org) | NOT PEER-REVIEWED | Posted: 5 June 2020 | doi:10.20944/preprints202006.0048.v1

Brahmi word recognition by supervised techniques. Neha Gautam 1*, Soo See Chai 1, Sadia Afrin 2, Jais Jose 3. 1 Faculty of Computer Science and Information Technology, University Malaysia Sarawak; 2 Institute of Cognitive Science, Universität Osnabrück; 3 Amity University, Noida, India. *[email protected] [email protected]

Abstract: Significant progress has been made in pattern recognition technology. However, one obstacle that has not yet been overcome is the recognition of words in the Brahmi script, specifically the recognition of characters, compound characters, and words, because of their complex structure. For this kind of complex pattern recognition problem, it is always difficult to decide which feature extraction method and classifier would be the best choice. Moreover, different feature extraction methods and classifiers offer complementary information about the patterns to be classified. Therefore, combining feature extraction methods and classifiers in an intelligent way can be beneficial compared to using any single feature extraction method. This study proposes the combination of HOG + zonal density with SVM to recognize Brahmi words. Keeping these facts in mind, in this paper the information provided by structural and statistical features is combined using an SVM classifier for word-level script recognition from Brahmi word images. The Brahmi word dataset contains 6,475 and 536 images of Brahmi words of 170 classes for training and testing, respectively, and the database is made freely available. The word samples from this database are classified based on the confidence scores provided by the support vector machine (SVM) classifier, while HOG and zonal density are used to extract the features of the Brahmi words.

Unit 2: Indian Languages

UNIT 2 INDIAN LANGUAGES. Diploma in Elementary Education (D.El.Ed).

STRUCTURE
2.0 Introduction
2.1 Learning Objectives
2.2 Linguistic Diversity in India
2.2.1 A picture of India’s linguistic diversity
2.2.2 Language Families of India and India as a Linguistic Area
2.3 What does the Indian Constitution say about Languages?
2.4 Categories of Languages in India
2.4.1 Scheduled Languages
2.4.2 Regional Languages and Mother Tongues
2.4.3 Classical Languages
2.4.4 Is there a Difference between Language and Dialect?
2.5 Status of Hindi in India
2.6 Status of English in India
2.7 The Language Education Policy in India
Provisions of Various Committees and Commissions
Three Language Formula
National Curriculum Framework-2005
2.8 Let Us Sum Up
2.9 Suggested Readings and References
2.10 Unit-End Exercises

2.0 INTRODUCTION

You must have heard this song:
agrezi mein kehte hein- I love you
gujrati mein bole- tane prem karu chhuun
bangali mein kehte he- amii tumaake bhaalo baastiu
aur punjabi me kehte he- tere bin mar jaavaan, me tenuu pyar karna, tere jaiyo naiyo labnaa

Songs of this kind are only one manifestation of the diversity and fluidity of languages in India. We are sure you can think of many more instances where you notice a multiplicity of languages being used at the same place at the same time. Imagine a wedding in Delhi in a Telugu family where Hindi, Urdu, Dakkhini, Telugu, English and Sanskrit may all be used in the same event.

INCLUSIVE GOVERNANCE a Study on Participation and Representation After Federalization in Nepal

STATE OF INCLUSIVE GOVERNANCE: A Study on Participation and Representation after Federalization in Nepal. Central Department of Anthropology, Tribhuvan University, Kirtipur, Kathmandu, Nepal. Binod Pokharel, Meeta S. Pradhan.

SOSIN Research Team. Project Coordinator: Dr. Dambar Chemjong. Research Director: Dr. Mukta S. Tamang. Team Leaders: Dr. Yogendra B. Gurung, Dr. Binod Pokharel, Dr. Meeta S. Pradhan, Dr. Mukta S. Tamang. Team Members: Dr. Dhanendra V. Shakya, Mr. Mohan Khajum. Advisors/Reviewers: Dr. Manju Thapa Tuladhar, Mr. Prakash Gnyawali.

Copyright@2020 Central Department of Anthropology, Tribhuvan University. This study is made possible by the support of the American People through the United States Agency for International Development (USAID). The contents of this report are the sole responsibility of the authors and do not necessarily reflect the views of USAID or the United States Government or Tribhuvan University.

Published By Central Department of Anthropology (CDA), Tribhuvan University (TU), Kirtipur, Kathmandu, Nepal. Tel: + 977-0-4334832 Email: [email protected] Website: anthropologytu.edu.np. First Published: October 2020. 400 Copies.

Cataloguing in Publication Data: Pokharel, Binod. State of inclusive governance: a study on participation and representation after federalization in Nepal / Binod Pokharel, Meeta S. Pradhan.- Kirtipur : Central Department of Anthropology, Tribhuvan University, 2020. Xx, 164p. : Col. ill. ; Cm. ISBN 978-9937-0-7864-1 1.

Language Transliteration in Indian Languages – a Lexicon Parsing Approach

LANGUAGE TRANSLITERATION IN INDIAN LANGUAGES – A LEXICON PARSING APPROACH. Submitted by Jisha T.E., Assistant Professor, Department of Computer Science, Mary Matha Arts And Science College, Vemom P O, Mananthavady. A Minor Research Project Report Submitted to University Grants Commission SWRO, Bangalore.

ABSTRACT: Language, the ability to speak, write and communicate, is one of the most fundamental aspects of human behaviour. As the study of human languages developed, the concept of communicating with non-human devices was investigated. This is the origin of natural language processing (NLP). NLP is a subfield of Artificial Intelligence and Computational Linguistics that studies the problems of automated generation and understanding of natural human languages. A 'natural language' (NL) is any of the languages naturally used by humans, as opposed to an artificial or man-made language such as a programming language, and 'natural language processing' is a convenient description for all attempts to use computers to process natural language. The goal of the NLP group is to design and build software that will analyze, understand, and generate languages that humans use naturally, so that eventually you will be able to address your computer as though you were addressing another person. Research in NLP over the last 50 years has shown that various kinds of knowledge about language can be extracted by constructing formal models or theories. The tools of work in NLP are grammar formalisms, algorithms and data structures, formalisms for representing world knowledge, and reasoning mechanisms. Many of these have been taken from, and inherit results from, Computer Science, Artificial Intelligence, Linguistics, Logic, and Philosophy.