What Were You THINKING? Biases and Rational Decision Making

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

-

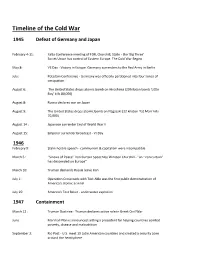

Timeline of the Cold War

1945: Defeat of Germany and Japan

- February 4-11: Yalta Conference, a meeting of FDR, Churchill and Stalin (the 'Big Three'). The Soviet Union has control of Eastern Europe. The Cold War begins.
- May 8: VE Day (Victory in Europe). Germany surrenders to the Red Army in Berlin.
- July: Potsdam Conference. Germany is officially partitioned into four zones of occupation.
- August 6: The United States drops an atomic bomb on Hiroshima (the 20 kiloton bomb 'Little Boy' kills 80,000).
- August 8: Russia declares war on Japan.
- August 9: The United States drops an atomic bomb on Nagasaki (the 22 kiloton 'Fat Man' kills 70,000).
- August 14: Japanese surrender. End of World War II.
- August 15: The Emperor's surrender broadcast (VJ Day).

1946

- February 9: Stalin's hostile speech declaring that communism and capitalism are incompatible.
- March 5: "Sinews of Peace" (Iron Curtain) speech by Winston Churchill: "an 'iron curtain' has descended on Europe".
- March 10: Truman demands that Russia leave Iran.
- July 1: Operation Crossroads, with Test Able, is the first public demonstration of America's atomic arsenal.
- July 25: America's Test Baker, an underwater explosion.

1947: Containment

- March 12: Truman Doctrine. Truman declares an active role in the Greek Civil War.
- June: The Marshall Plan is announced, setting a precedent for helping countries combat poverty, disease and malnutrition.
- September 2: Rio Pact. The U.S. meets with 19 Latin American countries and creates a security zone around the hemisphere.

1948: Containment

- February 25: Communist takeover in Czechoslovakia.
- March 2: Truman's Loyalty Program created to catch Cold War

Revision for Year 11 Mocks February 2018 General Information and Advice

You will be sitting TWO exams in February 2018. Paper 1 will be one hour long and will focus on your ability to work with sources and interpretations. Although it is mainly a skills test, you MUST learn the facts about the Nazis and Women and the Chain of Evacuation at the Western Front in order to be able to evaluate the usefulness of sources and consider how and why interpretations of the success of Nazi policies towards women differ. You need to revise: the Nazis and Women and the Chain of Evacuation at the Western Front; how to answer ‘how useful’ questions, ‘why interpretations differ’ questions and ‘how far do you agree’ questions. This paper is worth 30%. Paper 2 will be a test of your knowledge of The Superpowers course we studied before Christmas and the first section of the Early Elizabethan course which we have been studying since Christmas. You will be familiar with the exam format in Section A: 1) Consequences of an event; 2) Write a narrative analysing an event; 3) Explain the importance of TWO events. You need to revise: The Truman Doctrine and Marshall Aid; Berlin; The Soviet Invasion of Afghanistan. In Section B you will be asked the following types of question: 1) Describe two key features of…; 2) Explain why…; 3) How far do you agree with this statement. You need to revise: Elizabeth’s Religious Settlement; Mary Queen of Scots; the threats to Elizabeth from Catholics at home and abroad, e.g. France, Spain and the Pope; the plots against Elizabeth.

Thirteen Days Is the Story of Mankind's Closest Brush with Nuclear Armageddon

Helpful Background: Thirteen Days is the story of mankind's closest brush with nuclear Armageddon. Many events are portrayed exactly as they occurred. The movie captures the tension that the crisis provoked and provides an example of how foreign policy was made in the last half of the 20th century. Supplemented with the information provided in this Learning Guide, the film shows how wise leadership during the crisis saved the world from nuclear war, while mistakes and errors in judgment led to the crisis. The film is an excellent platform for debates about the Cuban Missile Crisis, nuclear weapons policy during the Cold War, and current foreign policy issues. With the corrections outlined in this Learning Guide, the movie can serve as a motivator and supplement for a unit on the Cold War. WERE WE REALLY THAT CLOSE TO NUCLEAR WAR? Yes. We were very, very close. As terrified as the world was in October 1962, not even the policy-makers had realized how close to disaster the situation really was. Kennedy thought that the likelihood of nuclear war was 1 in 3, but the administration did not know many things. For example, it believed that the missiles were not operational and that only 2,000-3,000 Soviet personnel were in place. Accordingly, the air strike was planned for the 30th, before any nuclear warheads could be installed. In 1991-92, Soviet officials revealed that 42 [missiles] had been in place and fully operational. These could obliterate US cities up to the Canadian border. These sites were guarded by 40,000 Soviet combat troops.

The Bay of Pigs: Lessons Learned Topic: the Bay of Pigs Invasion

The Bay of Pigs: Lessons Learned. Topic: The Bay of Pigs Invasion. Grade Level: 9-12. Subject Area: US History after World War II – History and Government. Time Required: One class period. Goals/Rationale: Students analyze President Kennedy’s April 20, 1961 speech to the American Society of Newspaper Editors in which he unapologetically frames the invasion as “useful lessons for us all to learn” with strong Cold War language. This analysis will help students better understand the Cold War context of the Bay of Pigs invasion, and evaluate how an effective speech can shift the focus from a failed action or policy towards a future goal. Essential Question: How can a public official address a failed policy or action in a positive way? Objectives: Students will be able to: Explain the US rationale for the Bay of Pigs invasion and the various ways the mission failed. Analyze the tone and content of JFK’s April 20, 1961 speech. Evaluate the methods JFK used in this speech to present the invasion in a more positive light. Connection to Curricula (Standards): National English Language Standards (NCTE) 1 - Students read a wide range of print and non-print texts to build an understanding of texts, of themselves, and of the cultures of the United States and the world; to acquire new information; to respond to the needs and demands of society and the workplace; and for personal fulfillment. Among these texts are fiction and nonfiction, classic and contemporary works. 3 - Students apply a wide range of strategies to comprehend, interpret, evaluate, and appreciate texts.

American Incursion Into Cuba: the Bay of Pigs

Western Oregon University Digital Commons@WOU Student Theses, Papers and Projects (History) Department of History 3-10-2004 American Incursion into Cuba: The Bay of Pigs Robert Moore Follow this and additional works at: https://digitalcommons.wou.edu/his Part of the Latin American History Commons, Military History Commons, and the United States History Commons AMERICAN INCURSION INTO CUBA: THE BAY OF PIGS Robert Moore March 10, 2004 HST 351 Dr. John Rector

The Cold War was a fearsome chapter in the history of the world, a time when everything hung on the decisions of a few men, the fate of mankind was balanced on the edge of a knife. From the end of the Second World War and up through the mid-eighties, the majority of American citizens would agree on this: communism was the enemy, and Communists wanted nothing more than to destroy the American way of life and erase all remnants of the free world. Even after the mentality of Senator Joseph McCarthy had died away from American politics, the idea of the United States and the Soviet Union being two titans locked in a mortal combat to determine the future of the human race lived on in the American public. For many people, this concept seemed natural; but it was understood that the communists would not fight fairly, that the U.S. would have to face the U.S.S.R. on the Soviet's terms. America needed a champion to lead the fight against the communist ideals of the Russians, a president that would not let the Soviets take any ground without paying for it dearly; Senator John F.

Operation Bumpy Road: the Role of Admiral Arleigh Burke and the U.S. Navy in the Bay of Pigs Invasion John P

Old Dominion University ODU Digital Commons History Theses & Dissertations History Winter 1988 Operation Bumpy Road: The Role of Admiral Arleigh Burke and the U.S. Navy in the Bay of Pigs Invasion John P. Madden Old Dominion University Follow this and additional works at: https://digitalcommons.odu.edu/history_etds Part of the United States History Commons Recommended Citation: Madden, John P. "Operation Bumpy Road: The Role of Admiral Arleigh Burke and the U.S. Navy in the Bay of Pigs Invasion" (1988). Master of Arts (MA), thesis, History, Old Dominion University, DOI: 10.25777/chem-m407 https://digitalcommons.odu.edu/history_etds/35 This Thesis is brought to you for free and open access by the History at ODU Digital Commons. It has been accepted for inclusion in History Theses & Dissertations by an authorized administrator of ODU Digital Commons. For more information, please contact [email protected]. OPERATION BUMPY ROAD: THE ROLE OF ADMIRAL ARLEIGH BURKE AND THE U.S. NAVY IN THE BAY OF PIGS INVASION by John P. Madden, B.A. June 1980, Clemson University. A Thesis Submitted to the Faculty of Old Dominion University in Partial Fulfilment of Requirements for the Degree of Master of Arts, History, Old Dominion University, December 1988. Approved by: Willard C. Frank, Jr. (Director). Reproduced with permission of the copyright owner. Further reproduction prohibited without permission. ABSTRACT OPERATION BUMPY ROAD: THE ROLE OF ADMIRAL ARLEIGH BURKE AND THE U.S. NAVY IN THE BAY OF PIGS INVASION John P. Madden, Old Dominion University. Director: Dr. Willard C. Frank, Jr. The Bay of Pigs invasion in April 1961 was a political and military fiasco.

The Cuban Missile Crisis and Its Effect on the Course of Détente

THE CUBAN MISSILE CRISIS AND ITS EFFECT ON THE COURSE OF DÉTENTE. Abstract: The Cold War between the United States and the Soviet Union began in 1945 with the end of World War II and the start of an international posturing for control of a war-torn Europe. However, the Cold War reached its peak during the events of the Cuban Missile Crisis, occurring on October 15-28, 1962, with the United States and the Soviet Union taking sides against each other in the interest of promoting their own national security. During this period, the Soviet Union attempted to address the issue of its own deficit of Intercontinental Ballistic Missiles compared to the United States by placing shorter-range nuclear missiles within Cuba, an allied Communist nation directly off the shores of the United States. This move allowed the Soviet Union to reach many of the United States’ largest population centers with nuclear weapons, placing both nations on a more equal footing in terms of security and status. The crisis was resolved through the imposition of a blockade by the United States, but the lasting threat of nuclear destruction remained. The daunting nature of this Crisis led to a period known as détente, which is a period of peace and increased negotiations between the United States and the Soviet Union in order to avoid future confrontations. Both nations prospered due to the increased cooperation that came about during this détente, though the United States’ and the Soviet Union’s rapidly changing leadership styles and the diverse personalities of both countries’ individual leaders led to fluctuations in the efficiency and extent of the adoption of détente.

Moral Masculinity: the Culture of Foreign Relations

MORAL MASCULINITY: THE CULTURE OF FOREIGN RELATIONS DURING THE KENNEDY ADMINISTRATION. DISSERTATION Presented in Partial Fulfillment of the Requirements for the Degree Doctor of Philosophy in the Graduate School of The Ohio State University By Jennifer Lynn Walton, B.A., M.A. The Ohio State University 2004. Dissertation Committee: Professor Michael J. Hogan, Adviser; Professor Peter L. Hahn; Professor Kevin Boyle. Approved by: Adviser, Department of History. Copyright by Jennifer Lynn Walton 2004. ABSTRACT The Kennedy administration of 1961-1963 was an era marked by increasing tension in U.S.-Soviet relations, culminating in the Cuban missile crisis of October 1962. This period provides a snapshot of the culture and politics of the Cold War. During the early 1960s, broader concerns about gender upheaval coincided with an administration that embraced a unique ideology of masculinity. Policymakers at the top levels of the Kennedy administration, including President John F. Kennedy, operated within a cultural framework best described as moral masculinity. Moral masculinity was the set of values or criteria by which Kennedy and his closest foreign policy advisors defined themselves as white American men. Drawing on these criteria justified their claims to power. The values they embraced included heroism, courage, vigor, responsibility, and maturity. Kennedy’s focus on civic virtue, sacrifice, and public service highlights the “moral” aspect of moral masculinity. To members of the Kennedy administration, these were moral virtues and duties and their moral fitness justified their fitness to serve in public office. Five key elements of moral masculinity played an important role in diplomatic crises during the Kennedy administration.

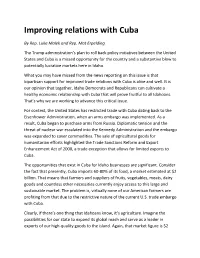

Improving Relations with Cuba

Improving relations with Cuba. By Rep. Luke Malek and Rep. Mat Erpelding. The Trump administration’s plan to roll back policy initiatives between the United States and Cuba is a missed opportunity for the country and a substantive blow to potentially lucrative markets here in Idaho. What you may have missed from the news reporting on this issue is that bipartisan support for improved trade relations with Cuba is alive and well. It is our opinion that together, Idaho Democrats and Republicans can cultivate a healthy economic relationship with Cuba that will prove fruitful to all Idahoans. That’s why we are working to advance this critical issue. For context, the United States has restricted trade with Cuba dating back to the Eisenhower Administration, when an arms embargo was implemented. As a result, Cuba began to purchase arms from Russia. Diplomatic tension and the threat of nuclear war escalated into the Kennedy Administration and the embargo was expanded to cover commodities. The sale of agricultural goods for humanitarian efforts highlighted the Trade Sanctions Reform and Export Enhancement Act of 2000, a trade exception that allows for limited exports to Cuba. The opportunities that exist in Cuba for Idaho businesses are significant. Consider the fact that presently, Cuba imports 60-80% of its food, a market estimated at $2 billion. That means that farmers and suppliers of fruits, vegetables, meats, dairy goods and countless other necessities currently enjoy access to this large and sustainable market. The problem is, virtually none of our American farmers are profiting from that due to the restrictive nature of the current U.S.

The Cuban Missile Crisis ‘Within the Past Week Unmistakable Evidence Has Established the Fact That a Series of Offensive Missile Sites Is Now in Preparation on [Cuba]

CHAPTER 4 THE CUBAN MISSILE CRISIS ‘Within the past week unmistakable evidence has established the fact that a series of offensive missile sites is now in preparation on [Cuba]. The purposes of these bases can be none other than to provide a nuclear strike capacity against the Western hemisphere … It shall be the policy of this nation to regard any nuclear missile launched from Cuba against any nation in the Western hemisphere as an attack by the Soviet Union on the United States, requiring a full retaliatory response upon the Soviet Union.’ PRESIDENT JOHN F. KENNEDY, 22 OCTOBER 1962

INTRODUCTION On 1 January 1959, left-wing rebels under the leadership of Fidel Castro seized control of Cuba. As Castro’s ideology and policies veered towards socialism, Castro drew the ire of the United States. Embargo, invasion and assassination attempts followed. Castro was forced to seek economic and military security from another world power: the Soviet Union. Soviet Premier Khrushchev decided to install nuclear missiles on Cuba to intimidate the United States. This was the catalyst for the Cuban Missile Crisis.

The Cuban Missile Crisis was an escalation in the tensions between the two superpowers, which one historian called the ‘most dangerous crisis of the Cold War.’ Only by standing on the edge of the abyss could the USA and USSR see that their rivalry had taken humanity to the brink of extinction. A period of détente followed, which saw greater communication between the two superpowers, and a tentative step towards placing limits on the most dangerous weapon mankind has ever devised.

US History Curriculum Map Unit 11: Cold War Enduring Themes

U.S. History Curriculum Map Unit 11: Cold War. Enduring Themes: Conflict and Change; Governance; Individuals, Groups, Institutions; Distribution of Power; Technological Change. Time Frame: 13 Days. Standards: SSUSH20 The student will analyze the domestic and international impact of the Cold War on the United States. a. Describe the creation of the Marshall Plan, U.S. commitment to Europe, the Truman Doctrine, and the origins and implications of the containment policy. b. Explain the impact of the new communist regime in China and the outbreak of the Korean War and how these events contributed to the rise of Senator Joseph McCarthy. c. Describe the Cuban Revolution, the Bay of Pigs, and the Cuban missile crisis. d. Describe the Vietnam War, the Tet Offensive, and growing opposition to the war. e. Explain the role of geography on the U.S. containment policy, the Korean War, the Bay of Pigs, the Cuban missile crisis, and the Vietnam War. SSUSH21d. Describe the impact of competition with the USSR as evidenced by the launch of Sputnik I and President Eisenhower’s actions. SSUSH24c. Analyze the anti-Vietnam War movement. (Introduce anti-war movement and revisit during Social Movements.) Unit Essential Question: How did the Cold War impact the U.S. domestically and internationally? Unit Resources: Unit 11 Vocabulary; Unit 11 Student Content Map; Unit 11 Study Guide; Unit 11 Sample Assessment Items. Concept 1: Origins of Cold War. Concept 2: Cuban Missile Crisis. Concept 3: Vietnam War. Concept 1: Origins of Cold War. Standard: SSUSH20. The student will analyze the domestic and international impact of the Cold War a.

Bay of Pigs Invasion

BAY OF PIGS INVASION The Bay of Pigs affair was an unsuccessful invasion of Cuba on April 17, 1961, at Playa Girón (the Bay of Pigs) by about two thousand Cubans who had gone into exile after the 1959 revolution. Encouraged by members of the CIA who trained them, the invaders believed they would have air and naval support from the United States and that the invasion would cause the people of Cuba to rise up and overthrow the regime of communist Fidel Castro. Neither expectation materialized, although unmarked planes from Florida bombed Cuban air bases prior to the invasion. Cuban army troops pinned down the exiles and forced them to surrender within seventy-two hours. At first, the State Department denied any direct links to the exiles. The true American role did not become public until a few days after the invasion. President Kennedy assumed full responsibility for what he admitted was a mistake. Nonetheless, he refused to negotiate a settlement of America's differences with the Castro regime. Before and after the invasion, the United States promoted the expulsion of Cuba from the Organization of American States, attempted an unsuccessful diplomatic quarantine, and stopped all Cuban exports from entering the United States. Economic and diplomatic estrangement remained American policy toward Communist Cuba for the indefinite future. Richard M. Nixon proposed it. Dwight D. Eisenhower planned it. Robert F. Kennedy championed it. John F. Kennedy approved it. The CIA carried it out. 1,197 invaders were captured. 200 of them had been soldiers in Batista's army (14 of those were wanted for murder in Cuba).