Handbook for UTC-5

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

The Journal of the Music Academy Madras Devoted to the Advancement of the Science and Art of Music

The Journal of the Music Academy Madras, Devoted to the Advancement of the Science and Art of Music. Vol. 89, 2018. ISSN 0970-3101. Editor: Sriram V. नाहं वसामि वैकुण्ठे न योगिहृदये रवौ। मद्भक्ताः यत्र गायन्ति तत्र तिष्ठामि नारद॥ “I dwell not in Vaikunta, nor in the hearts of Yogins, not in the Sun; (but) where my Bhaktas sing, there be I, Narada!” (Narada Bhakti Sutra). Publications by The Music Academy Madras: Sangita Sampradaya Pradarsini of Subbarama Dikshitar (Tamil), Part I, II & III each 150.00, Part IV 50.00, Part V 180.00; Sangita Sampradaya Pradarsini of Subbarama Dikshitar (English), Volume I 750.00, Volume II 900.00, Volume III 900.00, Volume IV 650.00, Volume V 750.00; Appendix (A & B); Veena Seshannavin Uruppadigal (in Tamil) 250.00; Ragas of Sangita Saramrta – T.V. Subba Rao & Dr. S.R. Janakiraman (in English) 50.00; Lakshana Gitas – Dr. S.R. Janakiraman 50.00; The Chaturdandi Prakasika of Venkatamakhin (Sanskrit Text with supplement) 50.00; E Krishna Iyer Centenary Issue 25.00; Professor Sambamoorthy, the Visionary Musicologist, by Brahma 150.00; Raga Lakshanangal – Dr. S.R. Janakiraman (in Tamil), Volume I, II & III each 150.00. COMPUPRINT • 2811 6768. Published by N. Murali on behalf of The Music Academy Madras at New No. 168, TTK Road, Royapettah, Chennai 600 014 and Printed by N. Subramanian at Sudarsan Graphics Offset Press, 14, Neelakanta Metha Street, T. Nagar, Chennai 600 014. Editor: V. Sriram. THE MUSIC ACADEMY MADRAS ISSN. -

Library Stock.Pdf

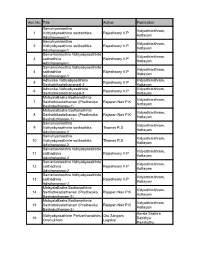

| Acc.No. | Title | Author | Publication |
|---|---|---|---|
| 1 | Samuhyashasthra Vidhyabyasathinte saithadhika Adisthanangal-1 | Rajeshwary V.P | Vidyarthimithram, Kottayam |
| 2 | Samuhyashasthra Vidhyabyasathinte saithadhika Adisthanangal-1 | Rajeshwary V.P | Vidyarthimithram, Kottayam |
| 3 | Samaniashasthra Vidhyabyasathinte saithadhika Adisthanangal-1 | Rajeshwary V.P | Vidyarthimithram, Kottayam |
| 4 | Samaniashasthra Vidhyabyasathinte saithadhika Adisthanangal-1 | Rajeshwary V.P | Vidyarthimithram, Kottayam |
| 5 | Adhunika Vidhyabyasathinte Saithathikadisthanangal-2 | Rajeshwary V.P | Vidyarthimithram, Kottayam |
| 6 | Adhunika Vidhyabyasathinte Saithathikadisthanangal-2 | Rajeshwary V.P | Vidyarthimithram, Kottayam |
| 7 | MalayalaBasha Bodhanathinte Saithathikadisthanam (Pradhesika Bashabothanam-1) | Rajapan Nair P.K | Vidyarthimithram, Kottayam |
| 8 | MalayalaBasha Bodhanathinte Saithathikadisthanam (Pradhesika Bashabothanam-1) | Rajapan Nair P.K | Vidyarthimithram, Kottayam |
| 9 | Samuhyashasthra Vidhyabyasathinte saithadhika Adisthanangal-2 | Thomas R.S | Vidyarthimithram, Kottayam |
| 10 | Samuhyashasthra Vidhyabyasathinte saithadhika Adisthanangal-2 | Thomas R.S | Vidyarthimithram, Kottayam |
| 11 | Samaniashasthra Vidhyabyasathinte saithadhika Adisthanangal-2 | Rajeshwary V.P | Vidyarthimithram, Kottayam |
| 12 | Samaniashasthra Vidhyabyasathinte saithadhika Adisthanangal-2 | Rajeshwary V.P | Vidyarthimithram, Kottayam |
| 13 | Samaniashasthra Vidhyabyasathinte saithadhika Adisthanangal-2 | Rajeshwary V.P | Vidyarthimithram, Kottayam |
| 14 | MalayalaBasha Bodhanathinte Saithathikadisthanam (Pradhesika Bashabothanam-2) | Rajapan Nair P.K | Vidyarthimithram, Kottayam |

MalayalaBasha -

Language Transliteration in Indian Languages – a Lexicon Parsing Approach

LANGUAGE TRANSLITERATION IN INDIAN LANGUAGES – A LEXICON PARSING APPROACH. Submitted by JISHA T.E., Assistant Professor, Department of Computer Science, Mary Matha Arts And Science College, Vemom P O, Mananthavady. A Minor Research Project Report Submitted to University Grants Commission SWRO, Bangalore. ABSTRACT: Language, the ability to speak, write and communicate, is one of the most fundamental aspects of human behaviour. As the study of human languages developed, the concept of communicating with non-human devices was investigated. This is the origin of natural language processing (NLP). NLP is a subfield of Artificial Intelligence and Computational Linguistics. It studies the problems of automated generation and understanding of natural human languages. A 'Natural Language' (NL) is any of the languages naturally used by humans. It is not an artificial or man-made language such as a programming language. 'Natural language processing' is a convenient description for all attempts to use computers to process natural language. The goal of the NLP group is to design and build software that will analyze, understand, and generate languages that humans use naturally, so that eventually you will be able to address your computer as though you were addressing another person. The finding of the last 50 years of research in the field of NLP is that various kinds of knowledge about a language can be extracted by constructing formal models or theories. The tools of work in NLP are grammar formalisms, algorithms and data structures, formalisms for representing world knowledge, and reasoning mechanisms. Many of these have been taken from, and inherit results from, Computer Science, Artificial Intelligence, Linguistics, Logic, and Philosophy. -

Stotras in Sanskrit, Tamil, Malayalam and Krithis Addressed To

Stotras in Sanskrit, Tamil, Malayalam and Krithis addressed to Guruvayurappan. This document has 10 Sanskrit stotras, 5 Tamil prayers, 56 Malayalam prayers and 8 Krithis. Table of Contents: 1. Stotras in Sanskrit, Tamil, Malayalam and Krithis addressed to Guruvayurappan; 2. Guruvayu puresa Pancha Rathnam (Sanskrit); 3. Sri Guruvatha pureesa stotram; 4. Sampoorna roga nivarana stotra of Guryvayurappan; 5. Solve all your problems by worshiping Lord Guruvayurappan; 6. Guruvayu puresa Bhujanga stotra; 7. Guruvayupuresa Suprabatham; 8. Gurvayurappan Suprabatham; 9. Guruvayurappan Pratha Smaranam; 10. Sri Guruvatha puresa Ashtotharam; 11. Sampoorna roga nivarana stotra of Guryvayurappan; 12. Srimad Narayaneeyamrutham (Tamil summary of Narayaneeyam) -

Madras- Cultural Tables, Part II-C (I), Vol-IX

PRG. 181-C (N) 1,500. CENSUS OF INDIA 1961, VOLUME IX, MADRAS, PART II-C-(I), CULTURAL TABLES. P. K. NAMBIAR, of the Indian Administrative Service, Superintendent of Census Operations, Madras. 1964. CONTENTS: Preface; Preliminary Note; Annexure I, Extract of instructions issued; Annexure II, Census Questionnaire 1871-1961; Annexure III, Census Population Record; Fly leaf to Table C-I; Table C-I, Family Composition of Sample Households; Fly leaf to Table C-II; Table C-II, Age and Marital Status; Fly leaf to Table C-III; Table C-III Part A, Age, Sex and Education in all Areas; Table C-III Part B, Age, Sex and Education in Urban areas only; Table C-III Part C, Age, Sex and Education in Rural areas only; Appendix to Table C-III, Data on Scientific and Technical Personnel; Union & State Tables 1 to 8; State Table I, Classification by each branch and sub-branch of Science or Technology; State Table II, Type of activity and marital status of persons in each branch of Science or Technology; State Table III, Year of obtaining post-graduate degrees in Physical Sciences and graduates and Doctorates in Engineering, Technology and selected branches of Physical Sciences; State Table IV, Persons holding degrees in Engineering & Technology and also holding Degrees in selected branches of Physical Science; State Table V, Nature and sector of employment of persons in each branch of Science or Technology; State Table VI, Total monthly income of persons employed in each branch of Science or Technology classified by sector of employment. -

Womens' Participation in Environment Protection Movement: a Study Of

Womens’ Participation in Environment Protection Movement: A Study of the Kairali Village in Wayanad District of Kerala. By Reena Joseph, Registration No: 14SU15533. Dissertation submitted in partial fulfilment of the requirements for the Degree of Master of Philosophy in Political Science, Department of Political Science, School of Social Sciences, Sikkim University, Sikkim, 2016. Declaration: I, Reena Joseph, hereby declare that the subject matter of this dissertation is the record of work done by me, that the contents of this dissertation did not form the basis for the award of any previous degree to me or, to the best of my knowledge, to anybody else, and that the dissertation has not been submitted by me for any research degree in any other university/institute. This is being submitted in partial fulfilment of the requirements for the degree of Master of Philosophy in the Department of Political Science, School of Social Sciences, Sikkim University. Name: Reena Joseph. Registration No.: 14SU15533. We recommend that this dissertation be placed before the examiners for evaluation. Head of the Department. Supervisor. Certificate: This is to certify that the dissertation entitled “Womens’ Participation in Environment Protection Movement: A Study of the Kairali Village in Wayanad District of Kerala” submitted to Sikkim University in partial fulfilment of the requirements for the degree of Master of Philosophy in Political Science is the result of bonafide research work carried out by Ms Reena Joseph under my guidance and supervision. No part of the dissertation has been submitted for any other degree, diploma, associateship and fellowship. All the assistance and help received during the course of the investigation have been duly acknowledged by her. -

Transliteration of Tamil to English for the Information Technology

Transliteration of Tamil to English for the Information Technology. ‘jAzhan’ Ramalingam Shanmugalingam (‘appuAcci’), San Diego CA 92131 USA. ABSTRACT: Transliteration, as I understand it, is a method by which one can read a text of one language in the writing system of another language, word for word. In other words, it is the transcription of a text in one language using the script of another language. However, the transliterated word or words may not sound the same as the original unless corresponding letters that sound the same are found in the transliterated language. 18 English letters have similar sounds in Tamil and can represent 18 of the basic Tamil letters. The remaining 13 basic Tamil letters need some 'sound connection'. That is why we have to devise a standard scheme that will also fit into modern technology. The ‘jAzhan’ Scheme, therefore, uses only 22 English letters of the QWERTY keyboard, without symbols or diacritical marks that mean different things to different people. Transliteration of Tamil has to fit the need for Tamil to be recognized as the only other known language comparable to the English language with a 26-letter keyboard. This dispels the notion that Tamil is a complicated language with 247 letters in its alphabet. In fact, there are only 31 basic Tamil letters, which fit into the 26-letter keyboard without any symbols or diacritical marks. Tamil is a unique language and belongs to the Dravidian family. Dr. Steven Pinker, the Peter de Florez Professor in the Department of Brain and Cognitive Sciences at the Massachusetts Institute of Technology, in “Words and Rules” places Dravidian language around 15,000 years ago in the Ancestry of Modern English Chart vide (http://www.mit.edu/~pinker/). -

Automatic Phonetic Transcription of Tamil in Roman Script

Proc. Indian Acad. Sci., Vol. 86 A, No. 6, December 1977, pp. 503-512, © Printed in India. Automatic phonetic transcription of Tamil in Roman script. E V KRISHNAMURTHY, Department of Applied Mathematics, Indian Institute of Science, Bangalore 560 012. MS received 14 February 1977; revised 20 June 1977. Abstract. Simple formalized rules are proposed for automatic phonetic transcription of Tamil words into Roman script. These rules are syntax-directed and require a one-symbol look-ahead facility and are hence easily automated in a digital computer. Some suggestions are also put forth for the linearization of Tamil script for handling these by modern machinery. Keywords. Automatic phonetic transcription; data representation; linearization of Tamil script; Roman script; Tamil script; transcription. 1. Introduction. It is well known that in the oldest of the Dravidian languages, Tamil, the pronunciation of the letters corresponding to the consonants க், ச், ட், த், ப், ற் (called Surds) and their consonanto-vowels varies considerably depending upon the sequence in which these letters are embedded (hence they may be called phonetically context-sensitive), e.g. there is only one set of consonanto-vowels (CV) corresponding to the four different sound-values in the words below; these are underlined. Na hai Ka t tu Pakhkhu Ponggal. In other words, there is a many-to-one mapping from sound-values to the Surd and a one-to-many mapping from the Surd to its sound-value. Accordingly, Tamil is not a strictly phonetic language (in relation to its writing system), unlike all other Indian languages. The advantage of not being strictly phonetic in this sense is rewarding, since there is an enormous reduction in the number of letters in the alphabet; the disadvantage, however, from the point of view of a non-native speaker is the difficulty in learning the actual pronunciation, which varies depending upon the context. -

Lost Word Is Lost World - a Study of Malayalam

Language in India www.languageinindia.com ISSN 1930-2940 Vol. 16:6 June 2016. Lost Word is Lost World - A Study of Malayalam. Dr. R. I. Prasanth. Abstract: This study attempts to present some of the salient features of the influence of other languages on Malayalam. Kerala people’s contact with other people groups from India and elsewhere led to many changes in vocabulary, and this enriched the language. In particular, the impact of Pali and Prakrit needs to be further explored. Key words: Influence on Malayalam, impact of contact on culture. Influence of Other Languages on Malayalam: Language originated from sound, script originated from pictures, expression from natural body movements and utensils from the figures seen. What is explored here is the history of Malayalam in transition, traced by finding certain words and how they functioned through the great passage of time. This is an attempt to find history from the words, script, expression and utensils left by a succession of people. There is always a living fossil left unmarked by the living time, and one can discover missing links there. It will be done by acknowledging the influence of the languages on its periphery. Writers on Malayalam commonly acknowledge the heavy influence of Tamil and Sanskrit but ignore the influence of Pali and Prakrit, which were the breath of life in Kerala from 3 BC to the 13th century AD. The Sinhala language influenced it much. Tulu had influenced it. So did Konkani. These will be discussed separately. Just like the highlands people and islands people, people from beyond the sea were also in contact with it throughout its history. -

Book of Proceedings

LREC 2018 Workshop WILDRE4 – 4th Workshop on Indian Language Data: Resources and Evaluation. PROCEEDINGS. Edited by Girish Nath Jha, Kalika Bali, Sobha L, Atul Kr. Ojha. ISBN: 979-10-95546-09-2, EAN: 9791095546092. 12 May 2018, Miyazaki, Japan. http://sanskrit.jnu.ac.in/conf/wildre4/index.jsp Acknowledgments: This work has received funding from Microsoft Research India. Organising Committee: Girish Nath Jha, Jawaharlal Nehru University, India; Kalika Bali, Microsoft Research India Lab, Bangalore; Sobha L, AU-KBC, Anna University. Workshop Manager: Atul Kr. Ojha, Jawaharlal Nehru University, India. Programme Committee: Adil Amin Kak, University of Kashmir, India; Anil Kumar Singh, IIT-BHU, India; Arul Mozhi, University of Hyderabad, India; Asif Iqbal, IIT-Patna, India; Bogdan Babych, University of Leeds, UK; Claudia Soria, CNR-ILC, Italy; Dafydd Gibbon, Universität Bielefeld, Germany; Delyth Prys, Bangor University, UK; Dipti Mishra Sharma, IIIT, Hyderabad, India; Diwakar Mishra, EZDI, Ahmedabad, India; Dorothee Beermann, NTN University (NTNU), Norway; Elizabeth Sherley, IITM-Kerala, Trivandrum, India; Esha Banerjee, Google, USA; Eveline Wandl-Vogt, Austrian Academy of Sciences, Austria; Georg Rehm, DFKI, Germany; Girish Nath Jha, Jawaharlal Nehru University, New Delhi, India; Hans Uszkoreit, DFKI, Berlin, Germany; Jan Odijk, Utrecht -

ICRAITSE-17) Organized by A.P.C

ICRAITSE – 17: International Conference on Recent Advancements in Information Technology, Science & Engineering. Thoothukudi, Tamil Nadu, 14th & 15th December, 2017. Organized at: A.P.C. Mahalaxmi College for Women, Ettayapuram Road, Thoothukudi. Published by: Institute For Engineering Research and Publication (IFERP). RUDRA BHANU SATAPATHY, Director, Institute for Engineering Research and Publication. We are extremely glad to welcome researchers as well as scholars from academics and industries to the “International Conference on Recent Advancements in Information Technology Science & Engineering” (ICRAITSE-17) organized by A.P.C. Mahalaxmi College for Women, Thoothukudi, TN in association with the Institute for Engineering Research and Publication (IFERP). Thoothukudi, very often known as "Pearl City", has been a gateway of international trade to south India since the 15th century. According to the Confederation of Indian Industry, Thoothukudi has the second highest Human Development Index in Tamil Nadu next to Chennai. Hence it is a perfect destination to organize ICRAITSE-17 at A.P.C. Mahalaxmi College for Women, Thoothukudi. Our continuous and dedicated effort to bring scientific and academic transformation in India makes us organize world-class scientific events and conferences at educational institutes of our country. As Scalar waves (superpower) that travel faster than speed of Light can be generated by resonating our DNA with Pineal Gland, we believe integrated effort to organize international conference like ICRAITSE-17 at a very short span will be a grand success. We are highly grateful to the patron, convener, organizing committee and all the technical staff of the host college for their swift, smooth and continuous response for execution of preconference propagation. -

Converting Women: Gender and Protestant Christianity in Colonial South India

Converting Women: Gender and Protestant Christianity in Colonial South India. Eliza F. Kent. Oxford University Press, 2004. Oxford New York Auckland Bangkok Buenos Aires Cape Town Chennai Dar es Salaam Delhi Hong Kong Istanbul Karachi Kolkata Kuala Lumpur Madrid Melbourne Mexico City Mumbai Nairobi São Paulo Shanghai Taipei Tokyo Toronto. Copyright © 2004 by Oxford University Press, Inc. Published by Oxford University Press, Inc., 198 Madison Avenue, New York, New York 10016. www.oup.com Oxford is a registered trademark of Oxford University Press. All rights reserved. No part of this publication may be reproduced, stored in a retrieval system, or transmitted, in any form or by any means, electronic, mechanical, photocopying, recording, or otherwise, without the prior permission of Oxford University Press. Library of Congress Cataloging-in-Publication Data: Kent, Eliza F., 1966– Converting women: gender and Protestant Christianity in colonial South India / Eliza F. Kent. p. cm. Includes bibliographical references and index. ISBN 0-19-516507-1 1. Christian converts from Hinduism--India, South--History. 2. Women, Tamil--Religious life--India, South--History. 3. Protestant converts--India, South--History. 4. Protestant women--India, South--History. I. Title.
BV3280.T3K46 2004 305.48'6204'09548--dc21 2003049854. Printed in the United States of America on acid-free paper. Dedicated to the memory of Norman Cutler (1949–2002) and Alice Cooke Kent (1910–1992). Acknowledgments: I would like first of all to offer heartfelt thanks to my friends, colleagues, research assistants, and gracious hosts in Tamil Nadu who facilitated the research for this project at every step of the way, illuminating for me the meanings of south Indian Christianity and Hinduism through their thoughts as well as through the example of their lives.