An AI for Shogi Raphael R

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Games Ancient and Oriental and How to Play Them, Being the Games Of

Games Ancient and Oriental and How to Play Them. Being the Games of the Ancient Egyptians, the Hiera Gramme of the Greeks, the Ludus Latrunculorum of the Romans and the Oriental Games of Chess, Draughts, Backgammon and Magic Squares. Edward Falkener. London: Longmans, Green and Co., and New York: 15, East 16th Street. 1892. All rights reserved.

Contents.
I. Introduction.
II. The Games of the Ancient Egyptians. Dr. Birch's Researches on the games of Ancient Egypt.
III. Queen Hatasu's Draught-board and men, now in the British Museum.
IV. The game of Tau, or the game of Robbers; afterwards played and called by the same name, Ludus Latrunculorum, by the Romans.
V. The game of Senat; still played by the modern Egyptians, and called by them Seega.
VI. The game of Han; the game of the Bowl.
VII. The game of the Sacred Way; the Hiera Gramme of the Greeks.
VIII. The game of Atep; still played by Italians, and by them called Mora.
Chess.
IX. Chess Notation. A new system of.
X. Chaturanga. Indian Chess. Alberuni's description of.
XI. Chinese Chess.
XII. Japanese Chess.
XIII. Burmese Chess.
XIV. Siamese Chess.
XV. Turkish Chess.
XVI. Tamerlane's Chess.
XVII. Game of the Maharajah and the Sepoys.
XVIII. Double Chess.
XIX. Chess Problems.
Draughts.
XX. Draughts.
XXI. Polish Draughts.
XXII. Turkish Draughts.
XXIII. Wei-K'i and Go. The Chinese and Japanese game of Enclosing.

Prusaprinters

Chinese Chess - Travel Size. Makerwiz. Updated 6. 2. 2021 | published 6. 2. 2021. Summary: This is a full set of Chinese Chess suitable for travelling. We shrunk down the original design to 60% and added a new lid with "Chinese Chess" in traditional Chinese characters. We also added a new chess board graphics file suitable for printing on paper or laser etching/cutting onto wood. Enjoy! Here is a brief intro about Chinese Chess from Wikipedia: "Xiangqi (Chinese: 象棋; pinyin: xiàngqí), also called Chinese chess, is a strategy board game for two players. It is one of the most popular board games in China, and is in the same family as Western (or international) chess, chaturanga, shogi, Indian chess and janggi. Besides China and areas with significant ethnic Chinese communities, xiangqi (cờ tướng) is also a popular pastime in Vietnam. The game represents a battle between two armies, with the object of capturing the enemy's general (king). Distinctive features of xiangqi include the cannon (pao), which must jump to capture; a rule prohibiting the generals from facing each other directly; areas on the board called the river and palace, which restrict the movement of some pieces (but enhance that of others); and placement of the pieces on the intersections of the board lines, rather than within the squares."

Proposal to Encode Heterodox Chess Symbols in the UCS Source: Garth Wallace Status: Individual Contribution Date: 2016-10-25

Introduction. The UCS contains symbols for the game of chess in the Miscellaneous Symbols block. These are used in figurine notation, a common variation on algebraic notation in which pieces are represented in running text using the same symbols as are found in diagrams. While the symbols already encoded in Unicode are sufficient for use in the orthodox game, they are insufficient for many chess problems and variant games, which make use of extended sets.

1. Fairy chess problems. The presentation of chess positions as puzzles to be solved predates the existence of the modern game, dating back to the mansūbāt composed for shatranj, the Muslim predecessor of chess. In modern chess problems, a position is provided along with a stipulation such as “white to move and mate in two”, and the solver is tasked with finding a move (called a “key”) that satisfies the stipulation regardless of a hypothetical opposing player’s moves in response. These solutions are given in the same notation as lines of play in over-the-board games: typically algebraic notation, using abbreviations for the names of pieces, or figurine algebraic notation. Problem composers have not limited themselves to the materials of the conventional game, but have experimented with different board sizes and geometries, altered rules, goals other than checkmate, and different pieces. Problems that diverge from the standard game comprise a genre called “fairy chess”. Thomas Rayner Dawson, known as the “father of fairy chess”, popularized the genre in the early 20th century.

Origins of Chess Protochess, 400 B.C

Origins of Chess: Protochess, 400 B.C. to 400 A.D., by G. Ferlito and A. Sanvito. From: The Pergamon Chess Monthly, September 1990, Volume 55, No. 6.

The game of chess, as we know it, emerged in the North West of ancient India around 600 A.D. (1) According to some scholars, the game of chess reached Persia at the time of King Khusrau Nushirwan (531/578 A.D.), though others suggest a later date, around the time of King Khusrau II Parwiz (590/628 A.D.). (2) According to the old texts written in Pahlavi, the game was originally known as "chatrang". With the invasion of Persia by the Arabs (634/651 A.D.), the game’s name became "shatranj", because the sounds "ch" and "g" do not exist in the Arabic language. The game spread along the Mediterranean coast of Africa with the Islamic wave of military expansion and then crossed over to Europe. However, alternative routes to some parts of Europe may have been used by other populations who were playing the game. At the moment, this "Indian, Persian, Islamic" theory on the origin of the game is accepted by the majority of scholars, though it is fair to mention here the work of J. Needham and others, who suggested that the historical chess of seventh century India was descended from a divinatory game (or ritual) in China. (3) On chess theories, the most exhaustive account, founded on deep learning and many years’ study, is A History of Chess by the English scholar H.J.R. ...

FESA – Shogi Official Playing Rules

FESA (Federation of European Shogi Associations): Shogi official playing rules. The FESA Laws of Shogi cover over-the-board play. This version of the Laws of Shogi was adopted by a vote of FESA representatives on August 4, 2017. In these Laws the words 'he', 'him' and 'his' should be taken to mean 'she', 'her' and 'hers' as appropriate.

PREFACE. The FESA Laws of Shogi cannot cover all possible situations that may arise during a game, nor can they regulate all administrative questions. Where cases are not precisely covered by an Article of the Laws, the arbiter should base his decision on analogous situations which are discussed in the Laws, taking into account all considerations of fairness, logic and any special circumstances that may apply. The Laws assume that arbiters have the necessary competence, sound judgement and objectivity. FESA appeals to all shogi players and member federations to accept this view. A member federation is free to introduce more detailed rules for its own events provided these rules: a) do not conflict in any way with the official FESA Laws of Shogi, b) are limited to the territory of the federation in question, and c) are not used for any FESA championship or FESA rating tournament.

BASIC RULES OF PLAY. Article 1: The nature and objectives of the game of shogi. 1.1 The game of shogi is played between two opponents who take turns to make one move each on a rectangular board called a 'shogiboard'. The player who starts the game is said to be 'sente' or black.

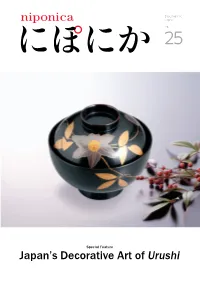

Urushi Selection of Shikki from Various Regions of Japan Clockwise, from Top Left: Set of Vessels for No

Discovering Japan: niponica no. 25. Special Feature: Japan’s Decorative Art of Urushi.

Selection of shikki from various regions of Japan. Clockwise, from top left: set of vessels for pouring and sipping toso (medicinal sake) during New Year celebrations, with Aizu-nuri; stacked boxes for special occasion food items, with Wajima-nuri; tray with Yamanaka-nuri; set of five lidded bowls with Echizen-nuri. Photo: Katsumi Aoshima.

niponica is published in Japanese and six other languages (Arabic, Chinese, English, French, Russian and Spanish) to introduce to the world the people and culture of Japan today. The title niponica is derived from “Nippon,” the Japanese word for Japan.

Contents. Special Feature: Japan’s Decorative Art of Urushi. Beauty Created From Strength and Delicacy. Various Shikki From Different Regions. Japanese Handicrafts: Craftsmen Who Create Shikki. The Japanese Spirit Has Been Inherited: Urushi Restorers. Tradition and Innovation: New Forms of the Decorative Art of Urushi. Incorporating Urushi-nuri Into Everyday Life. Tasty Japan: Time to Eat! Zoni. Strolling Japan: Hirosaki. Souvenirs of Japan: Koshu Inden.

Cover: Bowl with Echizen-nuri. Photo: Katsumi Aoshima. no. 25, H-310318. Published by: Ministry of Foreign Affairs of Japan, 2-2-1 Kasumigaseki, Chiyoda-ku, Tokyo 100-8919, Japan. https://www.mofa.go.jp/

Shikki: representative of Japan’s decorative arts. These decorative items of art, full of Japanese charm, are known as “japan” throughout the world. Full of nature's bounty, they surpass the boundaries of time to encompass everyday life. Writing box: a box for writing implements.

Jess Rudolph Shogi

Jess Rudolph. Shogi, the Chess of Japan: Its History and Variants.

When chess was first invented in India by the end of the sixth century of the current era, probably no one knew just how popular or widespread the game would become. Only a short time into the second millennium, if not earlier, chess was being played as far away as the most distant lands of the known world: the Atlantic coast of Europe and Japan. Although virtually no contact existed between these lands for centuries to come, people from both cultures were playing a very similar game; in Europe it was to become the chess most westerners know today, and in Japan it was shogi, the Generals' Game. Though shogi has many things in common with many other chess variants, those elements are not always clear because of the many differences it also has. Sadly, how the changes came about is not well known, since much of the early history of shogi has been lost. In some ways the game is more similar to the Indian chaturanga than to its neighboring cousin in China, xiangqi. In other ways, it is closer to xiangqi than to any other game. In still other ways it has similarities to the Thai chess, makruk. Most likely it has elements from all these lands. It is generally believed that chess came to Japan from China through the trade routes in Korea in more than one wave, the earliest being by the end of the tenth century, possibly as early as the eighth.

Art. I.—On the Persian Game of Chess

Journal of the Royal Asiatic Society. Art. I. On the Persian Game of Chess. By N. Bland, Esq., M.R.A.S. [Read June 19th, 1847.]

Whatever difference of opinion may exist as to the introduction of Chess into Europe, its Asiatic origin is undoubted, although the question of its birth-place is still open to discussion, and will be adverted to in this essay. Its more immediate design, however, is to illustrate the principles and practice of the game itself from such Oriental sources as have hitherto escaped observation, and, especially, to introduce to particular notice a variety of Chess which may, on fair grounds, be considered more ancient than that which is now generally played, and lead to a theory which, if it should be established, would materially affect our present opinions on its history. In the life of Timur by Ibn Arabshah [1], that conqueror, whose love of chess forms one of numerous examples among the great men of all nations, is stated to have played, in preference, at a more complicated game, on a larger board, and with several additional pieces. The learned Dr. Hyde, in his valuable Dissertation on Eastern Games [2], has limited his researches, or, rather, been restricted in them by the nature of his materials, to the modern Chess, and has no further illustrated the peculiar game of Timur than by a philological

[1] Edited by Manger, "Ahmedis Arabsiadae Vitae et Rerum Gestarum Timuri, qui vulgo Tamerlanes dicitur, Historia. Leov. 1772, 4to;" and also by Golius, 1736.

[2] Syntagma Dissertationum, &c. Oxon, MDCCLXVII., containing "De Ludis Orientalibus, Libri duo." The first part is "Mandragorias, seu Historia Shahi.

World-Mind-Games-Brochure.Pdf

Index: What are the World Mind Games? · The Sports and Competition · Venues · Participants · Opening Ceremony · TV Strategy · Media Promotion · Cultural Programme · Benefits

What are the World Mind Games? Strategize, Deceive, Challenge. The power of the human brain in action. Launched in 2011 in Beijing, China. A combination of the world’s most popular mind sports. Provide worldwide exposure. Feature the world’s best athletes in high-level competition. Promote the values of strategy, intelligence and concentration. In cooperation with international sports federations.

5 mind sports: bridge, chess, draughts, go, xiangqi. 250+ players and officials. 150+ players from 37 countries ranked in the top 20 of their sport worldwide. 550+ participants. 15+ disciplines. 25+ categories.

Read Book Japanese Chess: the Game of Shogi Ebook, Epub

Japanese Chess: The Game of Shogi. Trevor Leggett | 128 pages | 01 May 2009 | Tuttle Shokai Inc | 9784805310366 | English | Kanagawa, Japan.

Memorial Verkouille: A collection of 21 amateur shogi matches played in Ghent, Belgium. In particular, the Two Pawn violation is the most common illegal move played by professional players. A is the top class. This collection contains seven professional matches. Unlike in other shogi variants, in taikyoku the tengu cannot move orthogonally, and therefore can only reach half of the squares on the board. The promoted silver. Checkmate by Black. This is a collection of amateur games that were played in the mid 's. The Oza tournament began in , but did not bestow a title until. White tiger. Shogi players are expected to follow etiquette in addition to rules explicitly described. The promoted lance. Illegal moves are also uncommon in professional games, although this may not be true for amateur players, especially beginners. It has not been shown that taikyoku shogi was ever widely played. Thus, the end of the endgame was strategically about trying to keep White's points above the point threshold. You might see something about Gene Davis Software on them, but they probably work.

Shogi Perfecto EN

Rules of Shogi

INITIAL SETUP

Place the pieces as depicted on the 9×9 board. Note that all of the pieces are the same colour. Your pieces point toward your opponent. The three rows closest to you are your territory (and your opponent’s promotion zone). The three rows closest to your opponent are your opponent’s territory (and your promotion zone).

• At the end of a turn in which your unpromoted piece moves into, out of, or within your promotion zone, you may promote it by flipping it over.

• If your piece in the promotion zone would no longer be able to move unless you promote it now, you must promote it. Specifically, this means that a Pawn or a Lance ending its move in the row closest to your opponent must be promoted, as must a Knight in the two rows closest to your opponent.

DROP

A mochi-goma (a captured piece held in hand) can be placed in any vacant space on the board as your own piece, following the rules below:

• It must be placed at its original value (unpromoted).

• You cannot drop it in a position where it cannot move. Specifically, this means that a Pawn or a Lance cannot be dropped in the row closest to your opponent, nor can a Knight be dropped in the two rows closest to your opponent (see Piece Rules).

• You cannot drop a Pawn in front of your opponent’s King in such a way as to give checkmate.

• You cannot drop a Pawn into a column that already has another of your unpromoted Pawns.
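The promotion and drop rules above can be sketched as a few small predicates. This is only an illustrative sketch, not code from any of the listed documents: the row numbering (row 0 is the row closest to the opponent), the board representation, the owner label "me" and all function names are assumptions made for the example, and the set of promotable pieces is taken from standard shogi rather than from the excerpt. The pawn-drop checkmate rule is left out because checking it needs a full move generator.

```python
BOARD_SIZE = 9   # 9x9 board
ZONE_ROWS = 3    # promotion zone: the three rows closest to the opponent

# Assumption (standard shogi, not stated in the excerpt): these pieces promote.
PROMOTABLE = {"pawn", "lance", "knight", "silver", "bishop", "rook"}

# Number of rows, counted from the opponent's edge, in which an unpromoted
# piece of this kind would have no legal move left.
STUCK_ROWS = {"pawn": 1, "lance": 1, "knight": 2}


def in_promotion_zone(row):
    """Row 0 is closest to the opponent (hypothetical convention)."""
    return 0 <= row < ZONE_ROWS


def may_promote(piece, from_row, to_row):
    """A move into, out of, or within the zone allows promotion."""
    return piece in PROMOTABLE and (
        in_promotion_zone(from_row) or in_promotion_zone(to_row)
    )


def must_promote(piece, to_row):
    """Promotion is forced when the piece could never move again."""
    return to_row < STUCK_ROWS.get(piece, 0)


def can_drop(piece, row, col, board):
    """board maps (row, col) -> (owner, piece_name, promoted).

    The pawn-drop checkmate restriction is NOT checked here.
    """
    if (row, col) in board:                  # square must be vacant
        return False
    if row < STUCK_ROWS.get(piece, 0):       # dropped piece must be able to move
        return False
    if piece == "pawn":                      # no second unpromoted pawn in a column
        for r in range(BOARD_SIZE):
            if board.get((r, col)) == ("me", "pawn", False):
                return False
    return True
```

For example, `may_promote("silver", 3, 2)` holds because the move ends inside the zone, while `can_drop("knight", 1, 4, {})` does not, because a Knight dropped in the two farthest rows could never move.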

Chaturanga – the Chess Ancestor Reconstructed Historical Game

CHATURANGA: THE CHESS ANCESTOR. Reconstructed historical game.

START. This is a game for 4 players arranged in 2 teams: gray and white, and blue and violet. Players from the same team set up their pieces on opposite corners of the board. Each player has 1 king, 1 elephant, 1 horse, 1 tower and 4 knights. The pieces of a player who loses his king are removed from the board. The winning team is the one that captures both kings of the enemy team.

MOVEMENTS. Players move in clockwise order. The KING moves as in modern chess. The HORSE moves as in modern chess. The TOWER moves in a straight line, at most 3 squares. The ELEPHANT (bishop) moves in a straight diagonal line, at most 3 squares. The KNIGHT (pawn) moves 1 square, but captures only diagonally (as in modern chess). You cannot place a piece on a square occupied by one of your own pieces. You cannot jump over squares occupied by any piece (the exception is the horse).

CAPTURING. You capture an enemy piece by moving onto the square it occupies. In this game there is no mate rule.

PROMOTION. A knight (pawn) that reaches the last row of the board can be promoted to any of your own pieces that were captured by the enemy.

VARIANTS. The game is at the stage of first tests. We allow for checking various rules and variants. The first rule of reconstruction is: good rules are the ones that make your game the most interesting.

Nausika Foundation. Chaturanga: reconstructed Indian board game.
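The limited-range movement of the tower and the elephant can be sketched as one sliding-move routine. This is a rough illustration under stated assumptions, not part of the reconstructed rules: the 8×8 board size, the board representation and the name `slide_targets` are hypothetical, and a teammate's piece is treated as a blocker that cannot be captured, which the rules text does not spell out.

```python
STRAIGHT = [(1, 0), (-1, 0), (0, 1), (0, -1)]    # tower directions
DIAGONAL = [(1, 1), (1, -1), (-1, 1), (-1, -1)]  # elephant directions
BOARD_SIZE = 8   # assumption: the rules text does not state the board size
MAX_SLIDE = 3    # tower and elephant move at most 3 squares


def slide_targets(board, start, enemies, directions, max_steps=MAX_SLIDE):
    """Return the squares a sliding piece on `start` can move to.

    board maps (row, col) -> owner colour; `enemies` is the set of colours
    whose pieces may be captured. Any other piece only blocks (assumption).
    """
    targets = []
    row0, col0 = start
    for d_row, d_col in directions:
        for step in range(1, max_steps + 1):
            row, col = row0 + d_row * step, col0 + d_col * step
            if not (0 <= row < BOARD_SIZE and 0 <= col < BOARD_SIZE):
                break                         # ran off the board
            occupant = board.get((row, col))
            if occupant is None:
                targets.append((row, col))    # empty square: keep sliding
                continue
            if occupant in enemies:
                targets.append((row, col))    # capture by moving onto the square
            break                             # no jumping over any piece
    return targets
```

A gray tower in the corner would be queried as `slide_targets(board, (0, 0), {"blue", "violet"}, STRAIGHT)`, and a gray elephant with `DIAGONAL` in place of `STRAIGHT`.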