Routledge Encyclopedia of Translation Technology

Recommended publications

The Study on Indirect Translation in Chinese Translation History from A

2017 3rd International Conference on Social Science and Management (ICSSM 2017) ISBN: 978-1-60595-445-5

The Study on Indirect Translation in Chinese Translation History From a Cultural Perspective

Yue SUN, Department of Arts and Science, Chengdu College of University of Electronic Science and Technology of China, Chengdu, Sichuan, China [email protected]

Keywords: Indirect Translation, Culture, Direct Translation.

Abstract. Compared with direct translation, indirect translation has its own reasons for arising and its own value. Although indirect translation is less common than it once was, it has played an important role in the history of Chinese translation, and its unique characteristics mean that it will not disappear entirely. This paper studies indirect translation from a cultural perspective.

Introduction of Indirect Translation

Indirect translation is a term used to denote the procedure whereby a text is not translated directly from an original ST, but via an intermediate translation in another language [1]. Indirect translation can also be called intermediate translation, mediated translation, retranslation or second-hand translation interchangeably. According to Toury, such a procedure is of course NORM-governed, and different literary systems will tolerate it to varying extents [2,3]. Indirect translation refers to translation from another language version rather than the original book. Some people also call indirect translation medium-language translation. For example, Aesop’s Fables was first translated into Chinese from its English version in 1840, and that translation had a profound effect and spread widely in China; in 1955 Zhou Zuoren translated Aesop’s Fables from the Greek.

Translators' Tool

The Translator’s Tool Box A Computer Primer for Translators by Jost Zetzsche Version 9, December 2010 Copyright © 2010 International Writers’ Group, LLC. All rights reserved. This document, or any part thereof, may not be reproduced or transmitted electronically or by any other means without the prior written permission of International Writers’ Group, LLC. ABBYY FineReader and PDF Transformer are copyrighted by ABBYY Software House. Acrobat, Acrobat Reader, Dreamweaver, FrameMaker, HomeSite, InDesign, Illustrator, PageMaker, Photoshop, and RoboHelp are registered trademarks of Adobe Systems Inc. Acrocheck is copyrighted by acrolinx GmbH. Acronis True Image is a trademark of Acronis, Inc. Across is a trademark of Nero AG. AllChars is copyrighted by Jeroen Laarhoven. ApSIC Xbench and Comparator are copyrighted by ApSIC S.L. Araxis Merge is copyrighted by Araxis Ltd. ASAP Utilities is copyrighted by eGate Internet Solutions. Authoring Memory Tool is copyrighted by Sajan. Belarc Advisor is a trademark of Belarc, Inc. Catalyst and Publisher are trademarks of Alchemy Software Development Ltd. ClipMate is a trademark of Thornsoft Development. ColourProof, ColourTagger, and QA Solution are copyrighted by Yamagata Europe. Complete Word Count is copyrighted by Shauna Kelly. CopyFlow is a trademark of North Atlantic Publishing Systems, Inc. CrossCheck is copyrighted by Global Databases, Ltd. Déjà Vu is a trademark of ATRIL Language Engineering, S.L. Docucom PDF Driver is copyrighted by Zeon Corporation. dtSearch is a trademark of dtSearch Corp. EasyCleaner is a trademark of ToniArts. ExamDiff Pro is a trademark of Prestosoft. EmEditor is copyrighted by Emura Software inc. Error Spy is copyrighted by D.O.G. GmbH. FileHippo is copyrighted by FileHippo.com. -

Introducing Translation Studies

Introducing Translation Studies remains the definitive guide to the theories and concepts that make up the field of translation studies. Providing an accessible and up-to-date overview, it has long been the essential textbook on courses worldwide. This fourth edition has been fully revised and continues to provide a balanced and detailed guide to the theoretical landscape. Each theory is applied to a wide range of languages, including Bengali, Chinese, English, French, German, Italian, Punjabi, Portuguese and Spanish. A broad spectrum of texts is analysed, including the Bible, Buddhist sutras, Beowulf, the fiction of García Márquez and Proust, European Union and UNESCO documents, a range of contemporary films, a travel brochure, a children’s cookery book and the translations of Harry Potter. Each chapter comprises an introduction outlining the translation theory or theories, illustrative texts with translations, case studies, a chapter summary and discussion points and exercises.

New features in this fourth edition include:

- new material to keep up with developments in research and practice, including the sociology of translation, multilingual cities, translation in the digital age and specialized, audiovisual and machine translation
- revised discussion points and updated figures and tables
- new, in-chapter activities with links to online materials and articles to encourage independent research
- an extensive updated companion website with video introductions and journal articles to accompany each chapter, online exercises, an interactive timeline, weblinks, and PowerPoint slides for teacher support

This is a practical, user-friendly textbook ideal for students and researchers on courses in Translation and Translation Studies.

Technical Reference Manual for the Standardization of Geographical Names United Nations Group of Experts on Geographical Names

ST/ESA/STAT/SER.M/87 Department of Economic and Social Affairs Statistics Division Technical reference manual for the standardization of geographical names United Nations Group of Experts on Geographical Names United Nations New York, 2007 The Department of Economic and Social Affairs of the United Nations Secretariat is a vital interface between global policies in the economic, social and environmental spheres and national action. The Department works in three main interlinked areas: (i) it compiles, generates and analyses a wide range of economic, social and environmental data and information on which Member States of the United Nations draw to review common problems and to take stock of policy options; (ii) it facilitates the negotiations of Member States in many intergovernmental bodies on joint courses of action to address ongoing or emerging global challenges; and (iii) it advises interested Governments on the ways and means of translating policy frameworks developed in United Nations conferences and summits into programmes at the country level and, through technical assistance, helps build national capacities. NOTE The designations employed and the presentation of material in the present publication do not imply the expression of any opinion whatsoever on the part of the Secretariat of the United Nations concerning the legal status of any country, territory, city or area or of its authorities, or concerning the delimitation of its frontiers or boundaries. The term “country” as used in the text of this publication also refers, as appropriate, to territories or areas. Symbols of United Nations documents are composed of capital letters combined with figures. ST/ESA/STAT/SER.M/87 UNITED NATIONS PUBLICATION Sales No. -

Using Historical Editions of Encyclopaedia Britannica to Track the Evolution of Reputations

Catching the Red Priest: Using Historical Editions of Encyclopaedia Britannica to Track the Evolution of Reputations

Yen-Fu Luo†, Anna Rumshisky†, Mikhail Gronas∗
†Dept. of Computer Science, University of Massachusetts Lowell, Lowell, MA, USA
∗Dept. of Russian, Dartmouth College, Hanover, NH, USA
{yluo,arum}@cs.uml.edu, [email protected]

Abstract

In this paper, we investigate the feasibility of using the chronology of changes in historical editions of Encyclopaedia Britannica (EB) to track the changes in the landscape of cultural knowledge, and specifically, the rise and fall in reputations of historical figures. We describe the data-processing pipeline we developed in order to identify the matching articles about historical figures in Wikipedia, the current electronic edition of Encyclopaedia Britannica (edition 15), and several digitized historical editions, namely, editions 3, 9, 11. We evaluate our results on the tasks of article segmentation and cross-edition match-

[...] mention statistics from books written at different historical periods. Google Ngram Viewer is a tool that plots occurrence statistics using Google Books, the largest online repository of digitized books. But while Google Books in its entirety certainly has quantity, it lacks structure. However, the history of knowledge (or culture) is, to a large extent, the history of structures: hierarchies, taxonomies, domains, subdomains.

In the present project, our goal was to focus on sources that endeavor to capture such structures. One such source is particularly fitting for the task; and it has been in existence at least for the last three centuries, in the form of changing editions of authoritative encyclopedias, and specifically, Encyclopaedia Britannica.

MultiLingual 2010 Resource Directory & Editorial Index 2009

Language | Technology | Business

RESOURCE ANNUAL DIRECTORY EDITORIAL ANNUAL INDEX 2009

Real costs of quality software translations
People-centric company management

About the MultiLingual 2010 Resource Directory and Editorial Index 2009

Up Front

A new year, and new decade, offers an optimistically blank slate, particularly in the times of tightened belts and tightened budgets. The localization industry has never been affected quite the same way as many other sectors, but now that other sectors begin to tentatively look up the economic curve towards prosperity, we may relax just a bit more also. This eighth annual resource directory and index allows industry professionals and those wanting to expand business access to language-industry companies around the globe.

Following tradition, the 2010 Resource Directory (blue tabs) begins this issue, listing companies providing services in a variety of specialties and formats: from language-related technology to recruitment; from locale-specific localization to educational resources; from interpreting to marketing. Next come the editorial pages (red tabs) on timeless localization practice. Henk Boxma enumerates the real costs of quality software translations, and Kevin Fountoukidis offers tips on people-centric company management. The Editorial Index 2009 (gold tabs) provides a helpful reference for MultiLingual issues 101-108, by author, title, topic and so on, all arranged alphabetically. Then there’s a list of acronyms and abbreviations used throughout the magazine, a glossary of terms, and our list of advertisers for this issue.

Transformation of Participation in a Collaborative Online Encyclopedia Susan L

Becoming Wikipedian: Transformation of Participation in a Collaborative Online Encyclopedia

Susan L. Bryant, Andrea Forte, Amy Bruckman
College of Computing/GVU Center, Georgia Institute of Technology
85 5th Street, Atlanta, GA, 30332
[email protected]; {aforte, asb}@cc.gatech.edu

ABSTRACT

Traditional activities change in surprising ways when computer-mediated communication becomes a component of the activity system. In this descriptive study, we leverage two perspectives on social activity to understand the experiences of individuals who became active collaborators in Wikipedia, a prolific, cooperatively-authored online encyclopedia. Legitimate peripheral participation provides a lens for understanding participation in a community as an adaptable process that evolves over time. We use ideas from activity theory as a framework to describe our results. Finally, we describe how activity on the Wikipedia stands in striking contrast to traditional publishing and suggests a new paradigm for collaborative systems.

Categories and Subject Descriptors

J.7 [Computer Applications]: Computers in Other Systems – publishing.

New forms of computer-supported cooperative work have sprung from the World Wide Web faster than researchers can hope to document, let alone understand. In fact, the organic, emergent nature of Web-based community projects suggests that people are leveraging Web technologies in ways that largely satisfy the social demands of working with geographically distant collaborators. In order to better understand this phenomenon, we examine how several active collaborators became members of the extraordinarily productive and astonishingly successful community of Wikipedia.

In this introductory section, we describe the Wikipedia and related research, as well as two perspectives on social activity: activity theory (AT) and legitimate peripheral participation (LPP). Next, we describe our study and how ideas borrowed from activity theory helped us investigate the ways that participation in the Wikipedia community is transformed along multiple dimensions

Are Encyclopedias Dead? Evaluating the Usefulness of a Traditional Reference Resource Rachel S

St. Cloud State University
theRepository at St. Cloud State
Library Faculty Publications
Library Services
2012

Are Encyclopedias Dead? Evaluating the Usefulness of a Traditional Reference Resource

Rachel S. Wexelbaum, St. Cloud State University, [email protected]

Follow this and additional works at: https://repository.stcloudstate.edu/lrs_facpubs
Part of the Library and Information Science Commons

Recommended Citation
Wexelbaum, Rachel S., "Are Encyclopedias Dead? Evaluating the Usefulness of a Traditional Reference Resource" (2012). Library Faculty Publications. 26. https://repository.stcloudstate.edu/lrs_facpubs/26

This Article is brought to you for free and open access by the Library Services at theRepository at St. Cloud State. It has been accepted for inclusion in Library Faculty Publications by an authorized administrator of theRepository at St. Cloud State. For more information, please contact [email protected].

Author
Rachel Wexelbaum is Collection Management Librarian and Assistant Professor at Saint Cloud State University, Saint Cloud, Minnesota.

Contact Details
Rachel Wexelbaum
Collection Management Librarian
MC135D Collections
Saint Cloud State University
720 4th Avenue South
Saint Cloud, MN 56301
Email: [email protected]

Abstract

Purpose – To examine past, current, and future usage of encyclopedias.

Design/methodology/approach – Review the history of encyclopedias, their composition, and usage by focusing on select publications covering different subject areas.

Findings – Due to their static nature, traditionally published encyclopedias are not always accurate, objective information resources. Intentions of editors and authors also come into question. A researcher may find more value in using encyclopedias as historical documents rather than resources for quick facts.

Elena Ulyanova – Biography

Piano

Jack Price, Founding Partner / Managing Director
Marc Parella, Partner / Director of Operations
Brenna Sluiter, Marketing Operations Manager
Karrah Cambry, Opera and Special Projects Manager

Mailing Address: 520 Geary Street Suite 605 San Francisco CA 94102
Telephone: Toll-Free 1-866-PRI-RUBI (774-7824); 310-254-7149 / Los Angeles; 415-504-3654 / San Francisco
Skype: pricerubent | marcparella
Email: [email protected], [email protected]
Website: http://www.pricerubin.com
Yahoo!Messenger: pricerubin

Contents: Biography; Reviews & Testimonials; Repertoire; Short Notice Concertos; CD/DVDs; Recordings; Curriculum Vitae

Complete artist information including video, audio and interviews are available at www.pricerubin.com

Elena Ulyanova – Biography

Praised as "a phenomenal, gifted performer" by Roy Gillinson of the Beethoven Society of America, Elena Ulyanova is a pianist whose style runs the gamut of power, strength, and technique to a delicate, floating elegant finesse. The Moscow Conservatory hailed her as one of its most gifted musicians, and her professor, Victor Merzhanov, noted that she possesses "Great virtuosity, brilliant artistic temperament, unique interpretive expression and a rich sound palette."

At the age of 5, Elena Ulyanova began to study piano with her mother, Larisa Ulyanova, in Saki, Ukraine. After winning several first prizes in Ukrainian and Russian competitions, she was awarded full scholarships for study in Moscow at Gnessin College of Music, Gnessin Academy of Music, and Moscow Tchaikovsky Conservatory. While at Gnessin Academy, she won the Momontov competition, which also resulted in tours of Russia, Bulgaria, and Romania. She was also awarded a tour of Austria, along with the best students representing Gnessin Academy, which included Alexander Kobrin.

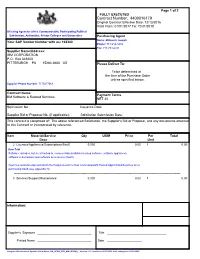

Contract Number: 4400016179

FULLY EXECUTED
Contract Number: 4400016179
Original Contract Effective Date: 12/13/2016
Valid From: 01/01/2017 To: 12/31/2018

All using Agencies of the Commonwealth, Participating Political Subdivision, Authorities, Private Colleges and Universities

Purchasing Agent Name: Millovich Joseph
Your SAP Vendor Number with us: 102380
Phone: 717-214-3434
Fax: 717-783-6241
Supplier Name/Address: IBM CORPORATION, P.O. Box 643600, PITTSBURGH PA 15264-3600 US
Supplier Phone Number: 7175477069
Please Deliver To: To be determined at the time of the Purchase Order unless specified below.

Contract Name: IBM Software & Related Services
Payment Terms: NET 30
Solicitation No.:
Issuance Date:
Supplier Bid or Proposal No. (if applicable):
Solicitation Submission Date:

This contract is comprised of: The above referenced Solicitation, the Supplier's Bid or Proposal, and any documents attached to this Contract or incorporated by reference.

Columns: Item, Material/Service Desc, Qty, UOM, Price, Per Unit, Total

2 Licenses/Appliances/Subscriptions/SaaS 0.000 0.00 1 0.00
Item Text: Software: includes, but is not limited to, commercially available licensed software, software appliances, software subscriptions and software as a service (SaaS). Agencies must develop and attach the Requirements for Non-Commonwealth Hosted Applications/Services when purchasing SaaS (see Appendix H).

3 Services/Support/Maintenance 0.000 0.00

International Standards Related to Librarianship

International standards related to librarianship

1. Holdings records

ISO 20775:2009 Information and documentation. Schema for holdings information

2. Bibliographic processing and data exchange, transliteration

ISO 10754:1996 Information and documentation. Extension of the Cyrillic alphabet coded character set for non-Slavic languages for bibliographic information interchange
ISO 11940:1998 Information and documentation. Transliteration of Thai
ISO 11940-2:2007 Information and documentation. Transliteration of Thai characters into Latin characters. Part 2: Simplified transcription of Thai language
ISO 15919:2001 Information and documentation. Transliteration of Devanagari and related Indic scripts into Latin characters
ISO 15924:2004 Information and documentation. Codes for the representation of names of scripts
ISO 21127:2014 Information and documentation. A reference ontology for the interchange of cultural heritage information
ISO 233:1984 Documentation. Transliteration of Arabic characters into Latin characters
ISO 233-2:1993 Information and documentation. Transliteration of Arabic characters into Latin characters. Part 2: Arabic language. Simplified transliteration
ISO 233-3:1999 Information and documentation. Transliteration of Arabic characters into Latin characters. Part 3: Persian language. Simplified transliteration
ISO 25577:2013 Information and documentation. MarcXchange
ISO 259:1984 Documentation. Transliteration of Hebrew characters into Latin characters
ISO 259-2:1994 Information and documentation. Transliteration of Hebrew characters into Latin characters. Part 2. Simplified transliteration
ISO 3602:1989 Documentation. Romanization of Japanese (kana script)
ISO 5963:1985 Documentation. Methods for examining documents, determining their subjects, and selecting indexing terms
ISO 639-2:1998 Codes for the representation of names of languages. Part 2. Alpha-3 code
ISO 6630:1986 Documentation. Bibliographic control characters
ISO 7098:1991 Information and documentation.

Software Localization Made Easy

Software localization made easy

Launching software products into multiple international markets simultaneously in local language can be a real challenge. Software localization is typically very intensive and time-consuming for all the teams involved. During localization, software products are often modified to meet the linguistic, cultural and technical requirements of each target market. But it can be very challenging to localize elements of a graphical user interface (GUI) such as dialog boxes, menus and display texts, without a visual display to work from.

SDL Passolo is designed to meet the specific demands of the software localization industry. By accessing a visual translation environment, the localization process is made much faster while the quality of the output is improved. SDL Passolo integrates with all translation tools in the SDL suite, boosting your efficiency every step of the way.

Effortless project management

With powerful project management capabilities, you’ll find it easy to stay on top of your multilingual software localization projects. Project preparation simplifies communication between the software manufacturer and the translators. The localization manager can work together with the developers and add files requiring localization to the project.

Language Product Brief | SDL Passolo 2015

When a project is created, SDL Passolo saves the source text in a multilingual project database along with its translations, modified layouts, comments about the files and any other data. Multiple users can work on the same project simultaneously in Multi-User mode, while external translators can download a free version of the software and edit translation packages created in the full version.

SDL Passolo gives you all the tools you need for efficient software localization including project management,