Evaluation of Finite State Morphological Analyzers Based on Paradigm Extraction from Wiktionary

Total Pages: 16

File Type: pdf, Size: 1020 Kb

Recommended publications

Traditional Bengali Keyboard

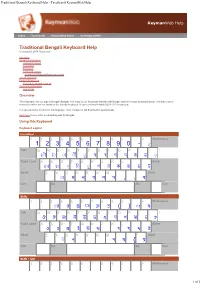

Traditional Bengali Keyboard Help - Tavultesoft KeymanWeb Help. Keyboard © 2008 Tavultesoft. Contents: Overview; Using this Keyboard; Keyboard Layout; Quickstart; Examples; Keyboard Details; Complete Keyboard Reference Chart; Troubleshooting; Further Resources; Related Keyboard Layouts; Technical Information; Authorship. Overview: This keyboard lets you type in Bengali (Bangla). It is easy to use for people familiar with Bengali and the Inscript keyboard layout. It includes some characters which are not found on the Inscript keyboard. It uses a normal English (QWERTY) keyboard. If a special font is needed for this language, most computers will download it automatically. Using this Keyboard: [Keyboard layout charts: Unshifted, Shift, Shift + Ctrl]

Managing Order Relations in MlBibTEX

Managing order relations in MlBibTEX. Jean-Michel Hufflen, LIFC (EA CNRS 4157), University of Franche-Comté, 16, route de Gray, 25030 BESANÇON CEDEX, France. hufflen (at) lifc dot univ-fcomte dot fr, http://lifc.univ-fcomte.fr/~hufflen. Abstract: Lexicographical order relations used within dictionaries are language-dependent. First, we describe the problems inherent in automatic generation of multilingual bibliographies. Second, we explain how these problems are handled within MlBibTEX. To add or update an order relation for a particular natural language, we have to program in Scheme, but we show that MlBibTEX’s environment eases this task as far as possible. Keywords: Lexicographical order relations, dictionaries, bibliographies, collation algorithm, Unicode, MlBibTEX, Scheme. Streszczenie (Polish abstract): Lexicographic order in dictionaries depends on the language. First, we discuss the problems that arise in the automatic generation of multilingual bibliographies. Then we explain how they are handled in MlBibTEX. Adding or updating the sorting rules for a particular natural language is done through a program written in Scheme. We show how much the MlBibTEX environment eases this task. Słowa kluczowe (keywords): Lexicographic sorting rules, dictionaries, bibliographies, lexicographic sorting algorithms, Unicode, MlBibTEX, Scheme. 0 Introduction: Looking for a word in a dictionary or for a name in a phone book is a common task. We get used to the lexicographic order over a long time. More precisely, we get used to our own lexicographic order, because it belongs to our cultural background. […] these items w.r.t. the alphabetical order of authors’ names. But the bst language of bibliography styles [14] only provides a SORT function [13, Table 13.7] suitable for the English language, the commands for accents and other diacritical signs being ignored by

Peace Corps Romania Survival Romanian Language Lessons Pre-Departure On-Line Training

US Peace Corps in Romania: Survival Romanian. Peace Corps Romania Survival Romanian Language Lessons, Pre-Departure On-Line Training. Survival Romanian, Peace Corps/Romania – December 2006. Table of Contents: Introduction; Lesson 1: The Romanian Alphabet; Lesson 2: Greetings; Lesson 3: Introducing self; Lesson 4: Days of the Week; Lesson 5: Small numbers; Lesson 6: Big numbers; Lesson 7: Shopping; Lesson 8: At the restaurant; Lesson 9: Orientation; Lesson 10: Useful phrases. Introduction: Romanian (limba română, /ˈlimba roˈmɨnə/) is one of the Romance languages, which belong to the Indo-European family of languages and descend from Latin, along with French, Italian, Spanish and Portuguese. It is the fifth of the Romance languages in terms of number of speakers. It is spoken as a first language by somewhere around 24 to 26 million people, and enjoys official status in Romania, Moldova and the Autonomous Province of Vojvodina (Serbia). The official form of the Moldovan language in the Republic of Moldova is identical to the official form of Romanian save for a minor rule in spelling. Romanian is also an official or administrative language in various communities and organisations (such as the Latin Union and the European Union – the latter as of 2007). It is a melodious language that has basically the same sounds as English with a few exceptions. These entered the language because of the Slavic influence and of many borrowings made from the neighboring languages. It uses the Latin alphabet, which makes it easy to spell and read.

Technical Reference Manual for the Standardization of Geographical Names United Nations Group of Experts on Geographical Names

ST/ESA/STAT/SER.M/87. Department of Economic and Social Affairs, Statistics Division. Technical reference manual for the standardization of geographical names. United Nations Group of Experts on Geographical Names. United Nations, New York, 2007. The Department of Economic and Social Affairs of the United Nations Secretariat is a vital interface between global policies in the economic, social and environmental spheres and national action. The Department works in three main interlinked areas: (i) it compiles, generates and analyses a wide range of economic, social and environmental data and information on which Member States of the United Nations draw to review common problems and to take stock of policy options; (ii) it facilitates the negotiations of Member States in many intergovernmental bodies on joint courses of action to address ongoing or emerging global challenges; and (iii) it advises interested Governments on the ways and means of translating policy frameworks developed in United Nations conferences and summits into programmes at the country level and, through technical assistance, helps build national capacities. NOTE: The designations employed and the presentation of material in the present publication do not imply the expression of any opinion whatsoever on the part of the Secretariat of the United Nations concerning the legal status of any country, territory, city or area or of its authorities, or concerning the delimitation of its frontiers or boundaries. The term “country” as used in the text of this publication also refers, as appropriate, to territories or areas. Symbols of United Nations documents are composed of capital letters combined with figures. UNITED NATIONS PUBLICATION Sales No.

Recognition Features for Old Slavic Letters: Macedonian Versus Bosnian Alphabet

International Journal of Scientific and Research Publications, Volume 5, Issue 12, December 2015, 145, ISSN 2250-3153. Recognition features for Old Slavic letters: Macedonian versus Bosnian alphabet. Cveta Martinovska Bande*, Mimoza Klekovska**, Igor Nedelkovski**, Dragan Kaevski***. * Faculty of Computer Science, University Goce Delcev, Stip, Macedonia; ** Faculty of Technical Sciences, University St. Kliment Ohridski, Bitola, Macedonia; *** Faculty of Electrical Engineering and Information Technology, University St. Cyril and Methodius, Skopje, Macedonia. Abstract: This paper compares recognition methods for two Old Slavic Cyrillic alphabets: Macedonian and Bosnian. Two novel methodologies for recognition of Old Macedonian letters are already implemented and experimentally tested by calculating their recognition accuracy and precision. The first method is based on a decision tree classifier realized by a set of rules and the second one is based on a fuzzy classifier. To enhance the performance of the decision tree classifier the extracted rules are corrected according […] recognition have been reported, such as fuzzy logic [9, 10], neural networks [11] and genetic algorithms [12]. Several steps have to be performed in the process of letter recognition, like pre-processing, segmentation, feature extraction and selection, classification, and post-processing [13]. Generally, pre-processing methods include image binarization, normalization, noise reduction, detection and correction of skew, estimation and removal of slant. There are two segmentation

Germanic Standardizations: Past to Present (Impact: Studies in Language and Society)

Germanic Standardizations: Past to Present. Impact: Studies in language and society. Impact publishes monographs, collective volumes, and text books on topics in sociolinguistics. The scope of the series is broad, with special emphasis on areas such as language planning and language policies; language conflict and language death; language standards and language change; dialectology; diglossia; discourse studies; language and social identity (gender, ethnicity, class, ideology); and history and methods of sociolinguistics. General Editor: Annick De Houwer (University of Antwerp). Associate Editor: Elizabeth Lanza (University of Oslo). Advisory Board: Ulrich Ammon (Gerhard Mercator University); William Labov (University of Pennsylvania); Jan Blommaert (Ghent University); Joseph Lo Bianco (The Australian National University); Paul Drew (University of York); Peter Nelde (Catholic University Brussels); Anna Escobar (University of Illinois at Urbana); Dennis Preston (Michigan State University); Guus Extra (Tilburg University); Jeanine Treffers-Daller (University of the West of England); Margarita Hidalgo (San Diego State University); Vic Webb (University of Pretoria); Richard A. Hudson (University College London). Volume 18: Germanic Standardizations: Past to Present. Edited by Ana Deumert (Monash University) and Wim Vandenbussche (Vrije Universiteit Brussel/FWO-Vlaanderen). John Benjamins Publishing Company, Amsterdam/Philadelphia. The paper used in this publication meets the minimum requirements of American National Standard for Information Sciences – Permanence of Paper for Printed Library Materials, ANSI Z39.48-1984. Library of Congress Cataloging-in-Publication Data: Germanic standardizations : past to present / edited by Ana Deumert, Wim Vandenbussche.

On the Standardization of the Aromanian System of Writing

ON THE STANDARDIZATION OF THE AROMANIAN SYSTEM OF WRITING. The Bituli-Macedonia Symposium of August 1997, by Tiberius Cunia. INTRODUCTION: The Aromanians started writing in their language in a more systematic way, a little over 100 years ago, and the first writings were associated with the national movement that contributed to the opening of Romanian schools in Macedonia. Because of the belief, at that time, that the Aromanian is a dialect of the Romanian language, it was natural to use the Romanian alphabet as the basis of the system of writing. The six sounds that are found in the Aromanian but not in the literary Romanian language – the three Greek sounds, dhelta, ghamma and theta and the other three, dz, nj and lj (Italian sounds gn and gl) – were written in many ways, according to the wishes of the various Aromanian writers themselves. No standard way of writing Aromanian, or any serious movement to standardize this writing existed at that time. And the Romanian Academy that establishes the official rules of writing the Romanian literary language, never concerned itself with the establishment of rules for writing its dialects, at least not to my knowledge. Consequently, what is presently known as the "traditional" system of writing Aromanian is actually a system that consists of (i) several "traditional" alphabets in which several characters have diacritical signs, not always the same, and (ii) different ways for writing the same words. The reader interested in the various alphabets of the Aromanian language – including the Moscopoli era, the classical period (associated with the Romanian schools in Macedonia) and the most recent contemporary one – is referred to a long article published in Romanian in nine issues of the Aromanian monthly "Deşteptarea – Revista Aromânilor" from Bucharest (from Year 4, Number 5, May 1993 to Year 5, Number 1, January 1994), where the author, G.

Comac Medical NLSP2 Thefo

Issue May/14 No.2. Copyright © 2014 Comac Medical. All rights reserved. Published by Comac-Medical. Dear Colleagues, The Newsletter Special Edition No.2 is dedicated to the 1150 years of the Moravian Mission of Saints Cyril and Methodius and 1150 years of the official declaration of Christianity as state religion in Bulgaria by Tsar Boris I and imposition of official policy of literacy due to the emergence of the fourth sacral language in Europe. We are proudly presenting: SS. CYRIL AND METHODIUS AND THE BULGARIAN ALPHABET. By rescuing the creation of Cyril and Methodius, Bulgaria has earned the admiration and respect of not only the Slav peoples but of all other peoples in the world and these attitudes will not cease till mankind keeps implying real meaning in notions like progress, culture and humanity. Bulgaria has not only saved the great creation of Cyril and Methodius from complete obliteration but within its territories it also developed, enriched and perfected this priceless heritage (...) Bulgaria became a living hearth of vigorous cultural activity while, back then, many other people were enslaved by ignorance and obscurity (…) The language of this first heyday of Slavonic literature and culture was not other but Old Bulgarian. This language survived all attempts by foreign invaders for eradication thanks to the firmness of the Bulgarian people, to its determination to preserve what is Bulgarian, especially the Bulgarian language which has often been endangered but has never been subjugated… - Prof. Roger Bernard, French Slavist. Those who think of Bulgaria as a kind of a new state (…), those who have heard of the Balkans only as the “powder keg of Europe”, those cannot remember that “Bulgaria was once a powerful kingdom and an active player in the big politics of medieval Europe.

Eight Fragments Serbian, Croatian, Bosnian

EIGHT FRAGMENTS FROM THE WORLD OF SERBIAN, CROATIAN, BOSNIAN AND MONTENEGRIN LANGUAGES. Selected South Slavonic Studies 1. Pavel Krejčí. Masaryk University, Brno 2018. All rights reserved. No part of this e-book may be reproduced or transmitted in any form or by any means without prior written permission of copyright administrator which can be contacted at Masaryk University Press, Žerotínovo náměstí 9, 601 77 Brno. Scientific reviewers: Ass. Prof. Boryan Yanev, Ph.D. (Plovdiv University “Paisii Hilendarski”); Roman Madecki, Ph.D. (Masaryk University, Brno). This book was written at Masaryk University as part of the project “Slavistika mezi generacemi: doktorská dílna” number MUNI/A/0956/2017 with the support of the Specific University Research Grant, as provided by the Ministry of Education, Youth and Sports of the Czech Republic in the year 2018. © 2018 Masarykova univerzita. ISBN 978-80-210-8992-1; ISBN 978-80-210-8991-4 (paperback). CONTENT: ABBREVIATIONS; INTRODUCTION; CHAPTER 1: SOUTH SLAVONIC LANGUAGES (GENERAL OVERVIEW); CHAPTER 2: SELECTED CZECH HANDBOOKS OF SERBO-CROATIAN

An Analysis of Contrasts Between English and Bengali Vowel Phonemes

Journal of Education and Social Sciences, Vol. 13, Issue 1, (June) 2019, ISSN 2289-9855. AN ANALYSIS OF CONTRASTS BETWEEN ENGLISH AND BENGALI VOWEL PHONEMES. Syed Mazharul Islam. ABSTRACT: Both qualitative and quantitative aspects of the English vowel system mark it as distinct from many other vowel systems including that of the Bengali language. With respect to the English pure vowels or monophthongs, tongue height, tongue position and lip rounding are the qualitative features which make English distinctive. The quantitative aspect of the English vowels, or vowel length, is another feature which makes some Bengali vowels different from their English equivalents, the fact being that vowel length in Bengali, though it theoretically exists, is not important in actual pronunciation of Bengali. English diphthongs, or the gliding vowels, on the other hand, have the unique feature that between the constituent vowels, the first vowel component is longer than the second. This paper examines these contrasts underpinned by the CAH (Contrastive Analysis Hypothesis), the proponents of which believe that through contrastive analysis of the L1 and the L2 being learnt, difficulties in the latter’s acquisition can be predicted and materials should be based on the anticipated difficulties. The hypothesis also lends itself to the assumption that degrees of differences correspond to the varying levels of difficulty. This paper adds that contrastive analysis also enhances L2 awareness among learners, helps them unlearn L1 habits and aids in the automation and creative use of all aspects of the L2 including phonology. The analysis of the sounds in question has made some interesting discoveries hitherto unknown and unobserved.

LNCS 8104, Pp

Bengali Printed Character Recognition – A New Approach. Soharab Hossain Shaikh (A.K.Choudhury School of Information Technology, University of Calcutta, India), Marek Tabedzki (Faculty of Computer Science, Bialystok University of Technology, Poland), Nabendu Chaki (Department of Computer Science & Engineering, University of Calcutta, India), and Khalid Saeed (Faculty of Physics and Applied Computer Science, AGH University of Science and Technology, Cracow, Poland). Abstract. This paper presents a new method for Bengali character recognition based on view-based approach. Both the top-bottom and the lateral view-based approaches have been considered. A layer-based methodology in modification of the basic view-based processing has been proposed. This facilitates handling of unequal logical partitions. The document image is acquired and segmented to extract out the text lines, words, and letters. The whole image of the individual characters is taken as the input to the system. The character image is put into a bounding box and resized whenever necessary. The view-based approach is applied on the resultant image and the characteristic points are extracted from the views after some preprocessing. These points are then used to form a feature vector that represents the given character as a descriptor. The feature vectors have been classified with the aid of k-NN classifier using Dynamic Time Warping (DTW) as a distance measure. A small dataset of some of the compound characters has also been considered for recognition. The promising results obtained so far encourage the authors for further work on handwritten Bengali scripts.

Spelling Progress Bulletin Summer 1977 P2 in the Printed Version

Spelling Progress Bulletin, Summer, 1977. Dedicated to finding the causes of difficulties in learning reading and spelling. Publisht quarterly: Spring, Summer, Fall, Winter. Subscription $3.00 a year. Volume XVII, no. 2. Editor and General Manager: Newell W. Tune, 5848 Alcove Ave, No. Hollywood, Calif. 91607. Assistant Editor: Helen Bonnema Bisgard, 13618 E. Bethany Pl, #307, Denver, Colo, 80232. Editorial Board: Emmett A. Betts, Helen Bonnema, Godfrey Dewey, Wilbur J. Kupfrian, William J. Reed, Ben D. Wood, Harvie Barnard. Table of Contents: 1. Spelling and Phonics II, by Emmett Albert Betts, Ph.D., LL.D. 2. Sounds and Phonograms I (Grapho-Phoneme Variables), by Emmett A. Betts, Ph.D. 3. Sounds and Phonograms II (Variant Spellings), by Emmett Albert Betts, Ph.D, LL.D. 4. Sounds and Phonograms III (Variant Spellings), by Emmett Albert Betts, Ph.D, LL.D. 5. The Development of Danish Orthography, by Mogens Jansen & Tom Harpøth. 6. The Holiday, by Frank T. du Feu. 7. A Decade of Achievement with i. t. a., by John Henry Martin. 8. Ten Years with i. t. a. in California, by Eva Boyd. 9. Logic and Good Judgement needed in Selecting the Symbols to Represent the Sounds of Spoken English, by Newell W. Tune. 10. Criteria for Selecting a System of Reformed Spelling for a Permanent Reform, by Newell W. Tune. 11. Those Dropping Test Scores, by Harvie Barnard. 12. Why Johnny Still Can't Learn to Read, by Newell W. Tune. 13. A Condensed Summary of Reasons For and Against Orthographic Simplification, by Harvie Barnard. 14. An Explanation of Vowels Followed by /r/ in World English Spelling (I.