KNIME - the Konstanz Information Miner

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

VHB Faltfahrplan.Pdf

Fahrplan Radolfzell – Stockach NE Fahrplan Radolfzell – StockachFahrplan NE Stockach NE – Radolfzell Fahrplan Stockach NE – Radolfzell RADOLFZELL – STOCKACH STOCKACH – RADOLFZELL 1996–2021 25 Jahre HzL RB HzL HzL HzL RB HzL HzL HzL HzL HzL HzL HzL HzL HzL HzL HzL HzL HzL RB HzL HzL RB HzL HzL HzL HzL HzL HzL HzL HzL HzL 74403 17383 74405 74407 74409 17757 74411 74413 74415 74417 74419 74421 74423HzL 74425RB 74427HzL 74429HzL HzL RB HzL HzL HzL74400HzL74404HzL74406HzL17750HzL74408HzL74410HzL17756HzL74412 74414 74416 74418 74420 74422HzL 74424HzL 74426HzL 74428RB HzL HzL RB HzL HzL HzL HzL HzL HzL HzL HzL HzL Mo-Fr Mo-Fr Mo-Fr Mo-Fr Mo-Sa Mo-Fr Mo-Fr Mo-Fr74403 17383 Mo-Fr74405 74407 74409 17757 74411 74413 74415Mo-Fr74417Mo-Fr74419Mo-Sa74421Mo-Fr74423Mo-Fr74425 74427Mo-Fr74429Mo-Fr 74400 Mo-Fr74404 74406 Mo-Fr17750 74408 74410 17756 74412 74414 74416 74418 74420 74422 74424 74426 74428 Ẅ Ẅ Ẅ Ẅ ẅ Ẅ Ẅ Mo-FrẄ Mo-Fr Mo-FrẄ Mo-Fr Mo-Sa Mo-Fr Mo-Fr Ẅ Ẅ ẅ Mo-FrẄ Ẅ Mo-Fr Ẅ Ẅ Mo-Fr Mo-FrẄ Mo-Sa Mo-FrẄ Mo-Fr Mo-Fr Mo-Fr Mo-Fr Mo-Fr Ẅ Ẅ Ẅ Ẅ ẅ Ẅ Ẅ Ẅ Ẅ Ẅ Ẅ ẅ Ẅ Ẅ Ẅ Ẅ Ẅ Ẅ Radolfzell 5 10 5 23 5 41 6 15 6 41 6 58 7 15 7 52 8 52 9 52 10 52 11 52 12 22 12 52 13 22 13 52 Stockach NE 4 35 5 37 6 08 6 38 7 08 7 46 8 16 9 16 10 16 11 16 12 16 12 46 13 16 13 46 Radolfzell 5 10 5 23 5 41 6 15 6 41 6 58 7 15 7 52 8 52 9 52 10 52 11 52 12 22 12 52 13 22 13 52 Stockach NE 4 35 5 37 6 08 6 38 7 08 7 46 8 16 9 16 10 16 11 16 12 16 12 46 13 16 13 46 Haselbrunn 5 12 Ꭺ 5 43 6 17 6 43 Ꭺ 7 17 7 54 8 54 9 54 10 54 11 54 12 24 12 54 13 24 13 54 Nenzingen 4 39 5 41 6 12 6 -

Ab Schaffhausen/Konstanz

Schiffsfahrplan 2019 Untersee und Rhein Radolfzell Insel Reichenau Iznang Schaffhausen Büsingen Gailingen Neuhausen Gaienhofen Untersee am Rheinfall Hemmen hofen Bodenseeforum Stein am Rhein Diessenhofen Wangen Konstanz Rheinfall Rhein Öhningen Berlingen Ermatingen Bodensee Steckborn Mannenbach Gottlieben Mammern Kreuzlingen Richtung Kreuzlingen Richtung Schaffhausen Kurse www.bsb.de CourseKurs | Schedule 525 533 543 551 CourseKurs | Schedule 528 536 550 558 Wichtige Informationen PrintempsFrühjahr • Spring 1 Schaffhausen 9.10 1 11.10 13.18 15.18 Kreuzlingen 9.00 11.00 14.27 1 16.27 Büsingen, Bodenseeforum : Halt auf Verlangen Büsingen 1 9.38 11.38 13.46 15.46 Konstanz 9.12 11.12 14.39 16.39 nicht alle Kurse / Haltestellen, z.T. nur Handrollstühle 13. 04. – 28. 04. 2019 1 Diessenhofen / Bodenseeforum 9.17 11.17 14.44 16.44 Restaurant auf allen Kursschiffen. « Schiffs-Frühstück » Do – So, allg. Feiertage Gailingen 10.10 12.10 14.18 16.18 auf den Kursen 525 und 528. Jeu – Dim, fêtes générales Gottlieben 9.32 11.32 14.59 16.59 Stein am Rhein 11.15 13.15 15.23 17.23 Allgemeine Feiertage: Karfreitag 19. April, Ostermontag 22. Thu – Sun, public holidays Ermatingen 9.52 11.52 15.19 17.19 April, Tag der Arbeit 1. Mai, Auffahrt 30. Mai, Pfingst montag 10. Juni, Fronleichnam 20. Juni Öhningen 11.32 13.32 15.40 17.40 Reichenau 10.06 12.06 15.33 17.33 Mammern 11.39 13.39 15.47 17.47 Fahrkarten an Bord der Schiffe erhältlich. 29. 04. – 21. 06. 2019 Mannenbach 10.13 12.13 15.40 17.40 Wangen 11.45 13.45 15.53 17.53 Gültig: GA, Halbtax ( 50 % ), Tageskarte zum Halbtax, täglich Berlingen 10.23 12.23 15.50 17.50 OSTWIND-Tageskarte Plus, Gemeinde-Tageskarte. -

29. Buchausstellung

42 Amtsblatt Stadt Isny Isnyaktuell Isnyer Wirtschaftsanzeiger mit dem Amtsblatt der Stadt Isny Nr. 42 | 16. Oktober 2019 „Stepping out“ - Tanzen fürs Leben ISNY – Im zehnten Jahr der Theater- gruppe „D’Weibsbilder & Co.“ haben die Schauspieler das amerikanische Step- pen gelernt. Am Premierenabend in Bolsternang im Gasthaus Schwarzer Grat werden nicht nur die Füße wirbeln, sondern auch die Leben der Charaktere im Stück werden ordentlich durch- einandergewirbelt. Acht sehr unter- schiedliche Menschen lernen Stepp- tanzen. Und sie tun das im Theater- stück "Stepping out" von Richard Harris aus den unterschiedlichsten Motiven. Da ist die Hoffnung, im schnellen Rhythmus Verletzungen zu vergessen und mit dem rasenden Klack-Klack der Sohlen, Probleme von der Seele zu tanzen. Starker Ansporn für alle ist aber, im wirbelnden Tanz der Füße bei mitreißender Musik, ausgelassenes Zusammensein genießen zu können. Am Samstag, 19. Oktober um 19.30 Uhr ist Premiere von „Stepping out“. Alle weiteren Auftrittstermine und Infos unter www.d-weibsbilder.de ANZEIGE ÕÀ >Õà > *>À] ÃÞ ->ÃÌ>}] ÓÈ°£ä° Là ÀiÌ>} 䣰££° 7À viiÀ ÕÃiÀi iÕÌÃV i ÕV >`Õ}ëÀià iÃÕ}Ì >À>>ÀÀiÀÀ}iLØ ® >Õà Ài ÕV ¹" >`Ã>Àú *}iÀ-«>i® iÃÕ}Ì`iÀÃÞiÀÕÌÀ >}>À }iÀ"vvi >Õà ÀiâÜiÌi ÕV ¹LÌiÃiiÀ>vØÀ`i<ÕÕv̶º &vvÕ}ÃâiÌi\ ÌB}° £äÓä 1 À] ÜÜÜ°LÕV >`Õ}>ÞiÀ°`i >Õ~iÀ £\Î䮳À£n\ää® Isny aktuell Isny aktuell 16. Oktober2019 2 Neues Ringgtttheater Isny Stellenangebote www.kinnoo-isny.de Telefon 0 757562/8642 ' '% ! 7À ÃÕV i ii >ÃÃi -i Ã` yiÝLi] ÃÞ«>Ì ÃV Õ` i }ÕÌ Ì iÃV i âÕÃ>i -

Informationen Zu Einem Geplanten Wohnungswechsel

Abgeschlossene Wohnungen Zahl der Wohlfläche Höchstbetrag für die Personen in qm Nettokaltmiete in Euro Zahl der Wohlfläche Höchstbetrag für die im Haushalt Umland Seenahe Personen in qm Nettokaltmiete in Euro Hegau/ Gemeinden*, im Haushalt Konstanz Reichenau Stockach* Singen, 1 45 516 469 Rielasingen- 2 60 640 597 Worblingen 3 75 780 704 1 45 400 400 4 90 929 790 2 60 450 500 5 105 1.084 884 3 75 530 580 Informationen zu 6 120 1.242 992 4 90 600 680 für jede 15 156 124 5 105 710 870 weitere 6 120 800 1.000 Person für jede 15 110 150 einem geplanten weitere Person Wohnungswechsel Zahl der Wohn- Höchstbetrag für die Nettokaltmiete Personen fläche in Euro im in qm Allens- Hegne Kalt- Freun- *Umland Hegau/ Stockach = Gailingen, Steißlingen, Volkerts- Stand: Oktober 2020 Haushalt bach brunn dental hausen, Gottmadingen, Aach, Tengen, Engen, Mühlhausen- Kernort Langen- Ehingen, Hilzingen, Eigeltingen, Hohenfels, Mühlingen, Orsingen- rain Nenzingen, Stockach 1 45 498 448 379 340 Geschäftsstelle Konstanz 2 60 626 564 477 427 *Seenahe Gemeinden/Singen/Rielasingen-Worblingen = Bodman- Konzilstraße 9, 78462 Konstanz 3 75 756 681 576 516 Ludwigshafen, Gaienhofen, Moos, Öhningen, Büsingen*, Singen, für die Gemeinden Konstanz, Reichenau und Allensbach 4 90 914 823 696 624 Rielasingen-Worblingen 5 105 1.095 987 825 748 6 120 1.300 1.171 991 888 *Aufgrund des Währungsfaktors kann in Büsingen ein Zuschlag Geschäftsstelle Singen für jede 15 163 147 124 111 von 10 Prozent berücksichtigt werden. Maggistraße 7, 78224 Singen weitere für die Gemeinden Singen, Gailingen, Tengen, Engen, Steißlingen, Person Volkertshausen, Büsingen, Hilzingen, Gottmadingen, Rielasingen- Zimmer Worblingen, Mühlhausen-Ehingen, Eigeltingen, Aach, Radolfzell, Gaienhofen, Moos und Öhningen Zimmer Warmmiete in Euro Zahl der Wohn- Höchstbetrag für die Nettokaltmiete Stadt Konstanz maximal 400 Personen fläche in Euro Seenahe Gemeinden, Singen, maximal 350 Geschäftsstelle Stockach im in qm Radolf- Böhr. -

Deutscher Bundestag 5. Wahlperiode Drucksache V/1032 Entwurf Eines

Deutscher Bundestag Drucksache V/1032 5. Wahlperiode Bundesrepublik Deutschland Der Bundeskanzler Bonn, den 25. Oktober 1966 I 2 - 31109 - 2876 - 66 An den Herrn Präsidenten des Deutschen Bundestages Hiermit übersende ich den von der Bundesregierung be-- schlossenen Entwurf eines Gesetzes zu dem Vertrag vom 23. November 1964 zwischen der Bundesrepu blik Deutschland und der Schweizerischen Eidgenossenschaft Tiber die Einbeziehung der Gemeinde Büsingen am Hochrhein in das schweizerische Zollgebiet nebst Begründung mit der Bitte, die Beschlußfassung des Deut- schen Bundestages herbeizuführen. Der Vertrag und das dazugehörige Schlußprotokoll in deutscher Sprache sowie eine Denkschrift nebst einer Anlage sind bei- gefügt. Federführend ist der Bundesminister des Auswärtigen. Der Bundesrat hat in seiner 299. Sitzung am 14. Oktober 1966 gemäß Artikel 76 Abs. 2 des Grundgesetzes beschlossen, gegen den Gesetzentwurf keine Einwendungen zu erheben. Er ist der Ansicht, daß das Gesetz seiner Zustimmung bedarf. Der Stellvertreter des Bundeskanzlers Mende Drucksache V/1032 Deutscher Bundestag — 5. Wahlperiode Entwurf eines Gesetzes zu dem Vertrag vom 23. November 1964 zwischen der Bundesrepublik Deutschland und der Schweizerischen Eidgenossenschaft über die Einbeziehung der Gemeinde Büsingen am Hochrhein in das schweizerische Zollgebiet Der Bundestag hat mit Zustimmung des Bundes- rates das folgende Gesetz beschlossen: Artikel 1 Dem in Freiburg im Breisgau am 23. November 1964 unterzeichneten Vertrag zwischen der Bundes- republik Deutschland und der Schweizerischen Eid- genossenschaft über die Einbeziehung der Gemeinde Büsingen am Hochrhein in das schweizerische Zoll- gebiet nebst Schlußprotokoll und Anlage wird zu- gestimmt. Der Vertrag, das Schlußprotokoll und die Anlage werden nachstehend veröffentlicht. Artikel 2 Dieses Gesetz gilt auch im Land Berlin, sofern das Land Berlin die Anwendung dieses Gesetzes fest- stellt. -

Zuwanderung“ Von Säuglingen Am Hochrhein Oder Wie Vorsichtig Man Mit Daten Umgehen Muss

Statistisches Monatsheft Baden-Württemberg 6/2007 Bevölkerung, Familie Geburtendefizite und hohe „Zuwanderung“ von Säuglingen am Hochrhein oder wie vorsichtig man mit Daten umgehen muss Cornelia Schwarck In einigen der an die Schweiz grenzenden Ge- Die Statistik der Geburten erfasst meinden am Hochrhein ist der Anteil der „Zu- alle Lebend- und Totgeburten, die wanderer“ unter einem Jahr deutlich höher sich in Deutschland im jeweiligen als im Landesschnitt. Je nach der Lage der Berichtszeitraum ereignet haben. Sie Gemein de und deren Nähe zu medizinischen werden standesamtlich am Geburtsort er- Einrichtungen kann vermutet werden, dass es fasst. Die Datensätze werden mindes- sich zum Teil um Kinder handelt, die in der tens monatlich an das zuständige Statis- Schweiz geboren wurden. Mit Hilfe der Ge- tische Landesamt übermittelt. Dort wer- burtenstatistik lässt sich dies allerdings nicht den die Angaben mitmilfe von Schlüsseln Dipl.-Ingenieurin nachweisen. Deshalb wurde anhand von wei- Cornelia Schwarck ist umkodiert und auf Plausibilität geprüft. teren Auswertungen versucht, die These zu Referentin im Referat Anschließend erfolgt zwischen den Sta- „Landesinformations- belegen. Es ergaben sich Anhaltspunkte, die system, Regionalstatistik, tistischen Landesämtern ein Austausch, diese Annahme zumindest für einige der Zentrale Informations- bei dem die Datensätze dem Bundesland dienste und Internet- Hochrheingemeinden bestätigen. angebot“ des Statistischen zugeordnet werden, in dem sich der Haupt- Landesamtes Baden- wohnsitz der Mutter befindet. Wird das Württemberg. Kind im Ausland geboren wie hier zum Beispiel in der Schweiz, erfolgt dort die Im Blickpunkt der Untersuchung stehen die di- Meldung an die zuständige Zivilstands- rekt an die Schweiz grenzenden Gemeinden der behörde. Am Hauptwohnsitz der Mutter Landkreise Lörrach, Waldshut und Konstanz. -

Gemeinde Zentrum Öffnungzeiten Angebotene Tests Anmeldun G

Anmeldung/Termin Gemeinde Zentrum Öffnungzeiten Angebotene Tests Anmeldung unter: erforderlich? Gemeinde Allensbach Mo 9-12 Uhr Allensbach Rathausplatz 1, Im Torkel Kostenloser Bürgerschnelltest Nein Mi und Fr 15-18 Uhr 78476 Allensbach Campingplatz Allensbach Allensbach Strandweg 30 Mo-So 9-19 Uhr Kostenloser Bürgerschnelltest Nein 78476 Allensbach Lochmühle Eigeltingen Mo-Sa 11-15 Uhr oder nach Eigeltingen Konstenloser Bürgerschnelltest Nein 0171/8238346 Hinterdorfstraße 44 telefonischer Vereinbarung Mo-Fr variabel, abhängig von MVZ der Nachfrage und der Engen Hewenstr. 19 Kostenloser Bürgerschnelltest Ja http://www.schnelltest-engen.de/ Kapazitäten der 78234 Engen durchführenden Ärzte Stadt Apotheke Engen Vorstadt 8 Mo-Fr 9-13 Uhr Kostenloser Bürgerschnelltests Nein 78234 Engen Mo-Fr 8:30-12 Uhr und 17- Fit for Life Gesundheits-Zentrum 20:30 Uhr andere Terminvereinbarung möglich unter Engen Ballenbergstraße 19 Konstenloser Bürgerschnelltest Nein, aber möglich Sa 14:30-16:30 Uhr 07733/7300 78234 Engen So 10-12 Uhr Yeti Dental www.testzentrum.yeti-dental.de Engen Industriestr. 3 Mo-Fr 14-15 Uhr Kostenloser Bürgerschnelltest Ja oder 07733/9410-19 78234 Engen für Termine außerhalb der Öffnungszeiten Corona Testservice BW Mo-So 10-12Uhr -Höri Testzentrum- zusätzlich von Do-Sa 16-18 Gaienhofen/ Horn Kostenloser Bürgerschnelltest Nein 0172 742 6636 Hauptstrasse 126 Uhr 78343 Gaienhofen - Horn Boxenstop (gehext UG ) Mo-So 10-12 Uhr und 15-19 Gaienhofen Uferstraße 23 Kostenloser Bürgerschnelltest Nein Uhr 78343 Gaienhofen www.gehext.net Mobiles Testzentrum, geführt von Gaienhofen Nach Bedarf Konstenloser Bürgerschnelltest Ja [email protected] Boxenstop (gehext UG) Sybille Treptow, Tel.: 01735637568 Fr 19-20 Uhr Hafen Testschiff So und Feiertags 11:30-12:30 01716817427 Gaienhofen Erbringstr. -

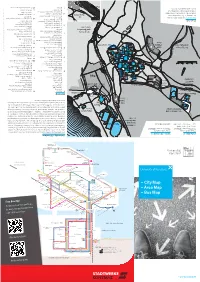

– City Map – Area Map – Bus

09.03.2015 10:13:18 09.03.2015 1 Lage-Stadt-Bus-Flyer_2015_engl.indd – uni-konstanz.de – Android handsets Android 908 Ostwind Lokalzone Kreuzlingen Lokalzone Ostwind Tarifzonengrenze Hauptzoll Tarifzonengrenze 908 Bodanplatz Schnetztor Haltestelle wird von dieser Linie nicht bedient nicht Linie dieser von wird Haltestelle X X 1 9B Haltestelle wird von dieser Linie nur in eine Richtung angefahren Richtung eine in nur Linie dieser von wird Haltestelle 9A 14 Döbele 12 2 Haltestelle wird nur in eine Richtung angefahren Richtung eine in nur wird Haltestelle 3 6 X 5 X (näheres entnehmen Sie bitte dem Fahrplan) dem bitte Sie entnehmen (näheres Döbelestr. Bodanplatz Nur zeitweiliger Linienbetrieb zeitweiliger Nur Nachtschwärmer Legende Hieronymusgasse Schulthaißstr. Lutherplatz 4 1 13 2 1 908 9B 1 9A 3 2 4 Tarifzonengrenze 6 5 4 Brüelstr. Bahnhof 13 908 Tarifzonengrenze iPhone handsets iPhone Marktstätte 6 9B 2 Stephansschule Bürgerbüro 908 9A 13 3 2 Stadtzentrum Paradies 14 6 4 12 3 12 14 5 1 6 BSB/Der Katamaran KN/FN Katamaran BSB/Der Konzilstraße 9B Gymnasium Ellenrieder 1 Gartenstr. Konzilstraße/Theater Gartenstr. Schänzle-Sporthalle/ Laube Niederburg Laube Schottenplatz VHB - City - Zone Konstanz Zone - City - VHB HTWG Wessenbergschule 5 6 3 2 12 14 9B 1 9A Bodensee-Therme Konstanz Bodensee-Therme one of these codes: codes: these of one Spanierstr. Sternenplatz Sternenplatz 4 1 13 2 1 908 9B Kliniken Schmieder Kliniken Hallenbad 9A 5 4 1 3 2 / to use journey planner from from planner journey use to Sternenplatz Rosenau Freibad Horn Freibad Tenbrinkstr. Hebelstr. 6 Markgrafenstr. Line-Eid-Str. Download our free and easy easy and free our Download Stromeyersdorf Gottmannplatz 6 Neuwerk Bodensee Stadion Bodensee Rudolf-Diesel-Str. -

Konstanz) Black Forest Railway Line

Welcome to BADEN-WÜRTTEMBERG Amenities Arriving by train/bus/metro Constance is the last station on the Offenburg to Constance Rooms (Konstanz) Black Forest Railway line. At the station, take a number 1 bus to the “Allmannsdorf“ stop or a number 4/13 • 177 beds in 44 rooms bus to the “Jugendherberge“ (“Youth Hostel“) stop. It‘s just • 6 single room with shower/toilet about 15 minutes from the station. • 28 four-bed rooms with shower/toilet • 1 five-bed room with shower/toilet • 9 six-bed rooms with shower/toilet Main a.M. >Würzbu Ta • 10 rooms for group leaders uber ankfurt> Weinheim Fr rg • 1 room accessible for wheelchairs with shower/toilet > Walldürn Mannheim Creglingen Heidelberg TAUBERTAL • 5 recreation rooms Koblenz Mosbach- Neckarelz 81 < 5 Neckargemünd- agst Dilsberg Neck J HOHENLOHE – ar SCHWÄBISCHER WALD er g Koch g 6 rnber 6 ein Nü > Amenities for groups Rh Heilbronn > Würzbur KURPFALZ – ODENWALD Karlsruhe Schwäbisch Hall room m2 persons Pforzheim- Dillweißenstein Murrhardt Enz 81 Ludwigsburg Lindau 36 max. 30 8 STUTTGART/ Aalen Baden-Baden MITTLERER Stuttgart Friedrichshafen 36 max. 30 NECKAR Göppingen- Hohenstaufen 5 Kehl Forbach-Herrenwies Tübingen Konstanz 36 max. 30 7 8 Überlingen 36 max. 30 Bad Urach Freudenstadt ar eck SCHWÄBISCHE ALB – > Ortenberg N DONAUTAL Mü Blaubeuren Ulm nc Meersburg 24 max. 20 RHEINEBENE he n 81 Sonnenbühl- Erpngen Friedrichshafen + Konstanz + Triberg 5 SCHWARZWALD Überlingen + Meersburg together 132 max. 150 Balingen-Lochen au Titisee-Neustadt/ Rottweil Don Breisach Rudenberg Sigmaringen Biberach 7 n ) he Conference equipment: CD player, DVD player, flip chart, Feldberg Leibertingen- Münc (F Freiburg Titisee > Hinterzarten/ Wildenstein > Todtnau- Titisee Ke internet access (WLAN), metaplan board, presentation case, Todtnauberg BODENSEE – mpten Schluchsee OBERSCHWABEN Schluchsee- 96 Mulhouse (Allgäu) projector, screen, TV set. -

Insights from Armed Groups in the Eastern DR Congo

æCLINICAL RESEARCH ARTICLE Aggression inoculates against PTSD symptom severity* insights from armed groups in the eastern DR Congo Tobias Hecker1,2*, Katharin Hermenau1,2, Anna Maedl2, Maggie Schauer1,2 and Thomas Elbert1,2 1Department of Psychology, University of Konstanz, Konstanz, Germany; 2vivo international, Allensbach, Germany Background: In the ongoing conflict in the Democratic Republic of the Congo (DRC), combatants are exposed to massive forms of violence and other traumatic stressors. Nevertheless, many combatants do not suffer from trauma-related disorders, although they have experienced numerous traumatizing events. Perceiving aggressive behavior as fascinating and arousing might be a defense against trauma-related disorders in the violent environment of war and conflict. Objective: Thus, in this study we investigated the relationship between the exposure to traumatic stressors, appe- titive aggression, and posttraumatic stress disorder (PTSD) symptom severity. We hypothesized that cumulative traumatic experiences correlated positively and appetitive aggression negatively with PTSD symptom severity. Method: In total, 105 voluntary male combatants from different armed groups in the eastern DRC took part in this study. In a semistructured interview, respondents were questioned about their exposure to traumatic stressors, the extent of appetitive aggression (Appetitive Aggression Scale) and their PTSD symptom severity (PTSD Symptom Scale - Interview). Results: A multiple sequential regression analysis showed that traumatic events were positively related to PTSD symptom severity. For participants with low to medium PTSD symptom severity, appetitive aggression correlated negatively with PTSD symptom severity. Conclusions: The results of this study provide further support for earlier findings that repeated exposure to traumatic stressors cumulatively heightens the risk of PTSD and revealed that appetitive aggression buffers the risk of developing PTSD symptoms under certain circumstances. -

Aus Der Geschichte Der Stadt Isny Tipps & Infos

Aus der Geschichte der Stadt Isny Villa Ysinensi Wohlstand durch Leinwandhandel Isny, selbstbewusste Stadt Die erste urkundliche Erwähnung Isnys geht auf das Jahr 1042 zurück. Wichtigste Quelle des Wohlstandes war in Isny der Leinwandhandel. Handel und Gewerbe bildeten stets das wirtschaftliche Rückgrat der Im Dezember dieses Jahres weihte Bischof Eberhard von Konstanz in Die Bürger der Stadt waren an der Ravensburger Handelsgesellschaft Stadt. Schon 1876 wurde ein Verschönerungsverein gegründet, der „villa Ysinensi“ eine von Graf Wolfrad von Altshausen-Veringen gestif- beteiligt und pflegten Kontakte in alle Länder Europas und bis nach Wege zum Schwarzen Grat – mit 1.118 m der höchste Berg Württem- tete Kirche. Manegold, der Sohn des Kirchenstifters und Bruder des Südamerika. 1507 erhielt Isny das Münzrecht und durfte Heller, bergs - anlegte, einen Aussichtsturm errichtete und Wegweiser auf- Reichenauer Mönches Hermannus Contractus, erweiterte die Stiftung Pfennige, Batzen, Kreuzer, Drittel-Güldiner und Taler prägen. stellte. Am Fuße des Höhenzuges wurde Anfang des 20. Jahrhunderts durch den Bau eines Klosters, stattete es reichlich mit Gütern aus und mit der Erbauung der Lungenheilstätte Überruh die Tradition Isnys als übergab es Hirsauer Benediktinermönchen. Bei dem aufblühenden Reformation prädikatisiertes Gesundheitszentrum begründet. Bis zum Ersten Welt- Benediktinerkloster siedelten sich bald Handwerker und Kaufleute an. Schnell fanden die Gedanken der Reformation in Isny Verbreitung, zumal krieg suchten Isny immer mehr Touristen auf. 1924 wurde hier die Es erfolgte der planmäßige Aufbau einer Marktsiedlung, die im 13. Jahr- sich die Bürger der Stadt mit dem Kloster immer wieder uneins waren. erste Deutsche Nordische Skimeisterschaft ausgetragen. hundert zur befestigten Stadt wurde. Als eines der Zentren der Reformation im Allgäu nahm Isny 1529 in Speyer an der Protestation der evangelischen Stände teil und wurde Das Gesicht der Stadt hat moderne Züge angenommen. -

Büsingen Am Hochrhein – Ein Dorf Zwischen Den Grenzen

Büsingen am Hochrhein – Ein Dorf zwischen den Grenzen Ortsentwicklungskonzept 1. Einführung 2. Rahmenbedingungen 2.1. historischer Hintergrund Exklave mit Staatsvertrag 2.2 Bevölkerungsentwicklung - Status Quo - Altersstruktur - Prognose 2.3. Wirtschaftliche Entwicklung - Status Quo - Pendlerstruktur - Verkehrssituation ÖPNV - Individualverkehr - Naherholung - Prognose 3. Potentialanalyse 3.1. Potentialabschätzung - Stemmer - Gries - Ortsetter 3.2 Bewertung Flächenpotentiale 4. Bewertung / Fazit AZ: 623.4 2 1. Einführung Ziel des vorliegenden Ortsentwicklungskonzeptes für die Gemeinde Büsingen soll eine nachhaltige und ganzheitliche Planung der langfristigen Entwicklung Büsingens sein. Hierbei soll die Ortsentwicklung - insbesondere die räumliche, wirtschaftliche und soziale Entwicklung der Gemeinde - auf integrierte Weise koordiniert werden. Die kommunal und privatwirtschaftlich organisierten Infrastrukturen sowie die Generalsanierung von privaten und öffentlichen Einrichtungen sollen mittelfris- tig umgesetzt werden. Eine aktive Grundstücks- und Baulandpolitik soll die Basis für eine positive Bevölkerungsentwicklung legen. 2. Rahmenbedingungen 2.1 Historische Hintergründe – Exklave mit Staatsvertrag Die Gemeinde Büsingen ist durch eine beeindruckende Ortsgeschichte ge- prägt, die sich bis zum heutigen Tage in der Struktur und Ausrichtung des Dorfes ablesen lässt. Die erste urkundliche Erwähnung von Büsingen geht auf das Jahr 1090 zu- rück. Seit dieser Zeit entwickelt sich der Ort kontinuierlich. Ein epochaler Ein- schnitt, der sich