Springer Series in Statistics

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

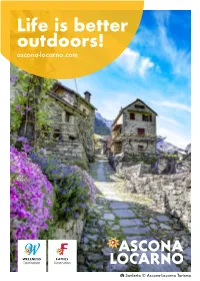

Life Is Better Outdoors! Ascona-Locarno.Com

Life is better outdoors! ascona-locarno.com Sonlerto © Ascona-Locarno Turismo Milano (I) Milano Lago Maggiore Lago Lugano (I) Torino Lyon (F) Lyon Sion Genève Bellinzona Ascona-Locarno Lausanne Switzerland Chur Bern Luzern Innsbruck (A) (A) Innsbruck Zürich Basel St. Gallen St. München (D) (D) München Freiburg (D) Freiburg Luino (I) Luino Cannobio (I) Cannobio Indemini Alpe di Neggia di Alpe Gambarogno i Ranzo Gambarogno Gerra Brissago S.Nazzaro di Brissago di km Bellinzona/ Lugano Porto Ronco Porto Piazzogna 5 Isole Isole Gambarogno Vira Bellinzona i s/Ascona Lugano Ascona Camedo Domodossola (I) Domodossola Quartino Ronco Palagnedra Bellinzona/ Cugnasco-Gerra Magadino i Contone Rasa 2 Borgnone Agarone Contone Arcegno Locarno Corcapolo Centovalli Losone i Muralto Quartino Golino Cugnasco-Gerra Intragna Verdasio Minusio Riazzino i 0 Verscio Bellinzona Comino Orselina Pila/Costa Tenero Gordola Agarone Cavigliano Brione Tegna Mosogno Riazzino Contra Spruga Gambarogno Vogorno Gordola Cardada Crana Auressio i Alpe di Neggia Vira Cimetta Russo Comologno Loco Berzona Avegno Mergoscia i Valle Onsernone Valle Magadino Piazzogna Tenero Indemini Valle Verzasca Minusio Salei Gresso Contra Gordevio Vogorno Muralto Vergeletto Aurigeno Corippo Mergoscia Gambarogno Lavertezzo S.Nazzaro Frasco Moghegno Locarno Cimetta Corippo Brione Brione Verzasca Gerra i i Maggia Gambarogno i Lodano Lavertezzo Orselina Cardada Coglio Ascona Ranzo Giumaglio Sonogno Gerra Verzasca Luino (I) Avegno Valle Verzasca Valle Losone Val di Campo di Val Gordevio Isole di Brissago -

Da Acquarossa Al Fronte Più Di Cento Anni Fa Covid-19, Intervista Al Medico Giuseppe Allegranza Emigrazione Bleniese E Ticinese

Mensile vallerano Maggio 2020 - Anno LI - N. 5 GAB - 6715 DONGIO - Fr. 5.– Una bella storia iniziata Da Acquarossa al fronte più di cento anni fa Covid-19, intervista al medico Giuseppe Allegranza Emigrazione bleniese e ticinese quiete degli ospedali di Acquarossa e Faido – dei quali è primario – per dare una mano nell’emergenza. “Quando mi hanno chiamato, sono stato contento. Già nei giorni pre- cedenti, vedendo l’evoluzione dei casi, quasi mi dispiaceva non poter essere d’aiuto. E quando poi sono arrivato a Locarno mi ha stupito come fossero riusciti a riorganizzare tutto in modo così rapido”. Molte incognite Poi, però, “è chiaro che questo virus ha qualcosa che spaventa, anzitutto i pazienti. In particolare il fatto che il suo decorso sia difficile da prevedere. E poi, come stiamo capendo col pas- sare del tempo, la polmonite è solo Verso il traguardo, il dr. Allegranza sulla Cima dell’Uomo foto Teto Arcioni uno dei suoi effetti: ci sono persone che sviluppano problemi al cuore, ai di Lorenzo Erroi e Red scorrere del tempo, l’intervista realizza- reni, embolie e trombosi. Mentre al- di Nivardo Maestrani ta dal collega Lorenzo Erroi al medico tri contagiati quasi non se ne accorgo- primario del nostro ospedale di valle, no”. Poi, dietro ai tubi e ai malanni, ai Come già lo scorso mese di aprile, anche Giuseppe Allegranza. numeri e alle complicazioni, ci sono Tra i ricordi della famiglia materna in questo mese stiamo vivendo all’inse- Redazione i singoli individui. “Una cosa che ho trovato la testimonianza del ma- gna dell’incertezza, sia personale sia per spaventa sempre è quando manca trimonio della zia Dora Fontana, ce- quanto riguarda la salute pubblica e il Chissà cosa dev’essere, fare il medi- il respiro. -

Bruzella, Cabbio, Caneggio, Morbio Superiore, Muggio, Sagno, Ddii Scudellate Quadrimestrale: Quadrimestrale: Ottobre 2013-Gennaio 2014 PELLEGRINAGGIO in POLONIA

Bruzella, Cabbio, Caneggio, Morbio Superiore, Muggio, Sagno, ddii Scudellate quadrimestrale: quadrimestrale: ottobre 2013-gennaio 2014 PELLEGRINAGGIO in POLONIA Nei giorni dal 24 giugno al 4 luglio si è svolto il Pellegrinaggio in Polo- nia. Vi hanno partecipato 45 persone. Questa volta ci siamo reca con il pullman per dare l’occasione anche a quelli “che non volano”. Ovviamente questo ha richiesto più tempo (11 giorni) e sforzo, ma ci ha permesso di visitare, strada facendo, pure le belle ci à (Praga, Vienna). Il primo giorno (24.06) era des nato soltanto alla trasferta a Praga. Il secondo giorno (25.06) era dedicato alla visita della ci à di Praga. Con l’aiuto di una guida locale abbiamo visitato il Hradcany, (con la Ca edrale, il Castello e la Via d’Oro) il Ponte Carlo, le principali vie e piazze della Ci à Vecchia (Piazza del Municipio). Dopo il pranzo, in uno dei ristoran della zona, abbiamo proseguito ancora la nostra visita guidata in centro ci à per concluderla alla famosa Piazza di S. Venceslao, dopo di che avevamo il tempo libero che ognuno poteva trascorrere come voleva (acquis , visite, riposo). Il giorno dopo (mercoledì, 26.06) in ma nata abbiamo lasciato Praga per spostarci in Polonia. Dopo il pranzo (già in territorio polacco) abbia- mo proseguito il nostro viaggio verso la ci à di Czestochowa, nota per 2 il famoso Santuario mariano con l’effi ge della Madonna Nera. Avevamo la fortuna e il privilegio di celebrare la “nostra” Santa Messa proprio davan a questa. Ovviamente, abbiamo visitato altre cose del luogo. -

Onomastikas Pētījumi Onomastic Investigations

Onomastikas pētījumi Onomastic Investigations Rīga 2014 Onomastikas pētījumi / Onomastic Investigations. Vallijas Dambes 100. dzimšanas dienai veltītās konferences materiāli / Proceedings of the International Scientific Conference to commemorate the 100th anniversary of Vallija Dambe. Rīga: LU Latviešu valodas institūts, 2014, 392 lpp. LU LATVIEŠU VALODAS INSTITŪTS Latvian LANGuaGE Institute, UniversitY OF Latvia Reģistrācijas apliecība Nr. 90002118365 Adrese / Address Akadēmijas lauk. 1-902, Rīga, LV-1050 Tālr. / Phone: 67227696, fakss / fax: +371 67227696, e-pasts / e-mail: [email protected] Atbildīgie redaktori / Editors Dr. habil. philol. Ojārs Bušs Dr. philol. Renāte Siliņa-Piņķe Dr. philol. Sanda Rapa Redakcijas kolēģija / Editorial Board Dr. philol. Laimute Balode Dr. philol. Pauls Balodis Asoc. prof. emeritus Botolv Helleland Dr. habil. philol. Ilga Jansone Dr. philol. Volker Kohlheim Dr. philol. Anna Stafecka Mg. philol. Ilze Štrausa Dr. philol. Anta Trumpa Dr. philol. Nataliya Vasilyeva Maketētāja Gunita Arnava ISBN 978-9984-742-75-5 © rakstu autori / authors of articles, 2014 © LU Latviešu valodas institūts, 2014 Saturs / Contents Priekšvārds / Foreword..................................................... 5 Приветствие участникам конференции от доктора филологических наук, профессора Александры Васильевны Суперанской ............................... 8 Philip W Matthews (Lower Hutt). Māori and English in New Zealand toponyms ............................................. 9 Harald Bichlmeier (Halle/Jena/Mainz). Welche Erkenntnisse lassen -

Ticino on the Move

Tales from Switzerland's Sunny South Ticino on theMuch has changed move since 1882, when the first railway tunnel was cut through the Gotthard and the Ceneri line began operating. Mendrisio’sTHE LIGHT Processions OF TRADITION are a moving experience. CrystalsTREASURE in the AMIDST Bedretto THE Valley. ROCKS ChestnutsA PRICKLY are AMBASSADOR a fruit for all seasons. EasyRide: Travel with ultimate freedom. Just check in and go. New on SBB Mobile. Further information at sbb.ch/en/easyride. EDITORIAL 3 A lakeside view: Angelo Trotta at the Monte Bar, overlooking Lugano. WHAT'S NEW Dear reader, A unifying path. Sopraceneri and So oceneri: The stories you will read as you look through this magazine are scented with the air of Ticino. we o en hear playful things They include portraits of men and women who have strong ties with the local area in the about this north-south di- truest sense: a collective and cultural asset to be safeguarded and protected. Ticino boasts vide. From this year, Ticino a local rural alpine tradition that is kept alive thanks to the hard work of numerous young will be unified by the Via del people. Today, our mountain pastures, dairies, wineries and chestnut woods have also been Ceneri themed path. restored to life thanks to tourism. 200 years old but The stories of Lara, Carlo and Doris give off a scent of local produce: of hay, fresh not feeling it. milk, cheese and roast chestnuts, one of the great symbols of Ticino. This odour was also Vincenzo Vela was born dear to the writer Plinio Martini, the author of Il fondo del sacco, who used these words to 200 years ago. -

Jus Plantandi" Nel Canton Ticino E in Val Mesolcina

Appunti sul cosiddetto "jus plantandi" nel Canton Ticino e in val Mesolcina Autor(en): Broggini, Romano Objekttyp: Article Zeitschrift: Vox Romanica Band (Jahr): 27 (1968) PDF erstellt am: 23.09.2021 Persistenter Link: http://doi.org/10.5169/seals-22578 Nutzungsbedingungen Die ETH-Bibliothek ist Anbieterin der digitalisierten Zeitschriften. Sie besitzt keine Urheberrechte an den Inhalten der Zeitschriften. Die Rechte liegen in der Regel bei den Herausgebern. Die auf der Plattform e-periodica veröffentlichten Dokumente stehen für nicht-kommerzielle Zwecke in Lehre und Forschung sowie für die private Nutzung frei zur Verfügung. Einzelne Dateien oder Ausdrucke aus diesem Angebot können zusammen mit diesen Nutzungsbedingungen und den korrekten Herkunftsbezeichnungen weitergegeben werden. Das Veröffentlichen von Bildern in Print- und Online-Publikationen ist nur mit vorheriger Genehmigung der Rechteinhaber erlaubt. Die systematische Speicherung von Teilen des elektronischen Angebots auf anderen Servern bedarf ebenfalls des schriftlichen Einverständnisses der Rechteinhaber. Haftungsausschluss Alle Angaben erfolgen ohne Gewähr für Vollständigkeit oder Richtigkeit. Es wird keine Haftung übernommen für Schäden durch die Verwendung von Informationen aus diesem Online-Angebot oder durch das Fehlen von Informationen. Dies gilt auch für Inhalte Dritter, die über dieses Angebot zugänglich sind. Ein Dienst der ETH-Bibliothek ETH Zürich, Rämistrasse 101, 8092 Zürich, Schweiz, www.library.ethz.ch http://www.e-periodica.ch Appunti sul eosiddetto «jus plantandi» nel Canton Ticino e in val Mesoleina1 La coscienza che, fra i diritti del vicino o patrizio, vi sia anche quello del eosiddetto jus plantandi, vive ancora qua e lä nel Canton Ticino, anche se le disposizioni legislative da oltre un secolo non solo Fabbiano ignorato, ma abbiano cercato di impe- dirne ogni applieazione. -

17A MAESTRIA PAC Faido Torre

2019 17 a MAESTRIA PAC Leventinese – Bleniese Faido Torre 8 linee elettroniche 8 linee elettroniche 6 – 8.12.2019 Fabbrica di sistemi Fabbrica di rubinetteria d’espansione, sicurezza e sistemi sanitari / e taratura riscaldamento e gas Fabbrica di circolatori e pompe Fabbrica di pompe fecali/drenaggio e sollevamento d’acqua Bärtschi SA Via Baragge 1 c - 6512 Giubiasco Tel. 091 857 73 27 - Fax 091 857 63 78 Fabbrica di corpi riscaldanti e www.impiantistica.ch Fabbrica di bollitori, cavo riscaldante, impianti di ventilazione e-mail: [email protected] caldaie, serbatoi per nafta, controllata impianti solari e termopompe Fabbrica di scambiatori di calore Fabbrica di diffusori per l’immissione a piastre, saldobrasati e a e l’aspirazione dell’aria, clappe fascio tubiero tagliafuoco e regolatori di portata DISPOSIZIONI GENERALI Organizzazione: Tiratori Aria Compressa Blenio (TACB) – Torre Carabinieri Faidesi, Sezione AC – Faido Poligoni: Torre vedi piantina 8 linee elettroniche Faido vedi piantina 8 linee elettroniche Date e Orari: 29.11.19 aperto solo se richiesto 30.11.19 dalle ore 14.00 – 19.00 1.12.19 6.12.19 dalle 20.00 alle 22.00 7.12.19 dalle 14.00 alle 19.00 8.12.19 dalle 14.00 alle 18.00 Un invito ai tiratori ticinesi e del Moesano, ma anche ad amici provenienti da fuori cantone, a voler sfruttare la possibilità di fissare un appuntamento, per telefono ai numeri sotto indicati. L’appuntamento nei giorni 29–30–01 è possibile solo negli orari indicati. Appuntamenti: Contattare i numeri telefonici sotto indicati. Iscrizioni: Mediante formulari ufficiali, entro il 19.11.2019 Maestria – TORRE: Barbara Solari Via Ponte vecchio 3 6742 Pollegio 076 399 75 16 [email protected] Maestria – FAIDO: Alidi Davide Via Mairengo 29 6763 Mairengo 079 543 78 75 [email protected] Formulari e www.tacb.ch piano di tiro: www.carabinierifaidesi.ch Regole di tiro: Fanno stato il regolamento ISSF e le regole per il tiro sportivo (RTSp) della FST. -

Orari Sante Messe

COMUNITÀ IN CAMMINO 2021 Anzonico, Calonico, Calpiogna, Campello, Cavagnago, Chiggiogna, Chironico, Faido, Mairengo, Molare, Osco, Rossura. Sobrio Venerdì 24 settembre 20.30 Faido Rassegna organistica Ticinese Concerto del Mo Andrea Predrazzini Si dovrà presentare il certificato Covid! Sabato 25 settembre 10.00 Convento Battesimo di Jungen Jason Ewan Liam 11.30 Lavorgo Battesimo di Marco Fornasier 17.00 Cavagnago Intenzione particolare 17.30 Osco Def.ti Riva Lucia, Americo, Gianni e Sebastiano Leg. De Sassi Maria/ Salzi Rosa/ Beffa- Camenzind 17.30 Chiggiogna Intenzione particolare 20.30 Chiggiogna Concerto di musica classica dell’Orchestra Vivace della Riviera Si dovrà presentare il certificato Covid! Domenica 26 settembre 9.00 Mairengo Ann. Maria Alliati IV dopo il 9.00 Chironico Def.ta Stella Zorzi Martirio di Leg. Cattomio Angelica San 10.30 Calpiogna Intenzione particolare Giovanni 10.30 Faido Def.ta Pozzi Laura Battista Defti Sérvulo Daniel Antunes e Edi Richina Lunedì 27 settembre 18.00 - Ostello Faido Gruppo di Lavoro inter-parrocchiale 19.30 Media Leventina Martedì 28 settembre Mercoledì 29 settembre Fino a nuovo avviso le SS. Messe feriali in Convento Giovedì 30 settembre sono sospese Venerdì 1°ottobre Sabato 2 ottobre 17.00 Sobrio Leg. Defanti Ernesta/Paolo/ Lorenza/ Angelica 17.30 Mairengo Intenzione particolare 17.30 Chiggiogna Intenzione particolare Domenica 3 ottobre 9.00 Chironico Leg. Solari Amtonia. Pedrini Maria/ Vigna Marta, Martini Gerolamo V dopo 10.30 Osco Ia Comunione il martirio di 10.30 Molare Leg. De Maria Dionigi, Luisa e Daniele S. Giovanni 10.30 Faido 10°Ann. Bruno Lepori Battista 15.00 Grumo Battesimo di Nicole Buletti Covid-19 / Celebrazioni liturgiche / aggiornamento dell’8 settembre 2021 Le recenti comunicazioni da parte dell’Autorità federale circa le nuove disposizioni in periodo di pandemia entreranno in vigore a partire da lunedì 13 settembre 2021 (cfr. -

Aerodrome Chart 18 NOV 2010

2010-10-19-lsza ad 2.24.1-1-CH1903.ai 19.10.2010 09:18:35 18 NOV 2010 AIP SWITZERLAND LSZA AD 2.24.1 - 1 Aerodrome Chart 18 NOV 2010 WGS-84 ELEV ft 008° 55’ ARP 46° 00’ 13” N / 008° 54’ 37’’ E 915 01 45° 59’ 58” N / 008° 54’ 30’’ E 896 N THR 19 46° 00’ 30” N / 008° 54’ 45’’ E 915 RWY LGT ALS RTHL RTIL VASIS RTZL RCLL REDL YCZ RENL 10 ft AGL PAPI 4.17° (3 m) MEHT 7.50 m 01 - - 450 m PAPI 6.00° MEHT 15.85 m SALS LIH 360 m RLLS* SALS 19 PAPI 4.17° - 450 m 360 m MEHT 7.50 m LIH Turn pad Vedeggio *RLLS follows circling Charlie track RENL TWY LGT EDGE TWY L, M, and N RTHL 19 RTIL 10 ft AGL (3 m) YCZ 450 m PAPI 4.17° HLDG POINT Z Z ACFT PRKG LSZA AD 2.24.2-1 GRASS PRKG ZULU HLDG POINT N 92 ft AGL (28 m) HEL H 4 N PRKG H 3 H 83 ft AGL 2 H (25 m) 1 ASPH 1350 x 30 m Hangar L H MAINT AIRPORT BDRY 83 ft AGL Surface Hangar (25 m) L APRON BDRY Apron ASPH HLDG POINT L TWY ASPH / GRASS MET HLDG POINT M AIS TWR M For steep APCH PROC only C HLDG POINT A 40 ft AGL HLDG POINT S PAPI (12 m) 6° S 33 ft AGL (10 m) GP / DME PAPI YCZ 450 m 4.17° GRASS PRKG SIERRA 01 50 ft AGL 46° (15 m) 46° RTHL 00’ 00’ RTIL RENL Vedeggio CWY 60 x 150 m 1:7500 Public road 100 0 100 200 300 400 m COR: RWY LGT, ALS, AD BDRY, Layout 008° 55’ SKYGUIDE, CH-8602 WANGEN BEI DUBENDORF AMDT 012 2010 18 NOV 2010 LSZA AD 2.24.1 - 2 AIP SWITZERLAND 18 NOV 2010 THIS PAGE INTENTIONALLY LEFT BLANK AMDT 012 2010 SKYGUIDE, CH-8602 WANGEN BEI DUBENDORF 16 JUL 2009 AIP SWITZERLAND LSZA AD 2.24.10 - 1 16 JUL 2009 SKYGUIDE, CH-8602 WANGEN BEI DUBENDORF REISSUE 2009 16 JUL 2009 LSZA AD 2.24.10 - 2 -

Itinerari Culturali IT

Itinerari culturali. Cultural itineraries. Le curiosità storiche della regione. The historical curiosities of the region. I / E Basso Mendrisiotto | 1 2 | Basso Mendrisiotto La Regione da scoprire. Le curiosità storiche della regione. The historical curiosities of the region. Rendete il vostro soggiorno interessante! Five routes to add interest to your stay! Cinque itinerari storico culturali sono stati disegna- Five cultural routes in the region of Mendrisiotto and ti nella Regione del Mendrisiotto e Basso Ceresio Basso Ceresio have been developed to take you on per accompagnarvi nella scoperta dei diversi co- a journey of discovery through various towns and muni e villaggi, per svelare le eccellenze e le curio- villages where you will see the sights and curiosities sità legate a luoghi, avvenimenti e persone che of places, events and people, and learn about their hanno avuto ruoli, o svolto compiti, importanti. important roles. I testi inseriti nelle isole informative lungo i cinque Along the five routes, you will find information points itinerari sono stati redatti da tre storici che, in stretta with texts and pictures from both public and private collaborazione con Mendrisiotto Turismo ed i comu- archives, prepared by three historians, together ni della regione, si sono anche occupati della scelta with Mendrisiotto Tourism and the municipalities of del materiale fotografico, reperito in prevalenza da the region. As a rule, each information point has archivi pubblici e privati. Di regola, le isole didattiche three double-sided boards, and presents the route, inserite nelle tappe dei cinque itinerari, sono compo- the history of the place, three points of interest and ste da tre pannelli bifacciali e presentano ciascuno: a curiosity (excepting: Morbio Superiore, Sagno, l’itinerario, la storia del luogo-paese, tre eccellenze Scudellate, Casima, Monte and Corteglia). -

Piano Zone Biglietti E Abbonamenti 2021

Comunità tariffale Arcobaleno – Piano delle zone arcobaleno.ch – [email protected] per il passo per Geirett/Luzzone per Göschenen - Erstfeld del Lucomagno Predelp Carì per Thusis - Coira per il passo S. Gottardo Altanca Campo (Blenio) S. Bernardino (Paese) Lurengo Osco Campello Quinto Ghirone 251 Airolo Mairengo 243 Pian S. Giacomo Bedretto Fontana Varenzo 241 Olivone Tortengo Calpiogna Mesocco per il passo All’Acqua Piotta Ambrì Tengia 25 della Novena Aquila 245 244 Fiesso Rossura Ponto Soazza Nante Rodi Polmengo Valentino 24 Dangio per Arth-Goldau - Zurigo/Lucerna Fusio Prato Faido 250 (Leventina) 242 Castro 331 33 Piano Chiggiogna Torre Cabbiolo Mogno 240 Augio Rossa S. Carlo di Peccia Dalpe Prugiasco Lostallo 332 Peccia Lottigna Lavorgo 222 Sorte Menzonio Broglio Sornico Sonogno Calonico 23 S. Domenica Prato Leontica Roseto 330 Cama Brontallo 230 Acquarossa 212 Frasco Corzoneso Cauco Foroglio Nivo Giornico Verdabbio Mondada Cavergno 326 Dongio 231 S. Maria Leggia Bignasco Bosco Gurin Gerra (Verz.) Chironico Ludiano Motto (Blenio) 221 322 Sobrio Selma 32 Semione Malvaglia 22 Grono Collinasca Someo Bodio Arvigo Cevio Brione (Verz.) Buseno Personico Pollegio Loderio Cerentino Linescio Riveo Giumaglio Roveredo (GR) Coglio Campo (V.Mag.) 325 Osogna 213 320 Biasca 21 Lodano Lavertezzo 220 Cresciano S. Vittore Cimalmotto 324 Maggia Iragna Moghegno Lodrino Claro 210 Lumino Vergeletto Gresso Aurigeno Gordevio Corippo Vogorno Berzona (Verzasca) Prosito 312 Preonzo 323 31 311 Castione Comologno Russo Berzona Cresmino Avegno Mergoscia Contra Gordemo Gnosca Ponte Locarno Gorduno Spruga Crana Mosogno Loco Brolla Orselina 20 Arbedo Verscio Monti Medoscio Carasso S. Martino Brione Bellinzona Intragna Tegna Gerra Camedo Borgnone Verdasio Minusio s. -

Appuntamenti Al Mulino Di Bruzella

Appuntamenti al Mulino di Bruzella Aprile Agosto Mulino in attività 14.00 – 16.30 1 domenica (Festa nazionale) 1 giovedì Brunch del Mugnaio 7 mercoledì - 8 giovedì 10.30 - 16.30 11 domenica - 14 mercoledì - 15 giovedì Posti limitati. 18 domenica - 21 mercoledì - 22 giovedì Iscrizione necessaria allo: 076 329 16 54 25 domenica - 28 mercoledì - 29 giovedì Mulino in attività 14.00 – 16.30 Maggio 4 mercoledì - 5 giovedì 8 domenica - 11mercoledì - 12 giovedì Museo etnografico della Mulino in attività 14.00 – 16.30 15 domenica - 18 mercoledì - 19 giovedì Valle di Muggio 2 domenica - 5 mercoledì - 6 giovedì 22 domenica - 25 mercoledì - 26 giovedì 9 domenica 29 domenica Festa della Mamma al Mulino Festa del Mulino di Bruzella Con animazioni e merenda offerta a mamme e Pranzo e animazioni Appuntamenti al Mulino di Bruzella Alla scoperta del territorio bambini Dalle 12.00 14.00 – 16.30 Informazioni presso il Museo Mulino in attività 14.00 – 16.30 Settembre 12 mercoledì -13 giovedì (Ascensione) Appuntamenti nella tradizione Mulino in attività 14.00 – 16.30 15 sabato 1 mercoledì - 2 giovedì Ul mistèe dal murnée 8 mercoledì - 9 giovedì Giornata svizzera dei mulini 12 domenica - 15 mercoledì - 16 giovedì Degustazione di polenta di mais rosso con 19 domenica - 22 mercoledì - 23 giovedì formaggi e formaggini della Valle di Muggio. 11.00 – 16.00 26 domenica Polenta e Bandella Mulino in attività 14.00 – 16.30 Pranzo a base di polenta di mais rosso e 16 domenica - 19 mercoledì - 20 giovedì formaggi della Valle di Muggio 23 domenica - 26 mercoledì -27giovedì 11.00 - 16.30 Informazioni presso il Museo Informazioni Giugno Mulino in attività 14.00 – 16.30 Mulino in attività 14.00 – 16.30 29 mercoledì - 30 giovedì Museo etnografico della Valle di Mulino di Bruzella Escursioni Associazione dei Comuni del Da oltre un secolo la fotografia aerea offre 2 mercoledì - 3 giovedì (Corpus domini) 6 domenica - 9 mercoledì -10 giovedì Muggio (MEVM), Casa Cantoni, Visita del mulino funzionante e della Scopo delle escursioni guidate è quello Generoso, RVM, vedute e prospettive inconsuete.