Making Intelligent Systems Team Players: Case Studies and Design Issues

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Game Developers Conference Europe Wrap, New Women’s Group Forms, Licensed to Steal Super Genre Break Out, and More

PRODUCT REVIEWS: SPEEDTREE RT 1.7 * SPACEPILOT. OCTOBER 2005. THE LEADING GAME INDUSTRY MAGAZINE. POSTMORTEM: ART & ARTIFICE IN RESIDENT EVIL 4. WALKING THE PLANK: DANIEL JAMES ON CASUAL MMO GOLD. INNER PRODUCT: DEBUG? RELEASE? LET’S DEVELOP! Thanks to our publishers for helping us create a new world of video games. GameTap™ and many of the video game industry’s leading publishers have joined together to create a new world where you can play hundreds of the greatest games right from your broadband-connected PC. It’s gaming freedom like never before. START PLAYING AT GAMETAP.COM TM & © 2005 Turner Broadcasting System, Inc. A Time Warner Company. Patent Pending. All Rights Reserved. CONTENTS OCTOBER 2005 VOLUME 12, NUMBER 9 FEATURES TOP 20 PUBLISHERS Who’s the top dog on the publishing block? Ranked by their revenues, the quality of the games they release, developer ratings, and other factors pertinent to serious professionals, our annual Top 20 list calls attention to the definitive movers and shakers in the publishing world. By Tristan Donovan INTERVIEW: A PIRATE’S LIFE What do pirates, cowboys, and massively multiplayer online games have in common? They all have Daniel James on their side. CEO of Three Rings, James’ mission has been to create an addictive MMO (or two) that has the pick-up-put-down rhythm of a casual game. In this interview, James discusses the barriers to distributing and charging for such games, the beauty of the web, and the trouble with executables. -

Design Recommendations for Intelligent Tutoring Systems

Design Recommendations for Intelligent Tutoring Systems Volume 1 Learner Modeling Edited by: Robert Sottilare Arthur Graesser Xiangen Hu Heather Holden A Book in the Adaptive Tutoring Series Copyright © 2013 by the U.S. Army Research Laboratory. Copyright not claimed on material written by an employee of the U.S. Government. All rights reserved. No part of this book may be reproduced in any manner, print or electronic, without written permission of the copyright holder. The views expressed herein are those of the authors and do not necessarily reflect the views of the U.S. Army Research Laboratory. Use of trade names or names of commercial sources is for information only and does not imply endorsement by the U.S. Army Research Laboratory. This publication is intended to provide accurate information regarding the subject matter addressed herein. The information in this publication is subject to change at any time without notice. The U.S. Army Research Laboratory, nor the authors of the publication, makes any guarantees or warranties concerning the information contained herein. Printed in the United States of America First Printing, July 2013 First Printing (errata addressed), August 2013 U.S. Army Research Laboratory Human Research & Engineering Directorate SFC Paul Ray Smith Simulation & Training Technology Center Orlando, Florida International Standard Book Number: 978-0-9893923-0-3 We wish to acknowledge the editing and formatting contributions of Carol Johnson, ARL Dedicated to current and future scientists and developers of adaptive learning technologies CONTENTS Preface. Section I: Fundamentals of Learner Modeling. Chapter 1 ‒ A Guide to Understanding Learner Models -

Towards Accessibility of Games: Mechanical Experience, Competence Profiles and Jutsus

Towards Accessibility of Games: Mechanical Experience, Competence Profiles and Jutsus. Abstract Accessibility of games is a multi-faceted problem, one of which is providing mechanically achievable gameplay to players. Previous work focused on adapting games to the individual through either dynamic difficulty adjustment or providing difficulty modes; thus focusing on their failure to meet a designed task. Instead, we look at it as a design issue; designers need to analyse the challenges they craft to understand why gameplay may be inaccessible to certain audiences. The issue is difficult to even discuss properly, whether by designers, academics or critics, as there is currently no comprehensive framework for that. That is our first contribution. We also propose challenge jutsus – structured representations of challenge descriptions (via competency profiles) and player models. This is a first step towards accessibility issues by better understanding the mechanical profile of various game challenges and what is the source of difficulty for different demographics of players. 1.0 Introduction Different Types of Experience When discussing, critiquing, and designing games, we are often concerned with the “player experience” – but what this means is unsettled as games are meant to be consumed and enjoyed in various ways. Players can experience games mechanically (through gameplay actions), aesthetically (through the visual and audio design), emotionally (through the narrative and characters), socially (through the communities of players), and culturally (through a combination of cultural interpretations and interactions). Each aspect corresponds to different ways that the player engages with the game. We can map the different forms of experience to the Eight Types of Fun (Hunicke, LeBlanc, & Zubek, 2004) (Table 1). -

Female Fighters

Press Start Female Fighters Female Fighters: Perceptions of Femininity in the Super Smash Bros. Community John Adams High Point University, USA Abstract This study takes on a qualitative analysis of the online forum, SmashBoards, to examine the way gender is perceived and acted upon in the community surrounding the Super Smash Bros. series. A total of 284 comments on the forum were analyzed using the concepts of gender performativity and symbolic interactionism to determine the perceptions of femininity, reactions to female players, and the understanding of masculinity within the community. Ultimately, although hypermasculine performances were present, a focus on the technical aspects of the game tended to take priority over any understanding of gender, resulting in a generally ambiguous approach to femininity. Keywords Nintendo; Super Smash Bros; gender performativity; symbolic interactionism; sexualization; hypermasculinity Press Start Volume 3 | Issue 1 | 2016 ISSN: 2055-8198 URL: http://press-start.gla.ac.uk Press Start is an open access student journal that publishes the best undergraduate and postgraduate research, essays and dissertations from across the multidisciplinary subject of game studies. Press Start is published by HATII at the University of Glasgow. Adams Female Fighters Introduction Examinations of gender in mainstream gaming circles typically follow communities surrounding hypermasculine games, in which members harass those who do not conform to hegemonic gender norms (Consalvo, 2012; Gray, 2011; Pulos, 2011), but do not tend to reach communities surrounding other types of games, wherein their less hypermasculine nature shapes the community. The Super Smash Bros. franchise stands as an example of this less examined type of game community, with considerably more representation of women and a colorful, simplified, and gore-free style. -

Week 2: Game Theory // History & Origins // Industry Stats

NMED 3300(A) // Theory and Aesthetics of Digital Games Friday Genre Discussions / Play Sessions Schedule, Spring 2016 Mondays and Wednesdays will consist of lectures. Fridays will be broken into two sessions. The first will take place in W866 where we will discuss particular genres and look at select examples. The second session will take place in W560 and will consist of hands-on gameplay (1 hour) of the games covered earlier in class. Some Rules for W560 Usage: 1. Please be considerate of others in the lab and those working in adjacent offices/classrooms by keeping noise to a minimum, 2. Please note that food and drink are not allowed in W560, except water if it is contained in a non-spillable container (with a screw-top or sealable cap) 3. Only students with official access are allowed in these labs (you cannot bring friends into the lab, sorry), 4. Please be gentle with equipment, consoles, and peripherals as a lot of the equipment is David’s personal property and much of the equipment is old and getting more difficult (if not impossible) to replace. 5. Finally, do not leave discs in consoles and make sure consoles and televisions are turned off when you are finished and that the area where you were working is clean and tidy. Notes on Gameplay Sessions in W560: Please keep the volume of the monitors and verbalizations to a minimum. Have a look at each of the games listed for that week’s gameplay sessions by consulting reviews/criticism, gameplay video, screenshots. As many games are released on multiple platforms and are often emulated, make sure you are viewing information for the correct version (platform, year). -

Pokemon Challenge Free

FREE POKEMON CHALLENGE PDF Tracey West,Katherine Nolls | 59 pages | 01 Aug 2008 | Scholastic US | 9780439530521 | English | New York, NY, United States Nuzlocke Challenge - Bulbapedia, the community-driven Pokémon encyclopedia However, the game Pokemon Challenge also focused around beating the Johto leaders in a puzzle challenge in Johto region. The game play mode is divided into 1 Player, 2 Player, and Training. The game was later released on the 3DS eShop on November 6, Similarly, the music in the game was redone from the original Gold and Silver with new upbeat, Pokemon Challenge familiar songs to complement the gameplay style. Pokemon Challenge attempt to meet a goal, achieve a high score, or outlast opponents by preventing blocks from reaching the top of the player's playfield. The game has three modes of play — single player, multi player, and training modes. Marathon involves playing infinitely until players lose; Challenge Pokemon Challenge a Pokemon Challenge computer or another player, involving forming combos to force the opposition to lose, though unlike other versions of the game, only the player's puzzle is visible, the opponent's replaced with a block meter HP meter in 1P Challenge indicating how close they are to losing; Time Zone involves forming a high enough score in a certain amount of time; LineClear Pokemon Challenge clearing levels by reaching a certain number of lines; Pokemon Challenge involves clearing a select number of blocks in a certain number of turns; and Garbage! With the exception of Puzzle, reaching the top in any of these modes results in the mode ending. -

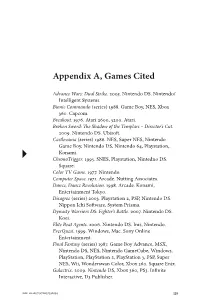

Appendix A, Games Cited

Appendix A, Games Cited Advance Wars: Dual Strike. 2005. Nintendo DS. Nintendo/Intelligent Systems. Bionic Commando (series) 1988. Game Boy, NES, Xbox 360. Capcom. Breakout. 1976. Atari 2600, 5200. Atari. Broken Sword: The Shadow of the Templars – Director’s Cut. 2009. Nintendo DS. Ubisoft. Castlevania (series) 1986. NES, Super NES, Nintendo Game Boy, Nintendo DS, Nintendo 64, PlayStation. Konami. Chrono Trigger. 1995. SNES, PlayStation, Nintendo DS. Square. Color TV Game. 1977. Nintendo. Computer Space. 1971. Arcade. Nutting Associates. Dance, Dance Revolution. 1998. Arcade. Konami, Entertainment Tokyo. Disgaea (series) 2003. PlayStation 2, PSP, Nintendo DS. Nippon Ichi Software, System Prisma. Dynasty Warriors DS: Fighter’s Battle. 2007. Nintendo DS. Koei. Elite Beat Agents. 2006. Nintendo DS. Inis, Nintendo. EverQuest. 1999. Windows, Mac. Sony Online Entertainment. Final Fantasy (series) 1987. Game Boy Advance, MSX, Nintendo DS, NES, Nintendo GameCube, Windows, PlayStation, PlayStation 2, PlayStation 3, PSP, Super NES, Wii, WonderSwan Color, Xbox 360. Square Enix. Galactrix. 2009. Nintendo DS, Xbox 360, PS3. Infinite Interactive, D3 Publisher. Gun Fight. 1975. Arcade. Taito, Midway. Halo (series) 2001. Xbox. Bungie, Microsoft Game Studios. Henry Hatsworth in the Puzzling Adventure. 2009. Nintendo DS. EA Tiburon, EA Games. Knights in the Nightmare. 2008. Nintendo DS. Sting, Sting Entertainment. Legend of Zelda Phantom Hourglass. 2007. Nintendo DS. Nintendo EAD. Metal Gear (series) 1987. NES, PC, PlayStation, PlayStation 2, PlayStation 3, PSP, Xbox, Xbox 360. Konami. Missile Command. 1980. Arcade. Atari. Mortal Kombat (series) 1992. Arcade, Super NES, Mega Drive/Genesis, Sega Mega CD, Amiga, Game Gear, Game Boy, Sega Master System, Midway Games, Nether Realms. -

Wii Super Paper Mario.Pdf

NEED HELP WITH INSTALLATION, MAINTENANCE OR SERVICE? Nintendo Customer Service: WWW.NINTENDO.COM or call 1-800-255-3700. NEED HELP PLAYING A GAME? Nintendo's game pages, at www.nintendo.com/games, feature walkthroughs, frequently-asked questions, and codes for many of our games. If your answer isn't there, check out our forums where you can exchange tips with other gamers online. For more information about our forums, visit www.nintendo.com/community. If you don't have access to the web-site, recorded -

Cut out Cut out the Pieces, Or You Can Use Your Own Glitter Or Confetti

Paper Mario: The Origami King confetti bookmark Materials needed: · Scissors* · Clear contact paper (preferred) or clear packing tape · Tape · Glue · Additional confetti or glitter (optional) *Kids, ask a grown-up for help with scissors! Instructions: Step 1: Cut out the bookmark frame along the dotted lines, then carefully cut out the blank middle pieces along the dotted lines to make two windows. Step 2: Fold the bookmark frame in half lengthwise along the solid line, then tape or glue the sides together. Step 3: To make the confetti, cut out the color swatches and tear up the paper into small pieces. You can also use scissors to cut up the pieces, or you can use your own glitter or confetti. Step 4: Cut out two pieces of contact paper. Each piece should be a little larger than the bookmark frame. Step 5: Peel off the backing of one piece of contact paper and carefully tape it to your workspace with the sticky side up. Step 6: Carefully stick the bookmark frame onto the contact paper. Use your finger to pick up pieces of confetti and stick them to the contact paper inside the window cut-out. Step 7: Once you're done, peel off the backing of the second piece of contact paper and carefully place it over the first piece. Step 8: Cut out your bookmark. It's now ready to use! play.nintendo.com ©2020 Nintendo. Program © 2020 Nintendo/INTELLIGENT SYSTEMS. Paper Mario is a trademark of Nintendo. -

Super Smash Bros Nintendo 3Ds Iso Direct Download Super Smash Bros

super smash bros nintendo 3ds iso direct download Super Smash Bros. Free demo : Get ready to play Super Smash Bros. with friends this summer by practicing your moves now. Click here to find out how to download a demo of this game from the Nintendo eShop on your Nintendo 3DS system. Game update: Download the free game update (ver. 1.0.2) available now on Nintendo eShop. This update is required for online mode. Super Smash Bros. for Nintendo 3DS is the first portable entry in the renowned series, in which game worlds collide. Up to four players battle each other locally or online using some of Nintendo’s most well-known and iconic characters across beautifully designed stages inspired by classic portable Nintendo games. It’s a genuine, massive Super Smash Bros. experience that’s available to play on the go, anytime, anywhere. Smash and crash through “Smash Run” mode, a new mode exclusive to the Nintendo 3DS version that gives up to four players five minutes to fight solo through a huge battlefield while taking down recognizable enemies from almost every major Nintendo franchise and multiple third-party partners. Defeated enemies leave behind power-ups to collect. Players who collect more power-ups have an advantage once time runs out and the battle with opponents begins. Compete with classic characters from the Super Smash Bros. series like Mario, Link, Samus and Pikachu, along with new challengers like Mega Man, Little Mac and newly announced Palutena, the Goddess of Light from the Kid Icarus games. For the first time players can even compete as their own Mii characters. -

Quick Start Guide

MAA-CTR-AXCP-UKV NINTENDO 3DS SOFTWARE QUICK GUIDE SUPER SMASH BROS.™ FOR NINTENDO 3DS Controls (diagram labels): Grab; Shield / Dodge; Jump (while in the air: mid-air jump); Move; Attack; Special Move; Taunt; Pause. There are two ways to move: Slide (move normally) and Tap (use a quick, sharp motion). Move: Walk, Crouch, Dash, Jump, Drop through a platform. Attack: Standard attack; Strong attack (you will attack in the direction you slide); Smash attack (you will launch your enemy in the direction you tap). You can also use or to jump. Special Move: Perform one of four types of special move, or a Final Smash. Note: You can change the controls in the Options menu in Games & More. Choose Your Fighter! Many different characters have risen to the Smash challenge. Make sure they’re ready by customising their equipment and special moves to change their stats! Copy: Copy your customised fighters over to a Wii U™ console and use them in Super Smash Bros.™ for Wii U*! (* Released at a later date.) For more information, see the Super Smash Bros. for Wii U electronic manual. Note: You can restrict online interaction and/or StreetPass™ functionality via Parental Controls. For more information, refer to the Operations Manual for your system. Electronic Manual Select the icon for this software on the HOME Menu and touch MANUAL to view the electronic manual. Please read this manual thoroughly to ensure maximum enjoyment of your new software. This software title includes an electronic manual to reduce the amount of paper used in its packaging. For support, please consult the electronic manual, the Operations Manual for your system or the Nintendo website. -

Games, AI, and Systems Michael Straeubig Eludamos

Vol. 10, No. 1 (2019) http://www.eludamos.org Games, AI, and Systems Michael Straeubig Eludamos. Journal for Computer Game Culture. 2019; 10 (1), pp. 141–160 Games, AI, and Systems MICHAEL STRAEUBIG 2002: A Revolution Brewing “A revolution has been brewing”, writes Paul Tozour, then an AI developer at Ion Storm, in his essay “The evolution of game AI” (Tozour 2002). The revolution he is alluding to is the rapidly growing role of artificial intelligence and machine learning in the video game industry. Tozour sees progress through advancements in hardware, a better understanding of AI in games, and dedicated AI programmers. He argues for AI-centric game design and predicts a closer relationship between academic AI and video game AI. If this sounds familiar from today’s perspective, one may ask if the current situation finally marks the revolution1 Tozour wished for. 2002 is the year that sees games like Metroid Prime on the Game Cube, Grand Theft Auto: Vice City on the Playstation 2, and Neverwinter Nights, America’s Army and Battlefield 1942 on the PC platform. Notable high-end game engines include id Tech 3 and Unreal Engine 2. Early versions of the beginner-friendly GameMaker are published as freeware, whereas the Unity game engine is not around yet—the company will be established two years later. Valve has just released a beta version of Steam, their revolutionary new digital distribution platform. Most commercial games still ship on CD-ROM, while some experimental ones run on the ubiquitous Macromedia Flash Player. The exhibition Game On: The History and Culture of Video Games (Carr 2003) celebrates 40 years of game development, running in summer 2002 at the Barbican in London, before it goes on tour worldwide.2 In the accompanying catalogue, we find Eric Zimmerman’s (2002) ontological musing titled “Do independent games exist?”.