THEORETICAL BEHAVIORISM John Staddon Duke University “Isms”

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Extinction of Cocaine Self-Administration Reveals Functionally and Temporally Distinct Dopaminergic Signals in the Nucleus Accumbens

Neuron, Vol. 46, 661–669, May 19, 2005, Copyright ©2005 by Elsevier Inc. DOI 10.1016/j.neuron.2005.04.036 Extinction of Cocaine Self-Administration Reveals Functionally and Temporally Distinct Dopaminergic Signals in the Nucleus Accumbens Garret D. Stuber,1 R. Mark Wightman,1,3 2004), which can then become associated with neutral and Regina M. Carelli1,2,* environmental stimuli. Because of this, dopaminergic 1Curriculum in Neurobiology transmission within the NAc may promote the formation 2 Department of Psychology of an unnatural relationship between drugs of abuse 3 Department of Chemistry and Neuroscience Center and environmental cues (O’Brien et al., 1993; Everitt et The University of North Carolina al., 1999; Hyman and Malenka, 2001; Wise, 2004). How- Chapel Hill, North Carolina 27599 ever, because cocaine has a potent pharmacological effect to inhibit monoamine uptake (Jones et al., 1995; Wu et al., 2001), it has been difficult to separate in- Summary creases in dopamine due to either the primary pharma- cological or secondary conditioned effects of the drug. While Pavlovian and operant conditioning influence Microdialysis studies in self-administrating animals drug-seeking behavior, the role of rapid dopamine have shown that NAc dopamine decreases over min- signaling in modulating these processes is unknown. utes leading up to a lever press for cocaine and then During self-administration of cocaine, two dopami- increases slowly after the drug infusion (Wise et al., nergic signals, measured with 100 ms resolution, oc- 1995). When dopamine changes are examined with curred immediately before and after the lever press greater temporal resolution, electrochemical studies (termed pre- and postresponse dopamine transients). -

Extinction Learning in Humans: Role of the Amygdala and Vmpfc

Neuron, Vol. 43, 897–905, September 16, 2004, Copyright 2004 by Cell Press Extinction Learning in Humans: Role of the Amygdala and vmPFC Elizabeth A. Phelps,1,* Mauricio R. Delgado,1 CR). Extinction occurs when a CS is presented alone, Katherine I. Nearing,1 and Joseph E. LeDoux2 without the US, for a number of trials and eventually the 1Department of Psychology and CR is diminished or eliminated. Behavioral studies of 2Center for Neural Science extinction suggest that it is not a process of “unlearning” New York University but rather is a process of new learning of fear inhibition. New York, New York 10003 This view of extinction as an active learning process is supported by studies showing that after extinction the CR can return in a number of situations, such as the Summary passage of time (spontaneous recovery), the presenta- tion of the US alone (reinstatement), or if the animal is Understanding how fears are acquired is an important placed in the context of initial learning (renewal; see step in translating basic research to the treatment Bouton, 2002, for a review). Although animal models of of fear-related disorders. However, understanding how the mechanisms of fear acquisition have been investi- learned fears are diminished may be even more valuable. gated over the last several decades, studies of the We explored the neural mechanisms of fear extinction mechanisms of extinction learning have only recently in humans. Studies of extinction in nonhuman animals started to emerge. Research examining the neural sys- have focused on two interconnected brain regions: tems of fear extinction in nonhuman animals have fo- the amygdala and the ventral medial prefrontal cortex cused on two interconnected brain regions: the ventral (vmPFC). -

AIH Chapter 2: Human Behavior

Aviation Instructor's Handbook (FAA-H-8083-9) Chapter 2: Human Behavior Introduction Derek’s learner, Jason, is very smart and able to retain a lot of information, but has a tendency to rush through the less exciting material and shows interest and attentiveness only when performing tasks that he finds to be interesting. This concerns Derek because he is worried that Jason will overlook many important details and rush through procedures. For a homework assignment Jason was told to take a very thorough look at Preflight Procedures and that for his next flight lesson they would discuss each step in detail. As Derek predicted, Jason found this assignment to be boring and was not prepared. Derek knows that Jason is a “thrill seeker” as he talks about his business, which is a wilderness adventure company. Derek wants to find a way to keep Jason focused and help him find excitement in all areas of learning so that he will understand the complex art of flying and aircraft safety. Learning is the acquisition of knowledge or understanding of a subject or skill through education, experience, practice, or study. This chapter discusses behavior and how it affects the learning process. An instructor seeks to understand why people act the way they do and how people learn. An effective instructor uses knowledge of human behavior, basic human needs, the defense mechanisms humans use that prevent learning, and how adults learn in order to organize and conduct productive learning activities. Definitions of Human Behavior The study of human behavior is an attempt to explain how and why humans function the way they do. -

The Psychology of Music Haverford College Psychology 303

The Psychology of Music Haverford College Psychology 303 Instructor: Marilyn Boltz Office: Sharpless 407 Contact Info: 610-896-1235 or [email protected] Office Hours: before class and by appointment Course Description Music is a human universal that has been found throughout history and across different cultures of the world. Why, then, is music so ubiquitous and what functions does it serve? The intent of this course is to examine this question from multiple psychological perspectives. Within a biological framework, it is useful to consider the evolutionary origins of music, its neural substrates, and the development of music processing. The field of cognitive psychology raises questions concerning the relationship between music and language, and music’s ability to communicate emotive meaning that may influence visual processing and body movement. From the perspectives of social and personality psychology, music can be argued to serve a number of social functions that, on a more individual level, contribute to a sense of self and identity. Lastly, musical behavior will be considered in a number of applied contexts that include consumer behavior, music therapy, and the medical environment. Prerequisites: Psychology 100, 200, and at least one advanced 200-level course. Biological Perspectives A. Evolutionary Origins of Music When did music evolve in the overall evolutionary scheme of events and why? Does music serve any adaptive purposes or is it, as some have argued, merely “auditory cheesecake”? What types of evidence allows us to make inferences about the origins of music? Reading: Thompson, W.F. (2009). Origins of Music. In W.F. Thompson, Music, thought, and feeling: Understanding the psychology of music. -

Social Psychology Glossary

Social Psychology Glossary This glossary defines many of the key terms used in class lectures and assigned readings. A Altruism—A motive to increase another's welfare without conscious regard for one's own self-interest. Availability Heuristic—A cognitive rule, or mental shortcut, in which we judge how likely something is by how easy it is to think of cases. Attractiveness—Having qualities that appeal to an audience. An appealing communicator (often someone similar to the audience) is most persuasive on matters of subjective preference. Attribution Theory—A theory about how people explain the causes of behavior—for example, by attributing it either to "internal" dispositions (e.g., enduring traits, motives, values, and attitudes) or to "external" situations. Automatic Processing—"Implicit" thinking that tends to be effortless, habitual, and done without awareness. B Behavioral Confirmation—A type of self-fulfilling prophecy in which people's social expectations lead them to behave in ways that cause others to confirm their expectations. Belief Perseverance—Persistence of a belief even when the original basis for it has been discredited. Bystander Effect—The tendency for people to be less likely to help someone in need when other people are present than when they are the only person there. Also known as bystander inhibition. C Catharsis—Emotional release. The catharsis theory of aggression is that people's aggressive drive is reduced when they "release" aggressive energy, either by acting aggressively or by fantasizing about aggression. Central Route to Persuasion—Occurs when people are convinced on the basis of facts, statistics, logic, and other types of evidence that support a particular position. -

The Importance of Sleep in Fear Conditioning and Posttraumatic Stress Disorder

Biological Psychiatry: Commentary CNNI The Importance of Sleep in Fear Conditioning and Posttraumatic Stress Disorder Robert Stickgold and Dara S. Manoach Abnormal sleep is a prominent feature of Axis I neuropsychia- fear and distress are extinguished. Based on a compelling tric disorders and is often included in their DSM-5 diagnostic body of work from human and rodent studies, fear extinction criteria. While often viewed as secondary, because these reflects not the erasure of the fear memory but the develop- disorders may themselves diminish sleep quality, there is ment of a new safety or “extinction memory” that inhibits the growing evidence that sleep disorders can aggravate, trigger, fear memory and its associated emotional response. and even cause a range of neuropsychiatric conditions. In this issue, Straus et al. (3 ) report that total sleep Moreover, as has been shown in major depression and deprivation can impair the retention of such extinction mem- attention-deficit/hyperactivity disorder, treating sleep can ories. In their study, healthy human participants in three improve symptoms, suggesting that disrupted sleep contri- groups successfully learned to associate a blue circle (condi- butes to the clinical syndrome and is an appropriate target for tioned stimulus) with the occurrence of an electric shock treatment. In addition to its effects on symptoms, sleep (unconditioned stimulus) during a fear acquisition session. disturbance, which is known to impair emotional regulation The following day, during extinction learning, the blue circle and cognition in otherwise healthy individuals, may contribute was repeatedly presented without the shock. The day after to or cause disabling cognitive deficits. For sleep to be a target that, extinction recall was tested by again repeatedly present- for treatment of symptoms and cognitive deficits in neurop- ing the blue circle without the shock. -

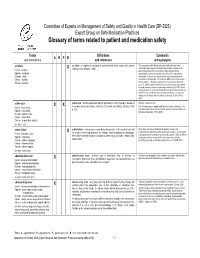

Glossary of Terms Related to Patient and Medication Safety

Committee of Experts on Management of Safety and Quality in Health Care (SP-SQS) Expert Group on Safe Medication Practices Glossary of terms related to patient and medication safety Terms Definitions Comments A R P B and translations and references and synonyms accident accident : an unplanned, unexpected, and undesired event, usually with adverse “For many years safety officials and public health authorities have Xconsequences (Senders, 1994). discouraged use of the word "accident" when it refers to injuries or the French : accident events that produce them. An accident is often understood to be Spanish : accidente unpredictable -a chance occurrence or an "act of God"- and therefore German : Unfall unavoidable. However, most injuries and their precipitating events are Italiano : incidente predictable and preventable. That is why the BMJ has decided to ban the Slovene : nesreča word accident. (…) Purging a common term from our lexicon will not be easy. "Accident" remains entrenched in lay and medical discourse and will no doubt continue to appear in manuscripts submitted to the BMJ. We are asking our editors to be vigilant in detecting and rejecting inappropriate use of the "A" word, and we trust that our readers will keep us on our toes by alerting us to instances when "accidents" slip through.” (Davis & Pless, 2001) active error X X active error : an error associated with the performance of the ‘front-line’ operator of Synonym : sharp-end error French : erreur active a complex system and whose effects are felt almost immediately. (Reason, 1990, This definition has been slightly modified by the Institute of Medicine : “an p.173) error that occurs at the level of the frontline operator and whose effects are Spanish : error activo felt almost immediately.” (Kohn, 2000) German : aktiver Fehler Italiano : errore attivo Slovene : neposredna napaka see also : error active failure active failures : actions or processes during the provision of direct patient care that Since failure is a term not defined in the glossary, its use is not X recommended. -

Genetics and Human Behaviour

Cover final A/W13657 19/9/02 11:52 am Page 1 Genetics and human behaviour : Genetic screening: ethical issues Published December 1993 the ethical context Human tissue: ethical and legal issues Published April 1995 Animal-to-human transplants: the ethics of xenotransplantation Published March 1996 Mental disorders and genetics: the ethical context Published September 1998 Genetically modified crops: the ethical and social issues Published May 1999 The ethics of clinical research in developing countries: a discussion paper Published October 1999 Stem cell therapy: the ethical issues – a discussion paper Published April 2000 The ethics of research related to healthcare in developing countries Published April 2002 Council on Bioethics Nuffield The ethics of patenting DNA: a discussion paper Published July 2002 Genetics and human behaviour the ethical context Published by Nuffield Council on Bioethics 28 Bedford Square London WC1B 3JS Telephone: 020 7681 9619 Fax: 020 7637 1712 Internet: www.nuffieldbioethics.org Cover final A/W13657 19/9/02 11:52 am Page 2 Published by Nuffield Council on Bioethics 28 Bedford Square London WC1B 3JS Telephone: 020 7681 9619 Fax: 020 7637 1712 Email: [email protected] Website: http://www.nuffieldbioethics.org ISBN 1 904384 03 X October 2002 Price £3.00 inc p + p (both national and international) Please send cheque in sterling with order payable to Nuffield Foundation © Nuffield Council on Bioethics 2002 All rights reserved. Apart from fair dealing for the purpose of private study, research, criticism or review, no part of the publication may be produced, stored in a retrieval system or transmitted in any form, or by any means, without prior permission of the copyright owners. -

Music Education and Music Psychology: What‟S the Connection? Research Studies in Music Education

Music Psychology and Music Education: What’s the connection? By: Donald A. Hodges Hodges, D. (2003) Music education and music psychology: What‟s the connection? Research Studies in Music Education. 21, 31-44. Made available courtesy of SAGE PUBLICATIONS LTD: http://rsm.sagepub.com/ ***Note: Figures may be missing from this format of the document Abstract: Music psychology is a multidisciplinary and interdisciplinary study of the phenomenon of music. The multidisciplinary nature of the field is found in explorations of the anthropology of music, the sociology of music, the biology of music, the physics of music, the philosophy of music, and the psychology of music. Interdisciplinary aspects are found in such combinatorial studies as psychoacoustics (e.g., music perception), psychobiology (e.g., the effects of music on the immune system), or social psychology (e.g., the role of music in social relationships). The purpose of this article is to explore connections between music psychology and music education. Article: ‘What does music psychology have to do with music education?’ S poken in a harsh, accusatory tone (with several expletives deleted), this was the opening salvo in a job interview I once had. I am happy, many years later, to have the opportunity to respond to that question in a more thoughtful and thorough way than I originally did. Because this special issue of Research Studies in Music Education is directed toward graduate students in music education and their teachers, I will proceed on the assumption that most of the readers of this article are more familiar with music education than music psychology. -

Noradrenergic Blockade Stabilizes Prefrontal Activity and Enables Fear

Noradrenergic blockade stabilizes prefrontal activity PNAS PLUS and enables fear extinction under stress Paul J. Fitzgeralda,1, Thomas F. Giustinoa,b,1, Jocelyn R. Seemanna,b,1, and Stephen Marena,b,2 aDepartment of Psychology, Texas A&M University, College Station, TX 77843; and bInstitute for Neuroscience, Texas A&M University, College Station, TX 77843 Edited by Bruce S. McEwen, The Rockefeller University, New York, NY, and approved June 1, 2015 (received for review January 12, 2015) Stress-induced impairments in extinction learning are believed to preferentially involved in fear extinction (32–34). Stress-induced sustain posttraumatic stress disorder (PTSD). Noradrenergic sig- alterations in the balance of neuronal activity in PL and IL as a naling may contribute to extinction impairments by modulating consequence of noradrenergic hyperarousal might therefore con- medial prefrontal cortex (mPFC) circuits involved in fear regula- tribute to extinction impairments and the maintenance of PTSD. tion. Here we demonstrate that aversive fear conditioning rapidly To address this question, we combine in vivo microelectrode re- and persistently alters spontaneous single-unit activity in the cording in freely moving rats with behavioral and pharmacological prelimbic and infralimbic subdivisions of the mPFC in behaving manipulations to determine whether β-noradrenergic receptors rats. These conditioning-induced changes in mPFC firing were mediate stress-induced alterations in mPFC neuronal activity. mitigated by systemic administration of propranolol (10 mg/kg, Further, we examine whether systemic propranolol treatment, i.p.), a β-noradrenergic receptor antagonist. Moreover, proprano- given immediately after an aversive experience, rescues stress- lol administration dampened the stress-induced impairment in ex- induced impairments in fear extinction. -

Genetic and Environmental Influences on Human Behavioral Differences

Annu. Rev. Neurosci. 1998. 21:1–24 Copyright c 1998 by Annual Reviews Inc. All rights reserved GENETIC AND ENVIRONMENTAL INFLUENCES ON HUMAN BEHAVIORAL DIFFERENCES Matt McGue and Thomas J. Bouchard, Jr Department of Psychology and Institute of Human Genetics, 75 East River Road, University of Minnesota, Minneapolis, Minnesota 55455; e-mail: [email protected] KEY WORDS: heritability, gene-environment interaction and correlation, nonshared environ- ment, psychiatric genetics ABSTRACT Human behavioral genetic research aimed at characterizing the existence and nature of genetic and environmental influences on individual differences in cog- nitive ability, personality and interests, and psychopathology is reviewed. Twin and adoption studies indicate that most behavioral characteristics are heritable. Nonetheless, efforts to identify the genes influencing behavior have produced a limited number of confirmed linkages or associations. Behavioral genetic re- search also documents the importance of environmental factors, but contrary to the expectations of many behavioral scientists, the relevant environmental factors appear to be those that are not shared by reared together relatives. The observation of genotype-environment correlational processes and the hypothesized existence of genotype-environment interaction effects serve to distinguish behavioral traits from the medical and physiological phenotypes studied by human geneticists. Be- havioral genetic research supports the heritability, not the genetic determination, of behavior. INTRODUCTION One of the longest, and at times most contentious, debates in Westernintellectual history concerns the relative influence of genetic and environmental factors on human behavioral differences, the so-called nature-nurture debate (Degler 1991). Remarkably, the past generation of behavioral genetic research has led 1 0147-006X/98/0301-0001$08.00 2 McGUE & BOUCHARD many to conclude that it may now be time to retire this debate in favor of a perspective that more strongly emphasizes the joint influence of genes and the environment. -

Mind/Brain/Behavior Study Guide

Guide to the Focus in Mind, Brain, Behavior For History and Science Concentrators Science and Society Track Honors Eligible 2018-2019 Department of the History of Science Science Center 371 ____________________________________________________________________________________ The Focus in Mind, Brain, Behavior in the Science and Society Track offers opportunities for study that are at once more interdisciplinary and more focused than those available to students doing a conventional plan of study in our Department. • More interdisciplinary because the track requires students to take a graduated set of specially designed or specially designated interdisciplinary courses in common with the students in all the other MBB tracks, and also because the track offers the option of augmenting a core historical disciplinary approach to the mind, brain, and behavioral sciences with one auxiliary social science or humanities discipline, such as medical anthropology, public policy, philosophy of mind, etc. • More focused, because it asks students to bring those interdisciplinary perspectives to bear on a particularly vexed arena of scientific inquiry: the mind, brain and behavioral sciences. These include, of course, the neurosciences and cognitive sciences, but also evolutionary perspectives on behavior and cognition, relevant aspects of genetics, and such areas of medical science as psychiatry and psychopharmacology. This study guide offers some guidelines as you go about composing a study program in MBB. The guide outlines the particular areas of focus you can pursue within MBB and offers suggestions for courses you might take within those areas. This study guide is not meant to replace the other available MBB resources or the course guides of the various Harvard divisions.