A Robust Method for VR-Based Hand Gesture Recognition Using Density-Based CNN

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

1997 Conference Program Book (Hangul)

WELCOME Korea Teachers of English to Speakers of Other Languages 대한영어교육학회 1997 National Conference and Publishers Exposition Technology in Education; Communicating Beyond Traditional networks October 3-5, 1997 Kyoung-ju Education and Cultural Center Kyoung-ju, South Korea Conference Co-chairs; Demetra Gates Taegu University of Education Kari Kugler Keimyung Junior University, Taegu 1996-97 KOTESOL President; Park Joo-kyung Honam University, Kwangju 1997-98 KOTESOL President Carl Dusthimer Hannam University, Taejon Presentation Selection Committee: Carl Dusthimer, Student Coordination: Steve Garrigues Demetra Gates, Kari Kugler, Jack Large Registration: Rodney Gillett, AeKyoung Large, Jack Program: Robert Dickey, Greg Wilson Large, Lynn Gregory, Betsy Buck Cover: Everette Busbee International Affairs: Carl Dusthimer, Kim Jeong-ryeol, Park Joo Kyung, Mary Wallace Publicity: Oryang Kwon Managing Information Systems: AeKyoung Large, Presiders: Kirsten Reitan Jack Large, Marc Gautron, John Phillips, Thomas Special Events: Hee-Bon Park Duvernay, Kim Jeong-ryeol, Sung Yong Gu, Ryu Seung Hee, The Kyoung-ju Board Of Education

WELCOME DEAR KOTESOL MEMBERS, SPEAKERS, AND FRIENDS: As the 1997 Conference Co-Chairs we would like to welcome you to this year's conference, "Technology in Education: Communicating Beyond Traditional Networks." While Korea TESOL is one of the youngest TESOL affiliates in this region of the world, our goal was to give you one of the finest opportunities for professional development available in Korea. The 1997 conference has taken a significant step in this direction. The progress we have made in this direction is based on the foundation developed by the coachers of the past: our incoming President Carl Dusthimer, Professor Woo Sang-do, and Andy Kim.

An Autoethnography on Teaching Undergraduate Korean Studies, on and Off the Peninsula

No Frame to Fit It All: An Autoethnography on Teaching Undergraduate Korean Studies, on and off the Peninsula Cedarbough T. Saeji Acta Koreana, Volume 21, Number 2, December 2018, pp. 443-459 (Article) Published by Keimyung University, Academia Koreana For additional information about this article https://muse.jhu.edu/article/756425 [ Access provided at 1 Oct 2021 21:59 GMT with no institutional affiliation ] ACTA KOREANA Vol. 21, No. 2, December 2018: 443–460 doi:10.18399/acta.2018.21.2.004 No Frame to Fit It All: An Autoethnography on Teaching Undergraduate Korean Studies, on and off the Peninsula CEDARBOUGH T. SAEJI In the past two decades, Korean Studies has expanded to become an interdisciplinary and increasingly international field of study and research. While new undergraduate Korean Studies programs are opening at universities in the Republic of Korea (ROK) and intensifying multi-lateral knowledge transfers, this process also reveals the lack of a clear identity that continues to haunt the field. In this autoethnographic essay, I examine the possibilities and limitations of framing Korea as an object of study for diverse student audiences, looking towards potential futures for the field. I focus on 1) the struggle to escape the nation-state boundaries implied in the habitual terminology, particularly when teaching in the ROK, where the country is unmarked (“Han’guk”), the Democratic People’s Republic of Korea is marked (“Pukhan”), and the diaspora is rarely mentioned at all; 2) the implications of the expansion of Korean Studies as a major within the ROK; 3) in-class navigations of Korean national pride, the trap of Korean uniqueness and (self-)orientalization and CEDARBOUGH T. -

Guide for International Faculty (Fall 2021) Table of Contents

Guide for International Faculty (Fall 2021) Table of Contents

Ⅰ. General Information
1. Brief History
2. Chronology
3. Organizational Chart
4. Campus Map
5. Mission Statement
6. Facts and Figures

Ⅱ. Organization and Administration
1. University Primary Administrations and Affiliates
A. Campus Ministry Team
B. Human Rights Center
C. Academic Affairs Team
D. Faculty Personnel Team
E. Center for International Affairs
F. Center for Teaching & Learning
G. Center for e-Learning
H. Health Care Center
I. System Operation Team

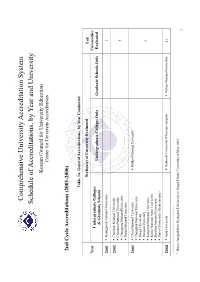

Schedule of Accreditations, by Year and University

Comprehensive University Accreditation System Schedule of Accreditations, by Year and University Korean Council for University Education Center for University Accreditation 2nd Cycle Accreditations (2001-2006) Table 1a: General Accreditations, by Year Conducted Section(s) of University Evaluated # of Year Universities Undergraduate Colleges Undergraduate Colleges Only Graduate Schools Only Evaluated & Graduate Schools 2001 Kyungpook National University 1 2002 Chonbuk National University Chonnam National University 4 Chungnam National University Pusan National University 2003 Cheju National University Mokpo National University Chungbuk National University Daegu University Daejeon University 9 Kangwon National University Korea National Sport University Sunchon National University Yonsei University (Seoul campus) 2004 Ajou University Dankook University (Cheonan campus) Mokpo National University 41 1 Name changed from Kyungsan University to Daegu Haany University in May 2003. 1 Andong National University Hanyang University (Ansan campus) Catholic University of Daegu Yonsei University (Wonju campus) Catholic University of Korea Changwon National University Chosun University Daegu Haany University1 Dankook University (Seoul campus) Dong-A University Dong-eui University Dongseo University Ewha Womans University Gyeongsang National University Hallym University Hanshin University Hansung University Hanyang University Hoseo University Inha University Inje University Jeonju University Konkuk University Korea -

Keimyung University 2

Contents 1. About Republic of Korea and Daegu 03 KEIMYUNG UNIVERSITY 2. About Keimyung University 04 3. Why Keimyung University? 06 4. Student Activities (Programs) 09 5. Faces of Keimyung University 13 OPENING THE LIGHT TO THE WORLD 1095 Dalgubeol-daero, Dalseo-gu, Daegu 42601, Republic of Korea · Undergraduate Program (Except Chinese Students) Tel. +82-53-580-6029 / E-mail. [email protected] · Undergraduate Program (Only Chinese Students) Tel. +82-53-580-6497 / E-mail. [email protected] · Graduate Program Tel. +82-53-580-6254 / E-mail. [email protected] · Korean Language Program Tel. +82-53-580-6353, 6355 / E-mail. [email protected] OPENING THE LIGHT TO THE WORLD_KMU 2 / 3 2 ABOUT REPUBLIC OF KOREA1 AND DAEGU REPUBLIC OF korea DAEGU METROPOLITAN CITY The Republic of Korea which is approximately 5,000 years Daegu is located in the south-east of the Republic of Korea old has overcome a variety of difficulties such as the Korean- and it is the fourth largest city after Seoul, Busan and War, but it has grown economically and is currently ranked Incheon with about 2.5 million residents. Daegu is famous the 11th richest economy in the world. The economy is driven for high quality apples, and its historic textile industry. With by manufacturing and exports including ships, automobiles, the establishment of the Daegu-Gyeongbuk Free Economic mobile phones, PCs, and TVs. Recently, the Korean-Wave has Zone, Daegu is currently focusing on fostering fashion and Seoul also added to the country’s exports through the popularity high-tech industries. -

College Codes (Outside the United States)

COLLEGE CODES (OUTSIDE THE UNITED STATES) ACT CODE COLLEGE NAME COUNTRY 7143 ARGENTINA UNIV OF MANAGEMENT ARGENTINA 7139 NATIONAL UNIVERSITY OF ENTRE RIOS ARGENTINA 6694 NATIONAL UNIVERSITY OF TUCUMAN ARGENTINA 7205 TECHNICAL INST OF BUENOS AIRES ARGENTINA 6673 UNIVERSIDAD DE BELGRANO ARGENTINA 6000 BALLARAT COLLEGE OF ADVANCED EDUCATION AUSTRALIA 7271 BOND UNIVERSITY AUSTRALIA 7122 CENTRAL QUEENSLAND UNIVERSITY AUSTRALIA 7334 CHARLES STURT UNIVERSITY AUSTRALIA 6610 CURTIN UNIVERSITY EXCHANGE PROG AUSTRALIA 6600 CURTIN UNIVERSITY OF TECHNOLOGY AUSTRALIA 7038 DEAKIN UNIVERSITY AUSTRALIA 6863 EDITH COWAN UNIVERSITY AUSTRALIA 7090 GRIFFITH UNIVERSITY AUSTRALIA 6901 LA TROBE UNIVERSITY AUSTRALIA 6001 MACQUARIE UNIVERSITY AUSTRALIA 6497 MELBOURNE COLLEGE OF ADV EDUCATION AUSTRALIA 6832 MONASH UNIVERSITY AUSTRALIA 7281 PERTH INST OF BUSINESS & TECH AUSTRALIA 6002 QUEENSLAND INSTITUTE OF TECH AUSTRALIA 6341 ROYAL MELBOURNE INST TECH EXCHANGE PROG AUSTRALIA 6537 ROYAL MELBOURNE INSTITUTE OF TECHNOLOGY AUSTRALIA 6671 SWINBURNE INSTITUTE OF TECH AUSTRALIA 7296 THE UNIVERSITY OF MELBOURNE AUSTRALIA 7317 UNIV OF MELBOURNE EXCHANGE PROGRAM AUSTRALIA 7287 UNIV OF NEW SO WALES EXCHG PROG AUSTRALIA 6737 UNIV OF QUEENSLAND EXCHANGE PROGRAM AUSTRALIA 6756 UNIV OF SYDNEY EXCHANGE PROGRAM AUSTRALIA 7289 UNIV OF WESTERN AUSTRALIA EXCHG PRO AUSTRALIA 7332 UNIVERSITY OF ADELAIDE AUSTRALIA 7142 UNIVERSITY OF CANBERRA AUSTRALIA 7027 UNIVERSITY OF NEW SOUTH WALES AUSTRALIA 7276 UNIVERSITY OF NEWCASTLE AUSTRALIA 6331 UNIVERSITY OF QUEENSLAND AUSTRALIA 7265 UNIVERSITY -

Daegu International School 2020-2021 High School Profile

DAEGU INTERNATIONAL SCHOOL 2020-2021 HIGH SCHOOL PROFILE CEEB CODE 682002 SCHOOL 22, Palgong-ro 50-gil, Dong-gu, Established in August 2010, Daegu International School (DIS) is a K-12 independent co- Daegu, South Korea 41021 educational day and boarding school located in Daegu, Republic of Korea. DIS is affiliated with Lee Academy in Lee, Maine, USA. DIS offers the same curriculum as Lee Academy, which is Contact based on the College Board’s Advanced Placement system, the Maine Learning Results, Common Core Curriculum and Next Generation Science Standards. DIS provides a holistic +82 (0)53 980 2100 education that stresses academic excellence, social engagement, and intellectual curiosity. In [email protected] addition, our small school size gives each student an opportunity to pursue leadership roles in www.dis.sc.kr student clubs and athletics. Daegu.International.School daegu_international_school MISSION DISTV Our mission at DIS is to help students become successful contributing members of a global society by providing a safe, nurturing environment in which students can reach their maximum potential: socially, emotionally, and intellectually. Head of School ACCREDITATION Mr. Chris Murphy [email protected] DIS is fully accredited by the Western Association of Schools and Colleges (WASC) and fully authorized Assistant Head of School by the Korean Ministry of Education (MOE). Mr. Scott Jolly [email protected] ENROLLMENT Director of Teaching & Learning Total enrollment for the 2020-21 school year is approximately 305 students in grades K-12. Mr. William Seward The class of 2021 consists of 16 students. Admission is based on academic records, personal [email protected] interview, admissions exam, and recommendations. -

The Handbook of East Asian Psycholinguistics, Volume III Korean Edited by Chungmin Lee, Greg Simpson and Youngjin Kim Frontmatter More Information

Cambridge University Press 978-0-521-83335-6 - The Handbook of East Asian Psycholinguistics, Volume III Korean Edited by Chungmin Lee, Greg Simpson and Youngjin Kim Frontmatter More information The Handbook of East Asian Psycholinguistics A large body of knowledge has accumulated in recent years on the cognitive processes and brain mechanisms underlying language. Much of this knowledge has come from studies of Indo-European languages, in particular English. Korean, a language of growing interest to linguists, differs significantly from most Indo-European languages in its grammar, its lexicon, and its written and spoken forms – features which have profound implications for the learning, representation and processing of language. This handbook, the third in a three-volume series on East Asian psycholinguistics, presents a state-of-the-art discussion of the psycholinguistic study of Korean. With contributions by over sixty leading scholars, it covers topics in first and second language acquisition, language processing and reading, language disorders in children and adults, and the relationships between language, brain, culture, and cognition. It will be invaluable to all scholars and students interested in the Korean language, as well as cognitive psychologists, linguists, and neuroscientists. Ping Li is Professor of Psychology, Linguistics, Information Sciences and Technology at Pennsylvania State University. His main research interests are in the area of psycholinguistics and cognitive science. He specializes in language acquisition,

2019 Korean Government Scholarship Program Guideline for Czech Students

2019 Korean Government Scholarship Program Guideline For Czech Students 2019.03 교육부 Ministry of Education (MOE)

1. Program Objective
In order to promote the study of the Korean language and culture in the Republic of Korea, the Government of Republic of Korea and the Government of the Czech Republic are desirous of developing their co-operation in the field of education, as well as mutual friendship between the two countries.

2. Total Number of Grantees: 5-10 students

3. Selection Schedule

| Contents | Schedule | Comments |
| --- | --- | --- |
| Application Period | March 15 ~ April 22 | Korean Embassy in Czech Republic |
| The 1st Selection | April 23 ~ May 3 | Korean Embassy in Czech Republic |
| The 2nd Selection | May 10 ~ May 21 | MOE Selection Committee |
| Announcement of Selection Result | May 24 | Result will be posted at Embassy of the Republic of Korea in Czech website ※ Awardees will be individually informed |
| Admission Process | May 27 ~ June 8 | Awardees will be informed of enrolling in the program |
| Exchange Period | June 23 ~ August 31 | 2~4 week summer school program |

4. Eligible Universities and Fields of Study
Eligible Universities: The 17 listed universities (or institutions) designated by MOE
- Applicants must choose 3 desired universities out of the 17 universities listed below.
1) Hankuk University of Foreign Studies, 2) Seoul National University, 3) Sogang University, 4) Korea University, 5) Konkuk University, 6) Busan University of Foreign Studies, 7) Kyunghee University, 8) Chonnam National University, 9) Hanyang University, 10) Ewha Womans University, 11) Keimyung University, 12) Kyungnam University, 13) Pukyong National University, 14) Sookmyung Women's University, 15) Gachon University, 16) Yonsei University, 17) Chungbuk Health & Science University
Available Courses: Summer language course
※ Tuition costs will be covered from two-week up to four-week summer language program.

2019 FALL Established in 2000, KONG & PARK Is a Publishing Company That Has Specialized in Researching and Publishing Books for Studying Chinese Characters

2019 FALL Established in 2000, KONG & PARK is a publishing company that has specialized in researching and publishing books for studying Chinese characters. Since 2012, KONG & PARK has published and distributed worldwide books written in the English language. It has also acted as an agent to distribute books of Korea written in the English language to many English speaking countries such as the UK and the USA. Seoul Office KONG & PARK, Inc. 85, Gwangnaru-ro 56-gil, Gwangjin-gu Prime-center #1518 Seoul 05116, Korea Tel: +82 (0)2 565 1531 Fax: +82 (0)2 3445 1080 E-mail: [email protected] Chicago Office KONG & PARK USA, Inc. 1480 Renaissance Drive, Suite 412 Park Ridge, IL 60068 Tel: +1 847 241 4845 Fax: +1 312 757 5553 E-mail: [email protected] Beijing Office #401, Unit 1, Building 6, Xihucincun, Beiqijiazhen, Changping District Beijing 102200 China (102200 北京市 昌平区 北七家镇 西湖新村 6号楼 1单元 401) Tel: +86 186 1257 4230 E-mail: [email protected] Santiago Office KONG & PARK CHILE SPA. Av. Providencia 1208, #1603, Providencia Santiago, 7500571 Chile Tel: +56 22 833 9055 E-mail: [email protected] 1 CONTENTS NEW TITLES 2 ART 54 BIOGRAPHY & AUTOBIOGRAPHY 58 BUSINESS & ECONOMICS 59 COOKING 62 FOREIGN LANGUAGE STUDY 64 Chinese 64 Japanese 68 Korean 70 HISTORY 106 PHILOSOPHY 123 POLITICAL SCIENCE 124 RELIGION 126 SOCIAL SCIENCE 128 SPORTS & RECREATION 137 JOURNAL 138 INDEX 141 ISBN PREFIXES BY PUBLISHER 143 2 NEW TITLES NEW TITLES ART 3 US$109.95 Paperback / fine binding ISBN : 9781635190090 National Museum of Korea 360 pages, All Color 8.3 X 10.2 inch (210 X 260 mm) 2.8 lbs (1260g) Carton Quantity: 12 National Museum of Korea The Permanent Exhibition National Museum of Korea Amazingly, it has already been twelve years since the National Museum of Korea reopened at its current location in Yongsan in October 2005. -

Handbook for Korean Studies Librarianship Outside of Korea Published by the National Library of Korea

2014 Editorial Board Members: Copy Editors: Erica S. Chang Philip Melzer Mikyung Kang Nancy Sack Miree Ku Yunah Sung Hyokyoung Yi Handbook for Korean Studies Librarianship Outside of Korea Published by the National Library of Korea The National Library of Korea 201, Banpo-daero, Seocho-gu, Seoul, Korea, 137-702 Tel: 82-2-590-6325 Fax: 82-2-590-6329 www.nl.go.kr © 2014 Committee on Korean Materials, CEAL retains copyright for all written materials that are original with this volume. ISBN 979-11-5687-075-3 93020

Handbook for Korean Studies Librarianship Outside of Korea Table of Contents
Foreword (Ellen Hammond)
Preface (Miree Ku)
Chapter 1. Introduction (Yunah Sung)
Chapter 2. Acquisitions and Collection Development
2.1. Introduction (Mikyung Kang)
2.2. Collection Development (Hana Kim)
2.2.1 Korean Studies
2.2.2 Introduction: Area Studies and Korean Studies
2.2.3 East Asian Collections in North America: the Historical Overview
2.2.4 Collection Development and Management
2.2.4.1 Collection Development Policy
2.2.4.2 Developing Collections
2.2.4.3 Selection Criteria
2.2.4.4

ACUCA 1St Qtr 2008

ACUCA NEWS ASSOCIATION OF CHRISTIAN UNIVERSITIES AND COLLEGES IN ASIA “Committed to the mission of Christian higher education of uniting all men in the community of service and fellowship.” Volume VIII No. 1 January-March 2008

ACUCA MEMBER INSTITUTIONS
HONGKONG: Chung Chi College, CUHK; Hong Kong Baptist University; Lingnan University
INDONESIA: Parahyangan Catholic University; Petra Christian University; Satya Wacana Christian University; Universitas Kristen Indonesia; Maranatha Christian University; Duta Wacana Christian University; Soegijapranata Catholic University; Universitas Pelita Harapan; Krida Wacana Christian University; Universitas Atma Jaya Yogyakarta
JAPAN: International Christian University; Kwansei Gakuin University; Meiji Gakuin University; Nanzan University; Doshisha University

Message from the President

During the ACUCA Executive Committee meeting last April, a remark from Dr. Shinhye Kim got us engaged in a lively discussion on the growing experience of multiculturalism in our countries and our universities. She remarked that Korea has historically been a monocultural country. But now they were seeing more migrants, including Filipino migrants, and Keimyung University was reflecting on this new experience of multiculturalism. We said that the Philippines was also noting a large influx of Korean migrants and so this might perhaps be an opportunity for our two universities, Keimyung and Ateneo, to engage in a research project on migration and our growing multicultural experience.

During the 35th General Congregation of the Jesuits, January 7 to March 6, this year in Rome, we focused a lot on migration as a ... and places – in the neighborhood we grew up in, the religious practices and customs of our upbringing.