Cit478 Course Title: Artificial Intelligence

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

A Humanoid Robot

NAIN 1.0 – A HUMANOID ROBOT by Shivam Shukla (1406831124) Shubham Kumar (1406831131) Shashank Bhardwaj (1406831117) Department of Electronics & Communication Engineering Meerut Institute of Engineering & Technology Meerut, U.P. (India)-250005 May, 2018 NAIN 1.0 – HUMANOID ROBOT by Shivam Shukla (1406831124) Shubham Kumar (1406831131) Shashank Bhardwaj (1406831117) Submitted to the Department of Electronics & Communication Engineering in partial fulfillment of the requirements for the degree of Bachelor of Technology in Electronics & Communication Meerut Institute of Engineering & Technology, Meerut Dr. A.P.J. Abdul Kalam Technical University, Lucknow May, 2018 DECLARATION I hereby declare that this submission is my own work and that, to the best of my knowledge and belief, it contains no material previously published or written by another person nor material which to a substantial extent has been accepted for the award of any other degree or diploma of the university or other institute of higher learning except where due acknowledgment has been made in the text. Signature Signature Name: Mr. Shivam Shukla Name: Mr. Shashank Bhardwaj Roll No. 1406831124 Roll No. 1406831117 Date: Date: Signature Name: Mr. Shubham Kumar Roll No. 1406831131 Date: ii CERTIFICATE This is to certify that Project Report entitled “Humanoid Robot” which is submitted by Shivam Shukla (1406831124), Shashank Bhardwaj (1406831117), Shubahm Kumar (1406831131) in partial fulfillment of the requirement for the award of degree B.Tech in Department of Electronics & Communication Engineering of Gautam Buddh Technical University (Formerly U.P. Technical University), is record of the candidate own work carried out by him under my/our supervision. The matter embodied in this thesis is original and has not been submitted for the award of any other degree. -

The Tip of a Hospitality Revolution (5:48 Mins) Listening Comprehension with Pre-Listening and Post-Listening Exercises Pre-List

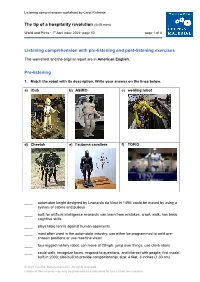

Listening comprehension worksheet by Carol Richards The tip of a hospitality revolution (5:48 mins) World and Press • 1st April issue 2021• page 10 page 1 of 8 Listening comprehension with pre-listening and post-listening exercises This worksheet and the original report are in American English. Pre-listening 1. Match the robot with its description. Write your answer on the lines below. a) iCub b) ASIMO c) welding robot d) Cheetah e) l’automa cavaliere f) TOPIO ____ automaton knight designed by Leonardo da Vinci in 1495; could be moved by using a system of cables and pulleys ____ built for artificial intelligence research; can learn from mistakes, crawl, walk; has basic cognitive skills ____ plays table tennis against human opponents ____ most often used in the automobile industry; can either be programmed to weld pre- chosen positions or use machine vision ____ four-legged military robot, can move at 28mph, jump over things, use climb stairs ____ could walk, recognize faces, respond to questions, and interact with people; first model built in 2000; also built to provide companionship; size: 4 feet, 3 inches (1.30 cm) © 2021 Carl Ed. Schünemann KG. All rights reserved. Copies of this material may only be produced by subscribers for use in their own lessons. The tip of a hospitality revolution World and Press • April 1 / 2021 • page 10 page 2 of 8 2. Vocabulary practice Match the terms on the left with their German meanings on the right. Write your answers in the grid below. a) billion A Abfindung b) competitive advantage B auf etw. -

Design and Realization of a Humanoid Robot for Fast and Autonomous Bipedal Locomotion

TECHNISCHE UNIVERSITÄT MÜNCHEN Lehrstuhl für Angewandte Mechanik Design and Realization of a Humanoid Robot for Fast and Autonomous Bipedal Locomotion Entwurf und Realisierung eines Humanoiden Roboters für Schnelles und Autonomes Laufen Dipl.-Ing. Univ. Sebastian Lohmeier Vollständiger Abdruck der von der Fakultät für Maschinenwesen der Technischen Universität München zur Erlangung des akademischen Grades eines Doktor-Ingenieurs (Dr.-Ing.) genehmigten Dissertation. Vorsitzender: Univ.-Prof. Dr.-Ing. Udo Lindemann Prüfer der Dissertation: 1. Univ.-Prof. Dr.-Ing. habil. Heinz Ulbrich 2. Univ.-Prof. Dr.-Ing. Horst Baier Die Dissertation wurde am 2. Juni 2010 bei der Technischen Universität München eingereicht und durch die Fakultät für Maschinenwesen am 21. Oktober 2010 angenommen. Colophon The original source for this thesis was edited in GNU Emacs and aucTEX, typeset using pdfLATEX in an automated process using GNU make, and output as PDF. The document was compiled with the LATEX 2" class AMdiss (based on the KOMA-Script class scrreprt). AMdiss is part of the AMclasses bundle that was developed by the author for writing term papers, Diploma theses and dissertations at the Institute of Applied Mechanics, Technische Universität München. Photographs and CAD screenshots were processed and enhanced with THE GIMP. Most vector graphics were drawn with CorelDraw X3, exported as Encapsulated PostScript, and edited with psfrag to obtain high-quality labeling. Some smaller and text-heavy graphics (flowcharts, etc.), as well as diagrams were created using PSTricks. The plot raw data were preprocessed with Matlab. In order to use the PostScript- based LATEX packages with pdfLATEX, a toolchain based on pst-pdf and Ghostscript was used. -

Design of a Drone with a Robotic End-Effector

Design of a Drone with a Robotic End-Effector Suhas Varadaramanujan, Sawan Sreenivasa, Praveen Pasupathy, Sukeerth Calastawad, Melissa Morris, Sabri Tosunoglu Department of Mechanical and Materials Engineering Florida International University Miami, Florida 33174 [email protected], [email protected] , [email protected], [email protected], [email protected], [email protected] ABSTRACT example, the widely-used predator drone for military purposes is the MQ-1 by General Atomics which is remote controlled, UAVs The concept presented involves a combination of a quadcopter typically fall into one of six functional categories (i.e. target and drone and an end-effector arm, which is designed with the decoy, reconnaissance, combat, logistics, R&D, civil and capability of lifting and picking fruits from an elevated position. commercial). The inspiration for this concept was obtained from the swarm robots which have an effector arm to pick small cubes, cans to even With the advent of aerial robotics technology, UAVs became more collecting experimental samples as in case of space exploration. sophisticated and led to development of quadcopters which gained The system as per preliminary analysis would contain two popularity as mini-helicopters. A quadcopter, also known as a physically separate components, but linked with a common quadrotor helicopter, is lifted by means of four rotors. In operation, algorithm which includes controlling of the drone’s positions along the quadcopters generally use two pairs of identical fixed pitched with the movement of the arm. propellers; two clockwise (CW) and two counterclockwise (CCW). They use independent variation of the speed of each rotor to achieve Keywords control. -

Ph. D. Thesis Stable Locomotion of Humanoid Robots Based

Ph. D. Thesis Stable locomotion of humanoid robots based on mass concentrated model Author: Mario Ricardo Arbul´uSaavedra Director: Carlos Balaguer Bernaldo de Quiros, Ph. D. Department of System and Automation Engineering Legan´es, October 2008 i Ph. D. Thesis Stable locomotion of humanoid robots based on mass concentrated model Author: Mario Ricardo Arbul´uSaavedra Director: Carlos Balaguer Bernaldo de Quiros, Ph. D. Signature of the board: Signature President Vocal Vocal Vocal Secretary Rating: Legan´es, de de Contents 1 Introduction 1 1.1 HistoryofRobots........................... 2 1.1.1 Industrialrobotsstory. 2 1.1.2 Servicerobots......................... 4 1.1.3 Science fiction and robots currently . 10 1.2 Walkingrobots ............................ 10 1.2.1 Outline ............................ 10 1.2.2 Themes of legged robots . 13 1.2.3 Alternative mechanisms of locomotion: Wheeled robots, tracked robots, active cords . 15 1.3 Why study legged machines? . 20 1.4 What control mechanisms do humans and animals use? . 25 1.5 What are problems of biped control? . 27 1.6 Features and applications of humanoid robots with biped loco- motion................................. 29 1.7 Objectives............................... 30 1.8 Thesiscontents ............................ 33 2 Humanoid robots 35 2.1 Human evolution to biped locomotion, intelligence and bipedalism 36 2.2 Types of researches on humanoid robots . 37 2.3 Main humanoid robot research projects . 38 2.3.1 The Humanoid Robot at Waseda University . 38 2.3.2 Hondarobots......................... 47 2.3.3 TheHRPproject....................... 51 2.4 Other humanoids . 54 2.4.1 The Johnnie project . 54 2.4.2 The Robonaut project . 55 2.4.3 The COG project . -

Surveillance Robot Controlled Using an Android App Project Report Submitted in Partial Fulfillment of the Requirements for the Degree Of

Surveillance Robot controlled using an Android app Project Report Submitted in partial fulfillment of the requirements for the degree of Bachelor of Engineering by Shaikh Shoeb Maroof Nasima (Roll No.12CO92) Ansari Asgar Ali Shamshul Haque Shakina (Roll No.12CO106) Khan Sufiyan Liyaqat Ali Kalimunnisa (Roll No.12CO81) Mir Ibrahim Salim Farzana (Roll No.12CO82) Supervisor Prof. Kalpana R. Bodke Co-Supervisor Prof. Amer Syed Department of Computer Engineering, School of Engineering and Technology Anjuman-I-Islam’s Kalsekar Technical Campus Plot No. 2 3, Sector -16, Near Thana Naka, Khanda Gaon, New Panvel, Navi Mumbai. 410206 Academic Year : 2014-2015 CERTIFICATE Department of Computer Engineering, School of Engineering and Technology, Anjuman-I-Islam’s Kalsekar Technical Campus Khanda Gaon,New Panvel, Navi Mumbai. 410206 This is to certify that the project entitled “Surveillance Robot controlled using an Android app” is a bonafide work of Shaikh Shoeb Maroof Nasima (12CO92), Ansari Asgar Ali Shamshul Haque Shakina (12CO106), Khan Sufiyan Liyaqat Ali Kalimunnisa (12CO81), Mir Ibrahim Salim Farzana (12CO82) submitted to the University of Mumbai in partial ful- fillment of the requirement for the award of the degree of “Bachelor of Engineering” in De- partment of Computer Engineering. Prof. Kalpana R. Bodke Prof. Amer Syed Supervisor/Guide Co-Supervisor/Guide Prof. Tabrez Khan Dr. Abdul Razak Honnutagi Head of Department Director Project Approval for Bachelor of Engineering This project I entitled Surveillance robot controlled using an android application by Shaikh Shoeb Maroof Nasima, Ansari Asgar Ali Shamshul Haque Shakina, Mir Ibrahim Salim Farzana,Khan Sufiyan Liyaqat Ali Kalimunnisa is approved for the degree of Bachelor of Engineering in Department of Computer Engineering. -

2014-2015 SISD Literary Anthology

2014-2015 SISD Literary Anthology SADDLE UP Socorro ISD Board of Trustees Paul Guerra - President Angelica Rodriguez - Vice President Antonio ‘Tony’ Ayub - Secretary Gary Gandara - Trustee Hector F. Gonzalez - Trustee Michael A. Najera - Trustee Cynthia A. Najera - Trustee Superintendent of Schools José Espinoza, Ed.D. Socorro ISD District Service Center 12440 Rojas Dr. • El Paso, TX 79928 Phn 915.937.0000 • www.sisd.net Socorro Independent School District The Socorro Independent School District does not discriminate on the basis of race, color, national origin, sex, disability, or age in its programs, activities or employment. Leading • Inspiring • Innovating Socorro Independent School District Literary Anthology 2014-2015 An Award Winning Collection of: Poetry Real/Imaginative/Engaging Stories Persuasive Essays Argumentative Essays Informative Essays Analytical Essays Letters Scripts Personal Narrative Special thanks to: Yvonne Aragon and Sylvia Gómez Soriano District Coordinators Socorro Independent School District 2014-15 Literary Anthology - Writing Round-Up 1 Board of Trustees The Socorro ISD Board of Trustees consists of seven elected citizens who work with community leaders, families, and educators to develop sound educational policies to support student achievement and ensure the solvency of the District. Together, they are a strong and cohesive team that helps the District continuously set and achieve new levels of excellence. Five of the trustees represent single-member districts and two are elected at-large. Mission Statement -

Humanoid Robot Soccer Locomotion and Kick Dynamics

Humanoid Robot Soccer Locomotion and Kick Dynamics Open Loop Walking, Kicking and Morphing into Special Motions on the Nao Robot Alexander Buckley B.Eng (Computer) (Hons.) In Partial Fulfillment of the Requirements for the Degree Master of Science Presented to the Faculty of Science and Engineering of the National University of Ireland Maynooth in June 2013 Department Head: Prof. Douglas Leith Supervisor: Prof. Richard Middleton Acknowledgements This work would not have been possible without the guidance, help and most of all the patience of my supervisor, Rick Middleton. I would like to thank the members of the RoboEireann team, and those I have worked with at the Hanilton institute. Their contribution to my work has been invaluable, and they have helped make my time here an enjoyable experience. Finally, RoboCup itself has been an exceptional experience. It has provided an environment that is both challenging and rewarding, allowing me the chance to fulfill one of my earliest goals in life, to work intimately with robots. Abstract Striker speed and accuracy in the RoboCup (SPL) international robot soccer league is becoming increasingly important as the level of play rises. Competition around the ball is now decided in a matter of seconds. Therefore, eliminating any wasted actions or motions is crucial when attempting to kick the ball. It is common to see a discontinuity between walking and kicking where a robot will return to an initial pose in preparation for the kick action. In this thesis we explore the removal of this behaviour by developing a transition gait that morphs the walk directly into the kick back swing pose. -

The Dialectics of Virtuosity: Dance in the People's Republic of China

The Dialectics of Virtuosity: Dance in the People’s Republic of China, 1949-2009 by Emily Elissa Wilcox A dissertation submitted in partial satisfaction of the requirements for the degree of Joint Doctor of Philosophy with the University of California, San Francisco in Medical Anthropology of the University of California, Berkeley Committee in charge: Professor Xin Liu, Chair Professor Vincanne Adams Professor Alexei Yurchak Professor Michael Nylan Professor Shannon Jackson Spring 2011 Abstract The Dialectics of Virtuosity: Dance in the People’s Republic of China, 1949-2009 by Emily Elissa Wilcox Joint Doctor of Philosophy with the University of California, San Francisco in Medical Anthropology University of California, Berkeley Professor Xin Liu, Chair Under state socialism in the People’s Republic of China, dancers’ bodies became important sites for the ongoing negotiation of two paradoxes at the heart of the socialist project, both in China and globally. The first is the valorization of physical labor as a path to positive social reform and personal enlightenment. The second is a dialectical approach to epistemology, in which world-knowing is connected to world-making. In both cases, dancers in China found themselves, their bodies, and their work at the center of conflicting ideals, often in which the state upheld, through its policies and standards, what seemed to be conflicting points of view and directions of action. Since they occupy the unusual position of being cultural workers who labor with their bodies, dancers were successively the heroes and the victims in an ever unresolved national debate over the value of mental versus physical labor. -

Generation of the Whole-Body Motion for Humanoid Robots with the Complete Dynamics Oscar Efrain Ramos Ponce

Generation of the whole-body motion for humanoid robots with the complete dynamics Oscar Efrain Ramos Ponce To cite this version: Oscar Efrain Ramos Ponce. Generation of the whole-body motion for humanoid robots with the complete dynamics. Robotics [cs.RO]. Universite Toulouse III Paul Sabatier, 2014. English. tel- 01134313 HAL Id: tel-01134313 https://tel.archives-ouvertes.fr/tel-01134313 Submitted on 23 Mar 2015 HAL is a multi-disciplinary open access L’archive ouverte pluridisciplinaire HAL, est archive for the deposit and dissemination of sci- destinée au dépôt et à la diffusion de documents entific research documents, whether they are pub- scientifiques de niveau recherche, publiés ou non, lished or not. The documents may come from émanant des établissements d’enseignement et de teaching and research institutions in France or recherche français ou étrangers, des laboratoires abroad, or from public or private research centers. publics ou privés. Christine CHEVALLEREAU: Directeur de Recherche, École Centrale de Nantes, France Francesco NORI: Researcher, Italian Institute of Technology, Italy Patrick DANÈS: Professeur des Universités, Université de Toulouse III, France Ludovic RIGHETTI: Researcher, Max-Plank-Institute for Intelligent Systems, Germany Nicolas MANSARD: Chargé de Recherche, LAAS-CNRS, France Philippe SOUÈRES: Directeur de recherche, LAAS-CNRS, France Yuval TASSA: Researcher, University of Washington, USA Abstract This thesis aims at providing a solution to the problem of motion generation for humanoid robots. The proposed framework generates whole-body motion using the complete robot dy- namics in the task space satisfying contact constraints. This approach is known as operational- space inverse-dynamics control. The specification of the movements is done through objectives in the task space, and the high redundancy of the system is handled with a prioritized stack of tasks where lower priority tasks are only achieved if they do not interfere with higher priority ones. -

One Robot at a Time Tas¸ Kin Padir Believes Robots Will Revolutionize Our Lives, and He’S Proving It Through His Innovative Research

WPI Re search 2015 Spring Saving the World, One Robot at a Time Tas¸ kin Padir believes robots will revolutionize our lives, and he’s proving it through his innovative research. IF… WE INVEST IN FACILITIES FOR CUTTING-EDGE RESEARCH. THEN… JUST IMAGINE WHAT WE CAN ACCOMPLISH. Through the DARPA Robotics Challenge, WPI researchers are exploring how humanoid robots might one day perform the dangerous work of responding to disasters. That’s just one way the university’s pioneering program in robotics engineering is helping build a world where robots help people in areas as diverse as manufacturing and health care. WPI was the first university in the nation to offer a bachelor’s degree in robotics engineering and the first with BS, MS, and PhD degrees. Through groundbreaking academic programs and research, we are committed to advancing one of the most important emerging areas of technology. Imagine what our impact could be with your support. if… The Campaign to Advance WPI — a comprehensive, $200 million fundraising endeavor — is about providing outstanding resources for education and research. The Campaign will supply ONLINE: WPI.EDU/+GIVE the facilities to help the university’s talented faculty and students address important challenges PHONE: 508-831-4177 that matter to society and produce innovations and advances that help build a better world. EMAIL: [email protected] if… we imagine a bright future, together we can make it happen. WPI Re search Discovery and Innovation with Purpose > wpi.edu/+research 2015 Spring 2 > From the Office of the Provost Celebrating WPI’s seamless spectrum of research. -

Communicating Simulated Emotional States of Robots by Expressive Movements

Middlesex University Research Repository An open access repository of Middlesex University research http://eprints.mdx.ac.uk Sial, Sara Baber (2013) Communicating simulated emotional states of robots by expressive movements. Masters thesis, Middlesex University. [Thesis] Final accepted version (with author’s formatting) This version is available at: https://eprints.mdx.ac.uk/12312/ Copyright: Middlesex University Research Repository makes the University’s research available electronically. Copyright and moral rights to this work are retained by the author and/or other copyright owners unless otherwise stated. The work is supplied on the understanding that any use for commercial gain is strictly forbidden. A copy may be downloaded for personal, non-commercial, research or study without prior permission and without charge. Works, including theses and research projects, may not be reproduced in any format or medium, or extensive quotations taken from them, or their content changed in any way, without first obtaining permission in writing from the copyright holder(s). They may not be sold or exploited commercially in any format or medium without the prior written permission of the copyright holder(s). Full bibliographic details must be given when referring to, or quoting from full items including the author’s name, the title of the work, publication details where relevant (place, publisher, date), pag- ination, and for theses or dissertations the awarding institution, the degree type awarded, and the date of the award. If you believe that any material held in the repository infringes copyright law, please contact the Repository Team at Middlesex University via the following email address: [email protected] The item will be removed from the repository while any claim is being investigated.