3 Metrics for Technology Performance

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

IBM Z Systems Introduction May 2017

IBM z Systems Introduction May 2017 IBM z13s and IBM z13 Frequently Asked Questions Worldwide ZSQ03076-USEN-15 Table of Contents z13s Hardware .......................................................................................................................................................................... 3 z13 Hardware ........................................................................................................................................................................... 11 Performance ............................................................................................................................................................................ 19 z13 Warranty ............................................................................................................................................................................ 23 Hardware Management Console (HMC) ..................................................................................................................... 24 Power requirements (including High Voltage DC Power option) ..................................................................... 28 Overhead Cabling and Power ..........................................................................................................................................30 z13 Water cooling option .................................................................................................................................................... 31 Secure Service Container ................................................................................................................................................. -

Annual Reports of FCCSET Subcommittee Annual Trip Reports To

Annual Reports of FCCSET Subcommittee Annual trip reports to supercomputer manufacturers trace the changes in technology and in the industry, 1985-1989. FY 1986 Annual Report of the Federal Coordinating Council on Science, Engineering and Technology (FCCSET). by the FCCSET Ocnmittee. n High Performance Computing Summary During the past year, the Committee met on a regular basis to review government and industry supported programs in research, development, and application of new supercomputer technology. The Committee maintains an overview of commercial developments in the U.S. and abroad. It regularly receives briefings from Government agency sponsored R&D efforts and makes such information available, where feasible, to industry and universities. In addition, the committee coordinates agency supercomputer access programs and promotes cooperation with particular emphasis on aiding the establish- ment of new centers and new communications networks. The Committee made its annual visit to supercomputer manufacturers in August and found that substantial progress had been made by Cray Research and ETA Systems toward developing their next generations of machines. The Cray II and expanded Cray XMP series supercomputers are now being marketed commercially; the Committee was briefed on plans for the next generation of Cray machines. ETA Systems is beyond the prototype stage for the ETA-10 and planning to ship one machine this year. A ^-0 A 1^'Tr 2 The supercomputer vendors continue to have difficulty in obtaining high performance IC's from U.S. chip makers, leaving them dependent on Japanese suppliers. In some cases, the Japanese chip suppliers are the same companies, e.g., Fujitsu, that provide the strongest foreign competition in the supercomputer market. -

Computer Organization and Architecture Designing for Performance Ninth Edition

COMPUTER ORGANIZATION AND ARCHITECTURE DESIGNING FOR PERFORMANCE NINTH EDITION William Stallings Boston Columbus Indianapolis New York San Francisco Upper Saddle River Amsterdam Cape Town Dubai London Madrid Milan Munich Paris Montréal Toronto Delhi Mexico City São Paulo Sydney Hong Kong Seoul Singapore Taipei Tokyo Editorial Director: Marcia Horton Designer: Bruce Kenselaar Executive Editor: Tracy Dunkelberger Manager, Visual Research: Karen Sanatar Associate Editor: Carole Snyder Manager, Rights and Permissions: Mike Joyce Director of Marketing: Patrice Jones Text Permission Coordinator: Jen Roach Marketing Manager: Yez Alayan Cover Art: Charles Bowman/Robert Harding Marketing Coordinator: Kathryn Ferranti Lead Media Project Manager: Daniel Sandin Marketing Assistant: Emma Snider Full-Service Project Management: Shiny Rajesh/ Director of Production: Vince O’Brien Integra Software Services Pvt. Ltd. Managing Editor: Jeff Holcomb Composition: Integra Software Services Pvt. Ltd. Production Project Manager: Kayla Smith-Tarbox Printer/Binder: Edward Brothers Production Editor: Pat Brown Cover Printer: Lehigh-Phoenix Color/Hagerstown Manufacturing Buyer: Pat Brown Text Font: Times Ten-Roman Creative Director: Jayne Conte Credits: Figure 2.14: reprinted with permission from The Computer Language Company, Inc. Figure 17.10: Buyya, Rajkumar, High-Performance Cluster Computing: Architectures and Systems, Vol I, 1st edition, ©1999. Reprinted and Electronically reproduced by permission of Pearson Education, Inc. Upper Saddle River, New Jersey, Figure 17.11: Reprinted with permission from Ethernet Alliance. Credits and acknowledgments borrowed from other sources and reproduced, with permission, in this textbook appear on the appropriate page within text. Copyright © 2013, 2010, 2006 by Pearson Education, Inc., publishing as Prentice Hall. All rights reserved. Manufactured in the United States of America. -

Memory Centric Characterization and Analysis of SPEC CPU2017 Suite

Session 11: Performance Analysis and Simulation ICPE ’19, April 7–11, 2019, Mumbai, India Memory Centric Characterization and Analysis of SPEC CPU2017 Suite Sarabjeet Singh Manu Awasthi [email protected] [email protected] Ashoka University Ashoka University ABSTRACT These benchmarks have become the standard for any researcher or In this paper, we provide a comprehensive, memory-centric charac- commercial entity wishing to benchmark their architecture or for terization of the SPEC CPU2017 benchmark suite, using a number of exploring new designs. mechanisms including dynamic binary instrumentation, measure- The latest offering of SPEC CPU suite, SPEC CPU2017, was re- ments on native hardware using hardware performance counters leased in June 2017 [8]. SPEC CPU2017 retains a number of bench- and operating system based tools. marks from previous iterations but has also added many new ones We present a number of results including working set sizes, mem- to reflect the changing nature of applications. Some recent stud- ory capacity consumption and memory bandwidth utilization of ies [21, 24] have already started characterizing the behavior of various workloads. Our experiments reveal that, on the x86_64 ISA, SPEC CPU2017 applications, looking for potential optimizations to SPEC CPU2017 workloads execute a significant number of mem- system architectures. ory related instructions, with approximately 50% of all dynamic In recent years the memory hierarchy, from the caches, all the instructions requiring memory accesses. We also show that there is way to main memory, has become a first class citizen of computer a large variation in the memory footprint and bandwidth utilization system design. -

Trends in Electrical Efficiency in Computer Performance

ASSESSING TRENDS IN THE ELECTRICAL EFFICIENCY OF COMPUTATION OVER TIME Jonathan G. Koomey*, Stephen Berard†, Marla Sanchez††, Henry Wong** * Lawrence Berkeley National Laboratory and Stanford University †Microsoft Corporation ††Lawrence Berkeley National Laboratory **Intel Corporation Contact: [email protected], http://www.koomey.com Final report to Microsoft Corporation and Intel Corporation Submitted to IEEE Annals of the History of Computing: August 5, 2009 Released on the web: August 17, 2009 EXECUTIVE SUMMARY Information technology (IT) has captured the popular imagination, in part because of the tangible benefits IT brings, but also because the underlying technological trends proceed at easily measurable, remarkably predictable, and unusually rapid rates. The number of transistors on a chip has doubled more or less every two years for decades, a trend that is popularly (but often imprecisely) encapsulated as “Moore’s law”. This article explores the relationship between the performance of computers and the electricity needed to deliver that performance. As shown in Figure ES-1, computations per kWh grew about as fast as performance for desktop computers starting in 1981, doubling every 1.5 years, a pace of change in computational efficiency comparable to that from 1946 to the present. Computations per kWh grew even more rapidly during the vacuum tube computing era and during the transition from tubes to transistors but more slowly during the era of discrete transistors. As expected, the transition from tubes to transistors shows a large jump in computations per kWh. In 1985, the physicist Richard Feynman identified a factor of one hundred billion (1011) possible theoretical improvement in the electricity used per computation. -

Computer Performance Evaluation and Benchmarking

Computer Performance Evaluation and Benchmarking EE 382M Dr. Lizy Kurian John Evolution of Single-Chip Microprocessors 1970’s 1980’s 1990’s 2010s Transistor Count 10K- 100K-1M 1M-100M 100M- 100K 10 B Clock Frequency 0.2- 2-20MHz 20M- 0.1- 2MHz 1GHz 4GHz Instruction/Cycle < 0.1 0.1-0.9 0.9- 2.0 1-100 MIPS/MFLOPS < 0.2 0.2-20 20-2,000 100- 10,000 Hot Chips 2014 (August 2014) AMD KAVERI HOT CHIPS 2014 AMD KAVERI HOTCHIPS 2014 Hotchips 2014 Hotchips 2014 - NVIDIA Power Density in Microprocessors 10000 Sun’s Surface 1000 Rocket Nozzle ) 2 Nuclear Reactor 100 Core 2 8086 10 Hot Plate 8008 Pentium® Power Density (W/cm 8085 4004 386 Processors 286 486 8080 1 1970 1980 1990 2000 2010 Source: Intel Why Performance Evaluation? • For better Processor Designs • For better Code on Existing Designs • For better Compilers • For better OS and Runtimes Design Analysis Lord Kelvin “To measure is to know.” "If you can not measure it, you can not improve it.“ "I often say that when you can measure what you are speaking about, and express it in numbers, you know something about it; but when you cannot measure it, when you cannot express it in numbers, your knowledge is of a meagre and unsatisfactory kind; it may be the beginning of knowledge, but you have scarcely in your thoughts advanced to the state of Science, whatever the matter may be." [PLA, vol. 1, "Electrical Units of Measurement", 1883-05-03] Designs evolve based on Analysis • Good designs are impossible without good analysis • Workload Analysis • Processor Analysis Design Analysis Performance Evaluation -

Overview of the SPEC Benchmarks

9 Overview of the SPEC Benchmarks Kaivalya M. Dixit IBM Corporation “The reputation of current benchmarketing claims regarding system performance is on par with the promises made by politicians during elections.” Standard Performance Evaluation Corporation (SPEC) was founded in October, 1988, by Apollo, Hewlett-Packard,MIPS Computer Systems and SUN Microsystems in cooperation with E. E. Times. SPEC is a nonprofit consortium of 22 major computer vendors whose common goals are “to provide the industry with a realistic yardstick to measure the performance of advanced computer systems” and to educate consumers about the performance of vendors’ products. SPEC creates, maintains, distributes, and endorses a standardized set of application-oriented programs to be used as benchmarks. 489 490 CHAPTER 9 Overview of the SPEC Benchmarks 9.1 Historical Perspective Traditional benchmarks have failed to characterize the system performance of modern computer systems. Some of those benchmarks measure component-level performance, and some of the measurements are routinely published as system performance. Historically, vendors have characterized the performances of their systems in a variety of confusing metrics. In part, the confusion is due to a lack of credible performance information, agreement, and leadership among competing vendors. Many vendors characterize system performance in millions of instructions per second (MIPS) and millions of floating-point operations per second (MFLOPS). All instructions, however, are not equal. Since CISC machine instructions usually accomplish a lot more than those of RISC machines, comparing the instructions of a CISC machine and a RISC machine is similar to comparing Latin and Greek. 9.1.1 Simple CPU Benchmarks Truth in benchmarking is an oxymoron because vendors use benchmarks for marketing purposes. -

System Administration

System Administration Varian NMR Spectrometer Systems With VNMR 6.1C Software Pub. No. 01-999166-00, Rev. C0503 System Administration Varian NMR Spectrometer Systems With VNMR 6.1C Software Pub. No. 01-999166-00, Rev. C0503 Revision history: A0800 – Initial release for VNMR 6.1C A1001 – Corrected errors on pg 120, general edit B0202 – Updated AutoTest B0602 – Added additional Autotest sections including VNMRJ update B1002 – Updated Solaris patch information and revised section 21.7, Autotest C0503 – Add additional Autotest sections including cryogenic probes Applicability: Varian NMR spectrometer systems with Sun workstations running Solaris 2.x and VNMR 6.1C software By Rolf Kyburz ([email protected]) Varian International AG, Zug, Switzerland, and Gerald Simon ([email protected]) Varian GmbH, Darmstadt, Germany Additional contributions by Frits Vosman, Dan Iverson, Evan Williams, George Gray, Steve Cheatham Technical writer: Mike Miller Technical editor: Dan Steele Copyright 2001, 2002, 2003 by Varian, Inc., NMR Systems 3120 Hansen Way, Palo Alto, California 94304 1-800-356-4437 http://www.varianinc.com All rights reserved. Printed in the United States. The information in this document has been carefully checked and is believed to be entirely reliable. However, no responsibility is assumed for inaccuracies. Statements in this document are not intended to create any warranty, expressed or implied. Specifications and performance characteristics of the software described in this manual may be changed at any time without notice. Varian reserves the right to make changes in any products herein to improve reliability, function, or design. Varian does not assume any liability arising out of the application or use of any product or circuit described herein; neither does it convey any license under its patent rights nor the rights of others. -

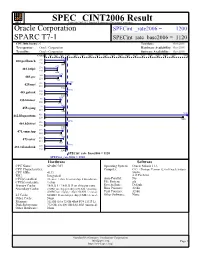

Oracle Corporation: SPARC T7-1

SPEC CINT2006 Result spec Copyright 2006-2015 Standard Performance Evaluation Corporation Oracle Corporation SPECint_rate2006 = 1200 SPARC T7-1 SPECint_rate_base2006 = 1120 CPU2006 license: 6 Test date: Oct-2015 Test sponsor: Oracle Corporation Hardware Availability: Oct-2015 Tested by: Oracle Corporation Software Availability: Oct-2015 Copies 0 300 600 900 1200 1600 2000 2400 2800 3200 3600 4000 4400 4800 5200 5600 6000 6400 6800 7600 1100 400.perlbench 192 224 1040 675 401.bzip2 256 224 666 875 403.gcc 160 224 720 1380 429.mcf 128 224 1160 1190 445.gobmk 256 224 1120 854 456.hmmer 96 224 813 1020 458.sjeng 192 224 988 7550 462.libquantum 416 224 7330 1190 464.h264ref 256 224 1150 956 471.omnetpp 255 224 885 986 473.astar 416 224 862 1180 483.xalancbmk 256 224 1140 SPECint_rate_base2006 = 1120 SPECint_rate2006 = 1200 Hardware Software CPU Name: SPARC M7 Operating System: Oracle Solaris 11.3 CPU Characteristics: Compiler: C/C++/Fortran: Version 12.4 of Oracle Solaris CPU MHz: 4133 Studio, FPU: Integrated 4/15 Patch Set CPU(s) enabled: 32 cores, 1 chip, 32 cores/chip, 8 threads/core Auto Parallel: No CPU(s) orderable: 1 chip File System: zfs Primary Cache: 16 KB I + 16 KB D on chip per core System State: Default Secondary Cache: 2 MB I on chip per chip (256 KB / 4 cores); Base Pointers: 32-bit 4 MB D on chip per chip (256 KB / 2 cores) Peak Pointers: 32-bit L3 Cache: 64 MB I+D on chip per chip (8 MB / 4 cores) Other Software: None Other Cache: None Memory: 512 GB (16 x 32 GB 4Rx4 PC4-2133P-L) Disk Subsystem: 732 GB, 4 x 400 GB SAS SSD -

Performance Scalability of N-Tier Application in Virtualized Cloud Environments: Two Case Studies in Vertical and Horizontal Scaling

PERFORMANCE SCALABILITY OF N-TIER APPLICATION IN VIRTUALIZED CLOUD ENVIRONMENTS: TWO CASE STUDIES IN VERTICAL AND HORIZONTAL SCALING A Thesis Presented to The Academic Faculty by Junhee Park In Partial Fulfillment of the Requirements for the Degree Doctor of Philosophy in the School of Computer Science Georgia Institute of Technology May 2016 Copyright c 2016 by Junhee Park PERFORMANCE SCALABILITY OF N-TIER APPLICATION IN VIRTUALIZED CLOUD ENVIRONMENTS: TWO CASE STUDIES IN VERTICAL AND HORIZONTAL SCALING Approved by: Professor Dr. Calton Pu, Advisor Professor Dr. Shamkant B. Navathe School of Computer Science School of Computer Science Georgia Institute of Technology Georgia Institute of Technology Professor Dr. Ling Liu Professor Dr. Edward R. Omiecinski School of Computer Science School of Computer Science Georgia Institute of Technology Georgia Institute of Technology Professor Dr. Qingyang Wang Date Approved: December 11, 2015 School of Electrical Engineering and Computer Science Louisiana State University To my parents, my wife, and my daughter ACKNOWLEDGEMENTS My Ph.D. journey was a precious roller-coaster ride that uniquely accelerated my personal growth. I am extremely grateful to anyone who has supported and walked down this path with me. I want to apologize in advance in case I miss anyone. First and foremost, I am extremely thankful and lucky to work with my advisor, Dr. Calton Pu who guided me throughout my Masters and Ph.D. programs. He first gave me the opportunity to start work in research environment when I was a fresh Master student and gave me an admission to one of the best computer science Ph.D. -

VALIDATED PRODUCTS LIST 1992 No.2

NISTIR 4820 (Supersedes NISTIR 4739) VALIDATED PRODUCTS LIST 1992 No. 2 Programming Languages Database Language SQL Graphics GOSIP POSIX Security Judy B. Kailey U.S. DEPARTMENT OF COMMERCE Technology Administration National Institute of Standards and Technoiogy Computer Systems Laboratory Software Standards Validation Group Gaithersburg, MD 20899 “QC — TOO .U56 4820 NIST 1992 N I STIR 482 (Supersedes NISTIR 4739) ' O VALIDATED PRODUCTS LIST 1992 No.2 Programming Languages Database Language SQL Graphics GOSIP POSiX Security Judy B. Kailey U.S. DEPARTMENT OF COMMERCE Technology Administration National Institute of Standards and Technology Computer Systems Laboratory Software Standards Validation Group Gaithersburg, MD 20899 April 1992 (Supersedes January 1992 Issue) U.S. DEPARTMENT OF COMMERCE Barbara Hackman Franklin, Secretary TECHNOLOGY ADMINISTRATION Robert M. White, Linder Secretary for Technology NATIONAL INSTITUTE OF STANDARDS AND TECHNOLOGY John W. Lyons, Director FOREWORD The Validated Products List (formerly called the Validated Processor List) is a collection of registers describing implementations of Federal Information Processing Standards (FTPS) that have been validated for conformance to FTPS. The Validated Products List also contains information about the organizations, test methods and procedures that support the validation programs for the FTPS identified in this document. The Validated Products List is updated quarterly. lii ' " M'- v^,.^.:v;/i'fr•i•:‘fey^^?4 .•:, .V.' ini‘,r^f' isfc'^feV VihV'’, !iV.V I t: 4 #> vm- 'at' Mil! .M'? Of'S8r'»'' SIVS 'V-tv. ','V ..(Vi feiii yA ' r' ' '4: ,. = r,: 0 r^'-' ".V.^l , ;‘ • » JjTT ;»»!£ ... •':«5(4i ' |i“' T" •.(''’'ia\.':l'"' f*l, r-’i"' i'-" '.:"::'.".vi ';... '('?. .;r'H vl. '' ' " .. i.” -' f-j'" , '' '(^ • '.v;.» . -

Programming Languages, Database Language SQL, Graphics, GOSIP

b fl ^ b 2 5 I AH1Q3 NISTIR 4951 (Supersedes NISTIR 4871) VALIDATED PRODUCTS LIST 1992 No. 4 PROGRAMMING LANGUAGES DATABASE LANGUAGE SQL GRAPHICS Judy B. Kailey GOSIP Editor POSIX COMPUTER SECURITY U.S. DEPARTMENT OF COMMERCE Technology Administration National Institute of Standards and Technology Computer Systems Laboratory Software Standards Validation Group Gaithersburg, MD 20899 100 . U56 4951 1992 NIST (Supersedes NISTIR 4871) VALIDATED PRODUCTS LIST 1992 No. 4 PROGRAMMING LANGUAGES DATABASE LANGUAGE SQL GRAPHICS Judy B. Kailey GOSIP Editor POSIX COMPUTER SECURITY U.S. DEPARTMENT OF COMMERCE Technology Administration National Institute of Standards and Technology Computer Systems Laboratory Software Standards Validation Group Gaithersburg, MD 20899 October 1992 (Supersedes July 1992 issue) U.S. DEPARTMENT OF COMMERCE Barbara Hackman Franklin, Secretary TECHNOLOGY ADMINISTRATION Robert M. White, Under Secretary for Technology NATIONAL INSTITUTE OF STANDARDS AND TECHNOLOGY John W. Lyons, Director - ;,’; '^'i -; _ ^ '’>.£. ; '':k ' ' • ; <tr-f'' "i>: •v'k' I m''M - i*i^ a,)»# ' :,• 4 ie®®;'’’,' ;SJ' v: . I 'i^’i i 'OS -.! FOREWORD The Validated Products List is a collection of registers describing implementations of Federal Information Processing Standards (FTPS) that have been validated for conformance to FTPS. The Validated Products List also contains information about the organizations, test methods and procedures that support the validation programs for the FTPS identified in this document. The Validated Products List is updated quarterly. iii ' ;r,<R^v a;-' i-'r^ . /' ^'^uffoo'*^ ''vCJIt<*bjteV sdT : Jr /' i^iL'.JO 'j,-/5l ':. ;urj ->i: • ' *?> ^r:nT^^'Ad JlSid Uawfoof^ fa«Di)itbiI»V ,, ‘ isbt^u ri il .r^^iytsrH n 'V TABLE OF CONTENTS 1.