Memory Centric Characterization and Analysis of SPEC CPU2017 Suite

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

Overview of the SPEC Benchmarks

Chapter 9: Overview of the SPEC Benchmarks
Kaivalya M. Dixit, IBM Corporation

“The reputation of current benchmarketing claims regarding system performance is on par with the promises made by politicians during elections.”

Standard Performance Evaluation Corporation (SPEC) was founded in October, 1988, by Apollo, Hewlett-Packard, MIPS Computer Systems and SUN Microsystems in cooperation with E. E. Times. SPEC is a nonprofit consortium of 22 major computer vendors whose common goals are “to provide the industry with a realistic yardstick to measure the performance of advanced computer systems” and to educate consumers about the performance of vendors’ products. SPEC creates, maintains, distributes, and endorses a standardized set of application-oriented programs to be used as benchmarks.

9.1 Historical Perspective

Traditional benchmarks have failed to characterize the system performance of modern computer systems. Some of those benchmarks measure component-level performance, and some of the measurements are routinely published as system performance. Historically, vendors have characterized the performances of their systems in a variety of confusing metrics. In part, the confusion is due to a lack of credible performance information, agreement, and leadership among competing vendors. Many vendors characterize system performance in millions of instructions per second (MIPS) and millions of floating-point operations per second (MFLOPS). All instructions, however, are not equal. Since CISC machine instructions usually accomplish a lot more than those of RISC machines, comparing the instructions of a CISC machine and a RISC machine is similar to comparing Latin and Greek.

9.1.1 Simple CPU Benchmarks

Truth in benchmarking is an oxymoron because vendors use benchmarks for marketing purposes.

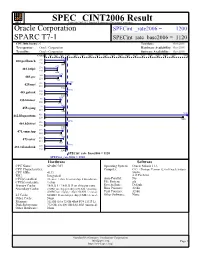

Oracle Corporation: SPARC T7-1

SPEC CINT2006 Result. Copyright 2006-2015 Standard Performance Evaluation Corporation

Oracle Corporation, SPARC T7-1. SPECint_rate2006 = 1200; SPECint_rate_base2006 = 1120

CPU2006 license: 6; Test date: Oct-2015; Test sponsor: Oracle Corporation; Tested by: Oracle Corporation; Hardware Availability: Oct-2015; Software Availability: Oct-2015

| Benchmark | Peak copies | Peak rate | Base copies | Base rate |
|---|---|---|---|---|
| 400.perlbench | 192 | 1100 | 224 | 1040 |
| 401.bzip2 | 256 | 675 | 224 | 666 |
| 403.gcc | 160 | 875 | 224 | 720 |
| 429.mcf | 128 | 1380 | 224 | 1160 |
| 445.gobmk | 256 | 1190 | 224 | 1120 |
| 456.hmmer | 96 | 854 | 224 | 813 |
| 458.sjeng | 192 | 1020 | 224 | 988 |
| 462.libquantum | 416 | 7550 | 224 | 7330 |
| 464.h264ref | 256 | 1190 | 224 | 1150 |
| 471.omnetpp | 255 | 956 | 224 | 885 |
| 473.astar | 416 | 986 | 224 | 862 |
| 483.xalancbmk | 256 | 1180 | 224 | 1140 |

Hardware. CPU Name: SPARC M7; CPU MHz: 4133; FPU: Integrated; CPU(s) enabled: 32 cores, 1 chip, 32 cores/chip, 8 threads/core; CPU(s) orderable: 1 chip; Primary Cache: 16 KB I + 16 KB D on chip per core; Secondary Cache: 2 MB I on chip per chip (256 KB / 4 cores), 4 MB D on chip per chip (256 KB / 2 cores); L3 Cache: 64 MB I+D on chip per chip (8 MB / 4 cores); Other Cache: None; Memory: 512 GB (16 x 32 GB 4Rx4 PC4-2133P-L); Disk Subsystem: 732 GB, 4 x 400 GB SAS SSD

Software. Operating System: Oracle Solaris 11.3; Compiler: C/C++/Fortran: Version 12.4 of Oracle Solaris Studio, 4/15 Patch Set; Auto Parallel: No; File System: zfs; System State: Default; Base Pointers: 32-bit; Peak Pointers: 32-bit; Other Software: None
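The overall SPEC CPU2006 metrics are geometric means of the per-benchmark results, so the headline numbers in the SPARC T7-1 disclosure above can be cross-checked. The sketch below is only an illustration: it assumes that, in the flattened text, the number before each benchmark name is the peak result and the number after the two copy counts is the base result. The names `RESULTS` and `geometric_mean` are introduced here and are not part of any SPEC tooling.

```python
import math

# Per-benchmark rate results copied from the SPARC T7-1 disclosure above.
# Assumption: (peak, base) ordering as described in the lead-in.
RESULTS = {
    "400.perlbench": (1100, 1040),
    "401.bzip2": (675, 666),
    "403.gcc": (875, 720),
    "429.mcf": (1380, 1160),
    "445.gobmk": (1190, 1120),
    "456.hmmer": (854, 813),
    "458.sjeng": (1020, 988),
    "462.libquantum": (7550, 7330),
    "464.h264ref": (1190, 1150),
    "471.omnetpp": (956, 885),
    "473.astar": (986, 862),
    "483.xalancbmk": (1180, 1140),
}


def geometric_mean(values):
    """Return the geometric mean of a sequence of positive numbers."""
    values = list(values)
    return math.exp(sum(math.log(v) for v in values) / len(values))


peak = geometric_mean(p for p, _ in RESULTS.values())
base = geometric_mean(b for _, b in RESULTS.values())

# The disclosure reports SPECint_rate2006 = 1200 and
# SPECint_rate_base2006 = 1120 (rounded to three significant digits).
print(f"peak geometric mean: {peak:.0f}")
print(f"base geometric mean: {base:.0f}")
```

Under that reading, both computed means land within about half a percent of the published values, which supports the peak/base interpretation of the flattened columns. A single outlier such as 462.libquantum moves a geometric mean far less than it would move an arithmetic mean.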

PowerEdge R940 (Intel Xeon Gold 5122, 3.60 GHz): SPECint_rate_base2006 = 1080, CPU2006 license: 55, Test date: Jun-2017, Test sponsor: Dell Inc.

SPEC CINT2006 Result. Copyright 2006-2017 Standard Performance Evaluation Corporation

Dell Inc., PowerEdge R940 (Intel Xeon Gold 5122, 3.60 GHz). SPECint_rate2006 = 1150; SPECint_rate_base2006 = 1080

CPU2006 license: 55; Test date: Jun-2017; Test sponsor: Dell Inc.; Tested by: Dell Inc.; Hardware Availability: Jul-2017; Software Availability: Nov-2016

Rows for which the disclosure lists a single copy count and a single result show that value in both the peak and base columns.

| Benchmark | Peak copies | Peak rate | Base copies | Base rate |
|---|---|---|---|---|
| 400.perlbench | 32 | 917 | 32 | 762 |
| 401.bzip2 | 32 | 521 | 32 | 487 |
| 403.gcc | 32 | 808 | 32 | 802 |
| 429.mcf | 32 | 1480 | 32 | 1480 |
| 445.gobmk | 32 | 624 | 32 | 624 |
| 456.hmmer | 32 | 2010 | 32 | 1530 |
| 458.sjeng | 32 | 710 | 32 | 658 |
| 462.libquantum | 32 | 17700 | 32 | 17700 |
| 464.h264ref | 32 | 1170 | 32 | 1130 |
| 471.omnetpp | 32 | 554 | 32 | 509 |
| 473.astar | 32 | 614 | 32 | 614 |
| 483.xalancbmk | 32 | 1430 | 32 | 1430 |

Hardware. CPU Name: Intel Xeon Gold 5122; CPU Characteristics: Intel Turbo Boost Technology up to 3.70 GHz; CPU MHz: 3600; FPU: Integrated; CPU(s) enabled: 16 cores, 4 chips, 4 cores/chip, 2 threads/core; CPU(s) orderable: 2,4 chip; Primary Cache: 32 KB I + 32 KB D on chip per core; Secondary Cache: 1 MB I+D on chip per core; L3 Cache: 16.5 MB I+D on chip per chip; Other Cache: None; Memory: 768 GB (48 x 16 GB 2Rx8 PC4-2666V-R); Disk Subsystem: 1 x 960 GB SATA SSD; Other Hardware: None

Software. Operating System: SUSE Linux Enterprise Server 12 SP2, 4.4.21-69-default; Compiler: C/C++: Version 17.0.3.191 of Intel C/C++ Compiler for Linux; Auto Parallel: Yes; File System: xfs; System State: Run level 3 (multi-user); Base Pointers: 32-bit; Peak Pointers: 32/64-bit; Other Software: Microquill SmartHeap V10.2

Chapter 3: Arithmetic for Computers

Chapter 3: Arithmetic for Computers (Rechnerstrukturen 182.092)

§ 3.1 Introduction

Arithmetic for Computers
Operations on integers: addition and subtraction; multiplication and division; dealing with overflow
Floating-point real numbers: representation and operations

MIPS Arithmetic Logic Unit (ALU)

[Figure: ALU with 32-bit inputs A and B, a 4-bit operation select m, a 32-bit result output, and 1-bit zero and ovf outputs]

Must support the arithmetic/logic operations of the ISA:
add, addi, addiu, addu
sub, subu
mult, multu, div, divu
sqrt
and, andi, nor, or, ori, xor, xori
beq, bne, slt, slti, sltiu, sltu

With special handling for:
sign extend: addi, addiu, slti, sltiu
zero extend: andi, ori, xori
overflow detection: add, addi, sub

(Simplified) 1-bit MIPS ALU: AND, OR, ADD, SLT

Final 32-bit ALU

Performance issues

Critical path of n-bit ripple-carry adder is n*CP

[Figure: four chained 1-bit ALUs; stage i takes A_i, B_i and CarryIn_i, produces result_i, and passes CarryOut_i to CarryIn_(i+1)]

Design trick: throw hardware at it (Carry Lookahead)

Carry Lookahead Logic (4 bit adder): LS 283

§ 3.2 Addition and Subtraction

Integer Addition
Example: 7 + 6
Overflow if result out of range
Adding +ve and –ve operands, no overflow
Adding two +ve operands
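The slides above contrast a ripple-carry adder, whose critical path grows with the number of bits, with carry lookahead, and mention overflow detection for signed addition. The following is a minimal bit-level sketch of both ideas, not the circuit from the slides: the function names, the `width` parameter, and the choice of XOR for the propagate signal are assumptions made for illustration.

```python
def ripple_carry_add(a, b, width=32):
    """Add two bit patterns one bit at a time, like a chain of 1-bit ALUs.

    Returns (result, carry_out, signed_overflow). Each stage must wait for
    the carry from the stage below it, which is why the critical path of
    the hardware version grows linearly with `width`.
    """
    carry = 0
    carry_into_msb = 0
    result = 0
    for i in range(width):
        if i == width - 1:
            carry_into_msb = carry
        ai = (a >> i) & 1
        bi = (b >> i) & 1
        result |= (ai ^ bi ^ carry) << i
        carry = (ai & bi) | (carry & (ai ^ bi))
    # Two's-complement overflow: carry into the sign bit differs from carry out.
    overflow = bool(carry_into_msb ^ carry)
    return result, carry, overflow


def carry_lookahead_add4(a, b, c0=0):
    """4-bit adder whose carries are computed directly from generate (g)
    and propagate (p) signals instead of rippling through the stages."""
    g = [((a >> i) & 1) & ((b >> i) & 1) for i in range(4)]
    p = [((a >> i) & 1) ^ ((b >> i) & 1) for i in range(4)]
    c1 = g[0] | (p[0] & c0)
    c2 = g[1] | (p[1] & g[0]) | (p[1] & p[0] & c0)
    c3 = g[2] | (p[2] & g[1]) | (p[2] & p[1] & g[0]) | (p[2] & p[1] & p[0] & c0)
    c4 = (g[3] | (p[3] & g[2]) | (p[3] & p[2] & g[1])
          | (p[3] & p[2] & p[1] & g[0]) | (p[3] & p[2] & p[1] & p[0] & c0))
    carries = [c0, c1, c2, c3]
    total = 0
    for i in range(4):
        total |= (p[i] ^ carries[i]) << i
    return total, c4


# The slide's example, 7 + 6: fine in 32 bits, but out of range for a
# 4-bit signed value (the pattern 1101 reads as -3 in two's complement).
print(ripple_carry_add(7, 6, width=32))
print(ripple_carry_add(7, 6, width=4))
print(carry_lookahead_add4(7, 6))
```

In software both functions do the same amount of work; the point of the lookahead form is that every carry is a flat two-level expression of the inputs, so in hardware all carries can settle in parallel at the cost of more gates.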

IBM Power Systems Performance Report Apr 13, 2021

IBM Power Performance Report: Power7 to Power10
September 8, 2021

Table of Contents
Introduction to Performance of IBM UNIX, IBM i, and Linux Operating System Servers
Section 1 – SPEC® CPU Benchmark Performance
Section 1a – Linux Multi-user SPEC® CPU2017 Performance (Power10)
Section 1b – Linux Multi-user SPEC® CPU2017 Performance (Power9)
Section 1c – AIX Multi-user SPEC® CPU2006 Performance (Power7, Power7+, Power8)
Section 1d – Linux Multi-user SPEC® CPU2006 Performance (Power7, Power7+, Power8)
Section 2 – AIX Multi-user Performance (rPerf)
Section 2a – AIX Multi-user Performance (Power8, Power9 and Power10)
Section 2b – AIX Multi-user Performance (Power9) in Non-default Processor Power Mode Setting
Section 2c – AIX Multi-user Performance (Power7 and Power7+)
Section 2d – AIX Capacity Upgrade on Demand Relative Performance Guidelines (Power8)
Section 2e – AIX Capacity Upgrade on Demand Relative Performance Guidelines (Power7 and Power7+)
Section 3 – CPW Benchmark Performance
Section 3a – CPW Benchmark Performance (Power8, Power9 and Power10)
Section 3b – CPW Benchmark Performance (Power7 and Power7+)
Section 4 – SPECjbb®2015 Benchmark Performance
Section 4a – SPECjbb®2015 Benchmark Performance (Power9)
Section 4b – SPECjbb®2015 Benchmark Performance (Power8)
Section 5 – AIX SAP® Standard Application Benchmark Performance
Section 5a – SAP® Sales and Distribution (SD) 2-Tier – AIX (Power7 to Power8)
Section 5b – SAP® Sales and Distribution (SD) 2-Tier – Linux on Power (Power7 to Power7+)

ASUSTeK Computer Inc.: Asus P6T

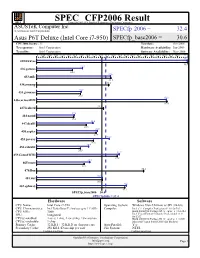

SPEC CFP2006 Result. Copyright 2006-2014 Standard Performance Evaluation Corporation

ASUSTeK Computer Inc. (Test Sponsor: Intel Corporation), Asus P6T Deluxe (Intel Core i7-950). SPECfp2006 = 32.4; SPECfp_base2006 = 30.6

CPU2006 license: 13; Test date: Oct-2008; Test sponsor: Intel Corporation; Tested by: Intel Corporation; Hardware Availability: Jun-2009; Software Availability: Nov-2008

Rows for which the disclosure lists a single result show that value in both columns.

| Benchmark | Peak | Base |
|---|---|---|
| 410.bwaves | 70.7 | 70.8 |
| 416.gamess | 21.5 | 16.8 |
| 433.milc | 34.6 | 34.8 |
| 434.zeusmp | 33.8 | 33.8 |
| 435.gromacs | 20.9 | 20.6 |
| 436.cactusADM | 60.8 | 61.0 |
| 437.leslie3d | 31.3 | 31.3 |
| 444.namd | 17.6 | 17.4 |
| 447.dealII | 26.4 | 23.3 |
| 450.soplex | 28.5 | 27.9 |
| 453.povray | 34.0 | 26.6 |
| 454.calculix | 24.4 | 20.5 |
| 459.GemsFDTD | 38.7 | 37.8 |
| 465.tonto | 24.9 | 22.3 |
| 470.lbm | 50.2 | 50.2 |
| 481.wrf | 30.9 | 30.9 |
| 482.sphinx3 | 42.1 | 41.3 |

Hardware. CPU Name: Intel Core i7-950; CPU Characteristics: Intel Turbo Boost Technology up to 3.33 GHz; CPU MHz: 3066; FPU: Integrated; CPU(s) enabled: 4 cores, 1 chip, 4 cores/chip, 2 threads/core; CPU(s) orderable: 1 chip; Primary Cache: 32 KB I + 32 KB D on chip per core; Secondary Cache: 256 KB I+D on chip per core

Software. Operating System: Windows Vista Ultimate w/ SP1 (64-bit); Compiler: Intel C++ Compiler Professional 11.0 for IA32, Build 20080930, Package ID: w_cproc_p_11.0.054; Intel Visual Fortran Compiler Professional 11.0 for IA32, Build 20080930, Package ID: w_cprof_p_11.0.054; Microsoft Visual Studio 2008 (for libraries); Auto Parallel: Yes; File System: NTFS

Modeling and Analyzing CPU Power and Performance: Metrics, Methods, and Abstractions

Modeling and Analyzing CPU Power and Performance: Metrics, Methods, and Abstractions
Margaret Martonosi, David Brooks, Pradip Bose

Moore’s Law & Power Dissipation...
Moore’s Law:
❚ The Good News: 2X Transistor counts every 18 months
❚ The Bad News: To get the performance improvements we’re accustomed to, CPU Power consumption will increase exponentially too...
(Graphs courtesy of Fred Pollack, Intel)

Why worry about power dissipation?
Battery life
Thermal issues: affect cooling, packaging, reliability, timing
Environment

Hitting the wall…
❚ Battery technology
❙ Linear improvements, nowhere near the exponential power increases we’ve seen
❚ Cooling techniques
❙ Air-cooled is reaching limits
❙ Fans often undesirable (noise, weight, expense)
❙ $1 per chip per Watt when operating in the >40W realm
❙ Water-cooled ?!?
❚ Environment
❙ US EPA: 10% of current electricity usage in US is directly due to desktop computers
❙ Increasing fast. And doesn’t count embedded systems, Printers, UPS backup?
❚ Past:
❙ Power important for laptops, cell phones
❚ Present:
❙ Power a Critical, Universal design constraint even for very high-end chips
❚ Circuits and process scaling can no longer solve all power problems.
❙ SYSTEMS must also be power-aware
❙ Architecture, OS, compilers

Power: The Basics
❚ Dynamic power vs. Static power vs. short-circuit power
❙ “switching” power
❙ “leakage” power
❙ Dynamic power dominates, but static power increasing in importance
❙ Trends in each
❚ Static power: steady, per-cycle energy cost
❚ Dynamic power: power dissipation due to capacitance charging at transitions from 0->1 and 1->0
❚ Short-circuit power: power due to brief short-circuit current during transitions.
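The excerpt above describes dynamic power only qualitatively, as the cost of charging capacitance at 0->1 and 1->0 transitions. The usual first-order CMOS model makes that dependence explicit. The formula is not stated in the excerpt, and the symbols below are introduced here for illustration: $\alpha$ is the activity factor (the fraction of the capacitance that switches per cycle), $C$ the switched capacitance, $V_{dd}$ the supply voltage, and $f$ the clock frequency.

$$
P_{\text{dynamic}} \approx \alpha \, C \, V_{dd}^{2} \, f
$$

Under this model, lowering the supply voltage pays off quadratically while lowering frequency alone pays off only linearly, which is one reason power-aware design is often discussed in terms of voltage scaling.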

Continuous Profiling: Where Have All the Cycles Gone?

Continuous Profiling: Where Have All the Cycles Gone?

JENNIFER M. ANDERSON, LANCE M. BERC, JEFFREY DEAN, SANJAY GHEMAWAT, MONIKA R. HENZINGER, SHUN-TAK A. LEUNG, RICHARD L. SITES, MARK T. VANDEVOORDE, CARL A. WALDSPURGER, and WILLIAM E. WEIHL
Digital Equipment Corporation

This article describes the Digital Continuous Profiling Infrastructure, a sampling-based profiling system designed to run continuously on production systems. The system supports multiprocessors, works on unmodified executables, and collects profiles for entire systems, including user programs, shared libraries, and the operating system kernel. Samples are collected at a high rate (over 5200 samples/sec. per 333MHz processor), yet with low overhead (1–3% slowdown for most workloads). Analysis tools supplied with the profiling system use the sample data to produce a precise and accurate accounting, down to the level of pipeline stalls incurred by individual instructions, of where time is being spent. When instructions incur stalls, the tools identify possible reasons, such as cache misses, branch mispredictions, and functional unit contention. The fine-grained instruction-level analysis guides users and automated optimizers to the causes of performance problems and provides important insights for fixing them.

Categories and Subject Descriptors: C.4 [Computer Systems Organization]: Performance of Systems; D.2.2 [Software Engineering]: Tools and Techniques—profiling tools; D.2.6 [Programming Languages]: Programming Environments—performance monitoring; D.4 [Operating Systems]: General; D.4.7 [Operating Systems]: Organization and Design; D.4.8 [Operating Systems]: Performance

General Terms: Performance

Additional Key Words and Phrases: Profiling, performance understanding, program analysis, performance-monitoring hardware

An earlier version of this article appeared at the 16th ACM Symposium on Operating System Principles (SOSP), St.

Specfp Benchmark Disclosure

SPEC CFP2006 Result. Copyright 2006-2016 Standard Performance Evaluation Corporation

Cisco Systems, Cisco UCS C220 M4 (Intel Xeon E5-2667 v4, 3.20 GHz). SPECfp2006 = Not Run; SPECfp_base2006 = 125

CPU2006 license: 9019; Test date: Mar-2016; Test sponsor: Cisco Systems; Tested by: Cisco Systems; Hardware Availability: Mar-2016; Software Availability: Dec-2015

| Benchmark | Base |
|---|---|
| 410.bwaves | 572 |
| 416.gamess | 43.1 |
| 433.milc | 72.5 |
| 434.zeusmp | 225 |
| 435.gromacs | 63.4 |
| 436.cactusADM | 894 |
| 437.leslie3d | 427 |
| 444.namd | 31.6 |
| 447.dealII | 67.6 |
| 450.soplex | 48.3 |
| 453.povray | 62.2 |
| 454.calculix | 61.6 |
| 459.GemsFDTD | 242 |
| 465.tonto | 52.1 |
| 470.lbm | 799 |
| 481.wrf | 120 |
| 482.sphinx3 | 91.6 |

Hardware. CPU Name: Intel Xeon E5-2667 v4; CPU Characteristics: Intel Turbo Boost Technology up to 3.60 GHz; CPU MHz: 3200; FPU: Integrated; CPU(s) enabled: 16 cores, 2 chips, 8 cores/chip; CPU(s) orderable: 1,2 chips; Primary Cache: 32 KB I + 32 KB D on chip per core; Secondary Cache: 256 MB I+D on chip per core

Software. Operating System: SUSE Linux Enterprise Server 12 SP1 (x86_64), 3.12.49-11-default; Compiler: C/C++: Version 16.0.0.101 of Intel C++ Studio XE for Linux; Fortran: Version 16.0.0.101 of Intel Fortran Studio XE for Linux; Auto Parallel: Yes; File System: xfs; System State: Run level 3 (multi-user)

QSCORES: Trading Dark Silicon for Scalable Energy Efficiency With

QSCORES: Trading Dark Silicon for Scalable Energy Efficiency with Quasi-Specific Cores

Ganesh Venkatesh+, Jack Sampson, Nathan Goulding-Hotta, Sravanthi Kota Venkata+, Michael Bedford Taylor and Steven Swanson
http://greendroid.org
Department of Computer Science and Engineering, University of California, San Diego

ABSTRACT

Transistor density continues to increase exponentially, but power dissipation per transistor is improving only slightly with each generation of Moore’s law. Given the constant chip-level power budgets, this exponentially decreases the percentage of transistors that can switch at full frequency with each technology generation. Hence, while the transistor budget continues to increase exponentially, the power budget has become the dominant limiting factor in processor design. In this regime, utilizing transistors to design specialized cores that optimize energy-per-computation becomes an effective approach to improve system performance.

To trade transistors for energy efficiency in a scalable manner, we propose Quasi-specific Cores, or QSCORES, specialized processors capable of executing multiple general-purpose computations while providing an order of magnitude more energy efficiency than a general-purpose processor. The QSCORE design flow is based on the insight that similar code patterns exist within and across appli-

General Terms: Design, Experimentation, Measurement

Keywords: QSCORE, Conservation Core, Merging, Dark Silicon, Utilization Wall, Heterogeneous Many-Core, Specialization

1. INTRODUCTION

Although transistor density continues to scale, nearly constant per-transistor power and fixed chip-level power budget place tight constraints on how much of a chip can be active at full frequency at one time. Hence, as transistor density increases with each process generation, so does the fraction of the chip that must be under-clocked or under-utilized because of power concerns [27]. Researchers have termed these passive expanses of silicon area dark silicon [14, 11, 15, 17, 21].

Store-Load-Branch (SLB) Predictor: A Compiler Assisted Branch Prediction for Data Dependent Branches

Store-Load-Branch (SLB) Predictor: A Compiler Assisted Branch Prediction for Data Dependent Branches

M. Umar Farooq, Khubaib, Lizy K. John
Department of Electrical and Computer Engineering, The University of Texas at Austin

Abstract

Data-dependent branches constitute single biggest source of remaining branch mispredictions. Typically, data-dependent branches are associated with program data structures, and follow store-load-branch execution sequence. A set of memory locations is written at an earlier point in a program. Later, these locations are read, and used for evaluating branch condition. Branch outcome depends on data values stored in data structure, which, typically do not have repeatable pattern. Therefore, in addition to history-based dynamic predictor, we need a different kind of predictor for handling such branches.

This paper presents Store-Load-Branch (SLB) predictor; a compiler-assisted dynamic branch prediction scheme for data-dependent direct and indirect branches. For every data-dependent branch, compiler identifies store instructions that modify the data structure associated with the branch.

This work is based on the following observation: Hard-to-predict data-dependent branches are commonly associated with program data structures such as arrays, linked lists, trees etc., and follow store-load-branch execution sequence similar to one shown in listing 1. A set of memory locations is written while building and updating the data structure (line 2, listing 1). During data structure traversal, these locations are read, and used for evaluating branch condition (line 7, listing 1).

[Figure: mispredictions per 1K instructions for the Gshare, YAGS, BiMode, and TAGE predictors]

Benchmarking-HOWTO.Pdf

Linux Benchmarking HOWTO
by André D. Balsa

Table of Contents
1. Introduction
2. Benchmarking procedures and interpretation of results
3. The Linux Benchmarking Toolkit (LBT)
4. Example run and results
5. Pitfalls and caveats of benchmarking
6. FAQ
7. Copyright, acknowledgments and miscellaneous

1. Introduction
1.1 Why is benchmarking so important?