(SLB) Predictor: a Compiler Assisted Branch Prediction for Data Dependent Branches

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

-

Memory Centric Characterization and Analysis of SPEC CPU2017 Suite

Session 11: Performance Analysis and Simulation. ICPE ’19, April 7–11, 2019, Mumbai, India. Memory Centric Characterization and Analysis of SPEC CPU2017 Suite. Sarabjeet Singh, Manu Awasthi, Ashoka University. ABSTRACT In this paper, we provide a comprehensive, memory-centric characterization of the SPEC CPU2017 benchmark suite, using a number of mechanisms including dynamic binary instrumentation, measurements on native hardware using hardware performance counters and operating system based tools. We present a number of results including working set sizes, memory capacity consumption and memory bandwidth utilization of various workloads. Our experiments reveal that, on the x86_64 ISA, SPEC CPU2017 workloads execute a significant number of memory related instructions, with approximately 50% of all dynamic instructions requiring memory accesses. We also show that there is a large variation in the memory footprint and bandwidth utilization ... These benchmarks have become the standard for any researcher or commercial entity wishing to benchmark their architecture or for exploring new designs. The latest offering of SPEC CPU suite, SPEC CPU2017, was released in June 2017 [8]. SPEC CPU2017 retains a number of benchmarks from previous iterations but has also added many new ones to reflect the changing nature of applications. Some recent studies [21, 24] have already started characterizing the behavior of SPEC CPU2017 applications, looking for potential optimizations to system architectures. In recent years the memory hierarchy, from the caches, all the way to main memory, has become a first class citizen of computer system design. -

MIPS® Architecture for Programmers Volume I-B: Introduction to the microMIPS32™ Architecture, Revision 5.03

MIPS® Architecture For Programmers Volume I-B: Introduction to the microMIPS32™ Architecture Document Number: MD00741 Revision 5.03 Sept. 9, 2013 Unpublished rights (if any) reserved under the copyright laws of the United States of America and other countries. This document contains information that is proprietary to MIPS Tech, LLC, a Wave Computing company (“MIPS”) and MIPS’ affiliates as applicable. Any copying, reproducing, modifying or use of this information (in whole or in part) that is not expressly permitted in writing by MIPS or MIPS’ affiliates as applicable or an authorized third party is strictly prohibited. At a minimum, this information is protected under unfair competition and copyright laws. Violations thereof may result in criminal penalties and fines. Any document provided in source format (i.e., in a modifiable form such as in FrameMaker or Microsoft Word format) is subject to use and distribution restrictions that are independent of and supplemental to any and all confidentiality restrictions. UNDER NO CIRCUMSTANCES MAY A DOCUMENT PROVIDED IN SOURCE FORMAT BE DISTRIBUTED TO A THIRD PARTY IN SOURCE FORMAT WITHOUT THE EXPRESS WRITTEN PERMISSION OF MIPS (AND MIPS’ AFFILIATES AS APPLICABLE). MIPS (and MIPS’ affiliates as applicable) reserve the right to change the information contained in this document to improve function, design or otherwise. MIPS and MIPS’ affiliates do not assume any liability arising out of the application or use of this information, or of any error or omission in such information. Any warranties, whether express, statutory, implied or otherwise, including but not limited to the implied warranties of merchantability or fitness for a particular purpose, are excluded. Except as expressly provided in any written license agreement from MIPS or an authorized third party, the furnishing of this document does not give recipient any license to any intellectual property rights, including any patent rights, that cover the information in this document. -

Overview of the SPEC Benchmarks

9 Overview of the SPEC Benchmarks. Kaivalya M. Dixit, IBM Corporation. “The reputation of current benchmarketing claims regarding system performance is on par with the promises made by politicians during elections.” Standard Performance Evaluation Corporation (SPEC) was founded in October, 1988, by Apollo, Hewlett-Packard, MIPS Computer Systems and SUN Microsystems in cooperation with E. E. Times. SPEC is a nonprofit consortium of 22 major computer vendors whose common goals are “to provide the industry with a realistic yardstick to measure the performance of advanced computer systems” and to educate consumers about the performance of vendors’ products. SPEC creates, maintains, distributes, and endorses a standardized set of application-oriented programs to be used as benchmarks. 9.1 Historical Perspective Traditional benchmarks have failed to characterize the system performance of modern computer systems. Some of those benchmarks measure component-level performance, and some of the measurements are routinely published as system performance. Historically, vendors have characterized the performances of their systems in a variety of confusing metrics. In part, the confusion is due to a lack of credible performance information, agreement, and leadership among competing vendors. Many vendors characterize system performance in millions of instructions per second (MIPS) and millions of floating-point operations per second (MFLOPS). All instructions, however, are not equal. Since CISC machine instructions usually accomplish a lot more than those of RISC machines, comparing the instructions of a CISC machine and a RISC machine is similar to comparing Latin and Greek. 9.1.1 Simple CPU Benchmarks Truth in benchmarking is an oxymoron because vendors use benchmarks for marketing purposes. -

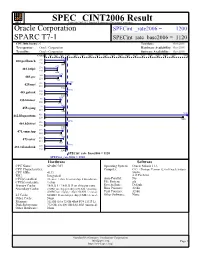

Oracle Corporation: SPARC T7-1

SPEC CINT2006 Result. Copyright 2006-2015 Standard Performance Evaluation Corporation. Oracle Corporation, SPARC T7-1: SPECint_rate2006 = 1200, SPECint_rate_base2006 = 1120. CPU2006 license: 6. Test sponsor: Oracle Corporation. Tested by: Oracle Corporation. Test date: Oct-2015. Hardware Availability: Oct-2015. Software Availability: Oct-2015.

| Benchmark | Peak copies | Peak rate | Base copies | Base rate |
|---|---|---|---|---|
| 400.perlbench | 192 | 1100 | 224 | 1040 |
| 401.bzip2 | 256 | 675 | 224 | 666 |
| 403.gcc | 160 | 875 | 224 | 720 |
| 429.mcf | 128 | 1380 | 224 | 1160 |
| 445.gobmk | 256 | 1190 | 224 | 1120 |
| 456.hmmer | 96 | 854 | 224 | 813 |
| 458.sjeng | 192 | 1020 | 224 | 988 |
| 462.libquantum | 416 | 7550 | 224 | 7330 |
| 464.h264ref | 256 | 1190 | 224 | 1150 |
| 471.omnetpp | 255 | 956 | 224 | 885 |
| 473.astar | 416 | 986 | 224 | 862 |
| 483.xalancbmk | 256 | 1180 | 224 | 1140 |

Hardware. CPU Name: SPARC M7. CPU Characteristics: (none listed). CPU MHz: 4133. FPU: Integrated. CPU(s) enabled: 32 cores, 1 chip, 32 cores/chip, 8 threads/core. CPU(s) orderable: 1 chip. Primary Cache: 16 KB I + 16 KB D on chip per core. Secondary Cache: 2 MB I on chip per chip (256 KB / 4 cores); 4 MB D on chip per chip (256 KB / 2 cores). L3 Cache: 64 MB I+D on chip per chip (8 MB / 4 cores). Other Cache: None. Memory: 512 GB (16 x 32 GB 4Rx4 PC4-2133P-L). Disk Subsystem: 732 GB, 4 x 400 GB SAS SSD.

Software. Operating System: Oracle Solaris 11.3. Compiler: C/C++/Fortran: Version 12.4 of Oracle Solaris Studio, 4/15 Patch Set. Auto Parallel: No. File System: zfs. System State: Default. Base Pointers: 32-bit. Peak Pointers: 32-bit. Other Software: None. -
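As a rough sanity check on the result sheet above, the overall SPECint_rate_base2006 figure can be approximately reproduced as the geometric mean of the twelve per-benchmark base rates, which is how SPEC CPU2006 summary metrics are defined. The sketch below is illustrative only: it assumes that the last number in each benchmark group of the flattened chart is the base rate, and the names `BASE_RATES` and `geometric_mean` are made up for this example. Because the published per-benchmark rates are already rounded, the result should land near the reported 1120 rather than match it exactly.

```python
import math

# Base rates transcribed from the SPARC T7-1 result sheet above.
# Assumption: in the flattened chart, the last number in each
# benchmark group is the base rate.
BASE_RATES = {
    "400.perlbench": 1040,
    "401.bzip2": 666,
    "403.gcc": 720,
    "429.mcf": 1160,
    "445.gobmk": 1120,
    "456.hmmer": 813,
    "458.sjeng": 988,
    "462.libquantum": 7330,
    "464.h264ref": 1150,
    "471.omnetpp": 885,
    "473.astar": 862,
    "483.xalancbmk": 1140,
}


def geometric_mean(values):
    """Return the geometric mean of positive numbers via the mean of their logs."""
    values = list(values)
    return math.exp(math.fsum(math.log(v) for v in values) / len(values))


overall_base = geometric_mean(BASE_RATES.values())
print(f"geometric mean of base rates: {overall_base:.0f} (reported: 1120)")
```

The geometric mean is used instead of the arithmetic mean so that a single outlier such as 462.libquantum does not dominate the summary score.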

PowerEdge R940 (Intel Xeon Gold 5122, 3.60 GHz) SPECint_rate_base2006 = 1080 CPU2006 License: 55 Test Date: Jun-2017 Test Sponsor: Dell Inc

SPEC CINT2006 Result. Copyright 2006-2017 Standard Performance Evaluation Corporation. Dell Inc., PowerEdge R940 (Intel Xeon Gold 5122, 3.60 GHz): SPECint_rate2006 = 1150, SPECint_rate_base2006 = 1080. CPU2006 license: 55. Test sponsor: Dell Inc. Tested by: Dell Inc. Test date: Jun-2017. Hardware Availability: Jul-2017. Software Availability: Nov-2016.

| Benchmark | Peak copies | Peak rate | Base copies | Base rate |
|---|---|---|---|---|
| 400.perlbench | 32 | 917 | 32 | 762 |
| 401.bzip2 | 32 | 521 | 32 | 487 |
| 403.gcc | 32 | 808 | 32 | 802 |
| 429.mcf | 32 | 1480 | 32 | 1480 |
| 445.gobmk | 32 | 624 | 32 | 624 |
| 456.hmmer | 32 | 2010 | 32 | 1530 |
| 458.sjeng | 32 | 710 | 32 | 658 |
| 462.libquantum | 32 | 17700 | 32 | 17700 |
| 464.h264ref | 32 | 1170 | 32 | 1130 |
| 471.omnetpp | 32 | 554 | 32 | 509 |
| 473.astar | 32 | 614 | 32 | 614 |
| 483.xalancbmk | 32 | 1430 | 32 | 1430 |

Hardware. CPU Name: Intel Xeon Gold 5122. CPU Characteristics: Intel Turbo Boost Technology up to 3.70 GHz. CPU MHz: 3600. FPU: Integrated. CPU(s) enabled: 16 cores, 4 chips, 4 cores/chip, 2 threads/core. CPU(s) orderable: 2,4 chip. Primary Cache: 32 KB I + 32 KB D on chip per core. Secondary Cache: 1 MB I+D on chip per core. L3 Cache: 16.5 MB I+D on chip per chip. Other Cache: None. Memory: 768 GB (48 x 16 GB 2Rx8 PC4-2666V-R). Disk Subsystem: 1 x 960 GB SATA SSD. Other Hardware: None.

Software. Operating System: SUSE Linux Enterprise Server 12 SP2, 4.4.21-69-default. Compiler: C/C++: Version 17.0.3.191 of Intel C/C++ Compiler for Linux. Auto Parallel: Yes. File System: xfs. System State: Run level 3 (multi-user). Base Pointers: 32-bit. Peak Pointers: 32/64-bit. Other Software: Microquill SmartHeap V10.2. -

IBM Power Systems Performance Report Apr 13, 2021

IBM Power Performance Report, Power7 to Power10, September 8, 2021. Table of Contents: Introduction to Performance of IBM UNIX, IBM i, and Linux Operating System Servers; Section 1 – SPEC® CPU Benchmark Performance; Section 1a – Linux Multi-user SPEC® CPU2017 Performance (Power10); Section 1b – Linux Multi-user SPEC® CPU2017 Performance (Power9); Section 1c – AIX Multi-user SPEC® CPU2006 Performance (Power7, Power7+, Power8); Section 1d – Linux Multi-user SPEC® CPU2006 Performance (Power7, Power7+, Power8); Section 2 – AIX Multi-user Performance (rPerf); Section 2a – AIX Multi-user Performance (Power8, Power9 and Power10); Section 2b – AIX Multi-user Performance (Power9) in Non-default Processor Power Mode Setting; Section 2c – AIX Multi-user Performance (Power7 and Power7+); Section 2d – AIX Capacity Upgrade on Demand Relative Performance Guidelines (Power8); Section 2e – AIX Capacity Upgrade on Demand Relative Performance Guidelines (Power7 and Power7+); Section 3 – CPW Benchmark Performance; Section 3a – CPW Benchmark Performance (Power8, Power9 and Power10); Section 3b – CPW Benchmark Performance (Power7 and Power7+); Section 4 – SPECjbb®2015 Benchmark Performance; Section 4a – SPECjbb®2015 Benchmark Performance (Power9); Section 4b – SPECjbb®2015 Benchmark Performance (Power8); Section 5 – AIX SAP® Standard Application Benchmark Performance; Section 5a – SAP® Sales and Distribution (SD) 2-Tier – AIX (Power7 to Power8); Section 5b – SAP® Sales and Distribution (SD) 2-Tier – Linux on Power (Power7 to Power7+) -

MIPS32™ Architecture for Programmers Volume I: Introduction to the MIPS32™ Architecture

MIPS32™ Architecture For Programmers Volume I: Introduction to the MIPS32™ Architecture Document Number: MD00082 Revision 0.95 March 12, 2001 MIPS Technologies, Inc. 1225 Charleston Road Mountain View, CA 94043-1353 Copyright © 2001 MIPS Technologies, Inc. All rights reserved. Unpublished rights reserved under the Copyright Laws of the United States of America. This document contains information that is proprietary to MIPS Technologies, Inc. (“MIPS Technologies”). Any copying, modifying or use of this information (in whole or in part) which is not expressly permitted in writing by MIPS Technologies or a contractually-authorized third party is strictly prohibited. At a minimum, this information is protected under unfair competition laws and the expression of the information contained herein is protected under federal copyright laws. Violations thereof may result in criminal penalties and fines. MIPS Technologies or any contractually-authorized third party reserves the right to change the information contained in this document to improve function, design or otherwise. MIPS Technologies does not assume any liability arising out of the application or use of this information. Any license under patent rights or any other intellectual property rights owned by MIPS Technologies or third parties shall be conveyed by MIPS Technologies or any contractually-authorized third party in a separate license agreement between the parties. The information contained in this document constitutes one or more of the following: commercial computer software, commercial -

QSCORES: Trading Dark Silicon for Scalable Energy Efficiency With

QSCORES: Trading Dark Silicon for Scalable Energy Efficiency with Quasi-Specific Cores. Ganesh Venkatesh+, Jack Sampson, Nathan Goulding-Hotta, Sravanthi Kota Venkata+, Michael Bedford Taylor and Steven Swanson. http://greendroid.org Department of Computer Science and Engineering, University of California, San Diego. ABSTRACT Transistor density continues to increase exponentially, but power dissipation per transistor is improving only slightly with each generation of Moore’s law. Given the constant chip-level power budgets, this exponentially decreases the percentage of transistors that can switch at full frequency with each technology generation. Hence, while the transistor budget continues to increase exponentially, the power budget has become the dominant limiting factor in processor design. In this regime, utilizing transistors to design specialized cores that optimize energy-per-computation becomes an effective approach to improve system performance. To trade transistors for energy efficiency in a scalable manner, we propose Quasi-specific Cores, or QSCORES, specialized processors capable of executing multiple general-purpose computations while providing an order of magnitude more energy efficiency than a general-purpose processor. The QSCORE design flow is based on the insight that similar code patterns exist within and across appli- General Terms: Design, Experimentation, Measurement. Keywords: QSCORE, Conservation Core, Merging, Dark Silicon, Utilization Wall, Heterogeneous Many-Core, Specialization. 1. INTRODUCTION Although transistor density continues to scale, nearly constant per-transistor power and fixed chip-level power budget place tight constraints on how much of a chip can be active at full frequency at one time. Hence, as transistor density increases with each process generation, so does the fraction of the chip that must be under-clocked or under-utilized because of power concerns [27]. Researchers have termed these passive expanses of silicon area dark silicon [14, 11, 15, 17, 21]. -

MCS-8 Assembly Language Programming Manual PRELIMINARY EDITION

INTEL CORP. 3065 Bowers Avenue, Santa Clara, California 95051 • (408) 246-7501. MCS-8 Assembly Language Programming Manual, PRELIMINARY EDITION, November 1973. © Intel Corporation 1973. TABLE OF CONTENTS, 8008 PROGRAMMING MANUAL: 1.0 INTRODUCTION; 2.0 COMPUTER ORGANIZATION; 2.1 THE CENTRAL PROCESSING UNIT; 2.1.1 WORKING REGISTERS; 2.1.2 THE STACK; 2.1.3 ARITHMETIC AND LOGIC UNIT; 2.2 MEMORY; 2.3 COMPUTER PROGRAM REPRESENTATION IN MEMORY; 2.4 MEMORY ADDRESSING; 2.4.1 DIRECT ADDRESSING; 2.4.2 INDEXED ADDRESSING; 2.4.3 INDIRECT ADDRESSING; 2.4.4 IMMEDIATE ADDRESSING; 2.4.5 SUBROUTINES AND USE OF THE STACK FOR ADDRESSING; 2.5 CONDITION BITS; 2.5.1 CARRY BIT; 2.5.2 SIGN BIT; 2.5.3 ZERO BIT; 2.5.4 PARITY BIT; 3.0 THE 8008 INSTRUCTION SET; 3.1 ASSEMBLY LANGUAGE; 3.1.1 HOW ASSEMBLY LANGUAGE IS USED; 3.1.2 STATEMENT MNEMONICS; 3.1.3 LABEL FIELD; 3.1.4 CODE FIELD; 3.1.5 OPERAND FIELD; 3.1.6 COMMENT FIELD; 3.2 DATA STATEMENTS; 3.2.1 TWO'S COMPLEMENT; 3.2.2 DB DEFINE BYTE(S) OF DATA; 3.2.3 DW DEFINE WORD (TWO BYTES) OF DATA; 3.2.4 DS DEFINE STORAGE (BYTES); 3.3 SINGLE REGISTER INSTRUCTIONS; 3.3.1 INR INCREMENT REGISTER; 3.3.2 DCR DECREMENT REGISTER; 3.4 MOV INSTRUCTION; 3.5 REGISTER OR MEMORY TO ACCUMULATOR INSTRUCTIONS; 3.5.1 ADD ADD REGISTER OR MEMORY TO ACCUMULATOR; 3.5.2 ADC ADD REGISTER OR MEMORY TO ACCUMULATOR WITH CARRY; 3.5.3 SUB SUBTRACT REGISTER OR MEMORY -

Notes on Usage of the RX Family C/C++ Compiler V.1.00 Release 01 and Corrections in the User's Manual

R0C5RX00XSW01R-ERN100630 Notes on Usage of the RX Family C/C++ Compiler V.1.00 Release 01 and Corrections in the User's Manual There are notes on usage of the RX Family C/C++ compiler V.1.00 Release 01 and corrections to be made in the bundled user's manual (REJ10J2062-0100) as listed below. 1. Notes on Usage 1.1 Note on a Case of the C1804 Message [C/C++ Compiler] When the int_to_short option is specified and a file including a C standard header is compiled as C++ or EC++, the compiler may show the C1804(W) message. In this case, simply ignore the message because there are no problems. [NOTE] In compilation of C++ or EC++, the int_to_short option will be invalid. Data that are shared between C and C++ (EC++) program must be declared as the long or short type rather than as the int type. 1.2 Note on using MVTC or POPC instructions [Assembler] In the assembly language, the program counter (PC) cannot be specified for MVTC or POPC instructions. 1.3 Note on the delete Option for Linkage [Optimizing linkage editor] When a function symbol is removed by the delete option, its following function in the source program is not allowed to have a breakpoint at its function name on the editor in your debugging. If you would like to set a breakpoint at the function entrance, set the breakpoint via the Label window or at the prologue code of the function. 1.4 Notes of Paths Name and File Name [Optimizing linkage editor] In the specification of the optimizing linkage editor, the parentheses "(" and ")" signs for the path names and file names cannot be used because these signs are used for the option descriptions. -

Benchmarking-HOWTO.Pdf

Linux Benchmarking HOWTO, by André D. Balsa. Table of Contents: 1. Introduction; 2. Benchmarking procedures and interpretation of results; 3. The Linux Benchmarking Toolkit (LBT); 4. Example run and results; 5. Pitfalls and caveats of benchmarking; 6. FAQ; 7. Copyright, acknowledgments and miscellaneous. 1. Introduction: 1.1 Why is benchmarking so important? -

The Benefits of Hardware-Software Co-Design/Convergence for Large- Scale Enterprise Workloads

The Benefits of Hardware-Software Co-Design/Convergence for Large- Scale Enterprise Workloads Michael Palmeter Sr. Director Oracle Systems Engineering Copyright © 2016, Oracle and/or its affiliates. All rights reserved. | Required Benchmark Disclosure Statement Must be in SPARC S7 or M7 Presentations with Benchmark Results •Additional Info: http://blogs.oracle.com/bestperf •Copyright 2017, Oracle &/or its affiliates. All rights reserved. Oracle & Java are registered trademarks of Oracle &/or its affiliates. Other names may be trademarks of their respective owners •SPEC and the benchmark name SPECjEnterprise are registered trademarks of the Standard Performance Evaluation Corporation. Results from www.spec.org as of 10/25/2017. SPARC T7-1, 25,818.85 SPECjEnterprise2010 EjOPS (unsecure); SPARC T7-1, 25,093.06 SPECjEnterprise2010 EjOPS (secure); Oracle Server X5-2, 21,504.30 SPECjEnterprise2010 EjOPS (unsecure); IBM Power S824, 22,543.34 SPECjEnterprise2010 EjOPS (unsecure); IBM x3650 M5, 19,282.14 SPECjEnterprise2010 EjOPS (unsecure). •SPEC and the benchmark name SPECvirt_sc are registered trademarks of the Standard Performance Evaluation Corporation. Results from www.spec.org as of 10/25/2017. SPARC T7-2, SPECvirt_sc2013 3026@168 VMs; HP DL580 Gen9, SPECvirt_sc2013 3020@168 VMs; Lenovo x3850 X6; SPECvirt_sc2013 2655@147 VMs; Huawei FusionServer RH2288H V3, SPECvirt_sc2013 1616@95 VMs; HP ProLiant DL360 Gen9, SPECvirt_sc2013 1614@95 VMs; IBM Power S824, SPECvirt_sc2013 1371@79 VMs. •SPEC and the benchmark names SPECfp and SPECint are registered trademarks of the Standard Performance Evaluation Corporation. Results as of October 25, 2017 from www.spec.org and this report. 
1 chip results SPARC T7-1: 1200 SPECint_rate2006, 1120 SPECint_rate_base2006, 832 SPECfp_rate2006, 801 SPECfp_rate_base2006; SPARC T5-1B: 489 SPECint_rate2006, 440 SPECint_rate_base2006, 369 SPECfp_rate2006, 350 SPECfp_rate_base2006; Fujitsu SPARC M10-4S: 546 SPECint_rate2006, 479 SPECint_rate_base2006, 462 SPECfp_rate2006, 418 SPECfp_rate_base2006.