Overview of the SPEC Benchmarks

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Microprocessor

MICROPROCESSOR www.MPRonline.com THE REPORTINSIDER’S GUIDE TO MICROPROCESSOR HARDWARE EEMBC’S MULTIBENCH ARRIVES CPU Benchmarks: Not Just For ‘Benchmarketing’ Any More By Tom R. Halfhill {7/28/08-01} Imagine a world without measurements or statistical comparisons. Baseball fans wouldn’t fail to notice that a .300 hitter is better than a .100 hitter. But would they welcome a trade that sends the .300 hitter to Cleveland for three .100 hitters? System designers and software developers face similar quandaries when making trade-offs EEMBC’s role has evolved over the years, and Multi- with multicore processors. Even if a dual-core processor Bench is another step. Originally, EEMBC was conceived as an appears to be better than a single-core processor, how much independent entity that would create benchmark suites and better is it? Twice as good? Would a quad-core processor be certify the scores for accuracy, allowing vendors and customers four times better? Are more cores worth the additional cost, to make valid comparisons among embedded microproces- design complexity, power consumption, and programming sors. (See MPR 5/1/00-02, “EEMBC Releases First Bench- difficulty? marks.”) EEMBC still serves that role. But, as it turns out, most The Embedded Microprocessor Benchmark Consor- EEMBC members don’t openly publish their scores. Instead, tium (EEMBC) wants to help answer those questions. they disclose scores to prospective customers under an NDA or EEMBC’s MultiBench 1.0 is a new benchmark suite for use the benchmarks for internal testing and analysis. measuring the throughput of multiprocessor systems, Partly for this reason, MPR rarely cites EEMBC scores including those built with multicore processors. -

Memory Centric Characterization and Analysis of SPEC CPU2017 Suite

Session 11: Performance Analysis and Simulation ICPE ’19, April 7–11, 2019, Mumbai, India Memory Centric Characterization and Analysis of SPEC CPU2017 Suite Sarabjeet Singh Manu Awasthi [email protected] [email protected] Ashoka University Ashoka University ABSTRACT These benchmarks have become the standard for any researcher or In this paper, we provide a comprehensive, memory-centric charac- commercial entity wishing to benchmark their architecture or for terization of the SPEC CPU2017 benchmark suite, using a number of exploring new designs. mechanisms including dynamic binary instrumentation, measure- The latest offering of SPEC CPU suite, SPEC CPU2017, was re- ments on native hardware using hardware performance counters leased in June 2017 [8]. SPEC CPU2017 retains a number of bench- and operating system based tools. marks from previous iterations but has also added many new ones We present a number of results including working set sizes, mem- to reflect the changing nature of applications. Some recent stud- ory capacity consumption and memory bandwidth utilization of ies [21, 24] have already started characterizing the behavior of various workloads. Our experiments reveal that, on the x86_64 ISA, SPEC CPU2017 applications, looking for potential optimizations to SPEC CPU2017 workloads execute a significant number of mem- system architectures. ory related instructions, with approximately 50% of all dynamic In recent years the memory hierarchy, from the caches, all the instructions requiring memory accesses. We also show that there is way to main memory, has become a first class citizen of computer a large variation in the memory footprint and bandwidth utilization system design. -

Load Testing, Benchmarking, and Application Performance Management for the Web

Published in the 2002 Computer Measurement Group (CMG) Conference, Reno, NV, Dec. 2002. LOAD TESTING, BENCHMARKING, AND APPLICATION PERFORMANCE MANAGEMENT FOR THE WEB Daniel A. Menascé Department of Computer Science and E-center of E-Business George Mason University Fairfax, VA 22030-4444 [email protected] Web-based applications are becoming mission-critical for many organizations and their performance has to be closely watched. This paper discusses three important activities in this context: load testing, benchmarking, and application performance management. Best practices for each of these activities are identified. The paper also explains how basic performance results can be used to increase the efficiency of load testing procedures. infrastructure depends on the traffic it expects to see 1. Introduction at its site. One needs to spend enough but no more than is required in the IT infrastructure. Besides, Web-based applications are becoming mission- resources need to be spent where they will generate critical to most private and governmental the most benefit. For example, one should not organizations. The ever-increasing number of upgrade the Web servers if most of the delay is in computers connected to the Internet and the fast the database server. So, in order to maximize the growing number of Web-enabled wireless devices ROI, one needs to know when and how to upgrade create incentives for companies to invest in Web- the IT infrastructure. In other words, not spending at based infrastructures and the associated personnel the right time and spending at the wrong place will to maintain them. By establishing a Web presence, a reduce the cost-benefit of the investment. -

Microbenchmarks in Big Data

M Microbenchmark Overview Microbenchmarks constitute the first line of per- Nicolas Poggi formance testing. Through them, we can ensure Databricks Inc., Amsterdam, NL, BarcelonaTech the proper and timely functioning of the different (UPC), Barcelona, Spain individual components that make up our system. The term micro, of course, depends on the prob- lem size. In BigData we broaden the concept Synonyms to cover the testing of large-scale distributed systems and computing frameworks. This chap- Component benchmark; Functional benchmark; ter presents the historical background, evolution, Test central ideas, and current key applications of the field concerning BigData. Definition Historical Background A microbenchmark is either a program or routine to measure and test the performance of a single Origins and CPU-Oriented Benchmarks component or task. Microbenchmarks are used to Microbenchmarks are closer to both hardware measure simple and well-defined quantities such and software testing than to competitive bench- as elapsed time, rate of operations, bandwidth, marking, opposed to application-level – macro or latency. Typically, microbenchmarks were as- – benchmarking. For this reason, we can trace sociated with the testing of individual software microbenchmarking influence to the hardware subroutines or lower-level hardware components testing discipline as can be found in Sumne such as the CPU and for a short period of time. (1974). Furthermore, we can also find influence However, in the BigData scope, the term mi- in the origins of software testing methodology crobenchmarking is broadened to include the during the 1970s, including works such cluster – group of networked computers – acting as Chow (1978). One of the first examples of as a single system, as well as the testing of a microbenchmark clearly distinguishable from frameworks, algorithms, logical and distributed software testing is the Whetstone benchmark components, for a longer period and larger data developed during the late 1960s and published sizes. -

Examining the Viability of FPGA Supercomputing

1 Examining the Viability of FPGA Supercomputing Stephen D. Craven and Peter Athanas Bradley Department of Electrical and Computer Engineering Virginia Polytechnic Institute and State University Blacksburg, VA 24061 USA email: {scraven,athanas}@vt.edu Abstract—For certain applications, custom computational hardware created using field programmable gate arrays (FPGAs) produces significant performance improvements over processors, leading some in academia and industry to call for the inclusion of FPGAs in supercomputing clusters. This paper presents a comparative analysis of FPGAs and traditional processors, focusing on floating- point performance and procurement costs, revealing economic hurdles in the adoption of FPGAs for general High-Performance Computing (HPC). Index Terms— computational accelerator, digital arithmetic, Field programmable gate arrays, high- performance computing, supercomputers. I. INTRODUCTION Supercomputers have experienced a recent resurgence, fueled by government research dollars and the development of low-cost supercomputing clusters. Unlike the Massively Parallel Processor (MPP) designs found in Cray and CDC machines of the 70s and 80s, featuring proprietary processor architectures, many modern supercomputing clusters are constructed from commodity PC processors, significantly reducing procurement costs. In an effort to improve performance, several companies offer machines that place one or more FPGAs in each node of the cluster. Configurable logic devices, of which FPGAs are one example, permit the device’s hardware to be programmed multiple times after manufacture. A wide body of research over two decades has repeatedly demonstrated significant performance improvements for certain classes of applications when implemented within an FPGA’s configurable logic [1]. Applications well suited to speed-up by FPGAs typically exhibit massive parallelism and small integer or fixed-point data types. -

Chapter 3 CSE Implementation Issues

UvA-DARE (Digital Academic Repository) Studies in Interactive Visualization van Liere, R. Publication date 2001 Link to publication Citation for published version (APA): van Liere, R. (2001). Studies in Interactive Visualization. General rights It is not permitted to download or to forward/distribute the text or part of it without the consent of the author(s) and/or copyright holder(s), other than for strictly personal, individual use, unless the work is under an open content license (like Creative Commons). Disclaimer/Complaints regulations If you believe that digital publication of certain material infringes any of your rights or (privacy) interests, please let the Library know, stating your reasons. In case of a legitimate complaint, the Library will make the material inaccessible and/or remove it from the website. Please Ask the Library: https://uba.uva.nl/en/contact, or a letter to: Library of the University of Amsterdam, Secretariat, Singel 425, 1012 WP Amsterdam, The Netherlands. You will be contacted as soon as possible. UvA-DARE is a service provided by the library of the University of Amsterdam (https://dare.uva.nl) Download date:25 Sep 2021 Chapter 3 CSE Implementation Issues An overview of the CSE architecture was presented in Chapter 2. In this chapter a more in-depth discussion on the technical issues related to the design and implementation is given. 3.1 Design 3.1.1 Data Storage and Mappings A variable managed by the blackboard consists of a name, type, value, dimensions, and user defined attributes. Data is stored in the blackboard as an n-dimensional array (where n is0, 1, 2,...) containing elements of the same scalar type (e.g., 8-bit character, 32-bit integer). -

Hypervisors Vs. Lightweight Virtualization: a Performance Comparison

2015 IEEE International Conference on Cloud Engineering Hypervisors vs. Lightweight Virtualization: a Performance Comparison Roberto Morabito, Jimmy Kjällman, and Miika Komu Ericsson Research, NomadicLab Jorvas, Finland [email protected], [email protected], [email protected] Abstract — Virtualization of operating systems provides a container and alternative solutions. The idea is to quantify the common way to run different services in the cloud. Recently, the level of overhead introduced by these platforms and the lightweight virtualization technologies claim to offer superior existing gap compared to a non-virtualized environment. performance. In this paper, we present a detailed performance The remainder of this paper is structured as follows: in comparison of traditional hypervisor based virtualization and Section II, literature review and a brief description of all the new lightweight solutions. In our measurements, we use several technologies and platforms evaluated is provided. The benchmarks tools in order to understand the strengths, methodology used to realize our performance comparison is weaknesses, and anomalies introduced by these different platforms in terms of processing, storage, memory and network. introduced in Section III. The benchmark results are presented Our results show that containers achieve generally better in Section IV. Finally, some concluding remarks and future performance when compared with traditional virtual machines work are provided in Section V. and other recent solutions. Albeit containers offer clearly more dense deployment of virtual machines, the performance II. BACKGROUND AND RELATED WORK difference with other technologies is in many cases relatively small. In this section, we provide an overview of the different technologies included in the performance comparison. -

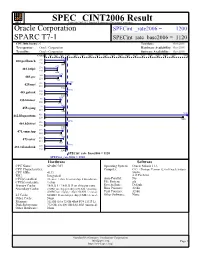

Oracle Corporation: SPARC T7-1

SPEC CINT2006 Result spec Copyright 2006-2015 Standard Performance Evaluation Corporation Oracle Corporation SPECint_rate2006 = 1200 SPARC T7-1 SPECint_rate_base2006 = 1120 CPU2006 license: 6 Test date: Oct-2015 Test sponsor: Oracle Corporation Hardware Availability: Oct-2015 Tested by: Oracle Corporation Software Availability: Oct-2015 Copies 0 300 600 900 1200 1600 2000 2400 2800 3200 3600 4000 4400 4800 5200 5600 6000 6400 6800 7600 1100 400.perlbench 192 224 1040 675 401.bzip2 256 224 666 875 403.gcc 160 224 720 1380 429.mcf 128 224 1160 1190 445.gobmk 256 224 1120 854 456.hmmer 96 224 813 1020 458.sjeng 192 224 988 7550 462.libquantum 416 224 7330 1190 464.h264ref 256 224 1150 956 471.omnetpp 255 224 885 986 473.astar 416 224 862 1180 483.xalancbmk 256 224 1140 SPECint_rate_base2006 = 1120 SPECint_rate2006 = 1200 Hardware Software CPU Name: SPARC M7 Operating System: Oracle Solaris 11.3 CPU Characteristics: Compiler: C/C++/Fortran: Version 12.4 of Oracle Solaris CPU MHz: 4133 Studio, FPU: Integrated 4/15 Patch Set CPU(s) enabled: 32 cores, 1 chip, 32 cores/chip, 8 threads/core Auto Parallel: No CPU(s) orderable: 1 chip File System: zfs Primary Cache: 16 KB I + 16 KB D on chip per core System State: Default Secondary Cache: 2 MB I on chip per chip (256 KB / 4 cores); Base Pointers: 32-bit 4 MB D on chip per chip (256 KB / 2 cores) Peak Pointers: 32-bit L3 Cache: 64 MB I+D on chip per chip (8 MB / 4 cores) Other Software: None Other Cache: None Memory: 512 GB (16 x 32 GB 4Rx4 PC4-2133P-L) Disk Subsystem: 732 GB, 4 x 400 GB SAS SSD -

Power Measurement Tutorial for the Green500 List

Power Measurement Tutorial for the Green500 List R. Ge, X. Feng, H. Pyla, K. Cameron, W. Feng June 27, 2007 Contents 1 The Metric for Energy-Efficiency Evaluation 1 2 How to Obtain P¯(Rmax)? 2 2.1 The Definition of P¯(Rmax)...................................... 2 2.2 Deriving P¯(Rmax) from Unit Power . 2 2.3 Measuring Unit Power . 3 3 The Measurement Procedure 3 3.1 Equipment Check List . 4 3.2 Software Installation . 4 3.3 Hardware Connection . 4 3.4 Power Measurement Procedure . 5 4 Appendix 6 4.1 Frequently Asked Questions . 6 4.2 Resources . 6 1 The Metric for Energy-Efficiency Evaluation This tutorial serves as a practical guide for measuring the computer system power that is required as part of a Green500 submission. It describes the basic procedures to be followed in order to measure the power consumption of a supercomputer. A supercomputer that appears on The TOP500 List can easily consume megawatts of electric power. This power consumption may lead to operating costs that exceed acquisition costs as well as intolerable system failure rates. In recent years, we have witnessed an increasingly stronger movement towards energy-efficient computing systems in academia, government, and industry. Thus, the purpose of the Green500 List is to provide a ranking of the most energy-efficient supercomputers in the world and serve as a complementary view to the TOP500 List. However, as pointed out in [1, 2], identifying a single objective metric for energy efficiency in supercom- puters is a difficult task. Based on [1, 2] and given the already existing use of the “performance per watt” metric, the Green500 List uses “performance per watt” (PPW) as its metric to rank the energy efficiency of supercomputers. -

Opportunities and Open Problems for Static and Dynamic Program Analysis Mark Harman∗, Peter O’Hearn∗ ∗Facebook London and University College London, UK

1 From Start-ups to Scale-ups: Opportunities and Open Problems for Static and Dynamic Program Analysis Mark Harman∗, Peter O’Hearn∗ ∗Facebook London and University College London, UK Abstract—This paper1 describes some of the challenges and research questions that target the most productive intersection opportunities when deploying static and dynamic analysis at we have yet witnessed: that between exciting, intellectually scale, drawing on the authors’ experience with the Infer and challenging science, and real-world deployment impact. Sapienz Technologies at Facebook, each of which started life as a research-led start-up that was subsequently deployed at scale, Many industrialists have perhaps tended to regard it unlikely impacting billions of people worldwide. that much academic work will prove relevant to their most The paper identifies open problems that have yet to receive pressing industrial concerns. On the other hand, it is not significant attention from the scientific community, yet which uncommon for academic and scientific researchers to believe have potential for profound real world impact, formulating these that most of the problems faced by industrialists are either as research questions that, we believe, are ripe for exploration and that would make excellent topics for research projects. boring, tedious or scientifically uninteresting. This sociological phenomenon has led to a great deal of miscommunication between the academic and industrial sectors. I. INTRODUCTION We hope that we can make a small contribution by focusing on the intersection of challenging and interesting scientific How do we transition research on static and dynamic problems with pressing industrial deployment needs. Our aim analysis techniques from the testing and verification research is to move the debate beyond relatively unhelpful observations communities to industrial practice? Many have asked this we have typically encountered in, for example, conference question, and others related to it. -

3 — Arithmetic for Computers 2 MIPS Arithmetic Logic Unit (ALU) Zero Ovf

Chapter 3 Arithmetic for Computers 1 § 3.1Introduction Arithmetic for Computers Operations on integers Addition and subtraction Multiplication and division Dealing with overflow Floating-point real numbers Representation and operations Rechnerstrukturen 182.092 3 — Arithmetic for Computers 2 MIPS Arithmetic Logic Unit (ALU) zero ovf 1 Must support the Arithmetic/Logic 1 operations of the ISA A 32 add, addi, addiu, addu ALU result sub, subu 32 mult, multu, div, divu B 32 sqrt 4 and, andi, nor, or, ori, xor, xori m (operation) beq, bne, slt, slti, sltiu, sltu With special handling for sign extend – addi, addiu, slti, sltiu zero extend – andi, ori, xori overflow detection – add, addi, sub Rechnerstrukturen 182.092 3 — Arithmetic for Computers 3 (Simplyfied) 1-bit MIPS ALU AND, OR, ADD, SLT Rechnerstrukturen 182.092 3 — Arithmetic for Computers 4 Final 32-bit ALU Rechnerstrukturen 182.092 3 — Arithmetic for Computers 5 Performance issues Critical path of n-bit ripple-carry adder is n*CP CarryIn0 A0 1-bit result0 ALU B0 CarryOut0 CarryIn1 A1 1-bit result1 B ALU 1 CarryOut 1 CarryIn2 A2 1-bit result2 ALU B2 CarryOut CarryIn 2 3 A3 1-bit result3 ALU B3 CarryOut3 Design trick – throw hardware at it (Carry Lookahead) Rechnerstrukturen 182.092 3 — Arithmetic for Computers 6 Carry Lookahead Logic (4 bit adder) LS 283 Rechnerstrukturen 182.092 3 — Arithmetic for Computers 7 § 3.2 Addition and Subtraction 3.2 Integer Addition Example: 7 + 6 Overflow if result out of range Adding +ve and –ve operands, no overflow Adding two +ve operands -

I.MX 8Quadxplus Power and Performance

NXP Semiconductors Document Number: AN12338 Application Note Rev. 4 , 04/2020 i.MX 8QuadXPlus Power and Performance 1. Introduction Contents This application note helps you to design power 1. Introduction ........................................................................ 1 management systems. It illustrates the current drain 2. Overview of i.MX 8QuadXPlus voltage supplies .............. 1 3. Power measurement of the i.MX 8QuadXPlus processor ... 2 measurements of the i.MX 8QuadXPlus Applications 3.1. VCC_SCU_1V8 power ........................................... 4 Processors taken on NXP Multisensory Evaluation Kit 3.2. VCC_DDRIO power ............................................... 4 (MEK) Platform through several use cases. 3.3. VCC_CPU/VCC_GPU/VCC_MAIN power ........... 5 3.4. Temperature measurements .................................... 5 This document provides details on the performance and 3.5. Hardware and software used ................................... 6 3.6. Measuring points on the MEK platform .................. 6 power consumption of the i.MX 8QuadXPlus 4. Use cases and measurement results .................................... 6 processors under a variety of low- and high-power 4.1. Low-power mode power consumption (Key States modes. or ‘KS’)…… ......................................................................... 7 4.2. Complex use case power consumption (Arm Core, The data presented in this application note is based on GPU active) ......................................................................... 11 5. SOC