The Effect of Pilot and Air Traffic Control Experiences & Automation Management Strategies on UAS Mission Task Performance

Total Pages: 16

File Type:pdf, Size:1020Kb


Recommended publications


Human Systems Integration Is Worth the Money and Effort! The Argument for the Implementation of Human Systems Integration Processes in Defence Capability Acquisition

Human Systems Integration is worth the money and effort! The argument for the implementation of Human Systems Integration processes in Defence capability acquisition. Defence Intranet: ohsc.defence.gov.au/; Defence Internet: www.defence.gov.au/dpe/ohsc. Prepared for: Human Systems Integration Framework, Occupational Health & Safety Branch, People Strategies and Policy Group, Department of Defence, Canberra, ACT, 2600. National Library of Australia Cataloguing-in-Publication entry. Author: Burgess-Limerick, Robin. Title: Human Systems Integration is worth the money and effort! The argument for the implementation of Human Systems Integration processes in Defence capability acquisition [electronic resource] / prepared by Robin Burgess-Limerick, Cristina Cotea, Eva Pietrzak. ISBN: 9780642297327 (pdf). Subjects: Australia. Dept. of Defence. Human engineering. Systems engineering. Automation–Human factors. Other Authors/Contributors: Cotea, Cristina. Pietrzak, Eva. Defence Occupational Health and Safety Branch (Australia). Dewey Number: 620.8. Conditions of Release and Disposal. © Commonwealth of Australia 2010. This work is copyright. Apart from any use as permitted under the Copyright Act 1968, no part may be reproduced by any process without prior written permission from the Department of Defence. Announcement statement: may be announced to the public. Secondary release: may be released to the public. All Defence information, whether classified or not, is protected from unauthorised disclosure under the Crimes Act 1914. Defence information may only be released in accordance with the Defence Protective Security Manual (SECMAN 4) and/or Defence Instruction (General) OPS 13–4, Release of Classified Defence Information to Other Countries, as appropriate.

36th Commencement Exercises Saturday, the Sixteenth of May · Two Thousand Fifteen

Uniformed Services University of the Health Sciences “Learning to Care for Those in Harm’s Way” 36th Commencement Exercises, Saturday, the Sixteenth of May · Two Thousand Fifteen. The Mace. The mace was a weapon of war originating with the loaded club and stone hammer of primitive man. Although it continued to be used as a weapon through the Middle Ages, during this period it also became symbolic as an ornament representing power. Sergeants-at-Arms, who were guards of kings and other high officials, carried a mace to protect their monarch during processions. By the 14th century, the mace had become more ceremonial in use and was decorated with jewels and precious metals, losing its war-club appearance. Three hundred years later, the mace was used solely as a symbol of authority. The mace is used during sessions of legislative assemblies such as the U.S. House of Representatives, where it is placed to the right of the Speaker. More frequently, maces are seen at university commencements and convocations, exemplifying knowledge as power. The USU mace was a glorious gift from the Honorable Sam Nixon, MD, past chairman of the Board of Regents, and his wife, Elizabeth. The mace was used for the first time at the 1995 commencement ceremony. It is handcrafted in sterling silver and carries the seal of the university along with the emblems of the U.S. Army, Navy, Air Force and Public Health Service. The university seal and service emblems are superimposed on the earth’s globe to symbolize the worldwide mission of the university and its graduates.

Aeromedical Evacuation Springer New York Berlin Heidelberg Hong Kong London Milan Paris Tokyo William W

Aeromedical Evacuation. Springer: New York, Berlin, Heidelberg, Hong Kong, London, Milan, Paris, Tokyo. Editors: William W. Hurd, MD, MS, FACOG, Nicholas J. Thompson Professor and Chair, Department of Obstetrics and Gynecology, Wright State University School of Medicine, Dayton, Ohio; Col, USAFR, MC, FS, Commander, 445th Aeromedical Staging Squadron, Wright-Patterson AFB, Dayton, Ohio. John G. Jernigan, MD, Brig Gen, USAF, CFS (ret), Formerly Commander, Human Systems Center, Brooks AFB, San Antonio, Texas. Aeromedical Evacuation: Management of Acute and Stabilized Patients. Foreword by Paul K. Carlton, Jr., MD, Lt Gen, USAF, MC, CFS, USAF Surgeon General. With 122 Illustrations. Cover illustration: Litter bearers carry a patient up the ramp of a C-9 Nightingale medical transport aircraft. (US Air Force photo by Staff Sgt. Gary R. Coppage). (Figure 7.4 in text) Library of Congress Cataloging-in-Publication Data: Aeromedical evacuation : management of acute and stabilized patients / [edited by] William W. Hurd, John G. Jernigan. p. ; cm. Includes bibliographical references and index. ISBN 0-387-98604-9 (h/c : alk. paper) 1. Airplane ambulances. 2. Emergency medical services. I. Hurd, William W. II. Jernigan, John J. [DNLM: 1. Air Ambulances. 2. Emergency Medical Services. 3. Rescue Work. WX 215 A252 2002] RA996.5 .A325 2002 616.02'5--dc21 2002021045 ISBN 0-387-98604-9 Printed on acid-free paper.

Fiscal Year 2010 Air Force Posture Statement

United States Air Force Presentation Before the House Appropriations Committee, Subcommittee on Defense. Defense Health Programs. Witness Statement of Lieutenant General (Dr.) Thomas Travis, Surgeon General, USAF. April 14, 2015. Not for publication until released by the House Appropriations Committee, Subcommittee on Defense. UNITED STATES AIR FORCE. LIEUTENANT GENERAL (DR.) THOMAS W. TRAVIS. Lt. Gen. (Dr.) Thomas W. Travis is the Surgeon General of the Air Force, Headquarters U.S. Air Force, Washington, D.C. General Travis serves as functional manager of the U.S. Air Force Medical Service. In this capacity, he advises the Secretary of the Air Force and Air Force Chief of Staff, as well as the Assistant Secretary of Defense for Health Affairs on matters pertaining to the medical aspects of the air expeditionary force and the health of Air Force people. General Travis has authority to commit resources worldwide for the Air Force Medical Service, to make decisions affecting the delivery of medical services, and to develop plans, programs and procedures to support worldwide medical service missions. He exercises direction, guidance and technical management of a $6.6 billion, 44,000-person integrated health care delivery system serving 2.6 million beneficiaries at 75 military treatment facilities worldwide. General Travis entered the Air Force in 1976 as a distinguished graduate of the ROTC program at Virginia Polytechnic Institute and State University. He was awarded his pilot wings in 1978 and served as an F-4 pilot and aircraft commander.

Army National Guard

Deliberative Document - For Discussion Purposes Only - Do Not Release Under FOIA. DCN: 10783. THE UNDER SECRETARY OF DEFENSE, 3010 DEFENSE PENTAGON, WASHINGTON, DC 20301-3010. ACQUISITION, TECHNOLOGY AND LOGISTICS. NOV 30 2004. MEMORANDUM FOR INFRASTRUCTURE STEERING GROUP (ISG) MEMBERS. SUBJECT: Coordination on Scenario Conflicts Proposed Resolutions. As agreed at the October 15, 2004, Infrastructure Steering Group meeting, the DASs were to brief only those scenarios for which they could not agree on a proposed resolution. Because the DAS's review of the seventh batch of scenarios indicated there were no issues for in-person ISG deliberations, we will obtain your concurrence on the DAS's newly proposed resolutions via paper. The attached package includes all 518 scenarios registered in the tracking tool as of November 12, 2004. A summary of these scenarios, broken out by category (Independent, Enabling, Conflicting, Deleted, and Not Ready for Categorization) can be found at TAB 1. The section titled "New Conflicts Settled" includes new scenario conflicts on which the DASs and affected JCSGs agree regarding the proposed resolution (TAB 2). TAB 3 represents the one contested conflict resolution for an E&T and Air Force scenario. Discussions regarding this issue are scheduled for the December 3, 2004, ISG meeting. Details of the remaining scenarios, by category (Old Conflicts Settled, Independent, Enabling, Deleted, and Not Ready for Categorization), are provided on a disc at TAB 4-8. Request your written concurrence on the proposed resolutions

Defense AT&L, May-June 2008

May-June 2008, Vol XXXVII, No.3, DAU 201. Some photos appearing in this publication may be digitally enhanced.
Changing DoD’s IT Capabilities. Lt. Gen. Charles E. Croom, USAF, Director, Defense Information Systems Agency and Commander, Joint Task Force for Global Network Operations. The DISA director and JTF-GNO commander describes what’s next for DoD’s networks in the face of changing technology.
Looking at the Root Causes of Problems. Col. Brian Shimel, USAF. A problem with an acquisition program isn’t a one-time event. DoD must conduct an objective program review identifying what “broke” the program and how the department should improve performance, delivery speed, and economy.
The Team Development Life Cycle. Tom Edison. Dr. Bruce Tuckman identified five phases experienced by project work teams: forming, storming, norming, performing, and adjourning.
Bridging the DoD-Industry Communications Gap. Scott Littlefield. Industry members are often in the dark about DoD’s actual capability needs, and thus, make decisions based on poor assumptions. If DoD expects industry to meet its needs, it must find better ways to provide reliable information to industry.
A Great System Right Out of the Chocks. Will Broadus, Duane Mallicoat, Capt. Tom Payne, USN, with Maj. Gen. Charles R. Davis, USAF. The largest DoD acquisition program in history succeeded by following a step-by-step block plan and a rigorous system engineering process, ensuring the final product satisfied its specific mission requirements.
The Increasing Role of Robots in National Security. Ellen M. Purdy. DoD’s acquisition and technology organizations are overseeing a tremendous increase in robotic

Human Effectiveness Directorate

Proceedings of the 19th Conference on Behavior Representation in Modeling and Simulation, Charleston, SC, 21 - 24 March 2010. 711th Human Performance Wing: World Leader for Human Performance. 711th Human Performance Wing, Mr. Thomas S. Wells, SES, Director. The historic activation of the 711th Human Performance Wing at Wright-Patterson Air Force Base culminated two years of inspired strategic planning. Standup of the Wing creates the first human-centric warfare wing to consolidate research, education, and consultation under a single organization. The 711 HPW merges the Air Force Research Laboratory Human Effectiveness Directorate with functions of the 311th Human Systems Wing currently located at Brooks City-Base: the United States Air Force School of Aerospace Medicine (including functions of the former Air Force Institute for Operational Health that are merged into USAFSAM) and the 311th Performance Enhancement Directorate (renamed Human Performance Integration Directorate). The Wing’s primary focus areas are aerospace medicine, human effectiveness science and technology, and human systems integration. In conjunction with the Navy Aerospace Medical Research Laboratory (NAMRL) moving to WPAFB, and surrounding universities and medical institutions, the 711 HPW will function as a Joint Department of Defense Center of Excellence for human performance sustainment and readiness, optimization and effectiveness research. AFRL 711th Human Performance Wing: Mr. Thomas S. Wells, CL; Col Richard E. Bachmann, Jr., DV; Col Jon C. Welch, OM, DS. Human Effectiveness Directorate (711 HPW/RH): Mr. Jack Blackhurst. USAF School of Aerospace Medicine (USAFSAM/CC): Col Charles R. Fisher, Jr. Human Performance Integration (711 HPW/HP): Col David L. Brown. The 711th Human Performance Wing mission is to advance human performance in air, space, and cyberspace through research, education, and consultation, accomplished through synergies created by the wing’s three distinct but complementary entities: the U.

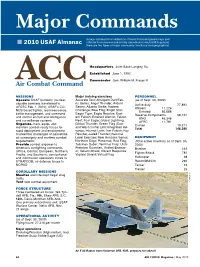

Major Commands

Major Commands (2010 USAF Almanac). A major command is a subdivision of the Air Force assigned a major part of the Air Force mission and directly subordinate to Hq. USAF. In general, there are two types of major commands: functional and geographical.
Air Combat Command (ACC). Headquarters: Joint Base Langley, Va. Established: June 1, 1992. Commander: Gen. William M. Fraser III.
Missions: Operate USAF bombers (nuclear-capable bombers transferred to AFGSC Feb. 1, 2010); USAF’s CONUS-based fighter, reconnaissance, battle management, and command and control aircraft and intelligence and surveillance systems. Organize, train, equip, and maintain combat-ready forces for rapid deployment and employment to meet the challenges of peacetime air sovereignty and wartime combat requirements. Provide combat airpower to America’s warfighting
Major training exercises: Accurate Test; Amalgam Dart/Fabric Series; Angel Thunder; Ardent Sentry; Atlantic Strike; Austere Challenge; Blue Flag; Bright Star; Eager Tiger; Eagle Resolve; Eastern Falcon; Emerald Warrior; Falcon Nest; Foal Eagle; Global Lightning; Global Thunder; Green Flag (East and West); Initial Link; Integrated Advance; Internal Look; Iron Falcon; Key Resolve; Jaded Thunder; National Level Exercise; New Horizons Series; Northern Edge; Panamax; Red Flag; Talisman Saber; Terminal Fury; Ulchi
Personnel (as of Sept. 30, 2009): Active duty 77,892 (Officers 11,226; Enlisted 66,666). Reserve Components 58,127 (ANG 46,346; AFRC 11,781). Civilian 10,371. Total 146,390.
Equipment (Total active inventory as of Sept. 30, 2009)

July 2005 699 Medical News

President’s Page (Michael Bagshaw, M.B., B.Ch.). The science and art of aerospace give us a buzz of excitement with whichever branch we are involved. That same excitement also applies to membership in the community of AsMA which encompasses so many disciplines and so many cultures. As usual in aerospace, change and evolution are occurring. We hope to see the Shuttle program restarting, with a launch date in July. We believe that space tourism is now more than a dream. The European Space Agency has formulated a “Cosmic Vision 2015-2025 science programme,” incorporating new planetary missions, space telescopes, and searches for conditions able to support life on other worlds. On the other hand, a British space programme begun in 1957 has drawn to a close with the launch of the last Skylark rocket. The final 441st mission carried experiments including a biological investigation of the protein actin and a study of evaporating liquids in low gravity. The Airbus A380 is well into its test programme and the order book for Boeing’s 787 is filling. There is significant aviation medical input to both these new aircraft.
... mix well in close quarters with manned strike aircraft. This is something which AsMA’s human factors specialists need to be thinking about. We should also be aware that the single largest driver for science and technology spending by the U.S. Army is making helicopters safer in combat situations. Controlled flight into terrain still kills too many people. Despite the advent of new aircraft and tech-

Hq. Air Force

Hq. Air Force (2008 USAF Almanac). The Department of the Air Force incorporates all elements of the Air Force and is administered by a civilian Secretary and supervised by a military Chief of Staff. The Secretariat and the Air Staff help the Secretary and the Chief of Staff direct the Air Force mission.
Headquarters Air Force (HAF). Headquarters: Pentagon, Washington, D.C. Established: Sept. 18, 1947. Secretary: Michael W. Wynne. Chief of Staff: Gen. T. Michael Moseley.
Role: Organize, train, and equip air and space forces.
Mission: Deliver sovereign options for the defense of the United States of America and its global interests: to fly and fight in air, space, and cyberspace.
Personnel (as of Sept. 30, 2007): Active duty 1,642 (Officers 1,389; Enlisted 253). Reserve components 493 (ANG 61; AFRC 432). Civilian 818. Total 2,953.
Force structure, Secretariat: One Secretary; One undersecretary; Four assistant secretaries; Two deputy undersecretaries; Five directors; Five offices.
Force structure, Air Staff: One Chief of Staff; One vice chief of staff; One Chief Master Sergeant of the Air Force; Six deputy chiefs of staff; Three directors; Eight offices.
Photo caption: An F-16 of the 20th Fighter Wing, Shaw AFB, S.C., flies near the Pentagon as part of Noble Eagle. (USAF photo by SSgt. Aaron D. Allmon II; AIR FORCE Magazine, May 2008.)
Secretariat, Pentagon, Washington, D.C. (organization chart, truncated): Secretary of the Air Force; Undersecretary of the Air Force; Asst. Secretary of the Air Force for Acquisition; Asst. Secretary of the Air Force for Financial Mgmt.

Docket 08-AFC-9

DOCKET 08-AFC-9. DATE: FEB 04 2011. RECD.: FEB 04 2011. STATE OF CALIFORNIA, Energy Resources Conservation and Development Commission. In the Matter of: Application for Certification for the Palmdale Hybrid Power Project. Docket No. 08-AFC-9. Energy Commission Staff’s Prehearing Conference Statement. On January 31, 2011, the Committee assigned to this proceeding issued a Second Revised Notice of Prehearing Conference and Evidentiary Hearing and Order requiring all parties to file Prehearing Conference Statements and specifying what information the prehearing conference statements must contain. Staff provides the requested information below. Due to a planned vacation, staff must file this prior to receiving the intervenor’s testimony on staff’s rebuttal testimony. We, therefore, respectfully reserve the right to orally augment this statement at the Prehearing Conference in response to testimony submitted by the intervenors. Additionally, staff would like to bring to the Committee’s attention the issue of alternative routes for the transmission line. The applicant has proposed a route and staff has identified an additional route, Route 4, that it believes is also feasible. Staff and the applicant propose that the Commission certify both routes and let the applicant determine which to construct. Staff recommends that the testimony of both parties on this matter be entered by declaration. If, however, the Committee has questions regarding this issue, staff is able to provide witnesses to answer any questions regarding staff’s identified alternative route. Staff also has included a few items in this submittal. The Visual Resources analysis of the road paving proposal was mistakenly left out of the Rebuttal Testimony and is attached to this document along with a declaration by the author.

Military Construction, Veterans Affairs, and Related Agencies Appropriations for 2011 Hearings Committee on Appropriations

MILITARY CONSTRUCTION, VETERANS AFFAIRS, AND RELATED AGENCIES APPROPRIATIONS FOR 2011. HEARINGS BEFORE A SUBCOMMITTEE OF THE COMMITTEE ON APPROPRIATIONS, HOUSE OF REPRESENTATIVES, ONE HUNDRED ELEVENTH CONGRESS, SECOND SESSION. SUBCOMMITTEE ON MILITARY CONSTRUCTION, VETERANS AFFAIRS, AND RELATED AGENCIES APPROPRIATIONS: CHET EDWARDS, Texas, Chairman; SAM FARR, California; ZACH WAMP, Tennessee; JOHN T. SALAZAR, Colorado; ANDER CRENSHAW, Florida; PETER J. VISCLOSKY, Indiana; C. W. BILL YOUNG, Florida; DAVID E. PRICE, North Carolina; JOHN CARTER, Texas; PATRICK J. KENNEDY, Rhode Island; ALLEN BOYD, Florida; SANFORD D. BISHOP, JR., Georgia. NOTE: Under Committee Rules, Mr. Obey, as Chairman of the Full Committee, and Mr. Lewis, as Ranking Minority Member of the Full Committee, are authorized to sit as Members of all Subcommittees. TIM PETERSON, SUE QUANTIUS, WALTER HEARNE, and MARY C. ARNOLD, Subcommittee Staff. PART 7: American Battle Monuments Commission; United States Court of Appeals for Veterans Claims; Arlington National Cemetery; Armed Forces Retirement Home; Veterans Affairs; Navy and Marine Corps Budget; European Command