UCLA Electronic Theses and Dissertations

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Unintended Consequences of the American Presidential Selection System

The Best Laid Plans: Unintended Consequences of the American Presidential Selection System. Samuel S.-H. Wang and Jacob S. Canter*

The mechanism for selecting the President of the United States, the Electoral College, causes outcomes that weaken American democracy and that the delegates at the Constitutional Convention never intended. The core selection process described in Article II, Section 1 was hastily drawn in the final days of the Convention based on compromises made originally to benefit slave-owning states and states with smaller populations. The system was also drafted to have electors deliberate and then choose the President in an age when travel and news took weeks or longer to cross the new country. In the four decades after ratification, the Electoral College was modified further to reach its current form, which includes most states using a winner-take-all method to allocate electors. The original needs this system was designed to address have now disappeared. But the persistence of these Electoral College mechanisms still causes severe unanticipated problems, including (1) contradictions between the electoral vote winner and national popular vote winner, (2) a “battleground state” phenomenon where all but a handful of states are safe for one political party or the other, (3) representational and policy benefits that citizens in only some states receive, (4) a decrease in the political power of non-battleground demographic groups, and (5) vulnerability of elections to interference. These outcomes will not go away without intervention.

The Popular Culture Studies Journal

THE POPULAR CULTURE STUDIES JOURNAL, VOLUME 6, NUMBER 1, 2018

Editor: NORMA JONES, Liquid Flicks Media, Inc./IXMachine. Managing Editor: JULIA LARGENT, McPherson College. Assistant Editor: GARRET L. CASTLEBERRY, Mid-America Christian University. Copy Editor: Kevin Calcamp, Queens University of Charlotte. Reviews Editor: MALYNNDA JOHNSON, Indiana State University. Assistant Reviews Editor: JESSICA BENHAM, University of Pittsburgh.

Please visit the PCSJ at: http://mpcaaca.org/the-popular-culture-studies-journal/

The Popular Culture Studies Journal is the official journal of the Midwest Popular and American Culture Association. Copyright © 2018 Midwest Popular and American Culture Association. All rights reserved. MPCA/ACA, 421 W. Huron St Unit 1304, Chicago, IL 60654

Cover credit: Cover Artwork: “Wrestling” by Brent Jones © 2018 Courtesy of https://openclipart.org

EDITORIAL ADVISORY BOARD

ANTHONY ADAH, Minnesota State University, Moorhead; FALON DEIMLER, University of Wisconsin-Madison; JESSICA AUSTIN, Anglia Ruskin University; HANNAH DODD, The Ohio State University; AARON BARLOW, New York City College of Technology (CUNY), Faculty Editor, Academe, the magazine of the AAUP; ASHLEY M. DONNELLY, Ball State University; JOSEF BENSON, University of Wisconsin Parkside; LEIGH H. EDWARDS, Florida State University; PAUL BOOTH, DePaul University; VICTOR EVANS, Seattle University; GARY BURNS, Northern Illinois University; JUSTIN GARCIA, Millersville University; KELLI S. BURNS, University of South Florida; ALEXANDRA GARNER, Bowling Green State University; ANNE M. CANAVAN, Salt Lake Community College; MATTHEW HALE, Indiana University, Bloomington; ERIN MAE CLARK, Saint Mary’s University of Minnesota; NICOLE HAMMOND, University of California, Santa Cruz; BRIAN COGAN, Molloy College; ART HERBIG, Indiana University - Purdue University, Fort Wayne; JARED JOHNSON, Thiel College; ANDREW F. HERRMANN, East Tennessee State University; MATTHEW NICOSIA; JESSE KAVADLO, Maryville University of St.

Women's Experimental Autobiography from Counterculture Comics to Transmedia Storytelling: Staging Encounters Across Time, Space, and Medium

Women's Experimental Autobiography from Counterculture Comics to Transmedia Storytelling: Staging Encounters Across Time, Space, and Medium

Dissertation Presented in partial fulfillment of the requirement for the Degree Doctor of Philosophy in the Graduate School of Ohio State University. Alexandra Mary Jenkins, M.A. Graduate Program in English, The Ohio State University, 2014. Dissertation Committee: Jared Gardner, Advisor; Sean O’Sullivan; Robyn Warhol. Copyright by Alexandra Mary Jenkins 2014

Abstract

Feminist activism in the United States and Europe during the 1960s and 1970s harnessed radical social thought and used innovative expressive forms in order to disrupt the “grand perspective” espoused by men in every field (Adorno 206). Feminist student activists often put their own female bodies on display to disrupt the disembodied “objective” thinking that still seemed to dominate the academy. The philosopher Theodor Adorno responded to one such action, the “bared breasts incident,” carried out by his radical students in Germany in 1969, in an essay, “Marginalia to Theory and Praxis.” In that essay, he defends himself against the students’ claim that he proved his lack of relevance to contemporary students when he failed to respond to the spectacle of their liberated bodies. He acknowledged that the protest movements seemed to offer thoughtful people a way “out of their self-isolation,” but ultimately, to replace philosophy with bodily spectacle would mean to miss the “infinitely progressive aspect of the separation of theory and praxis” (259, 266). Lisa Yun Lee argues that this separation continues to animate contemporary feminist debates, and that it is worth returning to Adorno’s reasoning, if we wish to understand women’s particular modes of theoretical insight in conversation with “grand perspectives” on cultural theory in the twenty-first century.

Professional Wrestling, Sports Entertainment and the Liminal Experience in American Culture

PROFESSIONAL WRESTLING, SPORTS ENTERTAINMENT AND THE LIMINAL EXPERIENCE IN AMERICAN CULTURE

By AARON D. FEIGENBAUM

A DISSERTATION PRESENTED TO THE GRADUATE SCHOOL OF THE UNIVERSITY OF FLORIDA IN PARTIAL FULFILLMENT OF THE REQUIREMENTS FOR THE DEGREE OF DOCTOR OF PHILOSOPHY. UNIVERSITY OF FLORIDA, 2000. Copyright 2000 by Aaron D. Feigenbaum

ACKNOWLEDGMENTS

There are many people who have helped me along the way, and I would like to express my appreciation to all of them. I would like to begin by thanking the members of my committee - Dr. Heather Gibson, Dr. Amitava Kumar, Dr. Norman Market, and Dr. Anthony Oliver-Smith - for all their help. I especially would like to thank my Chair, Dr. John Moore, for encouraging me to pursue my chosen field of study, guiding me in the right direction, and providing invaluable advice and encouragement. Others at the University of Florida who helped me in a variety of ways include Heather Hall, Jocelyn Shell, Jim Kunetz, and Farshid Safi. I would also like to thank Dr. Winnie Cooke and all my friends from the Teaching Center and Athletic Association for putting up with me the past few years. From the World Wrestling Federation, I would like to thank Vince McMahon, Jr., and Jim Byrne for taking the time to answer my questions and allowing me access to the World Wrestling Federation. A very special thanks goes out to Laura Bryson who provided so much help in many ways. I would like to thank Ed Garea and Paul MacArthur for answering my questions on both the history of professional wrestling and the current sports entertainment product.

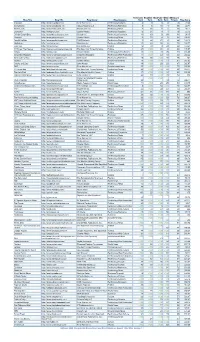

Blog Title Blog URL Blog Owner Blog Category Technorati Rank

| Blog Title | Blog URL | Blog Owner | Blog Category | Technorati Rank | Bloglines Rank | BlogPulse Rank | Wikio Rank | SEOmoz’s Trifecta | Blog Score |
| --- | --- | --- | --- | --- | --- | --- | --- | --- | --- |
| Engadget | http://www.engadget.com | Time Warner Inc. | Technology/Gadgets | 4 | 3 | 6 | 2 | 78 | 19.23 |
| Boing Boing | http://www.boingboing.net | Happy Mutants LLC | Technology/Marketing | 5 | 6 | 15 | 4 | 89 | 33.71 |
| TechCrunch | http://www.techcrunch.com | TechCrunch Inc. | Technology/News | 2 | 27 | 2 | 1 | 76 | 42.11 |
| Lifehacker | http://lifehacker.com | Gawker Media | Technology/Gadgets | 6 | 21 | 9 | 7 | 78 | 55.13 |
| Official Google Blog | http://googleblog.blogspot.com | Google Inc. | Technology/Corporate | 14 | 10 | 3 | 38 | 94 | 69.15 |
| Gizmodo | http://www.gizmodo.com/ | Gawker Media | Technology/News | 3 | 79 | 4 | 3 | 65 | 136.92 |
| ReadWriteWeb | http://www.readwriteweb.com | RWW Network | Technology/Marketing | 9 | 56 | 21 | 5 | 64 | 142.19 |
| Mashable | http://mashable.com | Mashable Inc. | Technology/Marketing | 10 | 65 | 36 | 6 | 73 | 160.27 |
| Daily Kos | http://dailykos.com/ | Kos Media, LLC | Politics | 12 | 59 | 8 | 24 | 63 | 163.49 |
| NYTimes: The Caucus | http://thecaucus.blogs.nytimes.com | The New York Times Company | Politics | 27 | >100 | 31 | 8 | 93 | 179.57 |
| Kotaku | http://kotaku.com | Gawker Media | Technology/Video Games | 19 | >100 | 19 | 28 | 77 | 216.88 |
| Smashing Magazine | http://www.smashingmagazine.com | Smashing Magazine | Technology/Web Production | 11 | >100 | 40 | 18 | 60 | 283.33 |
| Seth Godin's Blog | http://sethgodin.typepad.com | Seth Godin | Technology/Marketing | 15 | 68 | >100 | 29 | 75 | 284 |
| Gawker | http://www.gawker.com/ | Gawker Media | Entertainment News | 16 | >100 | >100 | 15 | 81 | 287.65 |
| Crooks and Liars | http://www.crooksandliars.com | John Amato | Politics | 49 | >100 | 33 | 22 | 67 | 305.97 |
| TMZ | http://www.tmz.com | Time Warner Inc. | | | | | | | |

Facebook, Social Media Privacy, and the Use and Abuse of Data

S. HRG. 115–683

FACEBOOK, SOCIAL MEDIA PRIVACY, AND THE USE AND ABUSE OF DATA

JOINT HEARING BEFORE THE COMMITTEE ON COMMERCE, SCIENCE, AND TRANSPORTATION, UNITED STATES SENATE, AND THE COMMITTEE ON THE JUDICIARY, UNITED STATES SENATE. ONE HUNDRED FIFTEENTH CONGRESS, SECOND SESSION. APRIL 10, 2018. Serial No. J–115–40. Printed for the use of the Committee on Commerce, Science, and Transportation. Available online: http://www.govinfo.gov

U.S. GOVERNMENT PUBLISHING OFFICE, 37–801 PDF, WASHINGTON : 2019

SENATE COMMITTEE ON COMMERCE, SCIENCE, AND TRANSPORTATION. ONE HUNDRED FIFTEENTH CONGRESS, SECOND SESSION. JOHN THUNE, South Dakota, Chairman; ROGER WICKER, Mississippi; BILL NELSON, Florida, Ranking; ROY BLUNT, Missouri; MARIA

The Atlantic Return and the Payback of Evangelization

Vol. 3, no. 2 (2013), 207-221 | URN:NBN:NL:UI:10-1-114482

The Atlantic Return and the Payback of Evangelization. VALENTINA NAPOLITANO*

Abstract: This article explores Catholic, transnational Latin American migration to Rome as a gendered and ethnicized Atlantic Return, which is figured as a source of ‘new blood’ that fortifies the Catholic Church but which also profoundly unsettles it. I analyze this Atlantic Return as an angle on the affective force of history in critical relation to two main sources: Diego Von Vacano’s reading of the work of Bartolomeo de las Casas, a 16th-century Spanish Dominican friar; and to Nelson Maldonado-Torres’ notion of the ‘coloniality of being’ which he suggests has operated in Atlantic relations as enduring and present forms of racial de-humanization. In his view this latter can be counterbalanced by embracing an economy of the gift understood as gendered. However, I argue that in the light of a contemporary payback of evangelization related to the original ‘gift of faith’ to the Americas, this economy of the gift is less liberatory than Maldonado-Torres imagines, and instead part of a polyfaceted reproduction of a postsecular neoliberal affective, and gendered labour regime.

Keywords: Transnational migration; Catholicism; economy of the gift; de Certeau; Atlantic Return; Latin America; Rome.

Author affiliation: Valentina Napolitano is an Associate Professor in the Department of Anthropology and the Director of the Latin American Studies Program, University of Toronto.

*Correspondence: Department of Anthropology, University of Toronto, 19 Russell St., Toronto, M5S 2S2, Canada. E-mail: [email protected]

This work is licensed under a Creative Commons Attribution License (3.0). Religion and Gender | ISSN: 1878-5417 | www.religionandgender.org | Igitur publishing

Body Work and the Work of the Body

Journal of Moral Theology, Vol. 2, No. 1 (2013): 113-131

Body Work and the Work of the Body. Jeffrey P. Bishop

The cult of the body beautiful, symbolizing the sacrament of life, is celebrated everywhere with the earnest vigour that characterized ritual in the major religions; beauty is the last remaining manifestation of the sacred in societies that have abolished all the others. Hervé Juvin

THE WORLD OF MEDICINE has always been focused on bodies—typically ailing bodies. Yet recently, say over the last century or so, medicine has gradually shifted its emphasis away from the ailing body, turning its attention to the body in health. Thus, it seems that the meaning of the body has shifted for contemporary medicine and that it has done so precisely because our approach to the body has changed. Put in more philosophical terms, our metaphysical assumptions about the body, that is to say our ontologies (and even the implied theologies) of the body, come to shape the way that a culture and its medicine come to treat the body. In other words, the ethics of the body is tied to the assumed metaphysics of the body.1

This attitude toward the body is clear in the rise of certain contemporary cultural practices. In one sense, the body gets more attention than it ever has, as evident in the rise of the cosmetics industry, massage studios, exercise gyms, not to mention the attention given to the body by the medical community with the rise in cosmetic dermatology, cosmetic dentistry, Botox injections, and cosmetic surgery.

February 2012

The Park Press. Winter Park | Baldwin Park | College Park | Maitland. FEBRUARY 2012. FREE

Inside: Duck Derby Winner (10); a Makeover (15); Technology allows Creativity (18)

It’s Great To be Grand! – by Tricia Cable

Teachers, in general, are some of the most thoughtful human beings on the planet. They certainly don’t become teachers because of the pay, and many of the professional educators in my circle spend a great deal of their hard-earned wages on classroom supplies and other items in an effort to be the very best teachers for our children. John F. Kennedy once said “Our progress as a nation can be no swifter than our progress in education. The human mind is our fundamental resource.”

The kindergarten team at Grand Avenue Primary Learning Center takes that [...] the doctor’s visits, helping to pay for medicine, paying for children with impaired vision to get glasses, and on occasion, paying their rent to avoid eviction.

But the team of five women who take on the responsibility to educate the 88 five- and six-year-olds who attend kindergarten there are not only incredibly dedicated and competent, but also a very compassionate and caring group. “Students here are such sweet and inquisitive boys and girls,” explains team leader Sarah Schneider. “They tend to lack the knowledge of the world beyond their own neighborhood, and that is where we come in,” she continues.

Photo caption: Kindergarten Team Leader Sarah Schneider with a group of her

Polish Musicians Merge Art, Business the INAUGURAL EDITION of JAZZ FORUM SHOWCASE POWERED by Szczecin Jazz—Which Ran from Oct

DECEMBER 2019, VOLUME 86 / NUMBER 12

President: Kevin Maher; Publisher: Frank Alkyer; Editor: Bobby Reed; Reviews Editor: Dave Cantor; Contributing Editor: Ed Enright; Creative Director: Žaneta Čuntová; Design Assistant: Will Dutton; Assistant to the Publisher: Sue Mahal; Bookkeeper: Evelyn Oakes

ADVERTISING SALES

Record Companies & Schools: Jennifer Ruban-Gentile, Vice President of Sales, 630-359-9345, [email protected]

Musical Instruments & East Coast Schools: Ritche Deraney, Vice President of Sales, 201-445-6260, [email protected]

Advertising Sales Associate: Grace Blackford, 630-359-9358, [email protected]

OFFICES

102 N. Haven Road, Elmhurst, IL 60126–2970; 630-941-2030 / Fax: 630-941-3210; http://downbeat.com; [email protected]

CUSTOMER SERVICE

877-904-5299 / [email protected]

CONTRIBUTORS

Senior Contributors: Michael Bourne, Aaron Cohen, Howard Mandel, John McDonough

Atlanta: Jon Ross; Boston: Fred Bouchard, Frank-John Hadley; Chicago: Alain Drouot, Michael Jackson, Jeff Johnson, Peter Margasak, Bill Meyer, Paul Natkin, Howard Reich; Indiana: Mark Sheldon; Los Angeles: Earl Gibson, Andy Hermann, Sean J. O’Connell, Chris Walker, Josef Woodard, Scott Yanow; Michigan: John Ephland; Minneapolis: Andrea Canter; Nashville: Bob Doerschuk; New Orleans: Erika Goldring, Jennifer Odell; New York: Herb Boyd, Bill Douthart, Philip Freeman, Stephanie Jones, Matthew Kassel, Jimmy Katz, Suzanne Lorge, Phillip Lutz, Jim Macnie, Ken Micallef, Bill Milkowski, Allen Morrison, Dan Ouellette, Ted Panken, Tom Staudter, Jack Vartoogian; Philadelphia: Shaun Brady; Portland: Robert Ham; San Francisco: Yoshi Kato, Denise Sullivan; Seattle: Paul de Barros; Washington, D.C.: Willard Jenkins, John Murph, Michael Wilderman; Canada: J.D. Considine, James Hale; France: Jean Szlamowicz; Germany: Hyou Vielz; Great Britain: Andrew Jones; Portugal: José Duarte; Romania: Virgil Mihaiu; Russia: Cyril Moshkow; South Africa: Don Albert.

The Complete Guide to Social Media from the Social Media Guys

The Complete Guide to Social Media From The Social Media Guys

PDF generated using the open source mwlib toolkit. See http://code.pediapress.com/ for more information. PDF generated at: Mon, 08 Nov 2010 19:01:07 UTC

Contents

Articles: Social media; Social web; Social media measurement; Social media marketing; Social media optimization; Social network service; Digg; Facebook; LinkedIn; MySpace; Newsvine; Reddit; StumbleUpon; Twitter; YouTube; XING

References: Article Sources and Contributors; Image Sources, Licenses and Contributors

Article Licenses: License

Social media

Social media are media for social interaction, using highly accessible and scalable publishing techniques. Social media uses web-based technologies to turn communication into interactive dialogues. Andreas Kaplan and Michael Haenlein define social media as "a group of Internet-based applications that build on the ideological and technological foundations of Web 2.0, which allows the creation and exchange of user-generated content."[1] Businesses also refer to social media as consumer-generated media (CGM). Social media utilization is believed to be a driving force in defining the current time period as the Attention Age. A common thread running through all definitions of social media is a blending of technology and social interaction for the co-creation of value.

Distinction from industrial media

People gain information, education, news, etc., by electronic media and print media. Social media are distinct from industrial or traditional media, such as newspapers, television, and film. They are relatively inexpensive and accessible to enable anyone (even private individuals) to publish or access information, compared to industrial media, which generally require significant resources to publish information.

Dating Expert and Digital Matchmaker Julie Spira

Dating Expert and Digital Matchmaker Julie Spira Bestselling Author • Online Dating Expert • Media Personality America’s Top Online Dating Expert • The Digital Matchmaker • Relationship Coach • “Top 10 Advice Columnist to Follow on Twitter” • Most influential person in “Dating” and “Online Dating” on social media influence site Klout • Founder of CyberDatingExpert.com • Ranks at the top of a Google search for “online dating expert”, “ghosting expert”, “mobile dating expert” and “Internet dating expert” • Creator of Online Dating Boot Camp and Mobile Dating Boot Camp • Spokesperson for lifestyle brands • Advice appears on eHarmony, Huffington Post, JDate, Match, PlentyofFish, and Zoosk Media Quotes “She’s truly the pioneer of online dating” ~Cosmopolitan “One of America’s ultimate experts” ~Woman’s World magazine “Julie is a top notch professional and rare expert in the MODERN attributes of dating. She knows old school etiquette with new school technology. Beyond just knowing her game, Julie is talented in explaining her science both on and off air!” ~ FOX 11 News As Seen On ABC News WPIX – NY E! Entertainment Good Day LA Good Morning America Hallmark Channel Julie Spira Has Appeared In the News Over 1000 Times ABC News • ABC TV, Los Angeles, San Francisco • AOL • Ask Men • Associated Press • BBC • Baltimore Sun • Betty Confidential • Blog World • Bottom Line Personal • Boston Globe • The Brooklyn Paper • Buffalo News • Bustle • Business Insider • Buzzfeed • Campus Explorer • Canadian Living • Canadian TV • CBC • CBS News • CBS Radio • CCTV • Christian Science Monitor • CNBC • CNET • CNN • College Magazine • College Times • Columbia News Service • Cosmopolitan • Cosmo Radio • Crain’s New York Business • Cult of Mac • Cupid’s Pulse • Daily Beast • Daily Kansan • Daily Mail • Dallas News • Denver Post • Detroit Free Press • Digital LA • Dr.