The Microeconomics of Microprocessor Innovation

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

MBP4ASG41M-VS3.Pdf

ASRock > G41M-VS3 (Page 1 of 2). G41M-VS3 Motherboard Series. Overview & Specifications ■ Supports FSB1333/1066/800/533 MHz CPUs ■ Supports Dual Channel DDR3 1333(OC) ■ Intel® Graphics Media Accelerator X4500, Pixel Shader 4.0, DirectX 10, Max. shared memory 1759MB ■ EuP Ready ■ Supports ASRock XFast RAM, XFast LAN, XFast USB Technologies ■ Supports Instant Boot, Instant Flash, OC DNA, ASRock OC Tuner (Up to 158% CPU frequency increase) ■ Supports Intelligent Energy Saver (Up to 20% CPU Power Saving) ■ Free Bundle: CyberLink DVD Suite - OEM and Trial; Creative Sound Blaster X-Fi MB - Trial. This model may not be sold worldwide. Please contact your local dealer for the availability of this model in your region. Product Specifications. General: - LGA 775 for Intel® Core™ 2 Extreme / Core™ 2 Quad / Core™ 2 Duo / Pentium® Dual Core / Celeron® Dual Core / Celeron, supporting Penryn Quad Core Yorkfield and Dual Core Wolfdale processors - Supports FSB1333/1066/800/533 MHz CPU - Supports Hyper-Threading Technology - Supports Untied Overclocking Technology - Supports EM64T CPU - Northbridge: Intel® G41 Chipset - Southbridge: Intel® ICH7. Memory: - Dual Channel DDR3 memory technology - 2 x DDR3 DIMM slots - Supports DDR3 1333(OC)/1066/800 non-ECC, un-buffered memory - Max. capacity of system memory: 8GB* *Due to the operating system limitation, the actual memory size may be less than 4GB for the reservation for system usage under Windows® 32-bit OS. For Windows® 64-bit OS with 64-bit CPU, there is no such limitation.

Evolution of Microprocessor Performance

Evolution of Microprocessor Performance. So far we examined static & dynamic techniques to improve the performance of single-issue (scalar) pipelined CPU designs, including static & dynamic scheduling and static & dynamic branch prediction. Even with these improvements, the restriction of issuing a single instruction per cycle still limits the ideal CPI to 1. [Figure: multi-cycle, pipelined (single issue) and multiple-issue (superscalar/VLIW/SMT, CPI < 1) designs, with T = I x CPI x C; CPI ranges shown: > 10, 1.1-10, 0.5-1.1, 0.35-0.5 (?); original (2002) Intel predictions: 1 GHz, 15 GHz, ???? GHz. Source: John P. Chen, Intel Labs.] We next examine the two approaches to achieve a CPI < 1 by issuing multiple instructions per cycle (4th Edition: Chapter 2.6-2.8; 3rd Edition: Chapter 3.6, 3.7, 4.3): • Superscalar CPUs • Very Long Instruction Word (VLIW) CPUs. Single-issue Processor = Scalar Processor. Instructions Per Cycle (IPC) = 1/CPI. (EECC551 - Shaaban, lec # 6, Fall 2007, 10-2-2007.) Parallelism in Microprocessor VLSI Generations. [Figure: bit-level parallelism, instruction-level parallelism (ILP) and thread-level parallelism (TLP) (?); vertical axis marked 1,000,000 to 100,000,000. Labels: not pipelined, CPI >> 1 (multi-cycle non-pipelined); single-issue pipelined, CPI = 1; superscalar/VLIW, CPI < 1, multiple micro-operations per cycle; Simultaneous Multithreading (SMT), e.g. Intel’s Hyper-threading; Chip-Multiprocessors (CMPs), e.g. IBM Power 4, 5, AMD Athlon64 X2, Intel Pentium D. Data points include i80286, i80386, Pentium, R10000.]
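The two relations quoted in the snippet above, T = I x CPI x C and IPC = 1/CPI, can be tried out with a few lines of Python. This is only a minimal sketch: the function names and the workload figures (one billion instructions, a 1 GHz clock) are assumptions chosen for illustration and do not come from the lecture.

```python
def cpu_time(instructions: int, cpi: float, cycle_time_s: float) -> float:
    """Execution time T = I x CPI x C, in seconds."""
    return instructions * cpi * cycle_time_s


def ipc(cpi: float) -> float:
    """Instructions per cycle is the reciprocal of cycles per instruction."""
    return 1.0 / cpi


if __name__ == "__main__":
    # Hypothetical workload: 1e9 instructions on a 1 GHz clock (C = 1 ns).
    instructions = 10**9
    cycle_time_s = 1e-9

    # A scalar pipeline is bounded by CPI = 1; a multiple-issue design
    # can go below it (0.5 is an assumed value for illustration).
    for label, cpi in [("scalar, CPI = 1.0", 1.0), ("multiple issue, CPI = 0.5", 0.5)]:
        t = cpu_time(instructions, cpi, cycle_time_s)
        print(f"{label}: T = {t:.3f} s, IPC = {ipc(cpi):.2f}")
```

Holding I and C fixed, halving CPI halves T, which is why the snippet treats multiple issue as the way past the single-issue limit.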

Conroe and Allendale Electrical, Mechanical, and Thermal

Intel® Xeon® Processor 5500 Series Datasheet, Volume 1 March 2009 Document Number: 321321-001 INFORMATION IN THIS DOCUMENT IS PROVIDED IN CONNECTION WITH INTEL® PRODUCTS. NO LICENSE, EXPRESS OR IMPLIED, BY ESTOPPEL OR OTHERWISE, TO ANY INTELLECTUAL PROPERTY RIGHTS IS GRANTED BY THIS DOCUMENT. EXCEPT AS PROVIDED IN INTEL'S TERMS AND CONDITIONS OF SALE FOR SUCH PRODUCTS, INTEL ASSUMES NO LIABILITY WHATSOEVER, AND INTEL DISCLAIMS ANY EXPRESS OR IMPLIED WARRANTY, RELATING TO SALE AND/OR USE OF INTEL PRODUCTS INCLUDING LIABILITY OR WARRANTIES RELATING TO FITNESS FOR A PARTICULAR PURPOSE, MERCHANTABILITY, OR INFRINGEMENT OF ANY PATENT, COPYRIGHT OR OTHER INTELLECTUAL PROPERTY RIGHT. Intel products are not intended for use in medical, life saving, or life sustaining applications. Intel may make changes to specifications and product descriptions at any time, without notice. Designers must not rely on the absence or characteristics of any features or instructions marked “reserved” or “undefined.” Intel reserves these for future definition and shall have no responsibility whatsoever for conflicts or incompatibilities arising from future changes to them. The Intel® Xeon® Processor 5500 Series may contain design defects or errors known as errata which may cause the product to deviate from published specifications. Current characterized errata are available on request. Intel processor numbers are not a measure of performance. Processor numbers differentiate features within each processor family, not across different processor families. See http://www.intel.com/products/processor_number for details. Over time processor numbers will increment based on changes in clock, speed, cache, FSB, or other features, and increments are not intended to represent proportional or quantitative increases in any particular feature.

Multiprocessing Contents

Multiprocessing. Contents: 1 Multiprocessing: 1.1 Pre-history; 1.2 Key topics; 1.2.1 Processor symmetry; 1.2.2 Instruction and data streams; 1.2.3 Processor coupling; 1.2.4 Multiprocessor Communication Architecture; 1.3 Flynn’s taxonomy; 1.3.1 SISD multiprocessing; 1.3.2 SIMD multiprocessing; 1.3.3 MISD multiprocessing; 1.3.4 MIMD multiprocessing; 1.4 See also; 1.5 References. 2 Computer multitasking: 2.1 Multiprogramming; 2.2 Cooperative multitasking; 2.3 Preemptive multitasking; 2.4 Real time; 2.5 Multithreading; 2.6 Memory protection; 2.7 Memory swapping; 2.8 Programming; 2.9 See also; 2.10 References.

Intel® Core™ Microarchitecture • Wrap Up

Intel® Core™ Microarchitecture, March 8, 2006. Stephen L. Smith, Vice President, Digital Enterprise Group; Bob Valentine, Architect, Intel Architecture Group. Agenda • Multi-core Update and New Microarchitecture Level Set • New Intel® Core™ Microarchitecture • Wrap Up. Intel Multi-core Roadmap – Updates since Fall IDF. Ramping Multi-core Everywhere. Refresher: What is Multi-Core? Two or more independent execution cores in the same processor. Specific implementations will vary over time, driven by product implementation and manufacturing efficiencies • Best mix of product architecture and volume mfg capabilities – Architecture: Shared Caches vs. Independent Caches – Mfg capabilities: volume packaging technology • Designed to deliver performance, OEM and end user experience. Single die (monolithic) based processor examples: 90nm Pentium® D Processor (Smithfield) and Intel Core™ Duo Processor (Yonah). Multi-chip processor example: 65nm Pentium D Processor (Presler). Each diagram shows Core0 and Core1 on a Front Side Bus (not representative of actual die photos or relative size). Intel® Core™ Microarchitecture Based Platforms, 2006-2007: MP Servers: Caneland Platform (2007), Tigerton (QC) (2007); DP Servers/DP Workstation: Bensley Platform (Q2’06)/Glidewell Platform (Q2’06), Woodcrest (Q3’06), Clovertown (QC) (Q1’07); UP Servers/UP Workstation: Kaylo Platform (Q3’06)/Wyloway Platform (Q3’06), Conroe (Q3’06), Kentsfield (QC) (Q1’07); Desktop - Home: Bridge Creek Platform (Mid’06), Conroe (Q3’06), Kentsfield (QC) (Q1’07); Desktop - Office: Averill Platform (Mid’06), Conroe (Q3’06); Mobile Client: Napa Platform (Q1’06), Merom (2H’06). Note: only Intel® Core™ microarchitecture; QC refers to Quad-Core. All products and dates are preliminary and subject to change without notice.

Nt* and Rtl* INT 2Eh CALL Ntdll!Kifastsystemcall

SFG: Furtim’s Derivative. By Joseph Landry and Udi Shamir. The Labs team at SentinelOne recently discovered a sophisticated malware campaign specifically targeting at least one European energy company. Upon discovery, the team reverse engineered the code and believes that based on the nature, behavior and sophistication of the malware and the extreme measures it takes to evade detection, it likely points to a nation-state sponsored initiative, potentially originating in Eastern Europe. The malware is most likely a dropper tool being used to gain access to carefully targeted network users, which is then used either to introduce the payload, which could either work to extract data or insert the malware to potentially shut down an energy grid. The exploit affects all versions of Microsoft Windows and has been developed to bypass traditional antivirus solutions, next-generation firewalls, and even more recent endpoint solutions that use sandboxing techniques to detect advanced malware. (biometric readers are non-relevant to the bypass / detection techniques, the malware will stop executing if it detects the presence of specific biometric vendor software). We believe the malware was released in May of this year and is still active. It exhibits traits seen in previous nation-state Rootkits, and appears to have been designed by multiple developers with high-level skills and access to considerable resources. We validated this malware campaign against SentinelOne and confirmed the steps outlined below were detected by our Dynamic Behavior Tracking (DBT) engine. Malware Synopsis: This sample was written in a manner to evade static and behavioral detection. Many anti-sandboxing techniques are utilized.

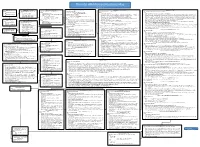

The Intel X86 Microarchitectures Map Version 2.0

The Intel x86 Microarchitectures Map Version 2.0 P6 (1995, 0.50 to 0.35 μm) 8086 (1978, 3 µm) 80386 (1985, 1.5 to 1 µm) P5 (1993, 0.80 to 0.35 μm) NetBurst (2000 , 180 to 130 nm) Skylake (2015, 14 nm) Alternative Names: i686 Series: Alternative Names: iAPX 386, 386, i386 Alternative Names: Pentium, 80586, 586, i586 Alternative Names: Pentium 4, Pentium IV, P4 Alternative Names: SKL (Desktop and Mobile), SKX (Server) Series: Pentium Pro (used in desktops and servers) • 16-bit data bus: 8086 (iAPX Series: Series: Series: Series: • Variant: Klamath (1997, 0.35 μm) 86) • Desktop/Server: i386DX Desktop/Server: P5, P54C • Desktop: Willamette (180 nm) • Desktop: Desktop 6th Generation Core i5 (Skylake-S and Skylake-H) • Alternative Names: Pentium II, PII • 8-bit data bus: 8088 (iAPX • Desktop lower-performance: i386SX Desktop/Server higher-performance: P54CQS, P54CS • Desktop higher-performance: Northwood Pentium 4 (130 nm), Northwood B Pentium 4 HT (130 nm), • Desktop higher-performance: Desktop 6th Generation Core i7 (Skylake-S and Skylake-H), Desktop 7th Generation Core i7 X (Skylake-X), • Series: Klamath (used in desktops) 88) • Mobile: i386SL, 80376, i386EX, Mobile: P54C, P54LM Northwood C Pentium 4 HT (130 nm), Gallatin (Pentium 4 Extreme Edition 130 nm) Desktop 7th Generation Core i9 X (Skylake-X), Desktop 9th Generation Core i7 X (Skylake-X), Desktop 9th Generation Core i9 X (Skylake-X) • Variant: Deschutes (1998, 0.25 to 0.18 μm) i386CXSA, i386SXSA, i386CXSB Compatibility: Pentium OverDrive • Desktop lower-performance: Willamette-128 -

ECE 571 – Advanced Microprocessor-Based Design Lecture 16

ECE 571 – Advanced Microprocessor-Based Design, Lecture 16. Vince Weaver, http://www.eece.maine.edu/~vweaver, 31 March 2016. Announcements • Project topics • HW#8 will be similar, about a modern ARM chip. Busses • Grey Code, only one bit change when incrementing. Lower energy on busses? (Su and Despain, ISLPED 1995). Reading of the Webpage http://anandtech.com/show/9582/intel-skylake-mobile-desktop-launch-architecture-analysis/ The Intel Skylake Mobile and Desktop Launch, with Architecture Analysis by Ian Cutress. Background on where info comes from: Intel Developer Forum. This one was in August. Name, tech, tick/tock, year: Conroe/Merom, 65nm, Tock, 2006; Penryn, 45nm, Tick, 2007; Nehalem, 45nm, Tock, 2008; Westmere, 32nm, Tick, 2010; Sandy Bridge, 32nm, Tock, 2011; Ivy Bridge, 22nm, Tick, 2012; Haswell, 22nm, Tock, 2013; Broadwell, 14nm, Tick, 2014; Skylake, 14nm, Tock, 2015; Kaby Lake?, 14nm, Tock, 2016. Clock: tick-tock. Upgrade the process technology, then revamp the uarch. 14nm technology? Finfets? What technology are Pis at? 40nm? 14nm yields getting better. hard to get, even with electron beam lithography plasma damage to low-k silicon only 0.111nm finfet. Intel has plants Arizona, etc. Delay to 10nm 7nm? EUV? Skylake Processor – Page 1 • 4.5W ultra-mobile to 65W desktop. They release desktop first these days. For example just today releasing “Xeon E5-2600 v4” AKA Broadwell-EP. Confusing naming: i3, i5, i7, Xeon, Pentium, m3, m5, m7, etc. Number of pins important. Low-power stuck with LPDDR3/DDR3L instead of DDR4 possibly due to lack of pins? eDRAM? Intel no longer releasing info on how many transistors/transistor size? Skylake Processor – Page 2 • “mobile first” design.
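The bus note in the ECE 571 snippet above (Grey code changes only one bit per increment, which may lower bus energy) can be illustrated by counting bit flips. The sketch below uses the standard binary-reflected Gray code, `n ^ (n >> 1)`. The helper names and the 4-bit counting sequence are assumptions for illustration, and the flip count is only a rough proxy for switching activity, not the energy model from the cited paper.

```python
def to_gray(n: int) -> int:
    """Binary-reflected Gray code of a non-negative integer."""
    return n ^ (n >> 1)


def bit_flips(a: int, b: int) -> int:
    """Number of bus lines that toggle when the value changes from a to b."""
    return bin(a ^ b).count("1")


def total_flips(values: list[int]) -> int:
    """Total toggles over a sequence of consecutive bus values."""
    return sum(bit_flips(x, y) for x, y in zip(values, values[1:]))


if __name__ == "__main__":
    # Hypothetical 4-bit address bus counting upward from 0 to 15.
    binary_seq = list(range(16))
    gray_seq = [to_gray(n) for n in binary_seq]

    print("binary toggles:", total_flips(binary_seq))
    print("gray toggles:  ", total_flips(gray_seq))
```

Each Gray-coded increment toggles exactly one line, so the Gray total equals the number of increments, while plain binary counting toggles several lines whenever a carry ripples.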

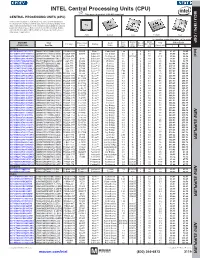

CPU) MCU / MPU / DSP This Page of Product Is Rohs Compliant

INTEL Central Processing Units (CPU). MCU / MPU / DSP. This page of product is RoHS compliant. Intel Processor families include the most powerful and flexible Central Processing Units (CPUs) available today. Utilizing industry leading 22nm device fabrication techniques, Intel continues to pack greater processing power into smaller spaces than ever before, providing desktop, mobile, and embedded products with maximum performance per watt across a wide range of applications. Families: Atom, Celeron, Core, Pentium, Xeon. For quantities greater than listed, call for quote.

Desktop:

| Mouser Stock No. | Intel Part No. | Package | Processor Series | Family | Code Name | Core Freq. (GHz) | Cache Size (MB) | No. of Cores | Data Bus Width (bit) | TDP (Max) (W) | Price Each (1) | Price Each (10) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 607-DF8064101211300Y | DF8064101211300S R0VY | FCBGA-559 | D2550 | Atom™ | Cedarview | 1.86 | 1 | 2 | 64 | 10 | 61.60 | 59.40 |
| 607-CM8063701444901S | CM8063701444901S R10K | FCLGA-1155 | G1610 | Celeron® | Ivy Bridge | 2.6 | 2 | 2 | 64 | 55 | 54.93 | 52.70 |
| 607-RK80532RC041128S | RK80532RC041128S L6VR | PPGA-478 | - | Celeron® | Northwood | 2.0 | 0.0156 | 1 | 32 | 52.8 | 42.00 | 40.50 |
| 607-CM8062301046804S | CM8062301046804S R05J | FCLGA-1155 | G540 | Celeron® | Sandy Bridge | 2.5 | 2 | 2 | 64 | 65 | 54.60 | 52.65 |
| 607-AT80571RG0641MLS | AT80571RG0641MLS LGTZ | LGA-775 | E3400 | Celeron® | Wolfdale | 2.6 | 1 | 2 | 64 | 65 | 54.93 | 52.70 |
| 607-HH80557PG0332MS | HH80557PG0332MS LA99 | LGA-775 | E4300 | Core™ 2 | Conroe | 1.8 | 2 | 2 | 64 | 65 | 139.44 | 133.78 |
| 607-AT80570PJ0806MS | AT80570PJ0806MS LB9J | LGA-775 | E8400 | Core™ 2 | Wolfdale | 3.0 | 6 | 2 | 64 | 65 | 207.04 | 196.00 |
| 607-AT80571PH0723MLS | AT80571PH0723MLS LGW3 | LGA-775 | E7400 | Core™ 2 | Wolfdale | | | | | | | |

Historical Perspective

Historical Perspective. Ramon Canal, CTD – Master CANS. Agenda • Back in the old days • Back to the future. The First Computer: The Babbage Difference Engine (1832), 25,000 parts, cost: £17,470. ENIAC - The first electronic computer (1946). The Transistor Revolution: first transistor, Bell Labs, 1948. The First Integrated Circuits: bipolar logic, 1960’s; ECL 3-input gate, Motorola 1966. Intel 4004 Micro-Processor: 1971, 1000 NMOS transistors, 1 MHz operation. Intel Westmere microprocessor (6C): 2010, 1.17B CMOS transistors, ~3 GHz operation, 32nm. AMD “Istanbul” microprocessor: 2009/2010, 900+ M CMOS transistors, ~3 GHz operation, 45nm. Agenda • Back in the old days • Back to the future. [Figure: Moore’s Law. Log2 of the number of components per integrated function, 1959-1975. Source: Electronics, April 19, 1965.] [Figure: Transistor number, in K transistors (1 to 1,000,000, i.e. 1 billion), 1975-2010, with prediction: 8086, 80286, i386, i486, Pentium®, Pentium® Pro, Pentium® II, Pentium® III, Pentium IV, Conroe.] [Figure: Clock rate. Frequency (MHz), 0.1 to 10000, 1970-2010; doubles every 2 years: 4004, 8008, 8080, 8085, 8086, 286, 386, 486, Pentium® proc, P6, Netburst, Conroe.] [Figure: Power density (Watts/cm2), 1 to 1000: i386, i486, Pentium®, Pentium® Pro, Pentium® II, Pentium® III, Pentium® 4, compared with hot plate, nuclear reactor and rocket nozzle. Source: “New Microarchitecture Challenges in the Coming Generations of CMOS Process Technologies”, Fred Pollack, Intel Corp.]

Intel Desktop CPU Roadmap

Intel Desktop CPU Roadmap 2004 2005 2008 2009 2010 System Price 2006 2007 TDP Q4 Q1 Q2 Q3 Q4 Q1 Q2 Q3 Q4 Q1 Q2 Q3 Q4 Q1 Q2 Q3 Q4 Q1 Q2 Q3 Q4 Q1 1H Q2 3.73GHz Core2 Extreme Kentsfield Core2 Extreme Kentsfield QX9775 (3.2GHz/ Northwood Presler QX6850 (3GHz/ 12MB/FSB1600) QX Prescott 2M 3.2GHz(840) QX6700 (2.66GHz/ Core2 Extreme 6 cores/12MB L3 3.46GHz(955) 3.73GHz(965) 8MB/FSB1333) QX9770 (3.2GHz/ Bloomfield Extreme ≧ Extreme 3.46GHz 8MB/FSB1066) 965 (3.2GHz/8MB/ Smithfield-XE QX6800 (2.93GHz/ 12MB/FSB1600) (XE) 130W Pentium 2.93GHz(X6800) 8MB/FSB1066) Yorkfield QPI6.4/DDR3 1066) Pentium 4 3.8GHz(670) Extreme Edition QX9650 (3GHz/ Extreme Edition Conroe XE 12MB/FSB1333) Core i7 Bloomfield $900 Prescott Presler Core2 Quad Yorkfield 940 (2.93GHz/8MB/ 3.8GHz(571) 3.8GHz(672) 3.4GHz(950) 3.6GHz(960) Kentsfield Q9550 (2.83GHz/ QPI4.8/DDR3 1066) 3.8GHz(570) Lynnfield 4cores/PCIe x16 Conroe Q6600 (2.4GHz/ 12MB/FSB1333) Westmere Performance Performance 3.8GHz(670) 8MB/FSB1066) Q6700 (2.66GHz/ 2.xxGHz/8MB/ Prescott 3.6GHz(660) PCIe/DDR3 1066 6 cores (P1) Prescott 2M 3.2GHz(940) Pentium D 2.66GHz(E6700) 8MB/FSB1066) Prescott 2M Q9650 (3GHz/ 3.2GHz(840) 9xx 12MB/FSB1333) Core2 Duo Nehalem/Core 2 $4xx Smithfield Pentium D Boundary 8xx Cedar Mill Core2 Quad Q9550 (2.83GHz/ 3.6GHz(662) Conroe Yorkfield 12MB/FSB1333) 3.6GHz(560) 3.6GHz(561) 3.6GHz(661) 2.40GHz(E6600) 2.66GHz(E6700) 920 (2.66GHz/8MB/ 3.6GHz(660) Q9450 (2.66GHz/ QPI4.8/DDR3 1066) $300 Q Mainstream 3 12MB/FSB1333) Bloomfield 2.xxGHz/8MB/ Lynnfield 3.4GHz(650) Core i7 95W 3GHz(930) 3.4GHz(950) -

The Intel X86 Microarchitectures Map Version 2.2

The Intel x86 Microarchitectures Map Version 2.2 P6 (1995, 0.50 to 0.35 μm) 8086 (1978, 3 µm) 80386 (1985, 1.5 to 1 µm) P5 (1993, 0.80 to 0.35 μm) NetBurst (2000 , 180 to 130 nm) Skylake (2015, 14 nm) Alternative Names: i686 Series: Alternative Names: iAPX 386, 386, i386 Alternative Names: Pentium, 80586, 586, i586 Alternative Names: Pentium 4, Pentium IV, P4 Alternative Names: SKL (Desktop and Mobile), SKX (Server) Series: Pentium Pro (used in desktops and servers) • 16-bit data bus: 8086 (iAPX Series: Series: Series: Series: • Variant: Klamath (1997, 0.35 μm) 86) • Desktop/Server: i386DX Desktop/Server: P5, P54C • Desktop: Willamette (180 nm) • Desktop: Desktop 6th Generation Core i5 (Skylake-S and Skylake-H) • Alternative Names: Pentium II, PII • 8-bit data bus: 8088 (iAPX • Desktop lower-performance: i386SX Desktop/Server higher-performance: P54CQS, P54CS • Desktop higher-performance: Northwood Pentium 4 (130 nm), Northwood B Pentium 4 HT (130 nm), • Desktop higher-performance: Desktop 6th Generation Core i7 (Skylake-S and Skylake-H), Desktop 7th Generation Core i7 X (Skylake-X), • Series: Klamath (used in desktops) 88) • Mobile: i386SL, 80376, i386EX, Mobile: P54C, P54LM Northwood C Pentium 4 HT (130 nm), Gallatin (Pentium 4 Extreme Edition 130 nm) Desktop 7th Generation Core i9 X (Skylake-X), Desktop 9th Generation Core i7 X (Skylake-X), Desktop 9th Generation Core i9 X (Skylake-X) • New instructions: Deschutes (1998, 0.25 to 0.18 μm) i386CXSA, i386SXSA, i386CXSB Compatibility: Pentium OverDrive • Desktop lower-performance: Willamette-128