Register Machines

Recommended publications

Large but Finite

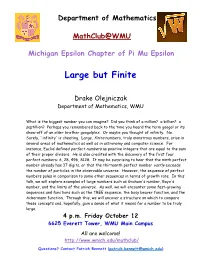

Department of Mathematics, MathClub@WMU, Michigan Epsilon Chapter of Pi Mu Epsilon. Large but Finite. Drake Olejniczak, Department of Mathematics, WMU. What is the biggest number you can imagine? Did you think of a million? A billion? A septillion? Perhaps you remembered back to the time you heard the term googol or its show-off of an older brother, googolplex. Or maybe you thought of infinity. No. Surely, "infinity" is cheating. Large, finite numbers, truly monstrous numbers, arise in several areas of mathematics as well as in astronomy and computer science. For instance, Euclid defined perfect numbers as positive integers that are equal to the sum of their proper divisors. He is also credited with the discovery of the first four perfect numbers: 6, 28, 496, 8128. It may be surprising to hear that the ninth perfect number already has 37 digits, or that the thirteenth perfect number vastly exceeds the number of particles in the observable universe. However, the sequence of perfect numbers pales in comparison to some other sequences in terms of growth rate. In this talk, we will explore examples of large numbers such as Graham's number, Rayo's number, and the limits of the universe. As well, we will encounter some fast-growing sequences and functions such as the TREE sequence, the busy beaver function, and the Ackermann function. Through this, we will uncover a structure on which to compare these concepts and, hopefully, gain a sense of what it means for a number to be truly large. 4 p.m., Friday, October 12, 6625 Everett Tower, WMU Main Campus. All are welcome! http://www.wmich.edu/mathclub/ Questions? Contact Patrick Bennett ([email protected]).
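
The definition of a perfect number quoted above can be checked directly. The following is a minimal sketch, not taken from the talk: the function names are illustrative, and the last lines assume the Euclid-Euler form 2^(p-1)(2^p - 1) with the Mersenne exponent p = 61 for the ninth perfect number.

```python
def proper_divisor_sum(n: int) -> int:
    """Return the sum of the divisors of n that are smaller than n."""
    if n < 2:
        return 0
    total = 1  # 1 is a proper divisor of every n >= 2
    d = 2
    while d * d <= n:
        if n % d == 0:
            total += d
            if d != n // d:  # avoid counting a square root twice
                total += n // d
        d += 1
    return total


def is_perfect(n: int) -> bool:
    """A perfect number equals the sum of its proper divisors."""
    return n > 1 and proper_divisor_sum(n) == n


# Brute-force search below 10,000 finds the four numbers credited to Euclid.
first_four = [n for n in range(2, 10_000) if is_perfect(n)]
print(first_four)

# Assumption: Euclid-Euler form with p = 61 for the ninth perfect number.
ninth = 2**60 * (2**61 - 1)
print(len(str(ninth)))  # number of decimal digits
```

Brute force is only practical for the first few perfect numbers; the later ones are far too large to find by trial division, which is the growth the abstract alludes to.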

PERL – a Register-Less Processor

PERL – A Register-Less Processor. A Thesis Submitted in Partial Fulfillment of the Requirements for the Degree of Doctor of Philosophy by P. Suresh to the Department of Computer Science & Engineering, Indian Institute of Technology, Kanpur, February 2004. Certificate: Certified that the work contained in the thesis entitled "PERL – A Register-Less Processor", by Mr. P. Suresh, has been carried out under my supervision and that this work has not been submitted elsewhere for a degree. (Dr. Rajat Moona) Professor, Department of Computer Science & Engineering, Indian Institute of Technology, Kanpur. February 2004. Synopsis: Computer architecture designs are influenced historically by three factors: market (users), software and hardware methods, and technology. Advances in fabrication technology are the most dominant factor among them. The performance of a processor is defined by a judicious blend of processor architecture, efficient compiler technology, and effective VLSI implementation. The choices for each of these strongly depend on the technology available for the others. Significant gains in the performance of processors are made due to the ever-improving fabrication technology that made it possible to incorporate architectural novelties such as pipelining, multiple instruction issue, on-chip caches, registers, branch prediction, etc. To supplement these architectural novelties, suitable compiler techniques extract performance by instruction scheduling, code and data placement, and other optimizations. The performance of a computer system is directly related to the time it takes to execute programs, usually known as execution time. The execution time (T) is expressed as the product of the number of instructions executed (N), the average number of machine cycles needed to execute one instruction (Cycles Per Instruction, or CPI), and the clock cycle time, as given in equation 1.
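
The product described in the synopsis can be written out as a small calculation. This is an illustrative sketch only: the function name, parameter names, and the sample figures are assumptions, not values from the thesis.

```python
def execution_time(instructions: int, cpi: float, cycle_time_s: float) -> float:
    """Execution time T = N * CPI * clock cycle time, in seconds."""
    return instructions * cpi * cycle_time_s


# Hypothetical workload: 1e9 instructions, CPI of 1.5, 1 ns cycle (1 GHz clock).
baseline = execution_time(1_000_000_000, 1.5, 1e-9)

# Halving the CPI, with N and the cycle time unchanged, halves T.
improved = execution_time(1_000_000_000, 0.75, 1e-9)

print(f"baseline: {baseline:.3f} s, improved: {improved:.3f} s")
```

Because the three factors multiply, an architectural change that lowers one factor only helps if it does not raise another by the same proportion.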

Parameter Free Induction and Provably Total Computable Functions

Parameter free induction and provably total computable functions. Lev D. Beklemishev, Steklov Mathematical Institute, Gubkina 8, 117966 Moscow, Russia, e-mail: [email protected]. November 20, 1997. Abstract: We give a precise characterization of parameter free $\Sigma_n$ and $\Pi_n$ induction schemata, $I\Sigma_n^-$ and $I\Pi_n^-$, in terms of reflection principles. This allows us to show that $I\Pi_{n+1}^-$ is conservative over $I\Sigma_n^-$ w.r.t. boolean combinations of $\Sigma_{n+1}$ sentences, for $n \ge 1$. In particular, we give a positive answer to a question, whether the provably recursive functions of $I\Pi_2^-$ are exactly the primitive recursive ones. We also characterize the provably recursive functions of theories of the form $I\Sigma_n + I\Pi_{n+1}^-$ in terms of the fast growing hierarchy. For $n = 1$ the corresponding class coincides with the doubly-recursive functions of Péter. We also obtain sharp results on the strength of bounded number of instances of parameter free induction in terms of iterated reflection. Keywords: parameter free induction, provably recursive functions, reflection principles, fast growing hierarchy. Mathematics Subject Classification: 03F30, 03D20. 1 Introduction: In this paper we shall deal with the first order theories containing Kalmar elementary arithmetic EA or, equivalently, $I\Delta_0 + \mathrm{Exp}$ (cf. [11]). We are interested in the general question how various ways of formal reasoning correspond to models of computation. This kind of analysis is traditionally based on the concept of provably total recursive function (p.t.r.f.) of a theory. Given a theory $T$ containing EA, a function $f(\vec{x})$ is called provably total recursive in $T$ iff there is a $\Sigma_1$ formula $\varphi(\vec{x}, y)$, sometimes called specification, that defines the graph of $f$ in the standard model of arithmetic and such that $T \vdash \forall \vec{x}\, \exists! y\, \varphi(\vec{x}, y)$.

Computer Architecture Basics

V03: Computer Architecture Basics. Prof. Dr. Anton Gunzinger, Supercomputing Systems AG, Technoparkstrasse 1, 8005 Zürich. Phone: +41 43 456 16 00, Fax: +41 43 456 16 10, Email: [email protected], Web: www.scs.ch. Zürich, 20.09.2019, © by Supercomputing Systems AG, CONFIDENTIAL.

Computer Architecture Basics

1. Basic Computer Architecture Diagram
2. Basic Computer Architectures
3. Von Neumann versus Harvard Architecture
4. Computer Performance Measurement
5. Technology Trends

1.1 Basic Computer Architecture Diagram: Sketch the basic computer architecture diagram. Describe the functions of the building blocks.

1.2 Basic Computer Architecture Diagram: Describe the execution of a single instruction.

2 Basic Computer Architecture: 2.1 Sketch an Accumulator Machine. 2.2 Sketch a Register Machine. 2.3 Sketch a Stack Machine. 2.4 Sketch the analysis of computing an expression in a Stack Machine. 2.5 Write the micro program for an Accumulator, a Register and a Stack Machine for the instruction C := A + B and estimate the number of cycles. 2.6 Write the micro program for an Accumulator, a Register and a Stack Machine for the instruction C := (A - B)^2 + C and estimate the number of cycles. 2.7 Compare Accumulator, Register and Stack Machine.

2.1 Accumulator Machine: Draw the Accumulator Machine.

Computers, Complexity, and Controversy

Instruction Sets and Beyond: Computers, Complexity, and Controversy. Robert P. Colwell, Charles Y. Hitchcock III, E. Douglas Jensen, H. M. Brinkley Sprunt, and Charles P. Kollar, Carnegie-Mellon University.

[…] issues. RISC […] have guided […] years. A study of […] yield a deeper understanding of hardware/software […], computer performance, the influence of VLSI on processor design, and many other topics. Articles on RISC research, however, often fail to explore these topics properly and can be misleading. Further, the few papers that present comparisons with complex instruction set computer design often do not address the same issues. As a result, even careful study of the literature is likely to give a distorted […]

Instruction set design is important, but it should not be driven solely by adherence to convictions about design style, RISC or CISC. The focus of discussion should be on the more general question of the assignment of system functionality to implementation levels within an architecture. This point of view encompasses the instruction set (CISCs tend to install functionality at lower system levels than RISCs) but also takes into account other design features such as register sets, coprocessors, and caches. While the implications of RISC research extend beyond the instruction set, even within the instruction set domain, there are limitations that have not been identified. Typical RISC papers give few clues about where the RISC approach might break down.

Pull quote: […] focus on the assignment of system functionality to implementation levels within an architecture, and not be guided by whether it is a RISC or CISC design.

Primality Testing for Beginners

STUDENT MATHEMATICAL LIBRARY, Volume 70. Primality Testing for Beginners. Lasse Rempe-Gillen, Rebecca Waldecker. http://dx.doi.org/10.1090/stml/070 American Mathematical Society, Providence, Rhode Island. Editorial Board: Satyan L. Devadoss, John Stillwell, Gerald B. Folland (Chair), Serge Tabachnikov. The cover illustration is a variant of the Sieve of Eratosthenes (Section 1.5), showing the integers from 1 to 2704 colored by the number of their prime factors, including repeats. The illustration was created using MATLAB. The back cover shows a phase plot of the Riemann zeta function (see Appendix A), which appears courtesy of Elias Wegert (www.visual.wegert.com). 2010 Mathematics Subject Classification. Primary 11-01, 11-02, 11Axx, 11Y11, 11Y16. For additional information and updates on this book, visit www.ams.org/bookpages/stml-70 Library of Congress Cataloging-in-Publication Data: Rempe-Gillen, Lasse, 1978– author. [Primzahltests für Einsteiger. English] Primality testing for beginners / Lasse Rempe-Gillen, Rebecca Waldecker. pages cm. (Student mathematical library ; volume 70) Translation of: Primzahltests für Einsteiger : Zahlentheorie - Algorithmik - Kryptographie. Includes bibliographical references and index. ISBN 978-0-8218-9883-3 (alk. paper) 1. Number theory. I. Waldecker, Rebecca, 1979– author. II. Title. QA241.R45813 2014 512.72-dc23 2013032423 Copying and reprinting. Individual readers of this publication, and nonprofit libraries acting for them, are permitted to make fair use of the material, such as to copy a chapter for use in teaching or research. Permission is granted to quote brief passages from this publication in reviews, provided the customary acknowledgment of the source is given.

The Notion of "Unimaginable Numbers" in Computational Number Theory

Beyond Knuth's notation for "Unimaginable Numbers" within computational number theory. Antonino Leonardis (1), Gianfranco d'Atri (2), Fabio Caldarola (3). (1) Department of Mathematics and Computer Science, University of Calabria, Arcavacata di Rende, Italy, e-mail: [email protected]. (2) Department of Mathematics and Computer Science, University of Calabria, Arcavacata di Rende, Italy. (3) Department of Mathematics and Computer Science, University of Calabria, Arcavacata di Rende, Italy, e-mail: [email protected]. arXiv:1901.05372v2 [cs.LO] 12 Mar 2019. Abstract: Literature considers under the name unimaginable numbers any positive integer going beyond any physical application, with this being more of a vague description of what we are talking about rather than an actual mathematical definition (it is indeed used in many sources without a proper definition). This simply means that research in this topic must always consider shortened representations, usually involving recursion, to even be able to describe such numbers. One of the best-known methodologies to conceive such numbers is using hyper-operations, that is, a sequence of binary functions defined recursively starting from the usual chain: addition - multiplication - exponentiation. The most important notations to represent such hyper-operations have been considered by Knuth, Goodstein, Ackermann and Conway, as described in this work's introduction. Within this work we will give an axiomatic setup for this topic, and then try to find on one hand other ways to represent unimaginable numbers, as well as on the other hand applications to computer science, where the algorithmic nature of representations and the increased computation capabilities of computers give the perfect field to develop the topic further, exploring some possibilities to effectively operate with such big numbers.

Programmable Digital Microcircuits - a Survey with Examples of Use

PROGRAMMABLE DIGITAL MICROCIRCUITS - A SURVEY WITH EXAMPLES OF USE. C. Verkerk, CERN, Geneva, Switzerland. 1. Introduction: For most readers the title of these lecture notes will evoke microprocessors. The fixed instruction set microprocessors are however not the only programmable digital microcircuits and, although a number of pages will be dedicated to them, the aim of these notes is also to draw attention to other useful microcircuits. A complete survey of programmable circuits would fill several books and a selection had therefore to be made. The choice has rather been to treat a variety of devices than to give an in-depth treatment of a particular circuit. The selected devices have all found useful applications in high-energy physics, or hold promise for future use. The microprocessor is very young: just over eleven years. An advertisement, announcing a new era of integrated electronics, and which appeared in the November 15, 1971 issue of Electronic News, is generally considered its birth certificate. The advertisement was for the Intel 4004 and its three support chips. The history leading to this announcement merits recalling. Intel, then a very young company, was working on the design of a chip-set for a high-performance calculator, for and in collaboration with a Japanese firm, Busicom. One of the Intel engineers found the Busicom design of 9 different chips too complicated and tried to find a more general and programmable solution. His design, the 4004 microprocessor, was finally adopted by Busicom, and after further negotiation, Intel acquired marketing rights for its new invention.

Virtualizing Register Context

ABSTRACT. Virtualizing Register Context, by David W. Oehmke. Chair: Trevor N. Mudge. A processor designer may wish for an implementation to support multiple register contexts for several reasons: to support multithreading, to reduce context switch overhead, or to reduce procedure call/return overhead by using register windows. Conventional designs require that each active context be present in its entirety, increasing the size of the register file. Unfortunately, larger register files are inherently slower to access and may lead to a slower cycle time or additional cycles of register access latency, either of which reduces overall performance. We seek to bypass the trade-off between multiple context support and register file size by mapping registers to memory, thereby decoupling the logical register requirements of active contexts from the contents of the physical register file. Just as caches and virtual memory allow a processor to give the illusion of numerous multi-gigabyte address spaces with an average access time approaching that of several kilobytes of SRAM, we propose an architecture that gives the illusion of numerous active contexts with an average access time approaching that of a single active context using a conventionally sized register file. This dissertation introduces the virtual context architecture, a new architecture that virtualizes logical register contexts. Complete contexts, whether activation records or threads, are kept in memory and are no longer required to reside in their entirety in the physical register file. Instead, the physical register file is treated as a cache of the much larger memory-mapped logical register space. The implementation modifies the rename stage of the pipeline to trigger the movement of register values between the physical register file and the data cache.

Ackermann and the Superpowers

Ackermann and the superpowers. António Porto and Armando B. Matos. Original version 1980, published in "ACM SIGACT News". Modified in October 20, 2012. Modified in January 23, 2016 (working paper).

Abstract: The Ackermann function $a(m, n)$ is a classical example of a total recursive function which is not primitive recursive. It grows faster than any primitive recursive function. It is usually defined by a general recurrence together with two "boundary" conditions. In this paper we obtain a closed form of $a(m, n)$, which involves the Knuth superpower notation, namely $a(m, n) = 2 \uparrow^{m-2} (n + 3) - 3$. Generalized Ackermann functions, that is, functions satisfying only the general recurrence and one of the boundary conditions, are also studied. In particular, we show that the function $2 \uparrow^{m-2} (n + 2) - 2$ also belongs to the "Ackermann class".

1 Introduction and definitions. The "arrow" or "superpower" notation has been introduced by Knuth [1] as a convenient way of expressing very large numbers. It is based on the infinite sequence of operators: $+$, $*$, $\uparrow$, ... We shall see that the arrow notation is closely related to the Ackermann function (see, for instance, [2]).

1.1 The Superpowers. Let us begin with the following sequence of integer operators, where all the operators are right associative.

$$a \times n = a + a + \cdots + a \quad (n\ a\text{'s})$$

$$a \uparrow n = a \times a \times \cdots \times a \quad (n\ a\text{'s})$$

$$a \uparrow^{2} n = a \uparrow a \uparrow \cdots \uparrow a \quad (n\ a\text{'s})$$

In general we define $a \uparrow^{m} n$ as follows.

Definition 1.

$$a \uparrow^{m} n = \underbrace{a \uparrow^{m-1} a \uparrow^{m-1} \cdots \uparrow^{m-1} a}_{n\ a\text{'s}}$$

The operator $\uparrow^{m}$ is not associative for $m \ge 1$.
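
The closed form stated in the abstract can be spot-checked numerically for small arguments. The sketch below is not from the paper: it assumes the usual Ackermann-Péter boundary conditions (a(0, n) = n + 1 and a(m, 0) = a(m - 1, 1)), which the excerpt does not spell out, and it only covers m ≥ 3, where the arrow exponent m - 2 is at least 1. The function names are illustrative.

```python
def ackermann(m: int, n: int) -> int:
    """Ackermann-Peter function (assumed boundary conditions, see lead-in)."""
    if m == 0:
        return n + 1
    if n == 0:
        return ackermann(m - 1, 1)
    return ackermann(m - 1, ackermann(m, n - 1))


def arrow(a: int, k: int, n: int) -> int:
    """Knuth's a ↑^k n for k >= 1, evaluated right-associatively."""
    if k == 1:
        return a ** n  # a single arrow is ordinary exponentiation
    if n == 0:
        return 1  # empty tower
    return arrow(a, k - 1, arrow(a, k, n - 1))


def closed_form(m: int, n: int) -> int:
    """2 ↑^(m-2) (n+3) - 3, restricted here to m >= 3."""
    return arrow(2, m - 2, n + 3) - 3


# Only tiny inputs are feasible: already a(4, 1) = 65533 is far too deep
# for plain Python recursion.
checks = [(3, n) for n in range(5)] + [(4, 0)]
for m, n in checks:
    print(m, n, ackermann(m, n), closed_form(m, n))
```

Agreement on a handful of values is not a proof, but it is a quick way to confirm that the arrow convention (one arrow meaning exponentiation) has been read correctly.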

53 More Algorithms

Eric Roberts, CS 106B, Handout #53, March 9, 2015. More Algorithms for Trees and Graphs.

Outline for Today: • The plan for today is to walk through some of my favorite tree and graph algorithms, partly to demystify the many real-world applications that make use of those algorithms and partly to emphasize the elegance and power of algorithmic thinking. • These algorithms include: – Google's Page Rank algorithm for searching the web – The Directed Acyclic Word Graph format – The union-find algorithm for efficient spanning-tree calculation.

Page Rank: The heart of the Google search engine is the page rank algorithm, which was described in a 1999 paper by Larry Page, Sergey Brin, Rajeev Motwani, and Terry Winograd: "The PageRank Citation Ranking: Bringing Order to the Web" (dated January 29, 1998). Its abstract begins: "The importance of a Webpage is an inherently subjective matter, which depends on the reader's interests, knowledge and attitudes. But there is still much that can be said objectively about the relative importance of Web pages."

Page Rank Algorithm: The page rank algorithm gives each page a rating of its importance, which is a recursively defined measure whereby a page becomes important if other important pages link to it. One way to think about page rank is to imagine a random surfer on the web, following links from page to page. The page rank of any page is roughly the probability that the random surfer will land on a particular page. Since more links go to the important pages, the surfer is more likely to end up there.
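
The random-surfer description above can be turned into a short iterative computation. This is a minimal sketch rather than the handout's or Google's implementation: the damping factor of 0.85, the fixed iteration count, the even spreading of rank from pages without outgoing links, and the four-page example graph are all assumptions made for illustration.

```python
def page_rank(links, damping=0.85, iterations=100):
    """Approximate page ranks for a dict mapping page -> list of linked pages."""
    pages = sorted(set(links) | {t for targets in links.values() for t in targets})
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}  # the surfer starts anywhere with equal odds
    for _ in range(iterations):
        # With probability (1 - damping) the surfer jumps to a random page.
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # Otherwise the surfer follows one outgoing link at random.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # Assumption: a page with no links sends the surfer anywhere.
                share = damping * rank[page] / n
                for target in pages:
                    new_rank[target] += share
        rank = new_rank
    return rank


# Hypothetical four-page web: C is linked from A, B and D; nothing links to D.
example_links = {"A": ["B", "C"], "B": ["C"], "C": ["A"], "D": ["C"]}
ranks = page_rank(example_links)
for page, value in sorted(ranks.items(), key=lambda item: -item[1]):
    print(page, round(value, 3))
```

In this toy graph, C collects rank from three pages and passes all of it on to A, which is the recursive "important pages link to it" effect the slides describe.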

Turing Machines [Fa’16]

Models of Computation, Lecture 6: Turing Machines [Fa'16]. "Think globally, act locally." (Attributed to Patrick Geddes, c. 1915, among many others.) "We can only see a short distance ahead, but we can see plenty there that needs to be done." (Alan Turing, "Computing Machinery and Intelligence", 1950.) "Never worry about theory as long as the machinery does what it's supposed to do." (Robert Anson Heinlein, Waldo & Magic, Inc., 1950.) "It is a sobering thought that when Mozart was my age, he had been dead for two years." (Tom Lehrer, introduction to "Alma", That Was the Year That Was, 1965.) 6 Turing Machines: In 1936, a few months before his 24th birthday, Alan Turing launched computer science as a modern intellectual discipline. In a single remarkable paper, Turing provided the following results: • A simple formal model of mechanical computation now known as Turing machines. • A description of a single universal machine that can be used to compute any function computable by any other Turing machine. • A proof that no Turing machine can solve the halting problem: given the formal description of an arbitrary Turing machine M, does M halt or run forever? • A proof that no Turing machine can determine whether an arbitrary given proposition is provable from the axioms of first-order logic. This is Hilbert and Ackermann's famous Entscheidungsproblem ("decision problem"). • Compelling arguments that his machines can execute arbitrary "calculation by finite means". Although Turing did not know it at the time, he was not the first to prove that the Entscheidungsproblem had no algorithmic solution.