A Topological Regularizer for Classifiers Via Persistent Homology

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

-

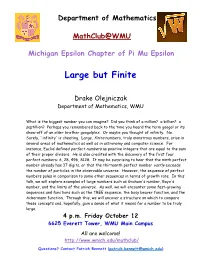

Large but Finite

Department of Mathematics, MathClub@WMU, Michigan Epsilon Chapter of Pi Mu Epsilon. Large but Finite. Drake Olejniczak, Department of Mathematics, WMU. What is the biggest number you can imagine? Did you think of a million? A billion? A septillion? Perhaps you remembered back to the time you heard the term googol or its show-off of an older brother, googolplex. Or maybe you thought of infinity. No. Surely, 'infinity' is cheating. Large, finite numbers, truly monstrous numbers, arise in several areas of mathematics as well as in astronomy and computer science. For instance, Euclid defined perfect numbers as positive integers that are equal to the sum of their proper divisors. He is also credited with the discovery of the first four perfect numbers: 6, 28, 496, 8128. It may be surprising to hear that the ninth perfect number already has 37 digits, or that the thirteenth perfect number vastly exceeds the number of particles in the observable universe. However, the sequence of perfect numbers pales in comparison to some other sequences in terms of growth rate. In this talk, we will explore examples of large numbers such as Graham's number, Rayo's number, and the limits of the universe. We will also encounter some fast-growing sequences and functions such as the TREE sequence, the busy beaver function, and the Ackermann function. Through this, we will uncover a structure on which to compare these concepts and, hopefully, gain a sense of what it means for a number to be truly large. 4 p.m., Friday, October 12, 6625 Everett Tower, WMU Main Campus. All are welcome! http://www.wmich.edu/mathclub/ Questions? Contact Patrick Bennett ([email protected]).

Parameter Free Induction and Provably Total Computable Functions

Parameter free induction and provably total computable functions. Lev D. Beklemishev, Steklov Mathematical Institute, Gubkina 8, 117966 Moscow, Russia, e-mail: [email protected]. November 20, 1997. Abstract: We give a precise characterization of parameter free $\Sigma_n$ and $\Pi_n$ induction schemata, $I\Sigma_n^-$ and $I\Pi_n^-$, in terms of reflection principles. This allows us to show that $I\Pi_{n+1}^-$ is conservative over $I\Sigma_n^-$ w.r.t. boolean combinations of $\Sigma_{n+1}$ sentences, for $n \ge 1$. In particular, we give a positive answer to a question, whether the provably recursive functions of $I\Pi_2^-$ are exactly the primitive recursive ones. We also characterize the provably recursive functions of theories of the form $I\Sigma_n + I\Pi_{n+1}^-$ in terms of the fast growing hierarchy. For $n = 1$ the corresponding class coincides with the doubly-recursive functions of Péter. We also obtain sharp results on the strength of bounded number of instances of parameter free induction in terms of iterated reflection. Keywords: parameter free induction, provably recursive functions, reflection principles, fast growing hierarchy. Mathematics Subject Classification: 03F30, 03D20. 1 Introduction. In this paper we shall deal with the first order theories containing Kalmar elementary arithmetic EA or, equivalently, $I\Delta_0 + \mathrm{Exp}$ (cf. [11]). We are interested in the general question how various ways of formal reasoning correspond to models of computation. This kind of analysis is traditionally based on the concept of provably total recursive function (p.t.r.f.) of a theory. Given a theory $T$ containing EA, a function $f(\vec{x})$ is called provably total recursive in $T$ iff there is a $\Sigma_1$ formula $\varphi(\vec{x}, y)$, sometimes called specification, that defines the graph of $f$ in the standard model of arithmetic and such that $T \vdash \forall \vec{x}\, \exists! y\, \varphi(\vec{x}, y)$.

Primality Testing for Beginners

STUDENT MATHEMATICAL LIBRARY, Volume 70. Primality Testing for Beginners. Lasse Rempe-Gillen, Rebecca Waldecker. http://dx.doi.org/10.1090/stml/070 American Mathematical Society, Providence, Rhode Island. Editorial Board: Satyan L. Devadoss, John Stillwell, Gerald B. Folland (Chair), Serge Tabachnikov. The cover illustration is a variant of the Sieve of Eratosthenes (Section 1.5), showing the integers from 1 to 2704 colored by the number of their prime factors, including repeats. The illustration was created using MATLAB. The back cover shows a phase plot of the Riemann zeta function (see Appendix A), which appears courtesy of Elias Wegert (www.visual.wegert.com). 2010 Mathematics Subject Classification. Primary 11-01, 11-02, 11Axx, 11Y11, 11Y16. For additional information and updates on this book, visit www.ams.org/bookpages/stml-70 Library of Congress Cataloging-in-Publication Data: Rempe-Gillen, Lasse, 1978– author. [Primzahltests für Einsteiger. English] Primality testing for beginners / Lasse Rempe-Gillen, Rebecca Waldecker. pages cm. (Student mathematical library; volume 70) Translation of: Primzahltests für Einsteiger: Zahlentheorie - Algorithmik - Kryptographie. Includes bibliographical references and index. ISBN 978-0-8218-9883-3 (alk. paper) 1. Number theory. I. Waldecker, Rebecca, 1979– author. II. Title. QA241.R45813 2014 512.72—dc23 2013032423 Copying and reprinting. Individual readers of this publication, and nonprofit libraries acting for them, are permitted to make fair use of the material, such as to copy a chapter for use in teaching or research. Permission is granted to quote brief passages from this publication in reviews, provided the customary acknowledgment of the source is given.

The Notion of "Unimaginable Numbers" in Computational Number Theory

Beyond Knuth's notation for "Unimaginable Numbers" within computational number theory. Antonino Leonardis (1), Gianfranco d'Atri (2), Fabio Caldarola (3). (1) Department of Mathematics and Computer Science, University of Calabria, Arcavacata di Rende, Italy, e-mail: [email protected]. (2) Department of Mathematics and Computer Science, University of Calabria, Arcavacata di Rende, Italy. (3) Department of Mathematics and Computer Science, University of Calabria, Arcavacata di Rende, Italy, e-mail: [email protected]. arXiv:1901.05372v2 [cs.LO] 12 Mar 2019. Abstract: Literature considers under the name unimaginable numbers any positive integer going beyond any physical application, with this being more of a vague description of what we are talking about rather than an actual mathematical definition (it is indeed used in many sources without a proper definition). This simply means that research in this topic must always consider shortened representations, usually involving recursion, to even be able to describe such numbers. One of the best-known methodologies to conceive such numbers is using hyper-operations, that is, a sequence of binary functions defined recursively starting from the usual chain: addition - multiplication - exponentiation. The most important notations to represent such hyper-operations have been considered by Knuth, Goodstein, Ackermann and Conway, as described in this work's introduction. Within this work we will give an axiomatic setup for this topic, and then try to find on one hand other ways to represent unimaginable numbers, as well as on the other hand applications to computer science, where the algorithmic nature of representations and the increased computation capabilities of computers give the perfect field to develop further the topic, exploring some possibilities to effectively operate with such big numbers.

Ackermann and the Superpowers

Ackermann and the superpowers. António Porto and Armando B. Matos. Original version 1980, published in "ACM SIGACT News". Modified on October 20, 2012. Modified on January 23, 2016 (working paper). Abstract: The Ackermann function $a(m, n)$ is a classical example of a total recursive function which is not primitive recursive. It grows faster than any primitive recursive function. It is usually defined by a general recurrence together with two "boundary" conditions. In this paper we obtain a closed form of $a(m, n)$, which involves the Knuth superpower notation, namely $a(m, n) = 2 \uparrow^{m-2} (n + 3) - 3$. Generalized Ackermann functions, that is, functions satisfying only the general recurrence and one of the boundary conditions, are also studied. In particular, we show that the function $2 \uparrow^{m-2} (n + 2) - 2$ also belongs to the "Ackermann class". 1 Introduction and definitions. The "arrow" or "superpower" notation has been introduced by Knuth [1] as a convenient way of expressing very large numbers. It is based on the infinite sequence of operators: $+$, $*$, $\uparrow$, ... We shall see that the arrow notation is closely related to the Ackermann function (see, for instance, [2]). 1.1 The Superpowers. Let us begin with the following sequence of integer operators, where all the operators are right associative: $a \times n = a + a + \cdots + a$ ($n$ $a$'s); $a \uparrow n = a \times a \times \cdots \times a$ ($n$ $a$'s); $a \uparrow^{2} n = a \uparrow a \uparrow \cdots \uparrow a$ ($n$ $a$'s). In general we define $a \uparrow^{m} n$ as follows. Definition 1: $a \uparrow^{m} n = \underbrace{a \uparrow^{m-1} a \uparrow^{m-1} \cdots \uparrow^{m-1} a}_{n\ a\text{'s}}$. The operator $\uparrow^{m}$ is not associative for $m \ge 1$.
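As an illustration of Definition 1 in the excerpt above, here is a minimal Python sketch of the superpower operator for $m \ge 1$ and $n \ge 1$. The function name `up_arrow` and its argument order are assumptions made for this example, not notation from the paper. It relies on right associativity, so that $a \uparrow^{m} n = a \uparrow^{m-1} (a \uparrow^{m} (n-1))$ with $a \uparrow^{m} 1 = a$.

```python
def up_arrow(a, m, n):
    """Compute a ↑^m n for integers m >= 1 and n >= 1 (hypothetical helper).

    m = 1 is ordinary exponentiation; higher m unfolds the
    right-associative chain of n copies of a.
    """
    if m == 1:
        return a ** n            # a ↑ n = a × a × ... × a (n a's)
    if n == 1:
        return a                 # a chain with a single a
    # a ↑^m n = a ↑^(m-1) (a ↑^m (n-1)), by right associativity
    return up_arrow(a, m - 1, up_arrow(a, m, n - 1))


if __name__ == "__main__":
    print(up_arrow(2, 1, 3))      # 2 ↑ 3
    print(up_arrow(2, 2, 3))      # 2 ↑↑ 3 = 2 ↑ (2 ↑ 2)
    # Right associativity matters: (2 ↑ 3) ↑ 2 differs from 2 ↑ (3 ↑ 2).
    print((2 ** 3) ** 2, 2 ** (3 ** 2))
    # Closed form quoted in the abstract, evaluated for m = 3, n = 3.
    print(up_arrow(2, 3 - 2, 3 + 3) - 3)
```

Only very small arguments are practical: the values grow so fast that, for example, `up_arrow(2, 3, 4)` is already far beyond what can be computed this way.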

53 More Algorithms

Eric Roberts, Handout #53, CS 106B, March 9, 2015. More Algorithms for Trees and Graphs. Outline for Today: The plan for today is to walk through some of my favorite tree and graph algorithms, partly to demystify the many real-world applications that make use of those algorithms and partly to emphasize the elegance and power of algorithmic thinking. These algorithms include: Google's Page Rank algorithm for searching the web; the Directed Acyclic Word Graph format; the union-find algorithm for efficient spanning-tree calculation. Page Rank: The heart of the Google search engine is the page rank algorithm, which was described in a 1999 paper by Larry Page, Sergey Brin, Rajeev Motwani, and Terry Winograd: "The PageRank Citation Ranking: Bringing Order to the Web", January 29, 1998. Abstract: The importance of a Webpage is an inherently subjective matter, which depends on the reader's interests, knowledge and attitudes. But there is still much that can be said objectively about the relative importance of Web pages. Page Rank Algorithm: The page rank algorithm gives each page a rating of its importance, which is a recursively defined measure whereby a page becomes important if other important pages link to it. One way to think about page rank is to imagine a random surfer on the web, following links from page to page. The page rank of any page is roughly the probability that the random surfer will land on a particular page. Since more links go to the important pages, the surfer is more likely to end up there.
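The random-surfer description in the handout excerpt above can be sketched as a simple power iteration. This is an illustrative sketch, not the handout's or Google's implementation: the function name `page_rank`, the damping factor of 0.85, the fixed iteration count, and the tiny four-page graph are all assumptions made for the example. With probability `damping` the surfer follows a random outgoing link; otherwise the surfer jumps to a page chosen uniformly at random.

```python
def page_rank(links, damping=0.85, iterations=100):
    """Estimate the probability that a random surfer is on each page.

    links maps every page to the list of pages it links to; every link
    target is assumed to appear as a key as well.
    """
    pages = list(links)
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}   # start from a uniform guess
    for _ in range(iterations):
        # Random-jump share that every page receives each step.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page, outgoing in links.items():
            if outgoing:
                # Split this page's rank evenly among the pages it links to.
                share = damping * rank[page] / len(outgoing)
                for target in outgoing:
                    new_rank[target] += share
            else:
                # A page with no links: the surfer jumps anywhere.
                share = damping * rank[page] / n
                for target in pages:
                    new_rank[target] += share
        rank = new_rank
    return rank


if __name__ == "__main__":
    # Hypothetical graph: C is linked to by A, B, and D.
    example = {"A": ["B", "C"], "B": ["C"], "C": ["A"], "D": ["C"]}
    for page, score in sorted(page_rank(example).items()):
        print(page, round(score, 4))
```

In this example graph, page C collects the most inbound links and ends up with the highest rating, which matches the intuition that a page becomes important when other pages link to it.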

Ackermann Function

Ackermann function. In computability theory, the Ackermann function, named after Wilhelm Ackermann, is one of the simplest and earliest-discovered examples of a total computable function that is not primitive recursive. All primitive recursive functions are total and computable, but the Ackermann function illustrates that not all total computable functions are primitive recursive. After Ackermann's publication[1] of his function (which had three nonnegative integer arguments), many authors modified it to suit various purposes, so that today "the Ackermann function" may refer to any of numerous variants of the original function. One common version, the two-argument Ackermann–Péter function, is defined as follows for nonnegative integers m and n: $A(m, n) = \begin{cases} n + 1 & \text{if } m = 0 \\ A(m - 1, 1) & \text{if } m > 0 \text{ and } n = 0 \\ A(m - 1, A(m, n - 1)) & \text{if } m > 0 \text{ and } n > 0 \end{cases}$ Its value grows rapidly, even for small inputs. For example, A(4,2) is an integer of 19,729 decimal digits.[2] History: In the late 1920s, the mathematicians Gabriel Sudan and Wilhelm Ackermann, students of David Hilbert, were studying the foundations of computation. Both Sudan and Ackermann are credited[3] with discovering total computable functions (termed simply "recursive" in some references) that are not primitive recursive. Sudan published the lesser-known Sudan function, then shortly afterwards and independently, in 1928, Ackermann published his function $\varphi$. Ackermann's three-argument function, $\varphi(m, n, p)$, is defined such that for p = 0, 1, 2, it reproduces the basic operations of addition, multiplication, and exponentiation as $\varphi(m, n, 0) = m + n$, $\varphi(m, n, 1) = m \cdot n$, $\varphi(m, n, 2) = m^n$, and for p > 2 it extends these basic operations in a way
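To make the two-argument Ackermann–Péter function mentioned above concrete, here is a minimal Python sketch of its standard three-case recurrence. The function name `ackermann` and the explicit-stack formulation are choices made for this example; the stack simply replaces deep recursion, which Python would otherwise reject for all but the smallest inputs.

```python
def ackermann(m, n):
    """Evaluate the two-argument Ackermann–Péter function A(m, n).

    The stack holds the pending first arguments; n carries the value
    computed so far.
    """
    stack = [m]
    while stack:
        m = stack.pop()
        if m == 0:
            n = n + 1                  # A(0, n) = n + 1
        elif n == 0:
            stack.append(m - 1)        # A(m, 0) = A(m - 1, 1)
            n = 1
        else:
            stack.append(m - 1)        # outer call: A(m - 1, <inner result>)
            stack.append(m)            # inner call: A(m, n - 1)
            n = n - 1
    return n


if __name__ == "__main__":
    for m in range(4):
        print([ackermann(m, n) for n in range(5)])
```

Only small arguments are feasible. The excerpt notes that A(4,2) already has 19,729 decimal digits, so this step-by-step evaluation cannot reach it in any reasonable time.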

Chapter: The Connection Between the Iterated Logarithm and the Inverse Ackermann Function

Michal Forišek: early beta version of lecture notes on Computability. Chapter: The connection between the iterated logarithm and the inverse Ackermann function. This chapter is about two functions: the iterated logarithm function $\log^* n$, and the inverse Ackermann function $\alpha(n)$. Both $\log^* n$ and $\alpha(n)$ are very slowly growing functions: for any number $n$ that fits into the memory of your computer, $\log^* n \le 6$ and $\alpha(n) \le 4$. Historically, both functions have been used in upper bounds for the time complexity of a particular implementation of the Disjoint-set data structure: the Union-Find algorithm with both union by rank and path compression. One particular insight I have been missing for a long time is the connection between the two functions. Here it is: for large enough $n$, $\alpha(n) = \min\{\, i : \log^{\overbrace{* \cdots *}^{i-3}}(n) \le i \,\}$. Okay, you got me, I made that scary on purpose :) After the few seconds of stunned silence, it's now time for a decent explanation. The iterated logarithm: The function iteration (the asterisk operator $*$) can be seen as a functional (a higher-order function): you can take any function $f$ and define a new function $f^*$ as follows: $f^*(n) = \begin{cases} 0 & n \le 1 \\ 1 + f^*(f(n)) & n > 1 \end{cases}$ In human words: imagine that you have a calculator with a button that applies $f$ to the currently displayed value. Then $f^*(n)$ is the number of times you need to push that button in order to make the value decrease to 1 or less. Here are a few examples of what the iteration produces (each $f$ followed by the approximate $f^*$): $f(n) = n - 1$ gives $f^*(n) = n - 1$; $f(n) = n - 2$ gives $f^*(n) = n/2$; $f(n) = n/2$ gives $f^*(n) = \log(n)$; $f(n) = \sqrt{n}$ gives $f^*(n) = \log(\log(n))$; $f(n) = \log n$ gives $f^*(n) = \log^* n$. We don't have any simpler expression for $\log^*$.
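The calculator-button description above translates almost directly into code. The following Python sketch is illustrative only: the names `iterate_star` and `log_star` are assumptions made for this example, and the base-2 logarithm is an assumed choice, since the excerpt does not fix a base.

```python
import math


def iterate_star(f, n):
    """Return f*(n): how many times f must be applied to n
    before the value drops to 1 or less."""
    presses = 0
    while n > 1:
        n = f(n)          # push the calculator button once
        presses += 1
    return presses


def log_star(n):
    """Iterated logarithm, using the base-2 logarithm (an assumption)."""
    return iterate_star(math.log2, n)


if __name__ == "__main__":
    print(iterate_star(lambda x: x - 1, 10))   # f(n) = n - 1
    print(iterate_star(lambda x: x / 2, 16))   # f(n) = n / 2
    print(log_star(16), log_star(65536))       # f(n) = log n
```

A loop is used instead of the recursive definition so that slowly shrinking functions such as $f(n) = n - 1$ do not hit Python's recursion limit; the count it returns is the same.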

Ackermann Functions of Complex Argument

Ackermann functions of complex argument. D. Kouznetsov (corresponding author), Institute for Laser Science, University of Electro-Communications, 1-5-1 Chofu-Gaoka, Chofu, Tokyo, 182-8585, Japan. Email address: [email protected] (D. Kouznetsov). URL: http://www.ils/∼dima (D. Kouznetsov). Preprint submitted to Elsevier, 24 March 2008. Abstract: Existence of an analytic extension of the fourth Ackermann function $A(4, z)$ to the complex $z$ plane is supposed. This extension is assumed to remain finite at the imaginary axis. On the basis of this assumption, an algorithm is suggested for evaluation of this function. The numerical implementation with double-precision arithmetic leads to a residual at the level of rounding errors. Application of the algorithm to more general cases of the Abel equation is discussed. Key words: Ackermann functions, tetration, ultra-exponential, super-exponential, generalized exponential, Abel equation. PACS: 02.30.Sa Functional analysis; 02.60.Nm Integral and integrodifferential equations; 02.30.Gp Special functions; 02.60.Gf Algorithms for functional approximation; 02.60.Jh Numerical differentiation and integration. 1 Introduction. For integer non-negative values of arguments, the Ackermann function $A$ can be defined [1,2] as follows: $A(m, n) = \begin{cases} n + 1, & \text{if } m = 0 \\ A(m-1, 1), & \text{if } m > 0 \text{ and } n = 0 \\ A(m-1, A(m, n-1)), & \text{if } m > 0 \text{ and } n > 0 \end{cases}$ (1) At relatively moderate values of $m$, the functions $A(m, z)$ grow quickly as $z \to +\infty$, at least while $z$ is an integer. This makes them useful for the representation of huge numbers. Powers of 2 are actually used in most computers for the "floating-point" variables.

1. Super-Functions the Functional Equation F(Z+1)

MATHEMATICS OF COMPUTATION, Volume 79, Number 271, July 2010, Pages 1727–1756, S 0025-5718(10)02342-2. Article electronically published on February 12, 2010. PORTRAIT OF THE FOUR REGULAR SUPER-EXPONENTIALS TO BASE SQRT(2). DMITRII KOUZNETSOV AND HENRYK TRAPPMANN. Abstract: We introduce the concept of regular super-functions at a fixed point. It is derived from the concept of regular iteration. A super-function $F$ of $h$ is a solution of $F(z+1) = h(F(z))$. We provide a condition for $F$ being entire; we also give two uniqueness criteria for regular super-functions. In the particular case $h(x) = b^x$ we call $F$ super-exponential. $h$ has two real fixed points for $b$ between 1 and $e^{1/e}$. As an example, we choose the base $b = \sqrt{2}$ and portray the four classes of real regular super-exponentials in the complex plane. There are two at fixed point 2 and two at fixed point 4. Each class is given by the translations along the x-axis of a suitable representative. Both super-exponentials at fixed point 4 (one strictly increasing and one strictly decreasing) are entire. Both super-exponentials at fixed point 2 (one strictly increasing and one strictly decreasing) are holomorphic on a right half-plane. All four super-exponentials are periodic along the imaginary axis. Only the strictly increasing super-exponential at 2 can satisfy $F(0) = 1$ and can hence be called tetrational. We develop numerical algorithms for the precise evaluation of these functions and their inverses in the complex plane. We graph the two corresponding different half-iterates of $h(z) = \sqrt{2}^{\,z}$.
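The functional equation $F(z+1) = h(F(z))$ from the abstract above can at least be explored at integer arguments with a few lines of Python. This is only a sketch of the defining relation, not the numerical algorithm developed in the article: the names `h` and `super_exponential_at_integers` are assumptions made for this example, and the code says nothing about non-integer or complex $z$.

```python
import math

BASE = math.sqrt(2)


def h(x):
    """The transfer function h(x) = b^x for base b = sqrt(2)."""
    return BASE ** x


def super_exponential_at_integers(steps, start=1.0):
    """Return [F(0), F(1), ..., F(steps)] with F(0) = start and
    F(k + 1) = h(F(k)), i.e. the super-function sampled at integers."""
    values = [start]
    for _ in range(steps):
        values.append(h(values[-1]))
    return values


if __name__ == "__main__":
    # 2 and 4 are the two real fixed points of h (up to rounding).
    print(h(2.0), h(4.0))
    # Starting from F(0) = 1, the integer samples increase toward 2.
    print(super_exponential_at_integers(10))
```

The samples starting from $F(0) = 1$ increase and stay below the fixed point 2, which is consistent with the abstract's remark that only the strictly increasing super-exponential at 2 can satisfy $F(0) = 1$.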

Theory of Computation (Turing-Complete Systems)

Theory of Computation (Turing-Complete Systems). Pramod Ganapathi, Department of Computer Science, State University of New York at Stony Brook. January 24, 2021. Contents: Turing-Complete Systems; Unrestricted Grammars; Lindenmayer Systems; Gödel's µ-Recursive Functions; While Programs. Turing-Complete Systems. Models more powerful than TM's. Problem: Are there models of computation more powerful than Turing machines? Solution: Nobody knows if there are more powerful models. However, there are many computational models equivalent in power to TM's. They are called Turing-complete systems. Problem: How do you prove the functional equivalence of two given computation models M1 and M2, i.e., M1 ⇔ M2? Solution: Simulation! Simulate M1 from M2. Simulate M2 from M1.

Chapter 5 Partial Orders, Lattices, Well Founded Orderings

Chapter 5: Partial Orders, Lattices, Well Founded Orderings, Equivalence Relations, Distributive Lattices, Boolean Algebras, Heyting Algebras. 5.1 Partial Orders. There are two main kinds of relations that play a very important role in mathematics and computer science: 1. Partial orders. 2. Equivalence relations. In this section and the next few ones, we define partial orders and investigate some of their properties. As we will see, the ability to use induction is intimately related to a very special property of partial orders known as well-foundedness. Intuitively, the notion of order among elements of a set, $X$, captures the fact that some elements are bigger than others, perhaps more important, or perhaps that they carry more information. For example, we are all familiar with the natural ordering, $\le$, of the integers, $\cdots \le -3 \le -2 \le -1 \le 0 \le 1 \le 2 \le 3 \le \cdots$, the ordering of the rationals (where $\frac{p_1}{q_1} \le \frac{p_2}{q_2}$ iff $\frac{p_2 q_1 - p_1 q_2}{q_1 q_2} \ge 0$, i.e., $p_2 q_1 - p_1 q_2 \ge 0$ if $q_1 q_2 > 0$, else $p_2 q_1 - p_1 q_2 \le 0$ if $q_1 q_2 < 0$), and the ordering of the real numbers. In all of the above orderings, note that for any two numbers $a$ and $b$, either $a \le b$ or $b \le a$. We say that such orderings are total orderings. A natural example of an ordering which is not total is provided by the subset ordering. Given a set, $X$, we can order the subsets of $X$ by the subset relation: $A \subseteq B$, where $A$, $B$ are any subsets of $X$. For example, if $X = \{a, b, c\}$, we have $\{a\} \subseteq \{a, b\}$.