Ackermann Function

Recommended publications

The Five Fundamental Operations of Mathematics: Addition, Subtraction

The five fundamental operations of mathematics: addition, subtraction, multiplication, division, and modular forms. Kenneth A. Ribet, UC Berkeley. Trinity University, March 31, 2008. This talk is about counting, and it's about solving equations. Counting is a very familiar activity in mathematics. Many universities teach sophomore-level courses on discrete mathematics that turn out to be mostly about counting. For example, we ask our students to find the number of different ways of constituting a bag of a dozen lollipops if there are 5 different flavors. (The answer is 1820, I think.) Solving equations is even more of a flagship activity for mathematicians. At a mathematics conference at Sundance, Robert Redford told a group of my colleagues "I hope you solve all your equations"! The kind of equations that I like to solve are Diophantine equations. Diophantus of Alexandria (third century AD) was Robert Redford's kind of mathematician. This "father of algebra" focused on the solution to algebraic equations, especially in contexts where the solutions are constrained to be whole numbers or fractions. Here's a typical example. Consider the equation $y^2 = x^3 + 1$. In an algebra or high school class, we might graph this equation in the plane; there's little challenge. But what if we ask for solutions in integers (i.e., whole numbers)? It is relatively easy to discover the solutions $(0, \pm 1)$, $(-1, 0)$ and $(2, \pm 3)$, and Diophantus might have asked if there are any more.
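The two computations in this excerpt can be checked with a short sketch. It assumes the lollipop count is the standard "multisets of size 12 drawn from 5 kinds" problem, and it searches for integer points on $y^2 = x^3 + 1$ only within a finite window. The function names `multisets` and `integer_points` and the bound of 1000 are illustrative choices, not anything taken from the talk.

```python
from math import comb, isqrt


def multisets(kinds: int, size: int) -> int:
    """Number of bags of `size` items chosen from `kinds` flavors (stars and bars)."""
    return comb(size + kinds - 1, kinds - 1)


def integer_points(bound: int) -> list[tuple[int, int]]:
    """Integer solutions (x, y) of y^2 = x^3 + 1 with |x| <= bound."""
    points = []
    for x in range(-bound, bound + 1):
        rhs = x**3 + 1
        if rhs < 0:
            continue  # a square cannot be negative
        y = isqrt(rhs)
        if y * y == rhs:
            points.append((x, y))
            if y != 0:
                points.append((x, -y))
    return sorted(points)


print(multisets(5, 12))      # bags of a dozen lollipops, 5 flavors
print(integer_points(1000))  # integer points found in the search window
```

A bounded search like this can only confirm the listed solutions and report that no others appear in the window; it does not by itself settle whether more exist.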

Introduction to Dependence Relations and Their Links to Algebraic Hyperstructures

mathematics Article Introduction to Dependence Relations and Their Links to Algebraic Hyperstructures Irina Cristea 1,* , Juš Kocijan 1,2 and Michal Novák 3 1 Centre for Information Technologies and Applied Mathematics, University of Nova Gorica, 5000 Nova Gorica, Slovenia; [email protected] 2 Department of Systems and Control, Jožef Stefan Institut, Jamova Cesta 39, 1000 Ljubljana, Slovenia 3 Faculty of Electrical Engineering and Communication, Brno University of Technology, Technická 10, 61600 Brno, Czech Republic; [email protected] * Correspondence: [email protected] or [email protected]; Tel.: +386-533-153-95 Received: 11 July 2019; Accepted: 19 September 2019; Published: 23 September 2019 Abstract: The aim of this paper is to study, from an algebraic point of view, the properties of interdependencies between sets of elements (i.e., pieces of secrets, atmospheric variables, etc.) that appear in various natural models, by using the algebraic hyperstructure theory. Starting from specific examples, we first define the relation of dependence and study its properties, and then, we construct various hyperoperations based on this relation. We prove that two of the associated hypergroupoids are Hv-groups, while the other two are, in some particular cases, only partial hypergroupoids. Besides, the extensivity and idempotence property are studied and related to the cyclicity. The second goal of our paper is to provide a new interpretation of the dependence relation by using elements of the theory of algebraic hyperstructures. Keywords: hyperoperation; hypergroupoid; dependence relation; influence; impact 1. Introduction In many real-life situations, there are contexts with numerous “variables”, which somehow depend on one another. -

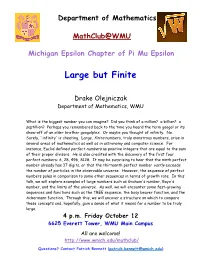

Large but Finite

Department of Mathematics, MathClub@WMU, Michigan Epsilon Chapter of Pi Mu Epsilon. Large but Finite. Drake Olejniczak, Department of Mathematics, WMU. What is the biggest number you can imagine? Did you think of a million? a billion? a septillion? Perhaps you remembered back to the time you heard the term googol or its show-off of an older brother googolplex. Or maybe you thought of infinity. No. Surely, 'infinity' is cheating. Large, finite numbers, truly monstrous numbers, arise in several areas of mathematics as well as in astronomy and computer science. For instance, Euclid defined perfect numbers as positive integers that are equal to the sum of their proper divisors. He is also credited with the discovery of the first four perfect numbers: 6, 28, 496, 8128. It may be surprising to hear that the ninth perfect number already has 37 digits, or that the thirteenth perfect number vastly exceeds the number of particles in the observable universe. However, the sequence of perfect numbers pales in comparison to some other sequences in terms of growth rate. In this talk, we will explore examples of large numbers such as Graham's number, Rayo's number, and the limits of the universe. As well, we will encounter some fast-growing sequences and functions such as the TREE sequence, the busy beaver function, and the Ackermann function. Through this, we will uncover a structure on which to compare these concepts and, hopefully, gain a sense of what it means for a number to be truly large. 4 p.m. Friday October 12, 6625 Everett Tower, WMU Main Campus. All are welcome! http://www.wmich.edu/mathclub/ Questions? Contact Patrick Bennett ([email protected]).
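The claims about perfect numbers can be checked with a minimal sketch. It assumes the Euclid–Euler correspondence (every even perfect number has the form $2^{p-1}(2^p - 1)$ with $2^p - 1$ prime) and therefore only generates even perfect numbers; no odd perfect number is known. The helper names and the figure of roughly $10^{80}$ particles used in the final comparison are assumptions made for illustration, not values from the announcement.

```python
def is_prime(n: int) -> bool:
    """Trial division; adequate for the small exponents used here."""
    if n < 2:
        return False
    i = 2
    while i * i <= n:
        if n % i == 0:
            return False
        i += 1
    return True


def lucas_lehmer(p: int) -> bool:
    """Return True if the Mersenne number 2**p - 1 is prime (p must be prime)."""
    if p == 2:
        return True
    m = (1 << p) - 1
    s = 4
    for _ in range(p - 2):
        s = (s * s - 2) % m
    return s == 0


def even_perfect_numbers(count: int) -> list[int]:
    """First `count` even perfect numbers, built from Mersenne primes."""
    found = []
    p = 2
    while len(found) < count:
        if is_prime(p) and lucas_lehmer(p):
            found.append((1 << (p - 1)) * ((1 << p) - 1))
        p += 1
    return found


perfect = even_perfect_numbers(13)
print(perfect[:4])           # the four numbers credited to Euclid
print(len(str(perfect[8])))  # digit count of the ninth perfect number
print(perfect[12] > 10**80)  # thirteenth vs. an assumed ~10^80 particle count
```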

Grade 7/8 Math Circles the Scale of Numbers Introduction

Faculty of Mathematics, Centre for Education in Mathematics and Computing, Waterloo, Ontario N2L 3G1. Grade 7/8 Math Circles, November 21/22/23, 2017. The Scale of Numbers. Introduction. Last week we quickly took a look at scientific notation, which is one way we can write down really big numbers. We can also use scientific notation to write very small numbers.

$1 \times 10^{3} = 1{,}000$
$1 \times 10^{2} = 100$
$1 \times 10^{1} = 10$
$1 \times 10^{0} = 1$
$1 \times 10^{-1} = 0.1$
$1 \times 10^{-2} = 0.01$
$1 \times 10^{-3} = 0.001$

As you can see above, every time the value of the exponent decreases, the number gets smaller by a factor of 10. This pattern continues even into negative exponent values! Another way of picturing negative exponents is as a division by a positive exponent: $10^{-6} = \frac{1}{10^{6}} = 0.000001$. In this lesson we will be looking at some famous, interesting, or important small numbers, and begin slowly working our way up to the biggest numbers ever used in mathematics! Obviously we can come up with any arbitrary number that is either extremely small or extremely large, but the purpose of this lesson is to only look at numbers with some kind of mathematical or scientific significance.

1 Extremely Small Numbers

1. Zero
• Zero or '0' is the number that represents nothingness. It is the number with the smallest magnitude.
• Zero only began being used as a number around the year 500. Before this, ancient mathematicians struggled with the concept of 'nothing' being 'something'.

2. Planck's Constant
This is the smallest number that we will be looking at today other than zero.

Subtraction Strategy

Subtraction Strategies. Bley, N.S., & Thornton, C.A. (1989). Teaching mathematics to the learning disabled. Austin, TX: PRO-ED. These math strategies focus on subtraction. They were developed to assist students who are experiencing difficulty remembering subtraction facts, particularly those facts with teen minuends. They are beneficial to students who rely heavily on counting. Students can benefit from the following strategies only if they are able to: recognize when two or three numbers have been said; count on (from any number, 4 to 9); count back (from any number, 4 to 12). Students must master the related addition facts before using subtraction strategies. These strategies are not research based.

Basic Sequence

Count back. 27 Count Backs: (10, 9, 8, 7, 6, 5, 4, 3, 2) − 1; (11, 10, 9, 8, 7, 6, 5, 4, 3) − 2; (12, 11, 10, 9, 8, 7, 6, 5, 4) − 3. Example: 12 − 3. Students start with a train of 12 cubes • break off 3 cubes, then • count back one by one. The teacher gets students to point where they touch • look at the greater number (12 in 12 − 3) • count back mentally • use the cube train to check. Language emphasis: See −1 (take away 1), −2, −3? Start big and count back.

Add to check. After students can use a strategy accurately and efficiently to solve a group of unknown subtraction facts, they are provided with "add to check" activities. Add to check activities are additional activities to check for mastery. Example: Break a stick • Making "A" train of cubes • Breaking off "B" • Writing or telling the subtraction sentence A − B = C • Adding to check: putting the parts back together • A − B = C because B + C = A • Repeating.

Show with objects. Introduce subtraction zero facts. Students can use objects to illustrate number sentences involving zero. 19 Zeros: n − 0 = n, n − n = 0 (n = any number, 0 to 9).

Use a picture to help. Familiar pictures from addition can be used to help students with subtraction doubles. 6 New Doubles: 8 − 4, 10 − 5, 12 − 6, 14 − 7, 16 − 8, 18 − 9. Example: 12 eggs, remove 6: 6 are left.

Arxiv:1504.04798V1 [Math.LO] 19 Apr 2015 of Principia Squarely in an Empiricist Framework

HEINRICH BEHMANN'S 1921 LECTURE ON THE DECISION PROBLEM AND THE ALGEBRA OF LOGIC. PAOLO MANCOSU AND RICHARD ZACH. Abstract. Heinrich Behmann (1891–1970) obtained his Habilitation under David Hilbert in Göttingen in 1921 with a thesis on the decision problem. In his thesis, he solved, independently of Löwenheim and Skolem's earlier work, the decision problem for monadic second-order logic in a framework that combined elements of the algebra of logic and the newer axiomatic approach to logic then being developed in Göttingen. In a talk given in 1921, he outlined this solution, but also presented important programmatic remarks on the significance of the decision problem and of decision procedures more generally. The text of this talk as well as a partial English translation are included. §1. Behmann's Career. Heinrich Behmann was born January 10, 1891, in Bremen. In 1909 he enrolled at the University of Tübingen. There he studied mathematics and physics for two semesters and then moved to Leipzig, where he continued his studies for three semesters. In 1911 he moved to Göttingen, at that time the most important center of mathematical activity in Germany. He volunteered for military duty in World War I, was severely wounded in 1915, and returned to Göttingen in 1916. In 1918, he obtained his doctorate with a thesis titled The Antinomy of Transfinite Numbers and its Resolution by the Theory of Russell and Whitehead [Die Antinomie der transfiniten Zahl und ihre Auflösung durch die Theorie von Russell und Whitehead] under the supervision of David Hilbert [Behmann 1918].

Power Towers and the Tetration of Numbers

POWER TOWERS AND THE TETRATION OF NUMBERS. Several years ago, while constructing our newly found hexagonal integer spiral graph for prime numbers, we came across the sequence $S = \{\, i,\ i^{i},\ i^{i^{i}},\ \ldots \,\}$. Plotting the points of this converging sequence out to the infinite term produces an interesting three-prong spiral converging toward a single point in the complex plane. An inspection of the terms indicates that they are generated by the iteration $z[n+1] = i^{z[n]}$ subject to $z[0] = 0$. As $n$ gets very large we have $z[\infty] = Z = \alpha + i\beta$, where $\alpha = \exp(-\pi\beta/2)\cos(\pi\alpha/2)$ and $\beta = \exp(-\pi\beta/2)\sin(\pi\alpha/2)$. Solving, we find $Z = z[\infty] = 0.4382829366 + 0.3605924718\,i$. It is the purpose of the present article to generalize the above result to any complex number $a + ib$ by looking at the general iterative form $z[n+1] = (a+ib)^{z[n]}$ subject to $z[0] = 1$. Here $N = a + ib$, with $a$ and $b$ being real numbers which are not necessarily integers. Such an iteration represents essentially a tetration of the number $N$. That is, its value up through the $n$th iteration produces the power tower ${}^{n}Z = Z^{Z^{\cdot^{\cdot^{\cdot^{Z}}}}}$ with $n-1$ $Z$s in the exponents. Thus ${}^{4}2 = 2^{2^{2^{2}}} = 2^{2^{4}} = 2^{16} = 65536$. Note that the evaluation of the powers is from the top down and so is not equivalent to the bottom-up operation $4^{4} = 256$. Also it is clear that the sequence $\{{}^{1}2,\ {}^{2}2,\ {}^{3}2,\ {}^{4}2,\ \ldots\}$ diverges very rapidly, unlike the earlier case $\{{}^{1}i,\ {}^{2}i,\ {}^{3}i,\ {}^{4}i,\ \ldots\}$, which clearly converges.
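The iteration described in this excerpt is easy to try numerically. The sketch below assumes the principal branch of the complex logarithm when raising the base to a complex power, starts from $z[0] = 1$ as in the general form, and uses an arbitrary fixed step count rather than a convergence test. The function names `power_tower_limit` and `tetration` are illustrative, not taken from the article.

```python
import cmath


def power_tower_limit(base: complex, start: complex = 1, steps: int = 1000) -> complex:
    """Iterate z -> base**z (principal branch) a fixed number of times."""
    log_base = cmath.log(base)  # principal value of the logarithm
    z = start
    for _ in range(steps):
        z = cmath.exp(z * log_base)
    return z


def tetration(base: int, height: int) -> int:
    """Integer power tower of `height` copies of `base`, evaluated top down."""
    result = 1
    for _ in range(height):
        result = base ** result
    return result


limit = power_tower_limit(1j)
print(limit)                # approaches the fixed point of z = i**z
print(tetration(2, 4))      # top-down tower of four 2s
print(((2 ** 2) ** 2) ** 2) # bottom-up evaluation, for contrast
```

Convergence for the base $i$ is fairly slow, since each step shrinks the error by only about ten percent, which is why the sketch runs many more iterations than the printed precision strictly needs.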

Parameter Free Induction and Provably Total Computable Functions

Parameter free induction and provably total computable functions. Lev D. Beklemishev, Steklov Mathematical Institute, Gubkina 8, 117966 Moscow, Russia. e-mail: [email protected] November 20, 1997. Abstract. We give a precise characterization of parameter free $\Sigma_n$ and $\Pi_n$ induction schemata, $I\Sigma_n^-$ and $I\Pi_n^-$, in terms of reflection principles. This allows us to show that $I\Pi_{n+1}^-$ is conservative over $I\Sigma_n^-$ w.r.t. boolean combinations of $\Sigma_{n+1}$ sentences, for $n \ge 1$. In particular, we give a positive answer to a question, whether the provably recursive functions of $I\Pi_2^-$ are exactly the primitive recursive ones. We also characterize the provably recursive functions of theories of the form $I\Sigma_n + I\Pi_{n+1}^-$ in terms of the fast growing hierarchy. For $n = 1$ the corresponding class coincides with the doubly-recursive functions of Peter. We also obtain sharp results on the strength of bounded number of instances of parameter free induction in terms of iterated reflection. Keywords: parameter free induction, provably recursive functions, reflection principles, fast growing hierarchy. Mathematics Subject Classification: 03F30, 03D20. 1 Introduction. In this paper we shall deal with the first order theories containing Kalmar elementary arithmetic $EA$ or, equivalently, $I\Delta_0 + \mathrm{Exp}$ (cf. [11]). We are interested in the general question how various ways of formal reasoning correspond to models of computation. This kind of analysis is traditionally based on the concept of provably total recursive function (p.t.r.f.) of a theory. Given a theory $T$ containing $EA$, a function $f(\vec{x})$ is called provably total recursive in $T$ iff there is a $\Sigma_1$ formula $\varphi(\vec{x}, y)$, sometimes called specification, that defines the graph of $f$ in the standard model of arithmetic and such that $T \vdash \forall \vec{x}\, \exists! y\, \varphi(\vec{x}, y)$.

The Exponential Function

University of Nebraska - Lincoln DigitalCommons@University of Nebraska - Lincoln MAT Exam Expository Papers Math in the Middle Institute Partnership 5-2006 The Exponential Function Shawn A. Mousel University of Nebraska-Lincoln Follow this and additional works at: https://digitalcommons.unl.edu/mathmidexppap Part of the Science and Mathematics Education Commons Mousel, Shawn A., "The Exponential Function" (2006). MAT Exam Expository Papers. 26. https://digitalcommons.unl.edu/mathmidexppap/26 This Article is brought to you for free and open access by the Math in the Middle Institute Partnership at DigitalCommons@University of Nebraska - Lincoln. It has been accepted for inclusion in MAT Exam Expository Papers by an authorized administrator of DigitalCommons@University of Nebraska - Lincoln. The Exponential Function Expository Paper Shawn A. Mousel In partial fulfillment of the requirements for the Masters of Arts in Teaching with a Specialization in the Teaching of Middle Level Mathematics in the Department of Mathematics. Jim Lewis, Advisor May 2006 One of the basic principles studied in mathematics is the observation of relationships between two connected quantities. A function is this connecting relationship, typically expressed in a formula that describes how one element from the domain is related to exactly one element located in the range (Lial & Miller, 1975). An exponential function is a function with the basic form $f(x) = a^{x}$, where $a$ (a fixed base that is a real, positive number) is greater than zero and not equal to 1. The exponential function is not to be confused with the polynomial functions, such as $x^{2}$. One way to recognize the difference between the two functions is by the name of the function.

Simple Statements, Large Numbers

University of Nebraska - Lincoln DigitalCommons@University of Nebraska - Lincoln MAT Exam Expository Papers Math in the Middle Institute Partnership 7-2007 Simple Statements, Large Numbers Shana Streeks University of Nebraska-Lincoln Follow this and additional works at: https://digitalcommons.unl.edu/mathmidexppap Part of the Science and Mathematics Education Commons Streeks, Shana, "Simple Statements, Large Numbers" (2007). MAT Exam Expository Papers. 41. https://digitalcommons.unl.edu/mathmidexppap/41 This Article is brought to you for free and open access by the Math in the Middle Institute Partnership at DigitalCommons@University of Nebraska - Lincoln. It has been accepted for inclusion in MAT Exam Expository Papers by an authorized administrator of DigitalCommons@University of Nebraska - Lincoln. Master of Arts in Teaching (MAT) Masters Exam Shana Streeks In partial fulfillment of the requirements for the Master of Arts in Teaching with a Specialization in the Teaching of Middle Level Mathematics in the Department of Mathematics. Gordon Woodward, Advisor July 2007 Large numbers are numbers that are significantly larger than those ordinarily used in everyday life, as defined by Wikipedia (2007). Large numbers typically refer to large positive integers, or more generally, large positive real numbers, but may also be used in other contexts. Very large numbers often occur in fields such as mathematics, cosmology, and cryptography. Sometimes people refer to numbers as being "astronomically large". However, it is easy to mathematically define numbers that are much larger than those even in astronomy. We are familiar with the large magnitudes, such as million or billion.

Henkin's Method and the Completeness Theorem

Henkin's Method and the Completeness Theorem. Guram Bezhanishvili. 1 Introduction. Let $L$ be a first-order logic. For a sentence $\varphi$ of $L$, we will use the standard notation "$\vdash \varphi$" for $\varphi$ is provable in $L$ (that is, $\varphi$ is derivable from the axioms of $L$ by the use of the inference rules of $L$); and "$\models \varphi$" for $\varphi$ is valid (that is, $\varphi$ is satisfied in every interpretation of $L$). The soundness theorem for $L$ states that if $\vdash \varphi$, then $\models \varphi$; and the completeness theorem for $L$ states that if $\models \varphi$, then $\vdash \varphi$. Put together, the soundness and completeness theorems yield the correctness theorem for $L$: a sentence is derivable in $L$ iff it is valid. Thus, they establish a crucial feature of $L$; namely, that syntax and semantics of $L$ go hand-in-hand: every theorem of $L$ is a logical law (cannot be refuted in any interpretation of $L$), and every logical law can actually be derived in $L$. In fact, a stronger version of this result is also true. For each first-order theory $T$ and a sentence $\varphi$ (in the language of $T$), we have that $T \vdash \varphi$ iff $T \models \varphi$. Thus, each first-order theory $T$ (pick your favorite one!) is sound and complete in the sense that everything that we can derive from $T$ is true in all models of $T$, and everything that is true in all models of $T$ is in fact derivable from $T$. This is a very strong result indeed. One possible reading of it is that the first-order formalization of a given mathematical theory is adequate in the sense that every true statement about $T$ that can be formalized in the first-order language of $T$ is derivable from the axioms of $T$.

Categorical Comprehensions and Recursion Arxiv:1501.06889V2

Categorical Comprehensions and Recursion. Joaquín Díaz Boils, Facultad de Ciencias Exactas y Naturales, Pontificia Universidad Católica del Ecuador, 170150 Quito, Ecuador. arXiv:1501.06889v2 [math.CT] 28 Jan 2015. Abstract. A new categorical setting is defined in order to characterize the subrecursive classes belonging to complexity hierarchies. This is achieved by means of coercion functors over a symmetric monoidal category endowed with certain recursion schemes that imitate the bounded recursion scheme. This gives a categorical counterpart of generalized safe composition and safe recursion. Keywords: Symmetric Monoidal Category, Safe Recursion, Ramified Recursion. 1. Introduction. Various recursive function classes have been characterized in categorical terms. This has been achieved by considering a category with certain structure endowed with a recursion scheme. The class of Primitive Recursive Functions (PR in the sequel), for instance, has been chased simply by means of a cartesian category and a Natural Numbers Object with parameters (nno in the sequel, see [11]). In [13] one can find a generalization of that characterization to a monoidal setting, achieved by endowing a monoidal category with a special kind of nno (a left nno) where the tensor product is included. It is also known that other classes containing PR can be obtained by adding more structure: considering for instance a topos ([8]), a cartesian closed category ([14]) or a category with finite limits ([12]). Less work has been done, however, on categorical characterizations of subrecursive function classes, that is, those contained in PR (see [4] and [5]). In PR there is at least a sequence of functions such that every function in it has a more complex growth than the preceding function in the sequence.