Petabit Switch Fabric Design

Total pages: 16

File type: PDF, size: 1020 KB


Recommended publications


Demand-Based Scheduling Using NoC Probes

Demand-based Scheduling using NoC Probes. Kurt Shuler, Jonah Probell, Monica Tang. Arteris, Inc., Sunnyvale, CA, USA. www.arteris.com. ABSTRACT: Contention for shared resources, such as memory in a system-on-chip, is inefficient. It limits performance and requires initiator IPs, such as CPUs, to stay powered up and run at clock speeds higher than they would otherwise. When a system runs multiple tasks simultaneously, contention will vary depending on each task’s demand for shared resources. Scheduling in a conventional system does not consider how much each task will demand shared resources. Proposed is a demand-based method of scheduling tasks that have diverse demand for shared resources in a multiprocessor system-on-chip. Tasks with extremes of high and low demand are scheduled to run simultaneously. Where tasks in a system are cyclical, knowledge of period, duty cycle, phase, and magnitude are considered in the scheduling algorithm. Using demand-based scheduling, the time variation of aggregate demand is reduced, as might be its maximum. The average access latency and resulting idle clock cycles are reduced. This allows initiator IPs to run at lower clock frequencies, finish their tasks sooner, and spend more time in powered down modes. Use of probes within a network-on-chip to gather demand statistics, application-specific algorithm considerations, operating system thread scheduler integration, and heterogeneous system middleware integration are discussed.

MIPI Low Latency Interface (LLI) for Mobile Phone BoM Cost Savings

MIPI Low Latency Interface (LLI) for Mobile Phone BoM Cost Savings. Scott Yang, Greater China Country Manager, Arteris Inc. Copyright © 2012 MIPI Alliance. All rights reserved. Legal Disclaimer: The material contained herein is not a license, either expressly or impliedly, to any IPR owned or controlled by any of the authors or developers of this material or MIPI. The material contained herein is provided on an “AS IS” basis and to the maximum extent permitted by applicable law, this material is provided AS IS AND WITH ALL FAULTS, and the authors and developers of this material and MIPI hereby disclaim all other warranties and conditions, either express, implied or statutory, including, but not limited to, any (if any) implied warranties, duties or conditions of merchantability, of fitness for a particular purpose, of accuracy or completeness of responses, of results, of workmanlike effort, of lack of viruses, and of lack of negligence. ALSO, THERE IS NO WARRANTY OR CONDITION OF TITLE, QUIET ENJOYMENT, QUIET POSSESSION, CORRESPONDENCE TO DESCRIPTION OR NON-INFRINGEMENT WITH REGARD TO THIS MATERIAL. All materials contained herein are protected by copyright laws, and may not be reproduced, republished, distributed, transmitted, displayed, broadcast or otherwise exploited in any manner without the express prior written permission of MIPI Alliance. MIPI, MIPI Alliance and the dotted rainbow arch and all related trademarks, tradenames, and other intellectual property are the exclusive property of MIPI Alliance and cannot be used without its express prior written permission.
IN NO EVENT WILL ANY AUTHOR OR DEVELOPER OF THIS MATERIAL OR MIPI BE LIABLE TO ANY OTHER PARTY FOR THE COST OF PROCURING SUBSTITUTE GOODS OR SERVICES, LOST PROFITS, LOSS OF USE, LOSS OF DATA, OR ANY INCIDENTAL, CONSEQUENTIAL, DIRECT, INDIRECT, OR SPECIAL DAMAGES WHETHER UNDER CONTRACT, TORT, WARRANTY, OR OTHERWISE, ARISING IN ANY WAY OUT OF THIS OR ANY OTHER AGREEMENT RELATING TO THIS MATERIAL, WHETHER OR NOT SUCH PARTY HAD ADVANCE NOTICE OF THE POSSIBILITY OF SUCH DAMAGES.

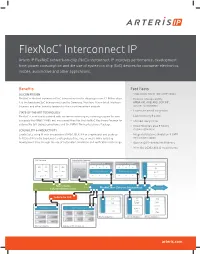

FlexNoC® Interconnect IP

FlexNoC® Interconnect IP. Arteris IP FlexNoC network-on-chip (NoC) interconnect IP improves performance, development time, power consumption and die size of system on chip (SoC) devices for consumer electronics, mobile, automotive and other applications.

Benefits

SILICON PROVEN: FlexNoC is the first commercial NoC interconnect and is shipping in over 1.5 billion chips. It is the backbone SoC interconnect used by Samsung, Mobileye, Altera (Intel), HiSilicon (Huawei) and other industry leaders for their most important projects.

STATE-OF-THE-ART TECHNOLOGY: FlexNoC is continually updated with the latest technologies, including support for new protocols like AMBA® AHB5 and new capabilities like the FlexNoC Resilience Package for automotive ISO 26262 compliance and the PIANO Timing Assistance Package.

SCALABILITY & PRODUCTIVITY: Create SoCs using IP with any protocol (AMBA, OCP, PIF or proprietary) and scale up to 100s of IP blocks. Implement any topology (tree, ring, or mesh) while reducing development time through the use of automated simulation and verification technology.

Fast Facts
• Scales from 10s to 100s of IP blocks
• Protocol interoperability: AMBA AXI, AHB, APB; OCP; PIF; custom / proprietary
• Lowest die area & congestion
• Lowest latency & power
• Shortest design time
• Visual floorplan, area & timing closure estimation
• Integrated SystemC simulation & UVM verification support
• Optimal QoS: bandwidth & latency
• Meet ISO 26262 ASIL D requirements

[Figure: example SoC block diagram with CPU, DSP (A/V), application IP and design-specific subsystems attached to FlexNoC, FlexWay and Ncore interconnects.]

Models and Techniques for Designing Mobile System-on-Chip Devices

Models and Techniques for Designing Mobile System-on-Chip Devices, by Ayub Ahmed Gubran. A dissertation submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy in the Faculty of Graduate and Postdoctoral Studies (Electrical and Computer Engineering), The University of British Columbia (Vancouver), August 2020. © Ayub Ahmed Gubran, 2020.

The following individuals certify that they have read, and recommend to the Faculty of Graduate and Postdoctoral Studies for acceptance, the dissertation entitled: Models and Techniques for Designing Mobile System-on-Chip Devices, submitted by Ayub Ahmed Gubran in partial fulfillment of the requirements for the degree of Doctor of Philosophy in Electrical and Computer Engineering.

Examining Committee: Tor M. Aamodt, Electrical and Computer Engineering (Supervisor); Steve Wilton, Electrical and Computer Engineering (Supervisory Committee Member); Alan Hu, Computer Science (University Examiner); Andre Ivanov, Electrical and Computer Engineering (University Examiner); John Owens, The University of California-Davis, Electrical and Computer Engineering (External Examiner). Additional Supervisory Committee Members: Sidney Fels, Electrical and Computer Engineering (Supervisory Committee Member).

Abstract: Mobile SoCs have become ubiquitous computing platforms, and, in recent years, they have become increasingly heterogeneous and complex. A typical SoC today includes CPUs, GPUs, image processors, video encoders/decoders, and AI engines. This dissertation addresses some of the challenges associated with SoCs in three pieces of work. The first piece of work develops a cycle-accurate model, Emerald, which provides a platform for studying system-level SoC interactions while including the impact of graphics. Our cycle-accurate infrastructure builds upon well-established tools, GPGPU-Sim and gem5, with support for graphics and GPGPU workloads, and full system simulation with Android.

Arteris IP, On-Chip Interconnect Market Leader, Takes Full Control

Arteris IP, On-Chip Interconnect Market Leader, Takes Full Control of its Network through NETGEAR Insight

“With Insight, monitoring and troubleshooting my networks in my Austin and Paris locations has never been easier.” Stéphane Méhat, CFO, Arteris IP

Company name: Arteris IP
Company size: 51-200
Industry: Semiconductors
Company website: www.arteris.com
Geographic region: Silicon Valley, California

BACKGROUND: Founded in February 2003, Arteris IP provides Network-on-Chip (NoC) interconnect semiconductor intellectual property (IP) to System on Chip (SoC) makers to help reduce chip development time, increase margins, and accelerate performance. Being the industry leader, Arteris IP invented the first commercial NoC SoC interconnect IP solution, FlexNoC, and the first highly-configurable cache coherent interconnect IP, Ncore, which are used by notable design teams such as Samsung, Toshiba, Huawei / HiSilicon, Mobileye (Intel), Altera (Intel), and Texas Instruments. With a focus on providing innovative SoC interconnect IP technologies for an array of applications from automobiles to mobile phones, strong network technology enables Arteris IP to serve its customers. Stéphane Méhat, Chief Financial Officer, manages Arteris IP’s financial strategy, accounting, taxes, HR, and administration, and also oversees the company’s IT management. His nimble team includes IT manager Bhavin Vaidya, accountants, and office managers to support the California, France, and Texas locations.

PROBLEM/OBJECTIVE: Similar to other thriving small businesses in Silicon Valley, Arteris IP is based primarily in Campbell, California, but also operates on a global scale. Stéphane was using a competitor’s devices in the Arteris IP France office and was beginning to set up a new office in Austin, Texas.

The Future of Semiconductor Intellectual Property Architectural Blocks in Europe

The Future of Semiconductor Intellectual Property Architectural Blocks in Europe. Author: Ilkka Tuomi. Editor: Marc Bogdanowicz. EUR 23962 EN - 2009.

The mission of the JRC-IPTS is to provide customer-driven support to the EU policy-making process by developing science-based responses to policy challenges that have both a socio-economic as well as a scientific/technological dimension.

European Commission, Joint Research Centre, Institute for Prospective Technological Studies. Contact information: Address: Edificio Expo. c/ Inca Garcilaso, 3. E-41092 Seville (Spain). E-mail: [email protected] Tel.: +34 954488318. Fax: +34 954488300. http://ipts.jrc.ec.europa.eu http://www.jrc.ec.europa.eu

Legal Notice: Neither the European Commission nor any person acting on behalf of the Commission is responsible for the use which might be made of this publication.

Europe Direct is a service to help you find answers to your questions about the European Union. Freephone number (*): 00 800 6 7 8 9 10 11. (*) Certain mobile telephone operators do not allow access to 00 800 numbers or these calls may be billed. A great deal of additional information on the European Union is available on the Internet. It can be accessed through the Europa server http://europa.eu/

JRC52422. EUR 23962 EN. Catalogue number: LF-NA-23962-EN-C. ISSN: 1018-5593. ISBN: 978-92-79-13058-8. DOI: 10.2791/13315. Luxembourg: Office for Official Publications of the European Communities. © European Communities, 2009. Reproduction is authorised provided the source is acknowledged. Printed in Spain.

Preface: Over the last decades, developed economies have been undergoing a structural transformation towards knowledge economies.

CCIX, Gen-Z, OpenCAPI: Overview & Comparison

13th ANNUAL WORKSHOP 2017. CCIX, GEN-Z, OpenCAPI: OVERVIEW & COMPARISON. Brad Benton, Advanced Micro Devices. March, 2017. (OpenFabrics Alliance Workshop 2017)

NEWLY EMERGING BUS/INTERCONNECT STANDARDS

Three new bus/interconnect standards announced in 2016
• Cache Coherent Interconnect for Accelerators (CCIX) www.ccixconsortium.com
• Gen-Z genzconsortium.org
• Open Coherent Accelerator Processor Interface (OpenCAPI) opencapi.org

Driving forces behind these new standards
• Tighter coupling between processors and accelerators (GPUs, FPGAs, etc.)
• Better exploitation of new and emerging memory/storage technologies
• Streamline software stacks
• Reduce data movement by direct access to memory
• Open standards-based solutions

Why 3 different standards?
• Different groups have been working to solve similar problems
• However, each approach has its differences
• Many companies involved with multiple consortia
• Possible shake outs/convergence as things move forward

CONSORTIA MEMBER COMPANIES
CCIX Gen-Z OpenCAPI AMD Keysight Alpha Data Lenovo Achronix NGCodec Amphenol Mellanox AMD Lotes AMD NVIDIA ARM Micron Amphenol Mellanox Amphenol Parade Arteris Netspeed ARM Micron AppliedMaterials Samsung Avery Design Qualcomm Broadcom Microsemi Dell SuperMicro Broadcom Redhat Cavium Nokia ELI Beamlines Synology Bull/Atos Synopsys Cray PDLA Group Google Tektronix Cadence Teledyne Dell Redhat HPE Toshiba Cavium TMSC FIT Samsung IBM Univ. Cordoba Huawei Xlinx HPE Seagate Mellanox WesternDigital IBM Huawei SK hynix Micron Xilinx IDT IBM SpinTransfer Micronsemi IDT WesternDigital IntelliProp Xilinx JaBil Yadro

CONSORTIA MEMBERSHIP DISTRIBUTION
Many companies have dual or triple memberships

CCIX: CACHE COHERENT INTERCONNECT FOR ACCELERATORS
www.ccixconsortium.com

Arteris IP and Magillem Partner to Create Integrated System-on-Chip

For Release June 25, 2018. Contacts: Kurt Shuler, Arteris IP, +1 408-470-7300, [email protected]; Pascal Chauvet, Magillem, +1 415-867-5094, [email protected]

Arteris IP and Magillem Partner to Create Integrated System-on-Chip Architecture Environment

Single environment allows design teams to more easily build AI and autonomous driving SoCs using FlexNoC and Ncore IP and share data for ISO 26262 compliance

CAMPBELL, Calif. – June 25, 2018 – Arteris IP, the world’s leading supplier of silicon-proven commercial network-on-chip (NoC) interconnect intellectual property (IP), and Magillem, the leading provider of front-end design XML solutions and best-in-class tools to reduce the global cost of complex designs, today announced a partnership and product integration that accelerates the architectural definition of complex chips. The first technical fruit of the partnership is the validation of full compliance of Arteris IP interconnects with the Magillem environment. Within a single environment, joint customers can now easily build a system-on-chip with multiple Ncore and FlexNoC instances. Using the Magillem front-end design environment (MAI, MPA and MRV), users can import Arteris FlexNoC non-coherent interconnects and Ncore cache coherent interconnects using the IP-XACT format. Detailed descriptions of the Arteris IP NoC instances imported into the Magillem environment can be used for full SoC assembly at the RTL and SystemC levels. The Magillem / Arteris IP integration will be demonstrated at the Design Automation Conference (DAC), 24-28 June 2018, in the Magillem booth #1351. This integration eases the design of today’s highly complex artificial intelligence (AI) and autonomous driving SoCs, which are now bounded by the performance of on-chip interconnects rather than the performance of on-chip processors and hardware accelerators.

Market Dynamics Fuel Semiconductor Evolution

Global Semiconductor Alliance. Market Dynamics Fuel Semiconductor Evolution. Vol. 17 No. 2, June 2010. Published by GSA. $60 (U.S.)

The First Truly Global Foundry: Congratulations to the new GLOBALFOUNDRIES, the merged operations of GLOBALFOUNDRIES and Chartered. To our continued mutual success from your Global Customers and Partners.

ARTICLES
• Enabling the Automotive Design Chain with Virtualization. Frank Schirrmeister, Director, Product Marketing, System-level Solutions, Synopsys Inc.; Mark Williams, Director, Solutions Marketing, Synopsys Inc.
• Cloud Computing Evolution: Disrupting the IT Supply Chain. Chris Weitz, Director, Technology Strategy and Architecture, Deloitte Consulting LLP; John La Bouff, Senior Manager, Deloitte Consulting LLP
• IP Innovation: At the Core of Consumer Electronics Design. Frank Ferro, Director, Marketing, Sonics Inc.
• Shift in Consumer Focus Drives Evolution of the Semiconductor Industry. John Brewer, Vice President, Corporate and Business Development, SiGe Semiconductor
• Onshore Silicon for Long-term Supply Security: Foundry Selection for Military Applications. Kirk Peterson, CFD Foundry Manager, ON Semiconductor
• Integrated Power Management Platforms: The Entry of Fabless Design Houses to Power Management System Design. Dr. Shye Shapira, Director, Research and Development, Power Management Platforms, TowerJazz; Todd Mahlen, Director, Marketing, Power Management Platforms, TowerJazz

ACCELERATE THE GROWTH AND INCREASE THE RETURN ON INVESTED CAPITAL OF THE GLOBAL SEMICONDUCTOR INDUSTRY BY FOSTERING A MORE EFFECTIVE FABLESS ECOSYSTEM

US Department of Justice Antitrust Division Notice

This document is scheduled to be published in the Federal Register on 11/06/2012 and available online at http://federalregister.gov/a/2012-27107, and on FDsys.gov. U.S. Department of Justice, Antitrust Division. Notice Pursuant to the National Cooperative Research and Production Act of 1993 -- Heterogeneous System Architecture Foundation. Notice is hereby given that, on October 9, 2012, pursuant to Section 6(a) of the National Cooperative Research and Production Act of 1993, 15 U.S.C. 4301 et seq. (“the Act”), Heterogeneous System Architecture Foundation (“HSA Foundation”) has filed written notifications simultaneously with the Attorney General and the Federal Trade Commission disclosing changes in its membership. The notifications were filed for the purpose of extending the Act’s provisions limiting the recovery of antitrust plaintiffs to actual damages under specified circumstances. Specifically, Samsung Electronics Co., Ltd., Gyeonggi-do, REPUBLIC OF KOREA; Symbio, San Jose, CA; Arteris, Inc., Sunnyvale, CA; Vivante Corporation, Sunnyvale, CA; Apical Ltd., London, UNITED KINGDOM; MulticoreWare, Cupertino, CA; Sonics, Inc., Milpitas, CA; Qualcomm Incorporated, San Diego, CA; and LG Electronics, Inc., Seocho-gu, Seoul, REPUBLIC OF KOREA, have been added as parties to this venture. No other changes have been made in either the membership or planned activity of the group research project. Membership in this group research project remains open, and HSA Foundation intends to file additional written notifications disclosing all changes in membership. On August 31, 2012, HSA Foundation filed its original notification pursuant to Section 6(a) of the Act. The Department of Justice published a notice in the Federal Register pursuant to Section 6(b) of the Act on October 11, 2012 (77 FR 61786).

OMAP4460 Multimedia Device Silicon Errata Silicon Revision 1.1, 1.0 Texas Instruments OMAP™ Family of Products Version L

Public Version. OMAP4460 Multimedia Device Silicon Errata. Silicon Revision 1.1, 1.0. Texas Instruments OMAP™ Family of Products. Version L. Errata Literature Number: SWPZ017L. September 2011–Revised September 2013.

WARNING: EXPORT NOTICE. Recipient agrees to not knowingly export or re-export, directly or indirectly, any product or technical data (as defined by the U.S., EU, and other Export Administration Regulations) including software, or any controlled product restricted by other applicable national regulations, received from Disclosing party under this Agreement, or any direct product of such technology, to any destination to which such export or re-export is restricted or prohibited by U.S. or other applicable laws, without obtaining prior authorisation from U.S. Department of Commerce and other competent Government authorities to the extent required by those laws. This provision shall survive termination or expiration of this Agreement. According to our best knowledge of the state and end-use of this product or technology, and in compliance with the export control regulations of dual-use goods in force in the origin and exporting countries, this technology is classified as follows: US ECCN: 3E991; EU ECCN: EAR99. And may require export or re-export license for shipping it in compliance with the applicable regulations of certain countries.

FlexNoC® Resilience Package: Arteris FlexNoC Resilience Package IP

Arteris® FlexNoC® Resilience Package. Arteris FlexNoC Resilience Package IP.

The Arteris FlexNoC Resilience Package is a complementary product to Arteris FlexNoC fabric IP. It implements hardware resilience features essential for systems-on-chip (SoCs) targeted for mission-critical uses, such as those requiring ISO 26262 / ASIL (automotive) or IEC 61508 compliance.

Learn more: www.Arteris.com

END-TO-END HARDWARE RESILIENCE FOR SOCs

Resilience and Functional Safety: Currently developed systems-on-chip for safety-related applications in the automotive, industrial and medical markets use CPU cores like the ARM® Cortex®-R5 and Cortex-R7 processors. These types of CPU core IP implement techniques like ECC and parity data protection, dual-core lockstep (DCLS) redundancy, duplicated internal memories, safety checkers, and built-in self-tests (BIST).

However, this CPU-only approach neglects to provide end-to-end protection from master to slave. End-to-end protection can only be provided by implementing resilience features in the on-chip interconnect.

The FlexNoC Resilience Package enables the implementation of data protection and control features like these within the on-chip interconnect fabric.

HIGHLIGHTS
• The 1st and only commercial interconnect fabric IP to provide built-in support for ARM® Cortex®-R5 and Cortex-R7 processor port checking.
• End-to-End protection of any or all IP-to-IP communications within the SoC, selectable by the user.
• Advanced Technology: Unit duplication and comparison, custom transport protection, packet validity checking, transaction timeout, control register parity checking, BIST and fault controller.
• Supplements existing SoC fabric, making it easy to add safety features to an existing SoC or partition an SoC into safe and non-safe regions.