Introduction to I18n

Total pages: 16

File type: PDF, size: 1020 KB

Recommended publications

-

ALPHA CHI OMEGA Accreditation Report 2014-2015

ALPHA CHI OMEGA Accreditation Report 2014-2015 Intellectual Development Alpha Chi Omega was ranked second out of nine Panhellenic Sororities in the fall 2014 semester with a GPA of 3.4475, a decrease of .01306 from the spring 2014 semester. The 3.4475 GPA placed the chapter above the All Sorority and All Greek average. Alpha Chi Omega was ranked first out of nine Panhellenic Sororities in the spring 2015 semester with a GPA of 3.48402, an increase of .03652 from the fall 2014 semester. The 3.48402 GPA placed the chapter above the All Sorority and All Greek average. Alpha Chi Omega’s spring 2015 new member class GPA was 3.383, ranking first out of nine Panhellenic Sororities. Alpha Chi Omega had 46.6% of the chapter on the Dean’s List in the fall 2014 semester and 28.2% on the Dean’s List in the spring 2015 semester. Alpha Chi Omega requires a minimum 2.6 GPA for membership. This standard is higher than the Inter/National Headquarters and University requirements. Alpha Chi Omega fosters an environment for strong academic performance. The chapter provides in-house tutoring, peer mentoring, and regular study hours. The chapter also connects members to the Center for Academic Success, the Writing and Math Center, and other on-campus resources. Alpha Chi Omega maintains a designated study space frequently used by members as well as tutors, teaching assistants, and professors leading study sessions. This space is complete with a study buddy desk fully stocked with office and study supplies. Alpha Chi Omega’s academic plan—incorporating individualization and positive incentives—is consistently recognized as a best practice. -

SLP-5 Setup for Uni-3/5/7 & WM-Nano Chinese BIG5 Characters

SLP-5 Configuration for Uni-3, Uni-5, Uni-7 & WM-Nano Chinese BIG5 Characters The Uni-3, Uni-5, Uni-7 scales and WM-Nano wrapper can display and print PLU information in Chinese BIG5, also known as Traditional, characters but all menus, etc. remain in English. Chinese characters are entered in SLP-5 and sent to the scales. Chinese characters cannot be entered at the scales. Refer to the following procedure to configure the scales and wrapper and SLP-5. SCALE SETUP Update the firmware for the Uni-5, Uni-7 and WM-Nano to the following versions. Uni-5: C1830x Uni-7: C1828x (PK-260A CF CPU), C2072x (PK-260B SD CPU) WM-Nano: C1832x (PK-260A CF CPU), C2074x (PK-260B SD CPU) Uni-3: C1937U or later, C2271x The Language setting in the Uni-3 must be set to print and display Chinese characters. Note: Only the Uni-3L2 models display Chinese characters. KEY IN ITEM No. Enter 6000, press MODE 0.000 0.000 0.00 0.00 <B00 SETUP> Enter 495344, press PLU <B00 SETUP> <B00 SETUP> Enter 26, press Down Arrow <B00 SETUP> B26 COUNTRY Press ENTER B26 COUNTRY *LANGUAGE 1:ENGLISH Enter 15, press ENTER B26-01-02 LANGUAGE 1 *LANGUAGE 15:FORMOSAN After the long beep press MODE B26-01-02 LANGUAGE 15 REBOOT Shut off the scale for this setting change to 19004-0000 take effect. SLP-5 SETUP SLP-5 must be configured to use Chinese fonts. Refer to the instructions below. The following Chinese character sizes are available. -

The Backspace

spumante e rosato* (choose 2) per la tavola glera NV lunetta prosecco, veneto straw, baked apple, peach 11 43 farm greens :: giardiniera, red onion, pecorino romano lambrusco NV medici ermete, emilia romagna black currant, violet, graphite 10 39 lamb & pork meatballs :: stewed tomato, bread crumb, asiago, mint NV l’onesta, emilia romagna fresh strawberry, violet, orange zest 11 43 baked ricotta :: lemon, poached tomato, olive oil * lambrusco whipped lardo bruschetta :: herbs, citrus segment * aglianico 2015 feudi di san gregorio ‘ros‘aura’, irpinia, campania fresh strawberry, rose petal 12 47 shishito peppers :: melon, basil roasted corn :: ricotta, pesto bianco bombino bianco 2016 poderi dal nespoli “pagadebit”, romagna gooseberry, apple, hawthorn blossom 11 43 antipasti (choose 3) pinot grigio 2015 ronchi di pietro, friuli hazelnut, white peach, limestone 48 sweet potato :: cippolini onion, mushroom, rosemary friulano 2014 villa chiopris, friuli grave straw, white flowers, almond 10 39 braised greens :: golden raisins, pecan, red pepper falanghina 2014 terredora, campagna lemon, quince, pear 13 51 :: tomato, basil, balsamic house-made mozzarella chardonnay 2014 tenute del cabreo ‘la pietra’, chianti classico peach preserves, salted popcorn, vanilla 85 roasted beets :: celery, lemon zest, pepperoncini moscato 2015 oddera cascina di fiori, asti tangerine, peach, sage 12 47 the backspace marinated olives :: lemon, rosemary, oregano green beans :: pancetta, garlic, lemon family-style menu rosso $20 per guest pizze (choose 2) pergola rosso -

INTERSKILL MAINFRAME QUARTERLY December 2011

INTERSKILL MAINFRAME QUARTERLY December 2011 Retaining Data Center Skills Inside This Issue and Knowledge Retaining Data Center Skills and Knowledge 1 Interskill Releases - December 2011 2 By Greg Hamlyn Vendor Briefs 3 This the final chapter of this four part series that briefly Taking Care of Storage 4 explains the data center skills crisis and the pros and cons of Learning Spotlight – Managing Projects 5 implementing a coaching or mentoring program. In this installment we will look at some of the steps to Tech-Head Knowledge Test – Utilizing ISPF 5 implementing a program such as this into your data center. OPINION: The Case for a Fresh Technical If you missed these earlier installments, click the links Opinion 6 below. TECHNICAL: Lost in Translation Part 1 - EBCDIC Code Pages 7 Part 1 – The Data Center Skills Crisis MAINFRAME – Weird and Unusual! 10 Part 2 – How Can I Prevent Skills Loss in My Data Center? Part 3 – Barriers to Implementing a Coaching or Mentoring Program should consider is the GROW model - Determine whether an external consultant should be Part Four – Implementing a Successful Coaching used (include pros and cons) - Create a basic timeline of the project or Mentoring Program - Identify how you will measure the effectiveness of the project The success of any project comes down to its planning. If - Provide some basic steps describing the coaching you already believe that your data center can benefit from and mentoring activities skills and knowledge transfer and that coaching and - Next phase if the pilot program is deemed successful mentoring will assist with this, then outlining a solid (i.e. -

Edit Bibliographic Records

OCLC Connexion Browser Guides Edit Bibliographic Records Last updated: May 2014 6565 Kilgour Place, Dublin, OH 43017-3395 www.oclc.org Revision History Date Section title Description of changes May 2014 All Updated information on how to open the diacritic window. The shortcut key is no longer available. May 2006 1. Edit record: basics Minor updates. 5. Insert diacritics Revised to update list of bar syntax character codes to reflect and special changes in character names and to add newly supported characters characters. November 2006 1. Edit record: basics Minor updates. 2. Editing Added information on guided editing for fields 541 and 583, techniques, template commonly used when cataloging archival materials. view December 2006 1. Edit record: basics Updated to add information about display of WorldCat records that contain non-Latin scripts.. May 2007 4. Validate record Revised to document change in default validation level from None to Structure. February 2012 2 Editing techniques, Series added entry fields 800, 810, 811, 830 can now be used to template view insert data from a “cited” record for a related series item. Removed “and DDC” from Control All commands. DDC numbers are no longer controlled in Connexion. April 2012 2. Editing New section on how to use the prototype OCLC Classify service. techniques, template view September 2012 All Removed all references to Pathfinder. February 2013 All Removed all references to Heritage Printed Book. April 2013 All Removed all references to Chinese Name Authority © 2014 OCLC Online Computer Library Center, Inc. 6565 Kilgour Place Dublin, OH 43017-3395 USA The following OCLC product, service and business names are trademarks or service marks of OCLC, Inc.: CatExpress, Connexion, DDC, Dewey, Dewey Decimal Classification, OCLC, WorldCat, WorldCat Resource Sharing and “The world’s libraries. -

Consonant Characters and Inherent Vowels

Global Design: Characters, Language, and More Richard Ishida W3C Internationalization Activity Lead Copyright © 2005 W3C (MIT, ERCIM, Keio) slide 1 Getting more information W3C Internationalization Activity http://www.w3.org/International/ Copyright © 2005 W3C (MIT, ERCIM, Keio) slide 2 Outline Character encoding: What's that all about? Characters: What do I need to do? Characters: Using escapes Language: Two types of declaration Language: The new language tag values Text size Navigating to localized pages Copyright © 2005 W3C (MIT, ERCIM, Keio) slide 3 Character encoding Character encoding: What's that all about? Copyright © 2005 W3C (MIT, ERCIM, Keio) slide 4 Character encoding The Enigma Photo by David Blaikie Copyright © 2005 W3C (MIT, ERCIM, Keio) slide 5 Character encoding Berber 4,000 BC Copyright © 2005 W3C (MIT, ERCIM, Keio) slide 6 Character encoding Tifinagh http://www.dailymotion.com/video/x1rh6m_tifinagh_creation Copyright © 2005 W3C (MIT, ERCIM, Keio) slide 7 Character encoding Character set Character set ⴰ ⴱ ⴲ ⴳ ⴴ ⴵ ⴶ ⴷ ⴸ ⴹ ⴺ ⴻ ⴼ ⴽ ⴾ ⴿ ⵀ ⵁ ⵂ ⵃ ⵄ ⵅ ⵆ ⵇ ⵈ ⵉ ⵊ ⵋ ⵌ ⵍ ⵎ ⵏ ⵐ ⵑ ⵒ ⵓ ⵔ ⵕ ⵖ ⵗ ⵘ ⵙ ⵚ ⵛ ⵜ ⵝ ⵞ ⵟ ⵠ ⵢ ⵣ ⵤ ⵥ ⵯ Copyright © 2005 W3C (MIT, ERCIM, Keio) slide 8 Character encoding Coded character set 0 1 2 3 0 1 Coded character set 2 3 4 5 6 7 8 9 33 (hexadecimal) A B 52 (decimal) C D E F Copyright © 2005 W3C (MIT, ERCIM, Keio) slide 9 Character encoding Code pages ASCII Copyright © 2005 W3C (MIT, ERCIM, Keio) slide 10 Character encoding Code pages ISO 8859-1 (Latin 1) Western Europe ç (E7) Copyright © 2005 W3C (MIT, ERCIM, Keio) slide 11 Character encoding Code pages ISO 8859-7 Greek η (E7) Copyright © 2005 W3C (MIT, ERCIM, Keio) slide 12 Character encoding Double-byte characters Standard Country No. -

SUPPORTING the CHINESE, JAPANESE, and KOREAN LANGUAGES in the OPENVMS OPERATING SYSTEM by Michael M. T. Yau ABSTRACT the Asian L

SUPPORTING THE CHINESE, JAPANESE, AND KOREAN LANGUAGES IN THE OPENVMS OPERATING SYSTEM By Michael M. T. Yau ABSTRACT The Asian language versions of the OpenVMS operating system allow Asian-speaking users to interact with the OpenVMS system in their native languages and provide a platform for developing Asian applications. Since the OpenVMS variants must be able to handle multibyte character sets, the requirements for the internal representation, input, and output differ considerably from those for the standard English version. A review of the Japanese, Chinese, and Korean writing systems and character set standards provides the context for a discussion of the features of the Asian OpenVMS variants. The localization approach adopted in developing these Asian variants was shaped by business and engineering constraints; issues related to this approach are presented. INTRODUCTION The OpenVMS operating system was designed in an era when English was the only language supported in computer systems. The Digital Command Language (DCL) commands and utilities, system help and message texts, run-time libraries and system services, and names of system objects such as file names and user names all assume English text encoded in the 7-bit American Standard Code for Information Interchange (ASCII) character set. As Digital's business began to expand into markets where common end users are non-English speaking, the requirement for the OpenVMS system to support languages other than English became inevitable. In contrast to the migration to support single-byte, 8-bit European characters, OpenVMS localization efforts to support the Asian languages, namely Japanese, Chinese, and Korean, must deal with a more complex issue, i.e., the handling of multibyte character sets. -

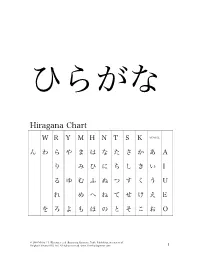

Hiragana Chart

ひらがな Hiragana Chart W R Y M H N T S K VOWEL ん わ ら や ま は な た さ か あ A り み ひ に ち し き い I る ゆ む ふ ぬ つ す く う U れ め へ ね て せ け え E を ろ よ も ほ の と そ こ お O © 2010 Michael L. Kluemper et al. Beginning Japanese, Tuttle Publishing, an imprint of Periplus Editions (HK) Ltd. All rights reserved. www.TimeForJapanese.com. 1 Beginning Japanese 名前: ________________________ 1-1 Hiragana Activity Book 日付: ___月 ___日 一、 Practice: あいうえお かきくけこ がぎぐげご O E U I A お え う い あ あ お え う い あ お う あ え い あ お え う い お う い あ お え あ KO KE KU KI KA こ け く き か か こ け く き か こ け く く き か か こ き き か こ こ け か け く く き き こ け か © 2010 Michael L. Kluemper et al. Beginning Japanese, Tuttle Publishing, an imprint of Periplus Editions (HK) Ltd. All rights reserved. www.TimeForJapanese.com. 2 GO GE GU GI GA ご げ ぐ ぎ が が ご げ ぐ ぎ が ご ご げ ぐ ぐ ぎ ぎ が が ご げ ぎ が ご ご げ が げ ぐ ぐ ぎ ぎ ご げ が 二、 Fill in each blank with the correct HIRAGANA. SE N SE I KI A RA NA MA E 1. -

14 * (Asterisk), 169 \ (Backslash) in Smb.Conf File, 85

,sambaIX.fm.28352 Page 385 Friday, November 19, 1999 3:40 PM Index <> (angled brackets), 14 archive files, 137 * (asterisk), 169 authentication, 19, 164–171 \ (backslash) in smb.conf file, 85 mechanisms for, 35 \\ (backslashes, two) in directories, 5 NT domain, 170 : (colon), 6 share-level option for, 192 \ (continuation character), 85 auto services option, 124 . (dot), 128, 134 automounter, support for, 35 # (hash mark), 85 awk script, 176 % (percent sign), 86 . (period), 128 B ? (question mark), 135 backup browsers ; (semicolon), 85 local master browser, 22 / (slash character), 129, 134–135 per local master browser, 23 / (slash) in shares, 116 maximum number per workgroup, 22 _ (underscore) 116 backup domain controllers (BDCs), 20 * wildcard, 177 backups, with smbtar program, 245–248 backwards compatibility elections and, 23 for filenames, 143 A Windows domains and, 20 access-control options (shares), 160–162 base directory, 40 accessing Samba server, 61 .BAT scripts, 192 accounts, 51–53 BDCs (backup domain controllers), 20 active connections, option for, 244 binary vs. source files, 32 addresses, networking option for, 106 bind interfaces only option, 106 addtosmbpass executable, 176 bindings, 71 admin users option, 161 Bindings tab, 60 AFS files, support for, 35 blocking locks option, 152 aliases b-node, 13 multiple, 29 boolean type, 90 for NetBIOS names, 107 bottlenecks, 320–328 alid users option, 161 reducing, 321–326 announce as option, 123 types of, 320 announce version option, 123 broadcast addresses, troubleshooting, 289 API -

Basis Technology Unicode対応ライブラリ スペックシート 文字コード その他の名称 Adobe-Standard-Encoding A

Basis Technology Unicode対応ライブラリ スペックシート 文字コード その他の名称 Adobe-Standard-Encoding Adobe-Symbol-Encoding csHPPSMath Adobe-Zapf-Dingbats-Encoding csZapfDingbats Arabic ISO-8859-6, csISOLatinArabic, iso-ir-127, ECMA-114, ASMO-708 ASCII US-ASCII, ANSI_X3.4-1968, iso-ir-6, ANSI_X3.4-1986, ISO646-US, us, IBM367, csASCI big-endian ISO-10646-UCS-2, BigEndian, 68k, PowerPC, Mac, Macintosh Big5 csBig5, cn-big5, x-x-big5 Big5Plus Big5+, csBig5Plus BMP ISO-10646-UCS-2, BMPstring CCSID-1027 csCCSID1027, IBM1027 CCSID-1047 csCCSID1047, IBM1047 CCSID-290 csCCSID290, CCSID290, IBM290 CCSID-300 csCCSID300, CCSID300, IBM300 CCSID-930 csCCSID930, CCSID930, IBM930 CCSID-935 csCCSID935, CCSID935, IBM935 CCSID-937 csCCSID937, CCSID937, IBM937 CCSID-939 csCCSID939, CCSID939, IBM939 CCSID-942 csCCSID942, CCSID942, IBM942 ChineseAutoDetect csChineseAutoDetect: Candidate encodings: GB2312, Big5, GB18030, UTF32:UTF8, UCS2, UTF32 EUC-H, csCNS11643EUC, EUC-TW, TW-EUC, H-EUC, CNS-11643-1992, EUC-H-1992, csCNS11643-1992-EUC, EUC-TW-1992, CNS-11643 TW-EUC-1992, H-EUC-1992 CNS-11643-1986 EUC-H-1986, csCNS11643_1986_EUC, EUC-TW-1986, TW-EUC-1986, H-EUC-1986 CP10000 csCP10000, windows-10000 CP10001 csCP10001, windows-10001 CP10002 csCP10002, windows-10002 CP10003 csCP10003, windows-10003 CP10004 csCP10004, windows-10004 CP10005 csCP10005, windows-10005 CP10006 csCP10006, windows-10006 CP10007 csCP10007, windows-10007 CP10008 csCP10008, windows-10008 CP10010 csCP10010, windows-10010 CP10017 csCP10017, windows-10017 CP10029 csCP10029, windows-10029 CP10079 csCP10079, windows-10079 -

Programmer's Manual SP2000 Series

Dot Matrix Printer SP2000 Series Programmer’s Manual TABLE OF CONTENTS 1. Control Codes (Star Mode) ......................................................................... 1 1-1. Control Codes List .............................................................................. 1 1-1-1. Character Selection .................................................................. 1 1-1-2. Print Position Control ............................................................... 3 1-1-3. Dot Graphics Control ............................................................... 4 1-1-4. Download Graphics Printing .................................................... 4 1-1-5. Peripheral Device Control ........................................................ 4 1-1-6. Auto Cutter Control (SP2500 type printers only) .................... 5 1-1-7. Commands to Set the Page Format .......................................... 5 1-1-8. Other Commands...................................................................... 6 1-2. Control Code Details ........................................................................... 7 1-2-1. Character Selection .................................................................. 7 1-2-2. Print Position Control ............................................................. 17 1-2-3. Dot Graphics Control ............................................................. 25 1-2-4. Download Graphics Printing .................................................. 28 1-2-5. Peripheral Device Control ..................................................... -

Orthographies in Grammar Books

Preprints (www.preprints.org) | NOT PEER-REVIEWED | Posted: 30 July 2018 doi:10.20944/preprints201807.0565.v1 Tomislav Stojanov, [email protected], [email protected] Institute of Croatian Language and Linguistic Republike Austrije 16, 10.000 Zagreb, Croatia Orthographies in Grammar Books – Antiquity and Humanism Summary This paper researches the as yet unstudied topic of orthographic content in antique, medieval, and Renaissance grammar books in European languages, as part of a wider research of the origin of orthographic standards in European languages. As a central place for teachings about language, grammar books contained orthographic instructions from the very beginning, and such practice continued also in later periods. Understanding the function, content, and orthographic forms in the past provides for a better description of the nature of the orthographic standard in the present. The evolution of grammatographic practice clearly shows the continuity of development of orthographic content from a constituent of grammar studies through the littera unit gradually to an independent unit, then into annexed orthographic sections, and later into separate orthographic manuals. 5 antique, 22 Latin, and 17 vernacular grammars were analyzed, describing 19 European languages. The research methodology is based on distinguishing orthographic content in the narrower sense (grapheme to meaning) from the broader sense (grapheme to phoneme). In this way, the function of orthographic description was established separately from the study of spelling. As for the traditional description of orthographic content in the broader sense in old grammar books, it is shown that orthographic content can also be studied within the grammatographic framework of a specific period, similar to the description of morphology or syntax.