INTERSKILL MAINFRAME QUARTERLY December 2011

Total pages: 16

File type: PDF, Size: 1020 KB

Recommended publications

ISO Basic Latin Alphabet

The ISO basic Latin alphabet is a Latin-script alphabet and consists of two sets of 26 letters, codified in[1] various national and international standards and used widely in international communication. The two sets contain the following 26 letters each:[1][2] Uppercase Latin alphabet: A B C D E F G H I J K L M N O P Q R S T U V W X Y Z. Lowercase Latin alphabet: a b c d e f g h i j k l m n o p q r s t u v w x y z. History: By the 1960s it became apparent to the computer and telecommunications industries in the First World that a non-proprietary method of encoding characters was needed. The International Organization for Standardization (ISO) encapsulated the Latin script in their (ISO/IEC 646) 7-bit character-encoding standard. To achieve widespread acceptance, this encapsulation was based on popular usage. The standard was based on the already published American Standard Code for Information Interchange, better known as ASCII, which included in the character set the 26 × 2 letters of the English alphabet. Later standards issued by the ISO, for example ISO/IEC 8859 (8-bit character encoding) and ISO/IEC 10646 (Unicode Latin), have continued to define the 26 × 2 letters of the English alphabet as the basic Latin script with extensions to handle other letters in other languages.[1] Terminology: Name for Unicode block that contains all letters: The Unicode block that contains the alphabet is called "C0 Controls and Basic Latin".

Unicode and Code Page Support

Natural for Mainframes Unicode and Code Page Support Version 4.2.6 for Mainframes October 2009 This document applies to Natural Version 4.2.6 for Mainframes and to all subsequent releases. Specifications contained herein are subject to change and these changes will be reported in subsequent release notes or new editions. Copyright © Software AG 1979-2009. All rights reserved. The name Software AG, webMethods and all Software AG product names are either trademarks or registered trademarks of Software AG and/or Software AG USA, Inc. Other company and product names mentioned herein may be trademarks of their respective owners. Table of Contents: 1 Unicode and Code Page Support; 2 Introduction; About Code Pages and Unicode; About Unicode and Code Page Support in Natural; ICU on Mainframe Platforms; 3 Unicode and Code Page Support in the Natural Programming Language; Natural Data Format U for Unicode-Based Data; Statements; Logical

Iso/Iec 8632-4:1999(E)

INTERNATIONAL STANDARD ISO/IEC 8632-4 Second edition 1999-12-01 Information technology — Computer graphics — Metafile for the storage and transfer of picture description information — Part 4: Clear text encoding Technologies de l'information — Infographie — Métafichier de stockage et de transfert des informations de description d'images — Partie 4: Codage en clair des textes Reference number ISO/IEC 8632-4:1999(E) © ISO/IEC 1999 PDF disclaimer This PDF file may contain embedded typefaces. In accordance with Adobe's licensing policy, this file may be printed or viewed but shall not be edited unless the typefaces which are embedded are licensed to and installed on the computer performing the editing. In downloading this file, parties accept therein the responsibility of not infringing Adobe's licensing policy. The ISO Central Secretariat accepts no liability in this area. Adobe is a trademark of Adobe Systems Incorporated. Details of the software products used to create this PDF file can be found in the General Info relative to the file; the PDF-creation parameters were optimized for printing. Every care has been taken to ensure that the file is suitable for use by ISO member bodies. In the unlikely event that a problem relating to it is found, please inform the Central Secretariat at the address given below. © ISO/IEC 1999 All rights reserved. Unless otherwise specified, no part of this publication may be reproduced or utilized in any form or by any means, electronic or mechanical, including photocopying and microfilm, without permission in writing from either ISO at the address below or ISO's member body in the country of the requester.

Database Globalization Support Guide

Oracle® Database Database Globalization Support Guide 19c E96349-05 May 2021 Oracle Database Database Globalization Support Guide, 19c E96349-05 Copyright © 2007, 2021, Oracle and/or its affiliates. Primary Author: Rajesh Bhatiya Contributors: Dan Chiba, Winson Chu, Claire Ho, Gary Hua, Simon Law, Geoff Lee, Peter Linsley, Qianrong Ma, Keni Matsuda, Meghna Mehta, Valarie Moore, Cathy Shea, Shige Takeda, Linus Tanaka, Makoto Tozawa, Barry Trute, Ying Wu, Peter Wallack, Chao Wang, Huaqing Wang, Sergiusz Wolicki, Simon Wong, Michael Yau, Jianping Yang, Qin Yu, Tim Yu, Weiran Zhang, Yan Zhu This software and related documentation are provided under a license agreement containing restrictions on use and disclosure and are protected by intellectual property laws. Except as expressly permitted in your license agreement or allowed by law, you may not use, copy, reproduce, translate, broadcast, modify, license, transmit, distribute, exhibit, perform, publish, or display any part, in any form, or by any means. Reverse engineering, disassembly, or decompilation of this software, unless required by law for interoperability, is prohibited. The information contained herein is subject to change without notice and is not warranted to be error-free. If you find any errors, please report them to us in writing. If this is software or related documentation that is delivered to the U.S. Government or anyone licensing it on behalf of the U.S. Government, then the following notice is applicable: U.S. GOVERNMENT END USERS: Oracle programs (including any operating system, integrated software, any programs embedded, installed or activated on delivered hardware, and modifications of such programs) and Oracle computer documentation or other Oracle data delivered to or accessed by U.S. -

Basis Technology Unicode-Compatible Library Spec Sheet: Character Encoding, Other Names Adobe-Standard-Encoding A

Basis Technology Unicode-Compatible Library Spec Sheet. Columns: Character Encoding, Other Names. Adobe-Standard-Encoding Adobe-Symbol-Encoding csHPPSMath Adobe-Zapf-Dingbats-Encoding csZapfDingbats Arabic ISO-8859-6, csISOLatinArabic, iso-ir-127, ECMA-114, ASMO-708 ASCII US-ASCII, ANSI_X3.4-1968, iso-ir-6, ANSI_X3.4-1986, ISO646-US, us, IBM367, csASCI big-endian ISO-10646-UCS-2, BigEndian, 68k, PowerPC, Mac, Macintosh Big5 csBig5, cn-big5, x-x-big5 Big5Plus Big5+, csBig5Plus BMP ISO-10646-UCS-2, BMPstring CCSID-1027 csCCSID1027, IBM1027 CCSID-1047 csCCSID1047, IBM1047 CCSID-290 csCCSID290, CCSID290, IBM290 CCSID-300 csCCSID300, CCSID300, IBM300 CCSID-930 csCCSID930, CCSID930, IBM930 CCSID-935 csCCSID935, CCSID935, IBM935 CCSID-937 csCCSID937, CCSID937, IBM937 CCSID-939 csCCSID939, CCSID939, IBM939 CCSID-942 csCCSID942, CCSID942, IBM942 ChineseAutoDetect csChineseAutoDetect: Candidate encodings: GB2312, Big5, GB18030, UTF32:UTF8, UCS2, UTF32 EUC-H, csCNS11643EUC, EUC-TW, TW-EUC, H-EUC, CNS-11643-1992, EUC-H-1992, csCNS11643-1992-EUC, EUC-TW-1992, CNS-11643 TW-EUC-1992, H-EUC-1992 CNS-11643-1986 EUC-H-1986, csCNS11643_1986_EUC, EUC-TW-1986, TW-EUC-1986, H-EUC-1986 CP10000 csCP10000, windows-10000 CP10001 csCP10001, windows-10001 CP10002 csCP10002, windows-10002 CP10003 csCP10003, windows-10003 CP10004 csCP10004, windows-10004 CP10005 csCP10005, windows-10005 CP10006 csCP10006, windows-10006 CP10007 csCP10007, windows-10007 CP10008 csCP10008, windows-10008 CP10010 csCP10010, windows-10010 CP10017 csCP10017, windows-10017 CP10029 csCP10029, windows-10029 CP10079 csCP10079, windows-10079

IBM Data Conversion Under Websphere MQ

IBM WebSphere MQ Data Conversion Under WebSphere MQ Table of Contents: Introduction; Acronyms and terms used in Data Conversion; The Pieces in the Data Conversion Puzzle; Coded Character Set Identifier (CCSID); Encoding; What Gets Converted, and How; The Message Descriptor; The User portion of the message; Common Procedures when doing the MQPUT; The message

JFP Reference Manual 5 : Standards, Environments, and Macros

JFP Reference Manual 5 : Standards, Environments, and Macros Sun Microsystems, Inc. 4150 Network Circle Santa Clara, CA 95054 U.S.A. Part No: 817–0648–10 December 2002 Copyright 2002 Sun Microsystems, Inc. 4150 Network Circle, Santa Clara, CA 95054 U.S.A. All rights reserved. This product or document is protected by copyright and distributed under licenses restricting its use, copying, distribution, and decompilation. No part of this product or document may be reproduced in any form by any means without prior written authorization of Sun and its licensors, if any. Third-party software, including font technology, is copyrighted and licensed from Sun suppliers. Parts of the product may be derived from Berkeley BSD systems, licensed from the University of California. UNIX is a registered trademark in the U.S. and other countries, exclusively licensed through X/Open Company, Ltd. Sun, Sun Microsystems, the Sun logo, docs.sun.com, AnswerBook, AnswerBook2, and Solaris are trademarks, registered trademarks, or service marks of Sun Microsystems, Inc. in the U.S. and other countries. All SPARC trademarks are used under license and are trademarks or registered trademarks of SPARC International, Inc. in the U.S. and other countries. Products bearing SPARC trademarks are based upon an architecture developed by Sun Microsystems, Inc. The OPEN LOOK and Sun™ Graphical User Interface was developed by Sun Microsystems, Inc. for its users and licensees. Sun acknowledges the pioneering efforts of Xerox in researching and developing the concept of visual or graphical user interfaces for the computer industry. Sun holds a non-exclusive license from Xerox to the Xerox Graphical User Interface, which license also covers Sun’s licensees who implement OPEN LOOK GUIs and otherwise comply with Sun’s written license agreements. -

Making the Most of Your Mainframe Data: from EBCDIC to ASCII

Syncsort | Making the Most of Your Mainframe Data: From EBCDIC to ASCII Introduction Providing access to mainframe data in systems across your organization is unfortunately not as simple as a one-to-one database duplication. Mainframe data represents one of the most complex data formats found in enterprise systems, and as a result, many teams struggle with data integration projects for this crucial asset and fail to extract the maximum value from their mainframe data. Organizations that implement a data integration strategy that encompasses mainframe data see significant benefits. To make the most of your mainframe data and realize its full potential, it is vital that you take into consideration the human and technical challenges that can occur. By avoiding common pitfalls, you’ll be on the road to unlocking your mainframe data and receiving what is most important to your organization – maximum business value. Mainframe Data is Still the Bedrock of your Business Despite the growth of next-wave technologies, mainframes will continue to play an essential role in many businesses. Mainframes have no peer when it comes to the volume of transactions they can handle. In fact, estimates are that 2.5 billion transactions are run per day, per mainframe across the world, and analysts estimate that more than 70% of Fortune 500 companies use mainframes at the core of their most valuable business systems. Mainframes contain the vital data that organizations run on, and in turn, they power initiatives that help move a business forward, such as machine learning, AI, and predictive analytics.

Onetouch 4.0 Sanned Documents

TO: MSPM Distribution FROM: J. H. Saltzer SUBJECT: BB.3.02 DATE: 02/05/68 This revision of BB.3.02 is because 1. The ASCII standard character set has been approved. References are altered accordingly. 2. The latest proposed ASCII standard card code has been revised slightly. Since the Multics standard card code matches the ASCII standard wherever convenient, BB.3.02 is changed. Codes for the grave accent, left and right brace, and tilde are affected. 3. One misprint has been corrected; the code for capital "S" is changed. MULTICS SYSTEM-PROGRAMMERS' MANUAL SECTION BB.3.02 PAGE 1 Published: 02/05/68; (Supersedes: BB.3.02; 03/30/67; BC.2.06; 11/10/66) Identification Multics standard card punch codes and Relation between ASCII and EBCDIC J. H. Saltzer Purpose This section defines standard card punch codes to be used in representing ASCII characters for use with Multics. Since the card punch codes are based on the punch codes defined for the IBM EBCDIC standard, automatically a correspondence between the EBCDIC and ASCII character sets is also defined. Note The Multics standard card punch codes described in this section are not identical to the currently proposed ASCII punched card code. The proposed ASCII standard code is not supported by any currently available punched card equipment; until such support exists it is not a practical standard for Multics work. The Multics standard card punch code described here is based on widely available card handling equipment used with IBM System/360 computers. The six characters for which the Multics standard card code differs with the ASCII card code are noted in the table below.

Windows NLS Considerations Version 2.1

Windows NLS Considerations version 2.1 Radoslav Rusinov Windows NLS Considerations Contents: 1. Introduction; 1.1. Windows and Code Pages; 1.2. CharacterSet; 1.3. Encoding Scheme; 1.4. Fonts; 1.5. So Why Are There Different Charactersets?; 1.6. What are the Difference Between 7 bit, 8 bit and Unicode Charactersets?; 2. NLS_LANG; 2.1. Setting the Character Set in NLS_LANG; 2.2. Where is the Character Conversion Done?

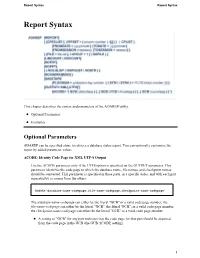

Report Syntax

Report Syntax This chapter describes the syntax and parameters of the ADAREP utility. Optional Parameters ADAREP can be specified alone to retrieve a database status report. You can optionally customize the report by adding parameter values. ACODE: Identify Code Page for XML UTF-8 Output Use the ACODE parameter only if the UTF8 option is specified on the OUTPUT parameter. This parameter identifies the code page to which the database name, file names, and checkpoint names should be converted. This parameter is specified in three parts, in a specific order, and with each part separated by a comma from the others: ACODE='database-name-codepage,file-name-codepage,checkpoint-name-codepage' The database-name-codepage can either be the literal "GCB" or a valid code page number; the file-name-codepage can either be the literal "GCB", the literal "FCB", or a valid code page number; the checkpoint-name-codepage can either be the literal "GCB" or a valid code page number: A setting of "GCB" for any part indicates that the code page for that part should be acquired from the code page in the GCB (the GCB ACODE setting). A setting of "FCB" for the file-name-codepage indicates that the code page for converting file names in the XML document to UTF-8 should be acquired from the code page setting in the FCB (the FCB ACODE setting). If code pages for all three parts of the ACODE parameter are being specified, they must be specified in the order shown above.

Iso/Iec Jtc 1/Sc 2/ Wg 2 N ___Ncits-L2-98

Unicode support in EBCDIC based systems ISO/IEC JTC 1/SC 2/ WG 2 N _______ NCITS-L2-98-257REV 1998-09-01 Title: EBCDIC-Friendly UCS Transformation Format -- UTF-8-EBCDIC Source: US, Unicode Consortium and V.S. UMAmaheswaran, IBM National Language Technical Centre, Toronto Status: For information and comment Distribution: WG2 and UTC Abstract: This paper defines the EBCDIC-Friendly Universal Multiple-Octet Coded Character Set (UCS) Transformation Format (TF) -- UTF-8-EBCDIC. This transform converts data encoded using UCS (as defined in ISO/IEC 10646 and the Unicode Standard defined by the Unicode Consortium) to and from an encoding form compatible with IBM's Extended Binary Coded Decimal Interchange Code (EBCDIC). This revised document incorporates the suggestions made by Unicode Technical Committee Meeting No. 77, on 31 July 98, and several editorial changes. It is also being presented at the Internationalization and Unicode Conference no. 13, in San Jose, on 11 September 98. It has been accepted by the UTC as the basis for a Unicode Technical Report and is being distributed to SC 2/WG 2 for information and comments at this time. 1 Background UCS Transformation Format UTF-8 (defined in Amendment No. 2 to ISO/IEC 10646-1) is a transform for UCS data that preserves the subset of 128 ISO-646-IRV (ASCII) characters of UCS as single octets in the range X'00' to X'7F', with all the remaining UCS values converted to multiple-octet sequences containing only octets greater than X'7F'.
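The UTF-8 property described in the last excerpt can be checked with a few lines of Python. This is only an illustrative sketch using Python's built-in `utf-8` codec; the helper names (`utf8_octets`, `format_octets`, `is_ascii_preserved`, `all_octets_above_7f`) are made up for this example and do not come from the paper, and the sketch covers plain UTF-8 only, not the UTF-8-EBCDIC transform the paper goes on to define.

```python
def utf8_octets(text: str) -> bytes:
    """Encode text with Python's standard UTF-8 codec."""
    return text.encode("utf-8")


def format_octets(octets: bytes) -> str:
    """Render octets in the X'hh' notation used in the excerpt."""
    return " ".join(f"X'{o:02X}'" for o in octets)


def is_ascii_preserved(ch: str) -> bool:
    """True if ch encodes to exactly one octet in X'00'..X'7F'."""
    octets = utf8_octets(ch)
    return len(octets) == 1 and octets[0] <= 0x7F


def all_octets_above_7f(ch: str) -> bool:
    """True if every octet of the encoded form is greater than X'7F'."""
    return all(o > 0x7F for o in utf8_octets(ch))


if __name__ == "__main__":
    # Two ASCII characters, then two non-ASCII characters (e-acute, euro sign).
    for ch in ["A", "~", "\u00e9", "\u20ac"]:
        print(repr(ch), format_octets(utf8_octets(ch)))
```

Characters in the ASCII range come out as one octet each, while the two non-ASCII samples come out as multi-octet sequences in which every octet is above X'7F'.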