Computer Codes for Korean Sounds: K-SAMPA

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Sample, Not for Administration Or Resale

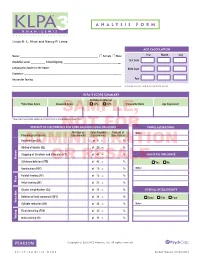

ANALYSIS FORM Linda M. L. Khan and Nancy P. Lewis Age Calculation Name: ______________________________________________________ u Female u Male Year Month Day Test Date Grade/Ed. Level: __________ School/Agency: __________________________________________ Language(s) Spoken in the Home: ___________________________________________________ Birth Date Examiner: ____________________________________________________________________ Reason for Testing: ______________________________________________________________ Age ____________________________________________________________________________ Reminder: Do not round up to next month or year. KLPA–3 Score Summary Confidence Interval *Total Raw Score Standard Score 90% 95% Percentile Rank Age Equivalent SAMPLE,– * Raw score equals total number of occurrences of scored phonological processes. Percent of OccurrenceNOT for Core Phonological ProcessesFOR Vowel Alterations Number of Total Possible Percent of Notes: Phonological Process Occurrences Occurrences Occurrences DeaffricationADMINISTRATION (DF) of 8 = % Gliding of liquids (GL) of 20 = % Stopping of fricatives and affricates (ST) of 48 = % Dialectal Influence Manner OR RESALE Stridency deletion (STR) of 42 = % Yes No Vocalization (VOC) of 15 = % Notes: Palatal fronting (PF) of 12 = % Place Velar fronting (VF) of 23 = % Cluster simplification (CS) of 23 = % Overall Intelligibility Deletion of final consonant (DFC) of 36 = % Good Fair Poor Reduction Syllable reduction (SR) of 25 = % Notes: Final devoicing (FDV) of 35 = % Voicing Initial voicing -

Lecture 5 Sound Change

An articulatory theory of sound change An articulatory theory of sound change Hypothesis: Most common initial motivation for sound change is the automation of production. Tokens reduced online, are perceived as reduced and represented in the exemplar cluster as reduced. Therefore we expect sound changes to reflect a decrease in gestural magnitude and an increase in gestural overlap. What are some ways to test the articulatory model? The theory makes predictions about what is a possible sound change. These predictions could be tested on a cross-linguistic database. Sound changes that take place in the languages of the world are very similar (Blevins 2004, Bateman 2000, Hajek 1997, Greenberg et al. 1978). We should consider both common and rare changes and try to explain both. Common and rare changes might have different characteristics. Among the properties we could look for are types of phonetic motivation, types of lexical diffusion, gradualness, conditioning environment and resulting segments. Common vs. rare sound change? We need a database that allows us to test hypotheses concerning what types of changes are common and what types are not. A database of sound changes? Most sound changes have occurred in undocumented periods so that we have no record of them. Even in cases with written records, the phonetic interpretation may be unclear. Only a small number of languages have historic records. So any sample of known sound changes would be biased towards those languages. A database of sound changes? Sound changes are known only for some languages of the world: Languages with written histories. Sound changes can be reconstructed by comparing related languages. -

LT3212 Phonetics Assignment 4 Mavis, Wong Chak Yin

LT3212 Phonetics Assignment 4 Mavis, Wong Chak Yin Essay Title: The sound system of Japanese This essay aims to introduce the sound system of Japanese, including the inventories of consonants, vowels, and diphthongs. The phonological variations of the sound segments in different phonetic environments are also included. For the illustration, word examples are given and they are presented in the following format: [IPA] (Romaji: “meaning”). Consonants In Japanese, there are 14 core consonants, and some of them have a lot of allophonic variations. The various types of consonants classified with respect to their manner of articulation are presented as follows. Stop Japanese has six oral stops or plosives, /p b t d k g/, which are classified into three place categories, bilabial, alveolar, and velar, as listed below. In each place category, there is a pair of plosives with the contrast in voicing. /p/ = a voiceless bilabial plosive [p]: [ippai] (ippai: “A cup of”) /b/ = a voiced bilabial plosive [b]: [baɴ] (ban: “Night”) /t/ = a voiceless alveolar plosive [t]: [oto̞ ːto̞ ] (ototo: “Brother”) /d/ = a voiced alveolar plosive [d]: [to̞ mo̞ datɕi] (tomodachi: “Friend”) /k/ = a voiceless velar plosive [k]: [kaiɰa] (kaiwa: “Conversation”) /g/ = a voiced velar plosive [g]: [ɡakɯβsai] (gakusai: “Student”) Phonetically, Japanese also has a glottal stop [ʔ] which is commonly produced to separate the neighboring vowels occurring in different syllables. This phonological phenomenon is known as ‘glottal stop insertion’. The glottal stop may be realized as a pause, which is used to indicate the beginning or the end of an utterance. For instance, the word “Japanese money” is actually pronounced as [ʔe̞ ɴ], instead of [je̞ ɴ], and the pronunciation of “¥15” is [dʑɯβːɡo̞ ʔe̞ ɴ]. -

Ling 230/503: Articulatory Phonetics and Transcription English Vowels

Ling 230/503: Articulatory Phonetics and Transcription Broad vs. narrow transcription. A narrow transcription is one in which the transcriber records much phonetic detail without attention to the way in which the sounds of the language form a system. A broad transcription omits those details of a narrow transcription which the transcriber feels are not worth recording. Normally these details will be aspects of the speech event which are: (1) predictable or (2) would not differentiate two token utterances of the same type in the judgment of speakers or (3) are presumed not to figure in the systematic phonology of the language. IPA vs. American transcription There are two commonly used systems of phonetic transcription, the International Phonetics Association or IPA system and the American system. In many cases these systems overlap, but in certain cases there are important distinctions. Students need to learn both systems and have to be flexible about the use of symbols. English Vowels Short vowels /ɪ ɛ æ ʊ ʌ ɝ/ ‘pit’ pɪt ‘put’ pʊt ‘pet’ pɛt ‘putt’ pʌt ‘pat’ pæt ‘pert’ pɝt (or pr̩t) Long vowels /i(ː), u(ː), ɑ(ː), ɔ(ː)/ ‘beat’ biːt (or bit) ‘boot’ buːt (or but) ‘(ro)bot’ bɑːt (or bɑt) ‘bought’ bɔːt (or bɔt) Diphthongs /eɪ, aɪ, aʊ, oʊ, ɔɪ, ju(ː)/ ‘bait’ beɪt ‘boat’ boʊt ‘bite’ bɑɪt (or baɪt) ‘bout’ bɑʊt (or baʊt) ‘Boyd’ bɔɪd (or boɪd) ‘cute’ kjuːt (or kjut) The property of length, denoted by [ː], can be predicted based on the quality of the vowel. For this reason it is quite common to omit the length mark [ː]. -

Part 1: Introduction to The

PREVIEW OF THE IPA HANDBOOK Handbook of the International Phonetic Association: A guide to the use of the International Phonetic Alphabet PARTI Introduction to the IPA 1. What is the International Phonetic Alphabet? The aim of the International Phonetic Association is to promote the scientific study of phonetics and the various practical applications of that science. For both these it is necessary to have a consistent way of representing the sounds of language in written form. From its foundation in 1886 the Association has been concerned to develop a system of notation which would be convenient to use, but comprehensive enough to cope with the wide variety of sounds found in the languages of the world; and to encourage the use of thjs notation as widely as possible among those concerned with language. The system is generally known as the International Phonetic Alphabet. Both the Association and its Alphabet are widely referred to by the abbreviation IPA, but here 'IPA' will be used only for the Alphabet. The IPA is based on the Roman alphabet, which has the advantage of being widely familiar, but also includes letters and additional symbols from a variety of other sources. These additions are necessary because the variety of sounds in languages is much greater than the number of letters in the Roman alphabet. The use of sequences of phonetic symbols to represent speech is known as transcription. The IPA can be used for many different purposes. For instance, it can be used as a way to show pronunciation in a dictionary, to record a language in linguistic fieldwork, to form the basis of a writing system for a language, or to annotate acoustic and other displays in the analysis of speech. -

Issues in the Distribution of the Glottal Stop

Theoretical Issues in the Representation of the Glottal Stop in Blackfoot* Tyler Peterson University of British Columbia 1.0 Introduction This working paper examines the phonemic and phonetic status and distribution of the glottal stop in Blackfoot.1 Observe the phonemic distribution (1), four phonological operations (2), and three surface realizations (3) of the glottal stop: (1) a. otsi ‘to swim’ c. otsi/ ‘to splash with water’ b. o/tsi ‘to take’ (2) a. Metathesis: V/VC ® VV/C nitáó/mai/takiwa nit-á/-omai/takiwa 1-INCHOAT-believe ‘now I believe’ b. Metathesis ® Deletion: V/VùC ® VVù/C ® VVùC kátaookaawaatsi káta/-ookaa-waatsi INTERROG-sponsor.sundance-3s.NONAFFIRM ‘Did she sponsor a sundance?’ (Frantz, 1997: 154) c. Metathesis ® Degemination: V/V/C ® VV//C ® VV/C áó/tooyiniki á/-o/too-yiniki INCHOAT-arrive(AI)-1s/2s ‘when I/you arrive’ d. Metathesis ® Deletion ® V-lengthening: V/VC ® VV/C ® VVùC kátaoottakiwaatsi káta/-ottaki-waatsi INTEROG-bartender-3s.NONAFFIRM ‘Is he a bartender?’ (Frantz, 1997) (3) Surface realizations: [V/C] ~ [VV0C] ~ [VùC] aikai/ni ‘He dies’ a. [aIkaI/ni] b. [aIkaII0ni] c. [aIkajni] The glottal stop appears to have a unique status within the Blackfoot consonant inventory. The examples in (1) (and §2.1 below) suggest that it appears as a fully contrastive phoneme in the language. However, the glottal stop is put through variety of phonological processes (metathesis, syncope and degemination) that no other consonant in the language is subject to. Also, it has a variety of surfaces realizations in the form of glottalization on an adjacent vowel (cf. -

How to Edit IPA 1 How to Use SAMPA for Editing IPA 2 How to Use X

version July 19 How to edit IPA When you want to enter the International Phonetic Association (IPA) character set with a computer keyboard, you need to know how to enter each IPA character with a sequence of keyboard strokes. This document describes a number of techniques. The complete SAMPA and RTR mapping can be found in the attached html documents. The main html document (ipa96.html) comes in a pdf-version (ipa96.pdf) too. 1 How to use SAMPA for editing IPA The Speech Assessment Method (SAM) Phonetic Alphabet has been developed by John Wells (http://www.phon.ucl.ac.uk/home/sampa). The goal was to map 176 IPA characters into the range of 7-bit ASCII, which is a set of 96 characters. The principle is to represent a single IPA character by a single ASCII character. This table is an example for five vowels: Description IPA SAMPA script a ɑ A ae ligature æ { turned a ɐ 6 epsilon ɛ E schwa ə @ A visual represenation of a keyboard shows the mapping on screen. The source for the SAMPA mapping used is "Handbook of multimodal an spoken dialogue systems", D Gibbon, Kluwer Academic Publishers 2000. 2 How to use X-SAMPA for editing IPA The multi-character extension to SAMPA has also been developed by John Wells (http://www.phon.ucl.ac.uk/home/sampa/x-sampa.htm). The basic principle used is to form chains of ASCII characters, that represent a single IPA character, e.g. This table lists some examples Description IPA X-SAMPA beta β B small capital B ʙ B\ lower-case B b b lower-case P p p Phi ɸ p\ The X-SAMPA mapping is in preparation and will be included in the next release. -

Wagner 1 Dutch Fricatives Dutch Is an Indo-European Language of The

Wagner 1 Dutch Fricatives Dutch is an Indo-European language of the West Germanic branch, closely related to Frisian and English. It is spoken by about 22 million people in the Netherlands, Belgium, Aruba, Suriname, and the Netherlands Antilles, nations in which the Dutch language also has an official status. Additionally, Dutch is still spoken in parts of Indonesia as a result of colonial rule, but it has been replaced by Afrikaans in South Africa. In the Netherlands, Algemeen Nederlands is the standard form of the language taught in schools and used by the government. It is generally spoken in the western part of the country, in the provinces of Nord and Zuid Holland. The Netherlands is divided into northern and southern regions by the Rijn river and these regions correspond to the areas where the main dialects of Dutch are distinguished from each other. My consultant, Annemarie Toebosch, is from the village of Bemmel in the Netherlands. Her dialect is called Betuws Dutch because Bemmel is located in the Betuwe region between two branches of the Rijn river. Toebosch lived in the Netherlands until the age of 25 when she relocated to the United States for graduate school. Today she is a professor of linguistics at the University of Michigan-Flint and speaks only English in her professional career. For the past 18 months she has been speaking Dutch more at home with her son, but in the 9 years prior to that, she only spoke Dutch once or twice a week with her parents and other relatives who remain in the Netherlands. -

Towards Zero-Shot Learning for Automatic Phonemic Transcription

The Thirty-Fourth AAAI Conference on Artificial Intelligence (AAAI-20) Towards Zero-Shot Learning for Automatic Phonemic Transcription Xinjian Li, Siddharth Dalmia, David R. Mortensen, Juncheng Li, Alan W Black, Florian Metze Language Technologies Institute, School of Computer Science Carnegie Mellon University {xinjianl, sdalmia, dmortens, junchenl, awb, fmetze}@cs.cmu.edu Abstract Hermann and Goldwater 2018), which typically uses an un- supervised technique to learn representations which can be Automatic phonemic transcription tools are useful for low- resource language documentation. However, due to the lack used towards speech processing tasks. of training sets, only a tiny fraction of languages have phone- However, those unsupervised approaches could not gener- mic transcription tools. Fortunately, multilingual acoustic ate phonemes directly and there has been few works study- modeling provides a solution given limited audio training ing zero-shot learning for unseen phonemes transcription, data. A more challenging problem is to build phonemic tran- which consist of learning an acoustic model without any au- scribers for languages with zero training data. The difficulty dio data or text data for a given target language and unseen of this task is that phoneme inventories often differ between phonemes. In this work, we aim to solve this problem to the training languages and the target language, making it in- transcribe unseen phonemes for unseen languages without feasible to recognize unseen phonemes. In this work, we ad- dress this problem by adopting the idea of zero-shot learning. considering any target data, audio or text. Our model is able to recognize unseen phonemes in the tar- The prediction of unseen objects has been studied for a get language without any training data. -

Palatals in Spanish and French: an Analysis Rachael Gray

Florida State University Libraries Honors Theses The Division of Undergraduate Studies 2012 Palatals in Spanish and French: An Analysis Rachael Gray Follow this and additional works at the FSU Digital Library. For more information, please contact [email protected] Abstract (Palatal, Spanish, French) This thesis deals with palatals from Latin into Spanish and French. Specifically, it focuses on the diachronic history of each language with a focus on palatals. I also look at studies that have been conducted concerning palatals, and present a synchronic analysis of palatals in modern day Spanish and French. The final section of this paper focuses on my research design in second language acquisition of palatals for native French speakers learning Spanish. 2 THE FLORIDA STATE UNIVERSITY COLLEGE OF ARTS AND SCIENCES PALATALS IN SPANISH AND FRENCH: AN ANALYSIS BY: RACHAEL GRAY A Thesis submitted to the Department of Modern Languages in partial fulfillment of the requirements for graduation with Honors in the Major Degree Awarded: 3 Spring, 2012 The members of the Defense Committee approve the thesis of Rachael Gray defended on March 21, 2012 _____________________________________ Professor Carolina Gonzaléz Thesis Director _______________________________________ Professor Gretchen Sunderman Committee Member _______________________________________ Professor Eric Coleman Outside Committee Member 4 Contents Acknowledgements ......................................................................................................................... 5 0. -

Building a Universal Phonetic Model for Zero-Resource Languages

Building a Universal Phonetic Model for Zero-Resource Languages Paul Moore MInf Project (Part 2) Interim Report Master of Informatics School of Informatics University of Edinburgh 2020 3 Abstract Being able to predict phones from speech is a challenge in and of itself, but what about unseen phones from different languages? In this project, work was done towards building precisely this kind of universal phonetic model. Using the GlobalPhone language corpus, phones’ articulatory features, a recurrent neu- ral network, open-source libraries, and an innovative prediction system, a model was created to predict phones based on their features alone. The results show promise, especially for using these models on languages within the same family. 4 Acknowledgements Once again, a huge thank you to Steve Renals, my supervisor, for all his assistance. I greatly appreciated his practical advice and reasoning when I got stuck, or things seemed overwhelming, and I’m very thankful that he endorsed this project. I’m immensely grateful for the support my family and friends have provided in the good times and bad throughout my studies at university. A big shout-out to my flatmates Hamish, Mark, Stephen and Iain for the fun and laugh- ter they contributed this year. I’m especially grateful to Hamish for being around dur- ing the isolation from Coronavirus and for helping me out in so many practical ways when I needed time to work on this project. Lastly, I wish to thank Jesus Christ, my Saviour and my Lord, who keeps all these things in their proper perspective, and gives me strength each day. -

A Case Study of Norwegian Clusters

UC Davis UC Davis Previously Published Works Title Morphological derived-environment effects in gestural coordination: A case study of Norwegian clusters Permalink https://escholarship.org/uc/item/5mn3w7gz Journal Lingua, 117 ISSN 0024-3841 Author Bradley, Travis G. Publication Date 2007-06-01 Peer reviewed eScholarship.org Powered by the California Digital Library University of California Bradley, Travis G. 2007. Morphological Derived-Environment Effects in Gestural Coordination: A Case Study of Norwegian Clusters. Lingua 117.6:950-985. Morphological derived-environment effects in gestural coordination: a case study of Norwegian clusters Travis G. Bradley* Department of Spanish and Classics, University of California, 705 Sproul Hall, One Shields Avenue, Davis, CA 95616, USA Abstract This paper examines morphophonological alternations involving apicoalveolar tap- consonant clusters in Urban East Norwegian from the framework of gestural Optimality Theory. Articulatory Phonology provides an insightful explanation of patterns of vowel intrusion, coalescence, and rhotic deletion in terms of the temporal coordination of consonantal gestures, which interacts with both prosodic and morphological structure. An alignment-based account of derived-environment effects is proposed in which complete overlap in rhotic-consonant clusters is blocked within morphemes but not across morpheme or word boundaries. Alignment constraints on gestural coordination also play a role in phonologically conditioned allomorphy. The gestural analysis is contrasted with alternative Optimality-theoretic accounts. Furthermore, it is argued that models of the phonetics-phonology interface which view timing as a low-level detail of phonetic implementation incorrectly predict that input morphological structure should have no effect on gestural coordination. The patterning of rhotic-consonant clusters in Norwegian is consistent with a model that includes gestural representations and constraints directly in the phonological grammar, where underlying morphological structure is still visible.