Intelligent Design the Relationship of Economic Theory to Experiments

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Ono Mato Pee 145

789493 148215 9 789493 148215 9 789493 148215 148215 9 789493 148215 789493 9 9 789493 148215 9 148215 789493 148215 789493 9 148215 9 789493 148215 9 789493 148215 148215 789493 9 789493 148215 9 9 789493 148215 9 789493 148215 9 789493 148215 9 789493 148215 9 789493 148215 9 789493 148215 9 789493 148215 9 789493 148215 9 789493 148215 9 789493 148215 9 789493 148215 9 789493 148215 9 789493 148215 9 789493 148215 99 789493789493 148215148215 9 789493 148215 99 789493789493 148215148215 9 789493 148215 9 789493 148215 9 789493 148215 145 PEE MATO ONO Graphic Design Systems, and the Systems of Graphic Design Francisco Laranjo Bibliography M.; Drenttel, W. & Heller, S. (2012) Within graphic design, the concept of systems is profoundly Escobar, A. (2018) Designs for Looking Closer 4: Critical Writings rooted in form. When a term such as system is invoked, it is the Pluriverse (New Ecologies for on Graphic Design. Allworth Press, normally related to macro and micro-typography, involving pp. 199–207. the Twenty-First Century). Duke book design and typesetting, but also addressing type design University Press. Manzini, E. (2015) Design, When or parametric typefaces. Branding and signage are two other Jenner, K. (2016) Chill Kendall Jenner Everybody Designs: An Introduction Didn’t Think People Would Care to Design for Social Innovation. domains which nearly claim exclusivity of the use of the That She Deleted Her Instagram. Massachusetts: MIT Press. word ‘systems’ in relation to graphic design. A key example of In: Vogue. Available at: https://www. Meadows, D. (2008) Thinking in this is the influential bookGrid Systems in Graphic Design vogue.com/article/kendall-jenner- Systems. -

Mike Kelley's Studio

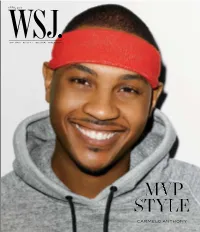

No PMS Flood Varnish Spine: 3/16” (.1875”) 0413_WSJ_Cover_02.indd 1 2/4/13 1:31 PM Reine de Naples Collection in every woman is a queen BREGUET BOUTIQUES – NEW YORK FIFTH AVENUE 646 692-6469 – NEW YORK MADISON AVENUE 212 288-4014 BEVERLY HILLS 310 860-9911 – BAL HARBOUR 305 866-1061 – LAS VEGAS 702 733-7435 – TOLL FREE 877-891-1272 – WWW.BREGUET.COM QUEEN1-WSJ_501x292.indd 1-2 01.02.13 16:56 BREGUET_205640303.indd 2 2/1/13 1:05 PM BREGUET_205640303.indd 3 2/1/13 1:06 PM a sporting life! 1-800-441-4488 Hermes.com 03_501,6x292,1_WSJMag_Avril_US.indd 1 04/02/13 16:00 FOR PRIVATE APPOINTMENTS AND MADE TO MEASURE INQUIRIES: 888.475.7674 R ALPHLAUREN.COM NEW YORK BEVERLY HILLS DALLAS CHICAGO PALM BEACH BAL HARBOUR WASHINGTON, DC BOSTON 1.855.44.ZEGNA | Shop at zegna.com Passion for Details Available at Macy’s and macys.com the new intense fragrance visit Armanibeauty.com SHIONS INC. Phone +1 212 940 0600 FA BOSS 0510/S HUGO BOSS shop online hugoboss.com www.omegawatches.com We imagined an 18K red gold diving scale so perfectly bonded with a ceramic watch bezel that it would be absolutely smooth to the touch. And then we created it. The result is as aesthetically pleasing as it is innovative. You would expect nothing less from OMEGA. Discover more about Ceragold technology on www.omegawatches.com/ceragold New York · London · Paris · Zurich · Geneva · Munich · Rome · Moscow · Beijing · Shanghai · Hong Kong · Tokyo · Singapore 26 Editor’s LEttEr 28 MasthEad 30 Contributors 32 on thE CovEr slam dunk Fashion is another way Carmelo Anthony is scoring this season. -

Intelligent Design Creationism and the Constitution

View metadata, citation and similar papers at core.ac.uk brought to you by CORE provided by Washington University St. Louis: Open Scholarship Washington University Law Review Volume 83 Issue 1 2005 Is It Science Yet?: Intelligent Design Creationism and the Constitution Matthew J. Brauer Princeton University Barbara Forrest Southeastern Louisiana University Steven G. Gey Florida State University Follow this and additional works at: https://openscholarship.wustl.edu/law_lawreview Part of the Constitutional Law Commons, Education Law Commons, First Amendment Commons, Religion Law Commons, and the Science and Technology Law Commons Recommended Citation Matthew J. Brauer, Barbara Forrest, and Steven G. Gey, Is It Science Yet?: Intelligent Design Creationism and the Constitution, 83 WASH. U. L. Q. 1 (2005). Available at: https://openscholarship.wustl.edu/law_lawreview/vol83/iss1/1 This Article is brought to you for free and open access by the Law School at Washington University Open Scholarship. It has been accepted for inclusion in Washington University Law Review by an authorized administrator of Washington University Open Scholarship. For more information, please contact [email protected]. Washington University Law Quarterly VOLUME 83 NUMBER 1 2005 IS IT SCIENCE YET?: INTELLIGENT DESIGN CREATIONISM AND THE CONSTITUTION MATTHEW J. BRAUER BARBARA FORREST STEVEN G. GEY* TABLE OF CONTENTS ABSTRACT ................................................................................................... 3 INTRODUCTION.................................................................................................. -

The Design Argument

The design argument The different versions of the cosmological argument we discussed over the last few weeks were arguments for the existence of God based on extremely abstract and general features of the universe, such as the fact that some things come into existence, and that there are some contingent things. The argument we’ll be discussing today is not like this. The basic idea of the argument is that if we pay close attention to the details of the universe in which we live, we’ll be able to see that that universe must have been created by an intelligent designer. This design argument, or, as its sometimes called, the teleological argument, has probably been the most influential argument for the existence of God throughout most of history. You will by now not be surprised that a version of the teleological argument can be found in the writings of Thomas Aquinas. You will by now not be surprised that a version of the teleological argument can be found in the writings of Thomas Aquinas. Aquinas is noting that things we observe in nature, like plants and animals, typically act in ways which are advantageous to themselves. Think, for example, of the way that many plants grow in the direction of light. Clearly, as Aquinas says, plants don’t do this because they know where the light is; as he says, they “lack knowledge.” But then how do they manage this? What does explain the fact that plants grow in the direction of light, if not knowledge? Aquinas’ answer to this question is that they must be “directed to their end” -- i.e., designed to be such as to grow toward the light -- by God. -

Intelligent Design Enables Architectural Evolution

Intelligent Design Enables Architectural Evolution Ali Ghodsi Teemu Koponen Barath Raghavan KTH / UC Berkeley Nicira Networks ICSI Scott Shenker Ankit Singla James Wilcox ICSI / UC Berkeley UIUC Williams College ABSTRACT enables the research community to investigate how to address What does it take for an Internet architecture to be evolvable? these problems architecturally, even if the changes themselves Despite our ongoing frustration with today’s rigid IP-based cannot be incrementally deployed. architecture and the research community’s extensive research These clean slate efforts have increased our intellectual on clean-slate designs, it remains unclear how to best design understanding of Internet architecture — particularly in for architectural evolvability. We argue here that evolvability the areas of security, reliability, route selection, and mo- is far from mysterious. In fact, we claim that only a few bility/multihoming — and there are now many clean-slate “intelligent” design changes are needed to support evolvability. proposals that offer significantly better functionality than While these changes are definitely nonincremental (i.e., our current Internet. However, in recent years these clean cannot be deployed in an incremental fashion starting with slate efforts moved beyond adding functionality and have today’s architecture), they follow directly from the well- begun addressing the reason we had to turn to clean slate known engineering principles of indirection, modularity, and design in the first place: architectural rigidity. That is, the extensibility. research community is now devoting significant attention to the design of architectures that can support architectural Categories and Subject Descriptors evolution, so that future architectural changes could be made incrementally rather than through a series of clean-slate C.2.1 [Computer-Communication Networks]: Network designs. -

Devious Distortions- Durston Or Myers

Devious Distortions: Durston or Myers? or Estimating the Probability of Functional Biological Proteins Kirk Durston, Ph.D. Biophysics A few days after his 2009 debate with me on the existence of God, well-known atheist, PZ Myers, posted a blog with the title, ‘Durston’s Devious Distortions’, in which he attempted to address my claim that amino acid sequences coding for biological protein families have an astonishingly low probability … so low that they definitively falsify the hypothesis that biological proteins could have been assembled via an evolutionary process. For his resource material, Myers referred to an online video of a lecture I gave at the University of Edinburgh about a year earlier. That video was for a general, interdisciplinary audience and did not actually discuss how the probabilities of biological proteins are calculated. Given this absence of information, Myers made some assumptions as to how I calculate protein probabilities. It appears that he did not check his own numbers by plugging them back into the relevant equation to see if they gave the results I had obtained. Those who tried to use Myers’ numbers found that they were incorrect, not yielding anything close to the results for SecY or RecA that I had published in the literature and shown in the video. Unfortunately, some atheists who follow Myers, unquestioningly accepted his explanation without checking his numbers, thus spreading Myers’ confusion. In reality, the probabilities of biological proteins were calculated using a two-step procedure. The first step was to calculate the functional complexity required to code for a particular protein using a method I had published in 2007.[1] That paper was referenced in the video in a slide titled, ‘Case 3: Average protein’ so that inquiring minds could obtain further details. -

A Multi-Agent Intelligent Design System Integrating Manufacturing and Shop-Floor Control

From: Proceedings of the First International Conference on Multiagent Systems. Copyright © 1995, AAAI (www.aaai.org). All rights reserved. A Multi-AgentIntelligent Design System Integrating ManufacturingAnd Shop-Floor Control SivaramBsll ubramanianand Douglas H. Norrie Division of ManufaclnringEngineering Depamnentof MechanicalEngineering University of Calgely Calgary, AB, C.=nadn T2NIN4 Abm’aet Jacksonet al. 1993], serviceability [’Makinnet ul. 1989], and reo/d~ility [Markino et al. 1994] early into the A multi-agnnt architectnre has been developedfor the design phase. These aspects of the product are integration of design, riming, and shop floor considered conjointly with the design functions. This control activities. This is based on cooperating approach can reduce the number of redesigns, thus intellismt entities in the sub-domain~which make shorteningproduct developmentlead times. In addition, decisions through negotiation, using domain-specific better products with moretml~nced overall performance knowledgeboth distributed amongthe entities and be produced, because of early consideration of all ao~ssible to them. Using this archRecmral relevant aspects of the product.life cycle. framework, an Agent Based Coty~’rent Design Achieving good manufactarab~ty and minimum Environmentsystem has been developed for featare- productioncost is often consideredto be the mostcritical based design, manufacturability evaluation, and factors when implementing concurrent engineering dypnmlc process planning. This is a multi-agent design. This brings the downstreamconcerns up front prototype system involving the following types of and helps avoid costly redesign iterations at the agent: design agent; geometric interface agent; manufacturing stage. Using Design for feature agent; part agem;machine agent; tool agent; ManufLetumbilitytools, a designer can significantly environment manager; and shop floor manager. -

Intelligent Design: the Biochemical Challenge to Darwinian Evolution?

Christian Spirituality and Science Issues in the Contemporary World Volume 2 Issue 1 The Design Argument Article 2 2001 Intelligent Design: The Biochemical Challenge to Darwinian Evolution? Ewan Ward Avondale College Marty Hancock Avondale College Follow this and additional works at: https://research.avondale.edu.au/css Recommended Citation Ward, E., & Hancock, M. (2001). Intelligent design: The biochemical challenge to Darwinian evolution? Christian Spirituality and Science, 2(1), 7-23. Retrieved from https://research.avondale.edu.au/css/vol2/ iss1/2 This Article is brought to you for free and open access by the Avondale Centre for Interdisciplinary Studies in Science at ResearchOnline@Avondale. It has been accepted for inclusion in Christian Spirituality and Science by an authorized editor of ResearchOnline@Avondale. For more information, please contact [email protected]. Ward and Hancock: Intelligent Design Intelligent Design: The Biochemical Challenge to Darwinian Evolution? Ewan Ward and Marty Hancock Faculty of Science and Mathematics Avondale College “For since the creation of the world God’s invisible qualities – his eternal power and divine nature – have been clearly seen, being understood from what has been made, so that men are without excuse.” Romans 1:20 (NIV) ABSTRACT The idea that nature shows evidence of intelligent design has been argued by theologians and scientists for centuries. The most famous of the design argu- ments is Paley’s watchmaker illustration from his writings of the early 19th century. Interest in the concept of design in nature has recently had a resurgence and is often termed the Intelligent Design movement. Significant is the work of Michael Behe on biochemical systems. -

Pdf/22/1/89/1714333/Desi.2006.22.1.89.Pdf by Guest on 29 September 2021 Gems of Wit Or Wisdom to Ponder

Jan Conradi letters, words, and text. He raises questions but doesn’t always provide answers. He expects his readers to make Typography: Formation and Transformation by Willi Kunz connections and discover possibilities that resonate, (Verlag Niggli AG, Zurich, 2003), ISBN 3721204956, sometimes with just a single example as a guidepost. 160 pages, illustrated, $56.00, hardcover. Where is formation? Where transformation? Kunz (Available in the US from Willi Kunz Books.) encourages us to distill typography to its essence then to build, shift, and layer, forming abstract symbols that Too many design volumes on bookstore shelves seem transform into logical communication. Exercises on the superficial and formulaic. However the formula must creative potential embedded in form and counterform work: simply gather short snippets of quotes from would be eye opening to a novice. How might a letter- currently hot professionals, combine the writing with form logically evolve into a symbol or logo? Kunz shows colorful images, then bind it all under a title promising how logical steps transform a Bodoni “a” into a symbol quick and easy answers to various design shortcomings. alluding to a sunlit pathway. Examples of posters, publi- These books offer plenty to look at (or mimic) and a few cations and experimental studies help clarify ideas for Downloaded from http://direct.mit.edu/desi/article-pdf/22/1/89/1714333/desi.2006.22.1.89.pdf by guest on 29 September 2021 gems of wit or wisdom to ponder. But then what? transforming space, proportion and composition. Final Then reach for a reference that provides depth, sub- designs are often accompanied by superimposed struc- stance and timelessness. -

Intelligent Design and Its Place in the Classroom by Victor Trinh & Micky Mezgebu

Intelligent Design and its Place in the Classroom By Victor Trinh & Micky Mezgebu ETHICAL QUESTION CONCLUSION While evolution may be seen as crucial to the science curriculum, it Should intelligent design be taught alongside evolution in would be for the best not to have intelligent design replace, or even be public schools? taught alongside, evolution. First, ID is not substantiated by scientific evidence and likewise its only evidence is taken from lack thereof (National Academy of Sciences, Institute of Medicine 43). As fundamental evolution is to a student's understanding of science and biology, teaching ideas of intelligent design and creationism would more likely confuse students rather PROS than give them a broader perspective in learning. Evolution is widely Fig. 1 & 2. Well-known image of evolution (left) and Michaelangelo’s Intelligent design declares that the sheer complexity accepted and substantiated by evidence, while intelligent design stands in Creation of Adam (right), both explaining the origin of man. of nature is evidence of a Designer, the creator of all life open contradiction of it. As philosopher Theodosius says, "Nothing in on earth. Professor Michael Behe defines this through biology makes sense except in the light of evolution" (Godfrey 27). the phrase “irreducible complexity” (Ayala144). Students that are taught evolution would have a better grasp of science and ABSTRACT Advocates of ID believe that it should be taught biology, while possibly forming a closer relationship with their environment. alongside evolution because students would be given a Thus, ID should not be taught in public schools because it is not a genuine Since the dawn of time, there has been controversy surrounding the question of form of science. -

Evolution, Creationism, and Intelligent Design Kent Greenwalt

Notre Dame Journal of Law, Ethics & Public Policy Volume 17 Article 2 Issue 2 Symposium on Religion in the Public Square 1-1-2012 Establishing Religious Ideas: Evolution, Creationism, and Intelligent Design Kent Greenwalt Follow this and additional works at: http://scholarship.law.nd.edu/ndjlepp Recommended Citation Kent Greenwalt, Establishing Religious Ideas: Evolution, Creationism, and Intelligent Design, 17 Notre Dame J.L. Ethics & Pub. Pol'y 321 (2003). Available at: http://scholarship.law.nd.edu/ndjlepp/vol17/iss2/2 This Article is brought to you for free and open access by the Notre Dame Journal of Law, Ethics & Public Policy at NDLScholarship. It has been accepted for inclusion in Notre Dame Journal of Law, Ethics & Public Policy by an authorized administrator of NDLScholarship. For more information, please contact [email protected]. ARTICLES ESTABLISHING RELIGIOUS IDEAS: EVOLUTION, CREATIONISM, AND INTELLIGENT DESIGN KENT GREENAWALT* I. INTRODUCTION The enduring conflict between evolutionary theorists and creationists has focused on America's public schools. If these schools had no need to teach about the origins of life, each side might content itself with promoting its favored worldview and declaring its opponents narrow-minded and dogmatic. But edu- cators have to decide what to teach, and because the Supreme Court has declared that public schools may not teach religious propositions as true, the First Amendment is crucially implicated. On close examination, many of the controversial constitu- tional issues turn out to be relatively straightforward, but others, posed mainly by the way schools teach evolution and by what they say about "intelligent design" theory, push us to deep questions about the nature of science courses and what counts as teaching religious propositions. -

The Perfect Solution for Intelligent Publishing!

The Perfect Solution Typesetting 4.0 For Intelligent Publishing! 1 Typesetting 4.0 TM Gutenberg: lead typesetting (1450) Mergenthaler: mechanical typesetting; Linotype (1886) Desktop Publishing (1985) Knuth: automated digital typesetting-systems; TeX (1986) metiTec: completely automated typesetting 2 Problem Today’s demands for the media and publishing industry: XML-first workflow Workflow automation Parallel production of different formats for different devices Customized and personalized publications for various channels and devices Content management on the basis of XML This affects many market segments: Scientific and commercial publishing Industrial catalogues as well as STM Corporate and technical publishing 3 How metiTec works 1. 1 2. Dynamically calculates space Transforms structured content requirements for all page into XML and integrates elements and controls page images, tables and assets layout using intelligent design from various sources. rules and measurements. 3 2 Optimize Uses cross-page planning to complete automation, analyzes the consequences of 3. existing breaks, applies rules for future breaks and creates optimal horizontal and vertical breaks. 4 The Typesetting Intelligence of metiTec Typesetting operators automatically adapt and integrate data to layout via typographic and micro-typographic calculations and functions: vertical feathering including grid layout operators for calculating: 1 spacing, glue and line distance font size, glyph scaling and optimized line length automatic switch from justification