3 How Many People Watch Coronation Street?

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Between L.S. Lowry and Coronation Street: Salford Cultural Identities

Susanne Schmid

Abstract: Salford, “the classic slum”, according to Robert Roberts’s study, has had a distinct cultural identity of its own, which is centred on the communal ideal of working-class solidarity, best exemplified in the geographical space of “our street”. In the wake of de-industrialisation, Roberts’s study, lyrics by Ewan MacColl, L.S. Lowry’s paintings, and the soap opera Coronation Street all nostalgically celebrate imagined northern working-class communities, imbued with solidarity and human warmth. Thereby they contribute to constructing both English and northern identities.

Key names and concepts: Friedrich Engels - Robert Roberts - Ewan MacColl - L.S. Lowry - Charles Dickens - Richard Hoggart - George Orwell; Slums - Escaper Fiction - Working-class Culture - Nostalgia - De-industrialisation - Rambling - Coronation Street.

1. Salford as an Imagined Northern Community

For a long time, Salford, situated right next to the heart of Manchester, has been known as a place that underwent rapid and painful industrialisation in the nineteenth century and an equally difficult and agonising process of de-industrialisation in the twentieth. If Manchester, the former flag-ship of the cotton industry, has been renowned for its beautiful industrial architecture, its museums, and its economic success, Salford has been hailed as “the classic slum”, as in the title of Robert Roberts’s seminal study about Salford slum life in the first quarter of the twentieth century (Roberts 1990, first published in 1971). The equation of “Salford” and “slum”, however, dates back further than that. Friedrich Engels’s The Condition of the Working Class in England, written in 1844/45, casts a gloomy light on a city made up of dwellings hardly fit for humans: If we cross the Irwell to Salford, we find on a peninsula formed by the river, a town of eighty thousand inhabitants […].

Coronation Street Jailbirds

THIS WEEK

Coronation Street’s Maria Connor is released from prison this week – and ex-lover Aidan should beware, says actress Samia Longchambon

CORONATION STREET, MONDAY, ITV

The view from Maria Connor’s window is almost tear-jerkingly romantic. It’s Christmas Eve 2016 and, as if on cue, plump white snowflakes have begun to flutter gently to the ground. Could she have wished to gaze out on a more festive scene? Well, yes, she could. For one thing, she could have wished for the window not to have thick iron bars across it. Yes, this was the night a frightened, despairing Maria began a 12-month prison sentence for an illegal marriage, joining a long and illustrious line of Coronation Street women who, over the years, have […]

[…] by him? After all, it was only a couple of weeks ago that her cellmate was remarking, a little ominously, “Men, eh? Can’t live with ’em, can’t smash ’em in the head with an iron bar…” “She does love Aidan,” says Samia. “It hasn’t just been a sexual thing. He’s been her knight in shining armour, her hero.” And yes, he was certainly a tower of strength when an innocent Maria found herself falsely accused of murder, back in November. But when it came to the day of her sentencing for this crazy marriage scam, for which she pleaded guilty, Aidan tarnished his image by failing to show.

Maria Connor is by no means the first Weatherfield woman to end up behind bars…

DEIRDRE RACHID 1998: Wrongly convicted of fraud after being set up by her fiancé, conman Jon Lindsay. The injustice sparked a real-life Free The Weatherfield One campaign. Prime Minister Tony Blair even raised it in Parliament.

HAYLEY CROPPER (NÉE PATTERSON) 2001 […]

Survey Report

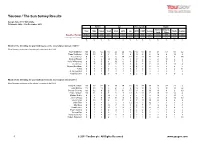

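YouGov / The Sun Survey Results. Sample Size: 1767 GB Adults. Fieldwork: 20th - 21st December 2011.

Column groups: Gender (Male, Female); Age (18-24, 25-39, 40-59, 60+); Social grade (ABC1, C2DE); Region (London, Rest of South, Midlands / Wales, North, Scotland).

| | Total | Male | Female | 18-24 | 25-39 | 40-59 | 60+ | ABC1 | C2DE | London | Rest of South | Midlands / Wales | North | Scotland |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Weighted Sample | 1767 | 859 | 908 | 214 | 451 | 604 | 498 | 1007 | 760 | 226 | 574 | 378 | 435 | 154 |
| Unweighted Sample | 1767 | 837 | 930 | 68 | 509 | 706 | 484 | 1154 | 613 | 180 | 632 | 374 | 418 | 163 |

Which of the following do you think has been the most stylish woman of 2011? [Don't knows and none of the above's removed, N=1203]

| % | Total | Male | Female | 18-24 | 25-39 | 40-59 | 60+ | ABC1 | C2DE | London | Rest of South | Midlands / Wales | North | Scotland |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Kate Middleton | 50 | 46 | 54 | 50 | 42 | 44 | 65 | 50 | 50 | 56 | 48 | 53 | 50 | 42 |
| Pippa Middleton | 10 | 15 | 5 | 4 | 11 | 11 | 9 | 10 | 9 | 5 | 12 | 9 | 10 | 8 |
| Helen Mirren | 9 | 6 | 12 | 3 | 5 | 14 | 12 | 9 | 10 | 11 | 12 | 6 | 8 | 14 |
| Emma Watson | 7 | 9 | 6 | 14 | 10 | 6 | 3 | 9 | 5 | 6 | 8 | 10 | 5 | 6 |
| Holly Willoughby | 6 | 6 | 5 | 3 | 10 | 6 | 3 | 5 | 7 | 6 | 5 | 5 | 7 | 7 |
| Cheryl Cole | 5 | 7 | 4 | 14 | 5 | 5 | 2 | 4 | 7 | 2 | 4 | 5 | 11 | 4 |
| Victoria Beckham | 5 | 3 | 7 | 3 | 5 | 7 | 5 | 4 | 7 | 6 | 5 | 5 | 6 | 5 |
| Tulisa | 3 | 4 | 3 | 9 | 5 | 3 | 0 | 4 | 3 | 1 | 4 | 1 | 2 | 11 |
| Kelly Rowland | 2 | 3 | 2 | 1 | 3 | 3 | 1 | 3 | 1 | 3 | 3 | 3 | 1 | 3 |
| Angelina Jolie | 2 | 2 | 1 | 0 | 4 | 1 | 1 | 2 | 1 | 3 | 1 | 3 | 0 | 2 |

Which of the following do you think has been the most stylish man of 2011? [Don't knows and none of the above's removed, N=1050]

| % | Total | Male | Female | 18-24 | 25-39 | 40-59 | 60+ | ABC1 | C2DE | London | Rest of South | Midlands / Wales | North | Scotland |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| David Beckham | 19 | 17 | 22 | 9 | 17 | 24 | 23 | 16 | 24 | 18 | 16 | 27 | 23 | 6 |
| Gary Barlow | 11 | 10 | 11 | 9 | 21 | 8 | 4 | 12 | 10 | 9 | 9 | 10 | 10 | 22 |
| George Clooney | 10 | 12 | 9 | 2 | 7 | 11 | 17 | 11 | 9 | 13 | 13 | 8 | 6 | 13 |
| Prince William | 10 | 11 | 9 | 8 | 5 | 7 | 19 | 9 | 11 | 7 | 10 | 11 | 14 | 4 |
| Michael Buble | 9 | 9 | 8 | 14 | 9 | 9 | 5 | 7 | 11 | 4 | 12 | 7 | 10 | 6 |
| Johnny Depp | 9 | 9 | 9 | 11 | 11 | 9 | 5 | 10 | 7 | 6 | 8 | 9 | 10 | 13 |
| Daniel Craig | 8 | 10 | 7 | 5 | 9 | 11 | 6 | 7 | 10 | 10 | 9 | 9 | 6 | 9 |
| Colin Firth | 7 | 6 | 8 | 7 | 5 | 7 | 12 | 11 | 3 | 12 | 7 | 7 | 6 | 7 |
| Olly Murs | 6 | 5 | 7 | 18 | 7 | 5 | 1 | 6 | 7 | 7 | 9 | 6 | 4 | 3 |
| Hugh Laurie | 4 | 6 | 3 | 10 | 3 | 4 | 3 | 5 | 3 | 4 | 4 | 5 | 4 | 2 |
| Ryan Gosling | 2 | 1 | 3 | 4 | 4 | 1 | 0 | 2 | 2 | 7 | 1 | 1 | 2 | 2 |
| Vernon Kay | 2 | 1 | 2 | 0 | 1 | 1 | 3 | 1 | 2 | 1 | 1 | 1 | 2 | 3 |
| David Cameron | 2 | 1 | 2 | 0 | 0 | 2 | 3 | 2 | 1 | 2 | 2 | 1 | 2 | 1 |
| Robert Pattinson | 1 | 1 | 1 | 4 | 1 | 1 | 0 | 1 | 1 | 1 | 0 | 0 | 1 | 10 |

© 2011 YouGov plc.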

Lesbian Brides: Post-Queer Popular Culture

Lesbian Brides: Post-Queer Popular Culture Dr Kate McNicholas Smith, Lancaster University Department of Sociology Bowland North Lancaster University Lancaster LA1 4YN [email protected] Professor Imogen Tyler, Lancaster University Department of Sociology Bowland North Lancaster University Lancaster LA1 4YN [email protected] Abstract The last decade has witnessed a proliferation of lesbian representations in European and North American popular culture, particularly within television drama and broader celebrity culture. The abundance of ‘positive’ and ‘ordinary’ representations of lesbians is widely celebrated as signifying progress in queer struggles for social equality. Yet, as this article details, the terms of the visibility extended to lesbians within popular culture often affirms ideals of hetero-patriarchal, white femininity. Focusing on the visual and narrative registers within which lesbian romances are mediated within television drama, this article examines the emergence of what we describe as ‘the lesbian normal’. Tracking the ways in which the lesbian normal is anchored in a longer history of “the normal gay” (Warner 2000), it argues that the lesbian normal is indicative of the emergence of a broader post-feminist and post-queer popular culture, in which feminist and queer struggles are imagined as completed and belonging to the past. Post-queer popular culture is depoliticising in its effects, diminishing the critical potential of feminist and queer politics, and silencing the actually existing conditions of inequality, prejudice and stigma that continue to shape lesbian lives. Keywords: Lesbian, Television, Queer, Post-feminist, Romance, Soap-Opera Lesbian Brides: Post-Queer Popular Culture Increasingly…. to have dignity gay people must be seen as normal (Michael Warner 2000, 52) I don't support gay marriage despite being a Conservative. -

Housing Market Takes Buyers on 'Wild'

DEALS OF THE $DAY$ PG. 3

FRIDAY JULY 9, 2021

Housing market takes buyers on ‘wild’ ride

By Sam Minton, ITEM STAFF

It might seem like a simple business practice, but high demand and low inventory can lead to prices for any particular product to increase. Right now this is happening to the housing market in the area. Low inventory is leading buyers to have to go in on homes above the asking price to ensure that they even have a chance of securing a home. According to multiple realtors, they are seeing a lot more cash offers take place in the current market. Andrew Schweihs of AJS Real Estate said that he is even seeing buyers […]

[…] only realtor who mentioned this. Albert DiVirgilio from Re/Max said […]

[…] said. “Buyers are guaranteeing their offer so basi[…]

DiVirgilio explained that this can drive the prices of surrounding homes as well. He said that this is part of the reason that the housing market continues to go up. Schweihs works on the North Shore, in areas including Marblehead, Salem, Saugus and Swampscott. He said that for the past 6-8 months it has been a “crazy” seller’s market and that he has buyers being forced to offer anywhere from 10-20 percent above the asking price. Joan Regan, who works for Century 21 in Lynn, also described the market as “wild” in the city. Regan mentioned that she has seen offers between […]

Photo caption: Realtors, from left, Albert DiVirgilio, Joan Regan and Andrew Schweihs say it’s a seller’s market right now on the North Shore.

Will It Be Fifth Time Lucky for Steve?

INTERVIEW

FOR BETTER... OR WORSE?

Coronation Street’s Steve McDonald is about to marry scheming Tracy Barlow – unless Becky’s bombshell wrecks their big day

Evil, twisted, poisonous – over the years, we’ve pinned no end of labels on Coronation Street’s Tracy Barlow. […]

And how long did our sympathy last? Ooh, several hours. By the following episode, true to form, Tracy had turned this terrible tragedy to her advantage in the most despicable fashion, claiming that a fall down the stairs at Becky McDonald’s flat […]

Kate Ford, 34, who first took on the role of Tracy 10 years ago, isn’t one to make apologies for her character’s appalling behaviour. And yet she can understand, at least to a degree, why Tracy has resorted to such extreme, unforgivable measures. “She’s so scared of losing him,” she explains. “She’s just desperate.” And Kate adds that, for all her character’s faults, “Tracy is a genuinely devoted mum.” What sets us up so nicely for Monday’s marriage ceremony is the fact that Katherine Kelly, who’s been playing the […]

[…] very instinctive. She’ll just know when the time is right to take the ammunition out. “Basically, she decides to put on a cracking outfit, rock up to the church and say to Steve, ‘This is what you could have won…’” There are several ways this could then pan out, of course, particularly with regard to Katherine’s final scenes. Will she successfully wreck the wedding? Will she reconcile with Steve? Will she be leaving alone? Or will she pledge her future to this Danny chap she’s taken such a shine to – the widowed hotel manager […]

BECKY DECIDES TO PUT ON A CRACKING OUTFIT, ROCK UP TO THE CHURCH AND SAY TO STEVE, […]

TRACY’S SO SCARED OF LOSING […]

Free Deirdre Shirt

Free deirdre shirt click here to download Mens Deirdre Barlow Free The Weatherfield One T-shirt: Free UK Shipping on Orders Over £20 and Free Day Returns, on Selected Fashion Items Sold or. Ladies Deirdre Barlow Free The Weatherfield One T-shirt: Free UK Shipping on Orders Over £20 and Free Day Returns, on Selected Fashion Items Sold or. Description. FREE DEIRDRE RASHHID CORONATION STREET PARODY T SHIRT. IF YOU LIKE THIS COME AND TAKE A LOOK IN OUR FAT DAD EBAY. High quality Deirdre Barlow inspired T-Shirts by independent artists and designers from around the world. Deirdre Barlow Free the Wetherfield one T-Shirt. Buy 'Deirdre Barlow Free the Wetherfield one' by pickledjo as a T-Shirt, Classic T-Shirt, Tri-blend T-Shirt, Lightweight Hoodie, Women's Fitted Scoop T-Shirt. The jailing of Deirdre Rachid in Coronation Street has captured the public The tabloids are issuing 'Free Deirdre' stickers and T-shirts. RIP Deirdre Barlow: Corrie fans rush to snap up 'Free The Weatherfield Deirdre Barlow – soap fans have rushed to snap up a t-shirt depicting. Crinkle shirt of mixed fabrics. Stretch side panels. Home; DEIRDRE SHIRT. MULTI. Zoom Free shipping Australia Wide for orders over $ Read More. The push for her release was spearheaded by the country's tabloid newspapers, who offered stickers and t-shirts saying “Free Deirdre” and. The Coronation Street star's drama as she was thrown behind bars while her lover walked free enraged the nation. Deirdre ran her hand over Luke's ass. “Aces is taking over. She put her free hand up his shirt and pulled it up to suck on his nipple. -

Violence in UK Soaps

Violence in UK Soaps: A four wave trend analysis

Dr Guy Cumberbatch, Victoria Lyne, Andrea Maguire and Sally Gauntlett

July 2014

A report prepared for: Ofcom

CRG UK Ltd, Anvic House, 84 Vyse Street, Jewellery Quarter, Birmingham B18 6HA. t: ++(0)121 523 9595 f: ++(0)121 523 8523 e: [email protected]

Contents

Foreword from Ofcom
1 Violence in UK soaps: A four-wave trend analysis (2001-2013)
1.1 Executive summary
1.2 Key findings: overall violence in soaps 2001-2013
1.3 Key findings: changes in violent content over period 2001-2013
1.4 Key findings: 2013 storyline analysis
1.5 Key findings: 2013 case studies of scenes containing strong violence
1.6 Overall conclusions
2 Objectives, methodology and sample
2.1 Overall objectives

Alison King Shaftesbury Avenue London W1D 6LD

Alison King

www.cam.co.uk. Email: [email protected]. Address: 55-59 Shaftesbury Avenue, London W1D 6LD. Telephone: +44 (0) 20 7292 0600.

Film (Title, Role, Director, Production)

LAST CHANCE Helen Ion Ionescu Vision PM
ONE LAST TIME Lovington Chris Munro 21st Century Productions
DARK RIDE Lara Craig Singer Action Factory
SAVE ANGEL HOPE Jacqui Lydon Lukas Erni Trickslm Gmbh
DEUCE BIGALOW - EUROPEAN GIGOLO Eva Mike Bigelow Sony Pictures Ent
SUBMERGED Damita Tony Hickox Nu Image Films
SHANGHAI KNIGHTS Ellie David Dobkin Buena Vista
FEEDBACK Jane (Co-Lead) Chris Atkins Regalia Productions
KEEP OFF THE GRASS Imelda Craig Robert Young Milk Productions

Television (Title, Role, Director, Production)

SICK NOTE (Series 2) CS Henchy Matt Lipsey Sky1
CORONATION STREET Carla Connor (Regular) Various Directors ITV
DREAM TEAM Lynda Block (Regular) Various Directors Sky One
HELP Rebecca Declan Lowney BBC 2
RICHFIELDS Mandy Martin Marcus D F White Hewland International
AUF WIEDERSEHEN, PET Erica Trasker Maurice Phillips BBC 1
HOLBY CITY Becky Roper Ged Maguire BBC 1
MURPHY'S LAW Ang Maurice Phillips BBC 1
CELEB - INFATUATION Zoe Ed Bye BBC
CUTTING IT Georgie Andy de Emmony BBC
LENNY HENRY IN PIECES Bride Ed Bye BBC 1
COUPLING Chrissie Martin Dennis BBC 2
URBAN GOTHIC - THE END Stella Otto Bathurst Channel 5
BROOKSIDE Helen Jeremy Summers Mersey Television

Theatre (Title, Role, Director, Production)

BLOOD BROTHERS Narrator - London Palladium
MERRY WIVES OF WINDSOR Mistress Page - North Cheshire Theatre
PEACE BY EURIDEDIES Hermes - North Cheshire Theatre
THE WIZ Adderperle - Royal College of Music
GREASE Frenchie - Edinburgh Festival Theatre

Skills

Music & Dance: Alto Mezzo, Movement and Dance
Sports: Cycling, Horse-Riding, Water Sports

Characteristics

Height: 5'5". Hair: Dark Brown. Eyes: Green. Training: North Cheshire College.

CAM Limited Registered Office: CAM, 55-59 Shaftesbury Avenue, London, E.

PUB TYPE Information Analyses (070)--Speeches/Conference

DOCUMENT RESUME ED 294 542 IR 013 087 AUTHOR Scannell, Paddy TITLE Radio Times: The Temporal Arrangements of Broadcasting in the Modern World. PUB DATE Jul 86 NOTE 34p.; Paper presented at the International Television Studies Conference (London, England, July 10-12, 1986). PUB TYPE Information Analyses (070) -- Speeches/Conference Papers (150) EDRS PRICE MF01/PCO2 Plus Postage. DESCRIPTORS *Audiences; *Broadcast Industry; Cultural Context; Diaries; Foreign Countries; History; Interpersonal Relationship; Leisure Time; Nationalism; *Programing (Broadcast); *Radio; Social Attitudes; Story Telling; Surveys; *Television; *Time Perspective; Traditionalism IDENTIFIERS *England; Soap Operas ABSTRACT This paper examines the unobtrusive ways in which broadcasting sustains the lives and routines, from one day to the next, year in and year out, of whole populations, and reflects on some of the implications of these processes by accounting for the ways in which the times of radio and television are organized in relation to the social spaces of listening and viewing. Topics discussed include: (1) three intersecting planes of temporality that are intrinsic to every moment of social reproduction and a fourth plane which occurs in broadcasting; (2) the process of modernization, after 1834, that demanded new time-keeping habits and work disciplines from whole populations, and with it the necessity for reconstruction of images and emblems of nationhood; (3) the development of radio broadcasting in the 1920s and 1930s and the role the broadcast "year" played -

Weekly Highlights Week 35/36: Sat 29th August - Fri 4th September 2020

Sheridan Smith: Becoming Mum. Tuesday, 9pm. Intimate documentary following actor Sheridan Smith’s journey through pregnancy and mental health struggles.

This information is embargoed from reproduction in the public domain until Tue 25th August 2020.

Press contacts. Further programme publicity information: ITV Press Office [email protected] www.itv.com/presscentre @itvpresscentre. ITV Pictures [email protected] www.itv.com/presscentre/itvpictures. ITV Billings [email protected] www.ebs.tv. This information is produced by EBS New Media Ltd on behalf of ITV, +44 (0)1462 895 999. Please note that all information is embargoed from reproduction in the public domain as stated.

Weekly highlights

ITV Racing: Live from Goodwood. Saturday 29th August, 1.30pm, ITV. Ed Chamberlin and Francesca Cumani present all the live action from Goodwood racecourse on another Saturday of exciting racing with coverage from three venues. The feature race of the day is the Celebration Mile at Goodwood, which has been won by some top-class horses in recent years and is bound to be another strong renewal in 2020. There’s also action from Newmarket and Windsor in the afternoon.

The Masked Singer US. Saturday 29th August, 4pm & 5pm, ITV. Surreal musical antics from season two of the US version of The Masked Singer, featuring a roster of famous competitors in outlandish disguises singing for glory. Nick Cannon presents as the three remaining contestants give it their all in the final. As ever, judges Robin Thicke, Jenny McCarthy, Ken Jeong and Nicole Scherzinger try to guess the performers’ identities, as well as crowning the overall winner of the show.

William Roache M.B.E. Canadian Tour Interview + Bio & Short History

Interview via e-mail from William Roache, aka Ken Barlow, November 2011. Ref: Canadian tour, March 2012. Show title: Coronation St. Icon: An Audience with William Roache.

March 15th, Ottawa @ Centrepointe theatre, 1.866.752.5231, www.centrepointetheatre.com
March 17th, Toronto @ Winter Gardens theatre, 1.855.622.2787, www.ticketmaster.ca
March 18th, Hamilton @ Hamilton Place, 1.855.872.5000, www.ticketmaster.ca
March 19th, Victoria @ Royal Theatre, 1.888.717.6121, www.rmts.bc.ca
March 21st, Vancouver @ Red Robinson Show theatre, 1.855.985.5000, www.ticketmaster.ca
March 23rd & 24th, Halifax @ Casino Nova Scotia, 1.877.451.1221, www.ticketatlantic.com

Answers to questions:

Is this your first visit to Canada? And, what impresses you about coming to the country?

I visited St John's, Newfoundland, in the eighties. I did a play at the Art and Culture Centre. I was made to feel very welcome. I was amazed at the wilderness, vast areas and the animal life. A bit worried when I came across a bear's footprint.

How important are Canadian fans to the worldwide success of Corrie?

It is really wonderful to have such a strong fan base in Canada. It is so rewarding and comforting to know that the show is enjoyed and appreciated by so many in Canada. I was thrilled to get a personal letter on my golden anniversary from your Prime Minister, the Rt. Hon. Stephen Harper, saying that he is a fan. I was really honoured, and would love to meet him and thank him.