Norwegian Citizen Panel

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Les Sportsplanen

KIL/HEMNE Fotball Kyrksæterøra mars 2021 INNHOLD DEL 1 - DETTE ER KIL/HEMNE o INNLEDNING side 3 o KIL/HEMNE SIN VISJON, VERDIGRUNNLAG OG MÅL side 4 o TRENINGSKULTUR OG BEGREPET TALENT side 7 o KRAV TIL KIL/HEMNE SOM KLUBB side 8 o KRAV TIL «OSS I KIL/HEMNE» side 9 o ORGANISASJONSKART KIL/HEMNE side 12 o TRENERROLLEN I KIL/HEMNE side 13 o OM TRENING side 14 o KEEPERTRENING side 16 o ANDRE EKSTRATILTAK side 18 o HOSPITERING DIFFERENSIERING JEVNBYRDIGHET ALLSIDIGHET SPESIALISERING EGENTRENING TOTALBELASTNING side 19 o SAMARBEID MED KYRKSÆTERØRA VIDEREGÅENDE SKOLE side 23 o GODE DOMMERE side 23 DEL 2 - DE ULIKE GRUPPENE o 3’er 6-7 år side 25 o 5’er 8-9 år side 28 o 7’er 10-11 år side 31 o 9’er 12-14 år (GUTTER/JENTER 15-16 år) side 33 o GUTTER/JENTER 15-16 år side 35 o GUTTER/JENTER 17-19 år side 38 o AKTUELLE LINKER side 41 Kyrksæterøra mars 2021 Del 1 DETTE ER KIL/HEMNE INNLEDNING Formålet med SPORTSPLANEN er å utvikle barne- og ungdomsfotballen i KIL/Hemne. Den skal være et verktøy til veiledning for trenere og støtteapparat i klubben, og legge grunnlaget for fornuftig og målrettet opplæring i et godt miljø. SPORTSPLANEN skal bidra til at KIL/Hemne er best på spillerutvikling i regionen. SPORTSPLANEN ble utarbeidet av klubben høsten 2015 og vinteren 2016, og vedtatt i årsmøte mars 2016. Arbeidet ble ledet av ei prosjektgruppe: Atle Karlstrøm (leder), Lina Dalum Sødahl, Gjermund Bjørkøy, Per Erik Bjerksæter, David Monkan og Arne Sandnes. -

Kommunestrukturutredning Snillfjord, Hitra Og Frøya Delrapport 2 Om Bærekraftige Og Økonomisk Robuste Kommuner Anja Hjelseth Og Audun Thorstensen

Kommunestrukturutredning Snillfjord, Hitra og Frøya Delrapport 2 om bærekraftige og økonomisk robuste kommuner Anja Hjelseth og Audun Thorstensen TF-notat nr. 51/2015 1 Kolofonside Tittel: Kommunestrukturutredning Snillfjord, Hitra og Frøya Undertittel: Delrapport 2 om bærekraftige og økonomisk robuste kommuner TF-notat nr.: 51/2015 Forfatter(e): Audun Thorstensen og Anja Hjelseth Dato: 15.09.2015 ISBN: 978-82-7401-841-9 ISSN: 1891-053X Pris: (Kan lastes ned gratis fra www.telemarksforsking.no) Framsidefoto: Telemarksforsking og istockfoto.com Prosjekt: Utredning av kommunestruktur på Nordmøre Prosjektnummer.: 20150650 Prosjektleder: Anja Hjelseth Oppdragsgiver(e): Snillfjord, Hitra og Frøya kommuner Spørsmål om dette notatet kan rettes til: Telemarksforsking, Postboks 4, 3833 Bø i Telemark – tlf. 35 06 15 00 – www.telemarksforsking.no 2 Forord • Telemarksforsking har fått i oppdrag fra Hitra, Frøya og Snillfjord kommuner å utrede sammenslåing av de tre kommunene. Det skal leveres 5 delrapporter med følgende tema: – Helhetlig og samordnet samfunnsutvikling – Bærekraftige og økonomisk robuste kommuner – Gode og likeverdige tjenester – Styrket lokaldemokrati – Samlet vurdering av fordeler og ulemper ved ulike strukturalternativer • I tillegg til dette alternativet, så inngår Hitra og Snillfjord i utredningsalternativer på Nordmøre, hvor også Telemarksforsking står for utredningsarbeidet. Disse er: – Hemne, Hitra, Aure, Smøla og Halsa – Hemne, Aure, Halsa og Snillfjord • Denne delrapporten omhandler bærekraftige og økonomisk robuste kommuner. -

Søknad Om Midlertidig Opprettholdelse Av Anleggskonsesjon for Deler Av Eksisterende 132 Kv Forbindelse Orkdal - Snillfjord

Søknad om midlertidig opprettholdelse av anleggskonsesjon for deler av eksisterende 132 kV forbindelse Orkdal - Snillfjord (Mast Orkdal – Snillfjord ved Orkdal transformatorstasjon) Januar 2021 i Forord Tensio TS AS (heretter benevnt Tensio TS) søker med dette på vegne av Statnett om midlertidig opprettholdelse (til 2030) av anleggskonsesjon for 6,4 km av 132 kV forbindelsen Orkdal – Snillfjord i Orkland kommune. Linjen er opprinnelig planlagt revet som gitt som vilkår i konsesjon med NVEs referanse 201002544-249. Grunnet økt skissert kraftbehov i næringsområdet på Grønnøra søkes det om endring av vilkåret om riving jfr. Energiloven §10-4, slik at eksisterende konsesjon opprettholdes midlertidig. Fristen for riving omsøkes utsatt til 2030 slik at det er tilstrekkelig tid til å avklare behov samt evaluere utviklingen av kraftforbruket på Grønnøra næringsområde i Orkland kommune. Innspill oversendes til NVE. Eventuelle spørsmål til søknaden kan rettes til oppgitt kontaktperson. Bjørn Rune Stubbe Nettdirektør Tensio TS AS Kontaktinformasjon: Norges vassdrags- og energidirektorat (NVE) Tensio TS AS Postboks 5091 Majorstua Postboks 9480 Torgarden 0301 OSLO 7496 TRONDHEIM Tlf: 22 95 95 95 Tlf: 74 12 15 00 Org.nummer: 978 631 029 Kontaktperson Navn Telefon E-post Saksbehandler Tommy Svarva Hårstad +47 48152119 [email protected] ii TENSI O TS AS Innhold Forord.......................................................................................................................................... ii Kontaktinformasjon: ................................................................................................................... -

Aglo Vgs Anne Berit Haabeth Frisør Byåsen Vgs

Navn på skole Navn på kontaktlærer Hvilken konkurranse skal dere delta i? Aglo vgs Anne Berit Haabeth Frisør Byåsen vgs Anette Fredriksen og Ailin Indergård Helsefagarbeider Byåsen vgs Bjørn Halgunset / Roger Rosmo Bilskade-lakk Byåsen vgs Bjørn Halgunset / Roger Rosmo Industrimekaniker Byåsen VGS Børge Østeraas Industrimekaniker og CNC Byåsen vgs Hilde Moslet Mona Sjølie Midtsand Barne og ungdomsarbeider Byåsen vgs Roger Rosmo / Bjørn Halgunset Bilfag lette kjøretøy Byåsen vgs Roger Rosmo / Bjørn Halgunset CNC Byåsen vgs Siv Stai, Eva Trondsen Barne og ungdomsarbeider Byåsen vgs Tore Johnny Olafsen og Stine Lorentzen Lund Helsefagarbeider Charlottenlund vgs John Kåre Busklein Industrimekaniker Charlottenlund vgs Leif Joar Lassesen Tømrer Charlottenlund vgs Tone Høvik Salg Charlottenlund vgs Lisbeth Sommerbakk Frisør Charlottenlund vgs. John Kåre Busklein Industrimekaniker Charlottenlund vgs. John Kåre Busklein Industrimekaniker Charlottenlund videregående Halldisskole Berg Design og søm Fosen VGS Terje Svankild Tømrer Fosen videregående skole Pål Ulset Elektriker Gauldal vgs Elin Rise Salg Gauldal vgs Øystein Talsnes Sveis Gauldal Videregående Skole Bjørn Myrbakken Anleggsmaskinfører Gauldal Videregående Skole Ingrid Brandegg Barne og ungdomsarbeider Gauldal Videregående Skole Jostein Brattset Tømrer Gauldal Videregående Skole Øystein Talsnes Industrimekaniker Gauldal Videregående Skole Øystein Talsnes Manuell mask. Grong videregående Knut Bjørnar Johansen Tømrer Grong Videregående Skole Jogeir Rosten Elektriker Heimdal vgs Terje Monsen Elektriker Hemne vgs Asbjørn Sagøy Industrimekaniker Hemne vgs Asbjørn Sagøy Manuell maskinering Hemne vgs Asbjørn Sagøy Sveis Hemne vgs Nils Erling Lidal Tømrer Hemne videregående skole Birgit Belsvik Helsefagarbeider Hemne videregående skole Birgit Belsvik Barne og ungdomsarbeider Leksvik vgs Rita Lien Barne og ungdomsarbeider Leksvik vgs Stig Lysvand Tømrer Leksvik videregående skole Gunhild Røst Helsefagarbeider Levanger vg skole Leif Meland Kokk Levanger vg skole Leif Meland Servitør Levanger vg. -

Møteinnkalling Overhalla Formannskap

Møteinnkalling Utvalg: Overhalla formannskap Møtested: Kommunestyresalen, Adm.bygg Dato: 05.11.2019 Tidspunkt: 12:00 De faste medlemmene innkalles med dette til møtet. Den som har gyldig forfall, eller ønsker sin habilitet i enkeltsaker vurdert, melder dette så snart som mulig på e-post: [email protected]. Ved melding om forfall: Begrunnelse for forfall skal oppgis. Varamedlemmer møter etter nærmere innkalling. Per Olav Tyldum Ordfører Torunn Grønnesby Formannskapssekretær 1 Sakliste Saksnr Innhold PS 64/19 Søknad om permisjon fra verv som varamedlem til Overhalla kommunestyre - Marianne Øyesvold PS 65/19 Innspill - Egnet plassering av hurtigladepunkt i Overhalla PS 66/19 Søknad om sluttfinansiering av nytt orgel i Ranem kirke PS 67/19 Deltakelse i IKA Trøndelag IKS PS 68/19 Tilstands- og utviklingsrapport for de kommunale grunnskolene 2019. PS 69/19 Navnesak Overhalla kommune - Boligveg ved Skiljåsaunet PS 70/19 Søknad om kjøp av tilleggsareal til Svalifoten 5, eier Robert Gabrielsen og Monica Hanssen PS 71/19 Brøndbo Holding AS - Søknad om kjøp av tilleggstomt på Skage industriområde PS 72/19 Søknad om dispensasjon fra kommuneplanens arealdel og fradeling av gårdstunet på Åsheim, 1/2, eier Lars Iver Kalnes PS 73/19 Søknad om dispensasjon og fradeling av bebygd tun, Elverum, 75/5, eier Jan Olav Tømmerås PS 74/19 Søknad om dispensasjon, fradeling og makebytte mellom 12/14-Pål Tore Raabakken og 11/3 - Rune Hansen PS 75/19 Regnskaps- og virksomhetsrapport 2. tertial 2019 PS 76/19 Valg av nytt kommunestyremedlem til prosjektgruppa -

EFTA Surveillance Authority Rue Belliard 35 B- 1040 Brussels Belgium [email protected] Complaint: Norway Gives About NOK

Trondheim and Steinkjer, 15.03.2016 EFTA Surveillance Authority Rue Belliard 35 B- 1040 Brussels Belgium [email protected] Complaint: Norway gives about NOK 4 billion as an extra support to wind power projects in Trøndelag. We claim that this support violates the general EU market rules. A wind Power consortium consisting of Statkraft, Trønderenergi and Nordic Wind Power is planning 6 wind power sites in Trøndelag (Mid Norway), with a total of 1000 MW installed effect and a yearly production of 3.4 TWh. These wind power sites are going to receive a public support of about NOK 0.15/ kWh for 15 years as an addition to the electricity market price, a support given to all new renewable power installations in Norway and Sweden to be put to work within 2021. We accept that this support probably is within EU market rules. Our complaint concerns an extra support of about NOK 4 billion, which is to be given to the consor- tium through a free connection to the national power grid through new 420 kV overhead lines. The general rule in Norway is that connections of new power plants to the national grid are to be paid by the owner, but in this case the connection is to be paid by Statnett, the owner of the national grid. We claim that this extra support of NOK 4 billion violates the EU market rules of fair competition. Complainants Naturvernforbundet i Sør Trøndelag Sandgata 20 7012 Trondheim Norway Regional departments of: e-mail: [email protected] phone: +47 40248084 Naturvernforbundet (Friends of the Earth Norway) Naturvernforbundet -

Nye Fylkes- Og Kommunenummer - Trøndelag Fylke Stortinget Vedtok 8

Ifølge liste Deres ref Vår ref Dato 15/782-50 30.09.2016 Nye fylkes- og kommunenummer - Trøndelag fylke Stortinget vedtok 8. juni 2016 sammenslåing av Nord-Trøndelag fylke og Sør-Trøndelag fylke til Trøndelag fylke fra 1. januar 2018. Vedtaket ble fattet ved behandling av Prop. 130 LS (2015-2016) om sammenslåing av Nord-Trøndelag og Sør-Trøndelag fylker til Trøndelag fylke og endring i lov om forandring av rikets inddelingsnavn, jf. Innst. 360 S (2015-2016). Sammenslåing av fylker gjør det nødvendig å endre kommunenummer i det nye fylket, da de to første sifrene i et kommunenummer viser til fylke. Statistisk sentralbyrå (SSB) har foreslått nytt fylkesnummer for Trøndelag og nye kommunenummer for kommunene i Trøndelag som følge av fylkessammenslåingen. SSB ble bedt om å legge opp til en trygg og fremtidsrettet organisering av fylkesnummer og kommunenummer, samt å se hen til det pågående arbeidet med å legge til rette for om lag ti regioner. I dag ble det i statsråd fastsatt forskrift om nærmere regler ved sammenslåing av Nord- Trøndelag fylke og Sør-Trøndelag fylke til Trøndelag fylke. Kommunal- og moderniseringsdepartementet fastsetter samtidig at Trøndelag fylke får fylkesnummer 50. Det er tidligere vedtatt sammenslåing av Rissa og Leksvik kommuner til Indre Fosen fra 1. januar 2018. Departementet fastsetter i tråd med forslag fra SSB at Indre Fosen får kommunenummer 5054. For de øvrige kommunene i nye Trøndelag fastslår departementet, i tråd med forslaget fra SSB, følgende nye kommunenummer: Postadresse Kontoradresse Telefon* Kommunalavdelingen Saksbehandler Postboks 8112 Dep Akersg. 59 22 24 90 90 Stein Ove Pettersen NO-0032 Oslo Org no. -

Vindsenter Fosen

Vindsenter Fosen Bolystprosjekt i regi av Åfjord kommune Sluttrapport Vindsenter Fosen er et utviklingsprosjekt etablert av Åfjord kommune i samarbeid med Roan kommune for å utvikle strategier og iverksettes tiltak som gjøre at Åfjord og de andre vindkraftkommunene på Fosen skal klare å utnytte de mulighetene som ligger i utbyggingen av vindkraft frem mot 2020. Prosjektet har som mål at det skal etableres 200 nye, varige arbeidsplasser i regionen i perioden 2015 til 2020. Prosjektet har for perioden 2013 til 2015 hatt en finansiering på 3 millioner kroner hvorav 50% er finansiert av Kommunal- og regionaldepartementet (KRD) og 50% er finansiert av Åfjord kommune. Finansieringen fra KRD er under tiltaket Bolystmidler og ble tildelt i 2013 til prosjektet som da ble benevnt Vindkraftsamfunn 2.0. Prosjekteier er Åfjord kommune ved rådmann. Prosjektleder er Kjell Inge Skaldebø Prosjektet har hatt en ressursgruppe bestående av: Vibeke Stjern, Ordfører Åfjord kommune Per Johansen, Rådmann Åfjord kommune Jan Helge Grydeland, Ordfører Roan kommune (frem til 01.10.15) Einar Eian, Ordfører Roan kommune (fra 01.10.15) Vegar Saur, Windcluster Norway Ole Svendgård, Inergo AS Bjørn Damhaug, Leksvik Industriell vekst AS Arnt Ivar Kverndalen, Kopparn Utvikling AS Åfjord Utvikling har deltatt på møtene ved daglig leder. Åfjord 18. mars 2016 Kjell Inge Skaldebø Prosjektleder Vindsenter Fosen Sluttrapport side 2 Innholdsfortegnelse Innholdsfortegnelse ............................................................................................................................... -

Norway Maps.Pdf

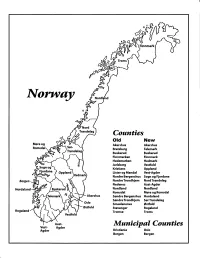

Finnmark lVorwny Trondelag Counties old New Akershus Akershus Bratsberg Telemark Buskerud Buskerud Finnmarken Finnmark Hedemarken Hedmark Jarlsberg Vestfold Kristians Oppland Oppland Lister og Mandal Vest-Agder Nordre Bergenshus Sogn og Fjordane NordreTrondhjem NordTrondelag Nedenes Aust-Agder Nordland Nordland Romsdal Mgre og Romsdal Akershus Sgndre Bergenshus Hordaland SsndreTrondhjem SorTrondelag Oslo Smaalenenes Ostfold Ostfold Stavanger Rogaland Rogaland Tromso Troms Vestfold Aust- Municipal Counties Vest- Agder Agder Kristiania Oslo Bergen Bergen A Feiring ((r Hurdal /\Langset /, \ Alc,ersltus Eidsvoll og Oslo Bjorke \ \\ r- -// Nannestad Heni ,Gi'erdrum Lilliestrom {", {udenes\ ,/\ Aurpkog )Y' ,\ I :' 'lv- '/t:ri \r*r/ t *) I ,I odfltisard l,t Enebakk Nordbv { Frog ) L-[--h il 6- As xrarctaa bak I { ':-\ I Vestby Hvitsten 'ca{a", 'l 4 ,- Holen :\saner Aust-Agder Valle 6rrl-1\ r--- Hylestad l- Austad 7/ Sandes - ,t'r ,'-' aa Gjovdal -.\. '\.-- ! Tovdal ,V-u-/ Vegarshei I *r""i'9^ _t Amli Risor -Ytre ,/ Ssndel Holt vtdestran \ -'ar^/Froland lveland ffi Bergen E- o;l'.t r 'aa*rrra- I t T ]***,,.\ I BYFJORDEN srl ffitt\ --- I 9r Mulen €'r A I t \ t Krohnengen Nordnest Fjellet \ XfC KORSKIRKEN t Nostet "r. I igvono i Leitet I Dokken DOMKIRKEN Dar;sird\ W \ - cyu8npris Lappen LAKSEVAG 'I Uran ,t' \ r-r -,4egry,*T-* \ ilJ]' *.,, Legdene ,rrf\t llruoAs \ o Kirstianborg ,'t? FYLLINGSDALEN {lil};h;h';ltft t)\l/ I t ,a o ff ui Mannasverkl , I t I t /_l-, Fjosanger I ,r-tJ 1r,7" N.fl.nd I r\a ,, , i, I, ,- Buslr,rrud I I N-(f i t\torbo \) l,/ Nes l-t' I J Viker -- l^ -- ---{a - tc')rt"- i Vtre Adal -o-r Uvdal ) Hgnefoss Y':TTS Tryistr-and Sigdal Veggli oJ Rollag ,y Lvnqdal J .--l/Tranbv *\, Frogn6r.tr Flesberg ; \. -

Midtre Gauldal Kommune

Kartlegging av radon i Midtre Gauldal kommune Radon 2000/2001 Vinteren 2000/2001 ble det gjennomført en fase 1-kartlegging av radon i inneluft i Midtre Gauldal kommune, i forbindelse med den landsomfattende undersøkelsen ”Radon 2000/2001”. En andel på 8 % av kommunens husstander deltok i kartleggingen, og det ble funnet at 8 % av disse har en radonkonsentrasjon som er høyere enn anbefalt tiltaksnivå på 200 Bq/m3 luft. Midtre Gauldal kommune har et stedvis radonproblem, og kommunen kan deles inn i ulike områder med tanke på oppfølging. På Klokkerhaugen og Singsås er flere enn 20 % av målingene over tiltaksgrensen på 200 Bq/m3 radon i luft, og det er derfor en høy sannsynlighet for høye radonverdier i disse områdene. Her anbefaler Statens strålevern oppfølgende målinger i alle boliger med leilighet eller oppholdsrom i 1. etasje eller underetasje. Sør for Bones til Budal er under 5 % av målingene over tiltaksgrensen, og området har lav sannsynlighet for høye radonverdier. Anbefalt oppfølging kan her begrenses til generell informasjon og veiledning. I de resterende delene av kommunen er det en middels høy sannsynlighet for forhøyde radonnivåer, og det anbefales å gjøre oppfølgende målinger i utvalgte boliger. Line Ruden Gro Beate Ramberg Katrine Ånestad Terje Strand Kartlegging av radon i Midtre Gauldal kommune Mer generell informasjon om radon finnes 1. INNLEDNING på Strålevernets radonsider: http://radon.nrpa.no. 1.1 Om radon Radon (222Rn) er et radioaktivt stoff som dannes naturlig ved desintegrasjon av 1.2 Bakgrunn for prosjektet radium (226Ra), og som finnes i varierende I forbindelse med Nasjonal kreftplan, som mengder i all berggrunn og jordsmonn. -

Møteinnkalling

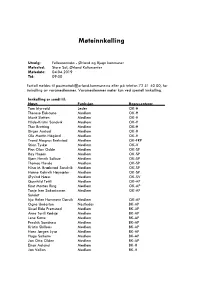

Møteinnkalling Utvalg: Fellesnemnda - Ørland og Bjugn kommuner Møtested: Store Sal, Ørland Kultursenter Møtedato: 04.04.2019 Tid: 09:00 Forfall meldes til [email protected] eller på telefon 72 51 40 00, for innkalling av varamedlemmer. Varamedlemmer møter kun ved spesiell innkalling. Innkalling er sendt til: Navn Funksjon Representerer Tom Myrvold Leder OK-H Therese Eidsaune Medlem OK-H Marit Sletten Medlem OK-H Hilde-Kristin Sandvik Medlem OK-H Thor Bretting Medlem OK-H Birger Austad Medlem OK-H Ole Martin Hågård Medlem OK-H Trond Magnus Brekstad Medlem OK-FRP Stian Tyskø Medlem OK-V Finn Olav Odde Medlem OK-SP Roy Hoøen Medlem OK-SP Bjørn Henrik Solbue Medlem OK-SP Thomas Hovde Medlem OK-SP Nina M. Brækstad Sandvik Medlem OK-SP Hanne Koksvik Høysæter Medlem OK-SP Øyvind Næss Medlem OK-SV Gunnhild Tettli Medlem OK-AP Knut Morten Ring Medlem OK-AP Tonje Iren Sakariassen Medlem OK-AP Sundet Irja Helen Hammern Døsvik Medlem OK-AP Ogne Undertun Nestleder BK-AP Sissel Eide Fremstad Medlem BK-AP Anne Torill Rødsjø Medlem BK-AP Lene Kotte Medlem BK-AP Fredrik Sandness Medlem BK-AP Kristin Skilleås Medlem BK-AP Hans Jørgen Lysø Medlem BK-AP Hugo Solheim Medlem BK-AP Jan Otto Olden Medlem BK-AP Einar Aaland Medlem BK-H Jan Vollan Medlem BK-H Tore Melhuus Medlem BK-H Anne M. Bjørnerud Medlem BK-H Hans Eide Medlem BK-SP Laila Iren Veie Medlem BK-SP Ole Graneng Medlem BK-SP Jan Inge Standahl Medlem BK-SP Walter Braa Medlem BK-FRP Per Odd Solberg Medlem BK-B Marthe Rødsjø Medlem BK-SV Vel møtt! Tom Myrvold Leder Fellesnemnda Saksnr Innhold -

Administrative and Statistical Areas English Version – SOSI Standard 4.0

Administrative and statistical areas English version – SOSI standard 4.0 Administrative and statistical areas Norwegian Mapping Authority [email protected] Norwegian Mapping Authority June 2009 Page 1 of 191 Administrative and statistical areas English version – SOSI standard 4.0 1 Applications schema ......................................................................................................................7 1.1 Administrative units subclassification ....................................................................................7 1.1 Description ...................................................................................................................... 14 1.1.1 CityDistrict ................................................................................................................ 14 1.1.2 CityDistrictBoundary ................................................................................................ 14 1.1.3 SubArea ................................................................................................................... 14 1.1.4 BasicDistrictUnit ....................................................................................................... 15 1.1.5 SchoolDistrict ........................................................................................................... 16 1.1.6 <<DataType>> SchoolDistrictId ............................................................................... 17 1.1.7 SchoolDistrictBoundary ...........................................................................................