1 Digital Dance Ethnography

Recommended publications

A Short Course in International Folk Dance, Harry Khamis, 1994

Table of Contents

Preface
Recommended Reading/Music
Terminology and Abbreviations
Basic Ethnic Dance Steps

Dances: At Va'ani; Ba'pardess Leyad Hoshoket; Biserka; Black Earth Circle; Christchurch Bells; Cocek; For a Birthday; Hora (Romanian); Hora ca la Caval; Hora de la Botosani; Hora de la Munte; Hora Dreapta; Hora Fetalor; Horehronsky Czardas; Horovod; Ivanica; Konyali; Lesnoto Medley; Mari Mariiko; Miserlou; Pata Pata; Pinosavka; Setnja; Sev Acherov Aghcheek; Sitno Zensko Horo

Dionysiac and Pyrrhic Roots and Survivals in the Zeybek Dance, Music, Costume and Rituals of Aegean Turkey

GEPHYRA 14, 2017, 213-239

Dionysiac and Pyrrhic Roots and Survivals in the Zeybek Dance, Music, Costume and Rituals of Aegean Turkey
Recep MERİÇ

In Memory of my Teacher in Epigraphy and the History of Asia Minor Reinhold Merkelbach

Zeybek is a particular name in Aegean Turkey for both a type of dance music and a group of companions performing it, wearing a particular decorative costume and a typical headdress (Fig. 1). The term Zeybek also designates a brave, tough and courageous man. Zeybeks are generally considered to be irregular military gangs or bands with a hierarchic order. A Zeybek band has a leader called efe; the inexperienced young men were called kızans. The term efe is presumably a survival of the Greek word ephebos. Usually Zeybek is preceded by a word that points to a special type of this dance or the place where it is performed, e.g. Aydın Zeybeği, Abdal Zeybeği. Both the appellation and the dance itself are also known in Greece, where they were called Zeibekiko and Abdaliko. They were brought to Athens after 1922 by Anatolian Greek refugees. Below I attempt to show whether the Zeybek dances in the Aegean provinces of western Turkey have any possible links with Dionysiac and Pyrrhic dances, which were very popular in ancient Anatolia.

Social status and origin of Zeybek

Recently E. Uyanık and A. Özçelik defined Zeybeks in a quite appropriate way: “The banditry activities of Zeybeks did not have any certain political aim, any systematic ideology or any organized religious sect beliefs.

SYRTAKI Greek PRONUNCIATION

SYRTAKI
Greek

PRONUNCIATION: seer-TAH-kee

TRANSLATION: Little dragging dance

SOURCE: Dick Oakes learned this dance in the Greek community of Los Angeles. Athan Karras, a prominent Greek dance researcher, also has taught Syrtaki to folk dancers in the United States, as have many other teachers of Greek dance.

BACKGROUND: The Syrtaki, or Sirtaki, was the name given to the combination of various Hasapika (or Hassapika) dances, both in style and the variation of tempo, after its popularization in the motion picture Alexis Zorbas (titled Zorba the Greek in America). The Syrtaki is danced mainly in the taverns of Greece with dances such as the Zeybekiko (Zeimbekiko), the Tsiftetelli, and the Karsilamas. It is a combination of either a slow hasapiko and fast hasapiko, or a slow hasapiko, hasaposerviko, and fast hasapiko. It is typical for the musicians to "wake things up" after a slow or heavy (vari or argo) hasapiko with a medium and/or fast hasapiko. The name "Syrtaki" is a misnomer in that it is derived from the most common Greek dance, "Syrtos," and the name is a recent invention. These "butcher dances" spread throughout the Balkans and the Near East and all across the Aegean islands, and enjoyed great popularity. The origins of the dance are traced to Byzantium, but the Argo Hasapiko is an idiom that evolved among Aegean fishermen and reflects their languid lifestyle. The name "Syrtaki" is now embedded as a dance form (meaning "little Syrtos," though it is totally unlike any Syrto dance), and its international fame has made it a hallmark of Greek dancing.

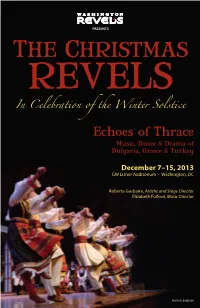

Read This Year's Christmas Revels Program Notes

PRESENTS
THE CHRISTMAS REVELS
In Celebration of the Winter Solstice
Echoes of Thrace
Music, Dance & Drama of Bulgaria, Greece & Turkey
December 7–15, 2013
GW Lisner Auditorium • Washington, DC
Roberta Gasbarre, Artistic and Stage Director
Elizabeth Fulford, Music Director

Featuring: Karpouzi Trio; Lyuti Chushki; Spyros Koliavasilis; Tanya Dosseva & Lyuben Dossev; Tzvety Dosseva Weiner; Bryndyn Weiner
The Washington Revels Company: Koleda Chorus; Koros Teens; Survakari Children; Thracian Bells; Grum Drums; Kukeri Mummers and Christmas Kamila; Morgan Duncan, as The Poet
With The Balkan Brass: Emerson Hawley, tuba; Radouane Halihal, percussion
And Folk-Dance Ensembles Byzantio (Greek) and Zharava (Bulgarian)

Gregory C. Magee, Production Manager

Dedication

On September 1, 2013, Washington Revels lost our beloved Reveler and friend, Kathleen Marie McGhee—known to everyone in Revels as Kate—to metastatic breast cancer. Office manager/costume designer/costume shop manager/desktop publisher: as just this partial list of her roles with Revels suggests, Kate was a woman of many talents. The most visibly evident to the Revels community were her tremendous costume skills: in addition to serving as Associate Costume Designer for nine Christmas Revels productions (including this one), Kate was the sole costume designer for four of our five performing ensembles, including nineteenth-century sailors and canal folk, enslaved and free African Americans during Civil War times, merchants, society ladies, and even Abraham Lincoln. Kate’s greatest talent not on regular display at Revels related to music.

Pocono Fall Folk Dance Weekend 2017

Pocono Fall Folk Dance Weekend 2017

Dances, in order of teaching
Steve’s and Yves’ notes are included in this document.

Steve and Susan
1. Valle e Dados – Korçë, Albania
2. Doktore – Mohács/Mohač, Hungary
3. Doktore – Snašo – Mohács/Mohač, Hungary
4. Kukunješće – Tököl (Rác – Serbs), Hungary
5. Kezes/Hora – Moldvai Csángó
6. Bácsol Kék Hora – Circle and Couple
____
7. Pogonishtë – Albania
8. Gajdaško
9. Staro Roko Kolo
10. Kupondjijsko Horo
11. Gergelem (Doromb)
12. Gergely Tánc
13. Hopa Hopa

Yves and France
1. Srebranski Danets (Bulgaria/Dobrudzha)
2. Svornato (Bulgaria/Rhodopes)
3. Tsonkovo (Bulgaria/Trakia)
4. Nevesto Tsarven Trendafil (Macedonia)
5. Zhensko Graovsko (Bulgaria/Shope)
_____
6. Belchova Tropanka (Bulgaria/Dobrudzha)
7. Draganinata (Bulgaria/W. Trakia)
8. Chestata (North Bulgaria)
9. Svrljiški Čačak (East Serbia)

DOKTORE I SNAŠO (Mohács, Hungary)

Doktore and Snašo are part of the “South Slavic” (Délszláv) dance repertoire from Mohács/Mohač, Hungary. Doktore is named after a song text and is originally a Serbian dance, but is enjoyed by the Croatian Šokci as well. Snašo (young married woman) or Tanac is a Šokac dance.

Recording: Workshop CD
Formation: Open circle with “V” hold, or M join hands behind W’s back and W’s hands are on M’s nearest shoulders.
Music: 2/4

Meas
DOKTORE
1 Facing center, step Rft diag fwd to R bending R knee slightly (ct 1); close Lft to Rft and bounce on both feet (ct 2); bounce again on both feet (ct &);
2 Step Lft back to place (bend knee slightly) (ct

Music and Traditions of Thrace (Greece): a Trans-Cultural Teaching Tool

MUSIC AND TRADITIONS OF THRACE (GREECE): A TRANS-CULTURAL TEACHING TOOL
Kalliopi Stiga
Evangelia Kopsalidou

Abstract: The geopolitical location of Greece, together with its historical trajectory over time, turned the country into a meeting place of European, North African and Middle Eastern cultures. Fables, beliefs and religious ceremonies, linguistic elements, traditional dances and music of different regions of the Hellenic space testify to this cultural convergence. One of these regions is Thrace. The aim of this paper is, firstly, to deal with the music and the dances of Thrace and to highlight through them both the Balkan and the Middle Eastern influence. Secondly, through a listing of music lessons that we have given over recent years in schools and universities of modern Thrace, we examine whether or not music is a useful communication tool, an international language, for pupils and students in Thrace. Finally, we study the influence of these different “traditions” on pupils’ and students’ behavior.

Key words: Thrace; music; dances; multi-cultural influence; national identity; trans-cultural teaching

Resumo (translated from the Portuguese): The geopolitical location of Greece, as well as its historical itinerary through time, transformed the country into a meeting place of European, North African and Middle Eastern cultures. Fables, beliefs and religious ceremonies, linguistic elements, traditional dances and the music of the different regions of the Hellenic space bear witness to this cultural convergence. One of these regions is Thrace. The aim of this article is, firstly, to deal with the music and dances of Thrace and to highlight through them the influences of both the Balkans and the Middle East.

Greek Dance and Everyday Nationalism in Contemporary Greece - Kalogeropoulou 55

Greek dance and everyday nationalism in contemporary Greece
Sofia Kalogeropoulou
The University of Otago

ABSTRACT

In this article I explore how dance as an everyday lived experience during community events contributes to constructing national identities. As a researcher living in New Zealand, where issues of hybridity and fluidity of identities in relation to dance are currently a strong focus for discussion, I was inspired to examine dance in my homeland, Greece. In a combination of ethnography and autobiography I examine dance as an embodied practice that physically and culturally manifests the possession of a distinct national identity that can also be used as a means of differentiation. I also draw on the concept of banal nationalism by Michael Billig (1995), which looks at the mundane use of national symbols and its consequences. I argue that while folk dance acts as a uniting device amongst members of national communities, its practice of everyday nationalism can also be transformed into a political ritual that accentuates differences and projects chauvinism and extreme nationalism with a potential for conflict.

INTRODUCTION

A few years ago, when I was still living in Greece, I was invited to my cousin’s farewell party before he went to do his national service. This is a significant rite of passage for a Greek male, marking his transition from childhood to manhood and also fulfilling his obligations towards his country and the state. This dance event celebrates the freedom of civilian life and marks the beginning of a twelve-month period of military training in the Greek army.

Greek Cultures, Traditions and People

GREEK CULTURES, TRADITIONS AND PEOPLE
Paschalis Nikolaou – Fulbright Fellow Greece

Some definitions …when, to define, is to realise connections and significant overlap

◦ What is ‘culture’? “Culture is the characteristics and knowledge of a particular group of people, encompassing language, religion, cuisine, social habits, music and arts […] The word "culture" derives from a French term, which in turn derives from the Latin "colere," which means to tend to the earth and grow, or cultivation and nurture. […] The term "Western culture" has come to define the culture of European countries as well as those that have been heavily influenced by European immigration, such as the United States […] Western culture has its roots in the Classical Period of the Greco-Roman era and the rise of Christianity in the 14th century.”
◦ What do we mean by ‘tradition’?
◦ 1a: an inherited, established, or customary pattern of thought, action, or behavior (such as a religious practice or a social custom)
◦ b: a belief or story or a body of beliefs or stories relating to the past that are commonly accepted as historical though not verifiable …
◦ 2: the handing down of information, beliefs, and customs by word of mouth or by example from one generation to another without written instruction
◦ 3: cultural continuity in social attitudes, customs, and institutions
◦ 4: characteristic manner, method, or style in the best liberal tradition

GREECE: ANCIENT AND MODERN

What we consider ancient Greece was one of the main classical civilizations, making important contributions to philosophy, mathematics, astronomy, and medicine.

The Modern Greek State was founded in 1830, following the revolutionary war against the Ottoman Turks, which started in

Teaching Folk Dance. Successful Steps. INSTITUTION High/Scope Educational Research Foundation, Ypsilanti, MI

DOCUMENT RESUME

ED 429 050
SP 038 379
AUTHOR: Weikart, Phyllis S.
TITLE: Teaching Folk Dance. Successful Steps.
INSTITUTION: High/Scope Educational Research Foundation, Ypsilanti, MI.
ISBN: ISBN-1-57379-008-7
PUB DATE: 1997-00-00
NOTE: 674p.; Accompanying recorded music not available from EDRS.
AVAILABLE FROM: High/Scope Press, High/Scope Educational Research Foundation, 600 North River Street, Ypsilanti, MI 48198-2898; Tel: 313-485-2000; Fax: 313-485-0704.
PUB TYPE: Books (010) -- Guides - Non-Classroom (055)
EDRS PRICE: EDRS Price MF04 Plus Postage. PC Not Available from EDRS.
DESCRIPTORS: *Aesthetic Education; Cultural Activities; Cultural Education; *Dance Education; Elementary Secondary Education; *Folk Culture; Music Education
IDENTIFIERS: *Folk Dance

ABSTRACT

This book is intended for all folk dancers and teachers of folk dance who wish to have a library of beginning and intermediate folk dance. Rhythmic box notations and teaching suggestions accompany all of the beginning and intermediate folk dances in the book. Many choreographies have been added to give beginning dancers more experience with basic dance movements. Along with each dance title is the pronunciation and translation of the dance title, the country of origin, and the "Rhythmically Moving" or "Changing Directions" recording on which the selection can be found. The dance descriptions in this book provide a quick recall of dances and suggested teaching strategies for those who wish to expand their repertoire of dances. The eight chapters include: (1) "Beginning and Intermediate Folk Dance: An Educational Experience"; (2) "Introducing Folk Dance to Beginners"; (3) "Introducing Even and Uneven Folk Dance Steps"; (4) "Intermediate Folk Dance Steps"; (5) "Folk Dance--The Delivery System"; (6) "Folk Dance Descriptions"; (7) "Beginning Folk Dances"; and (8) "Intermediate Folk Dances." Six appendixes conclude the volume.

Evdokia's Zeibekiko

European Scientific Journal, December 2017 edition, Vol.13, No.35, ISSN: 1857-7881 (Print), e-ISSN 1857-7431

The Bouzouki’s Signifiers and Significance Through the Zeibekiko Dance Song: "Evdokia’s Zeibekiko"

Evangelos Saragatsis, Musician, Secondary Education Teacher, Holder of Postgraduate Diploma
Ifigeneia Vamvakidou, Professor at the University of Western Macedonia, Greece

Doi: 10.19044/esj.2017.v13n35p125
URL: http://dx.doi.org/10.19044/esj.2017.v13n35p125

Abstract

The objective of this study is to identify the signifiers and significance of the Zeibekiko dance and, by extension, those of the bouzouki itself, as they emerge through research in the relevant literature and as they are highlighted through their presence in material obtained from movies. The research method followed is the semiotic analysis of the film “Evdokia”, by A. Damianos (1971). In this context, the main focus is placed on the episode/scene where Evdokia’s Zeibekiko is displayed on stage. This ‘polytropic’ (polymodal) material, which consists of listening to, viewing, playing music, and dancing, encompasses a large variety of musicological and gender signifiers that refer to the specific era. The model followed is that of Greimas (1996), as it was used by Lagopoulos & Boklund-Lagopoulou (2016) and Christodoulou (2012), in order to point out the characteristic features expressed by the bouzouki, as a musical instrument, through a representative sample of the zeibekiko dance, as it is illustrated in the homonymous film. The analysis of images, as well as of the language message, leads to the emergence of codes, such as the one referring to gender, and also the symbolic, value, and social codes, and it is found that all these codes agree with the introductory literature research conducted on the zeibekiko dance and the bouzouki.

Glossary Ahengu Shkodran Urban Genre/Repertoire from Shkodër

GLOSSARY

Ahengu shkodran: Urban genre/repertoire from Shkodër, Albania
Aksak: ‘Limping’ asymmetrical rhythm (in Ottoman theory, specifically 2+2+2+3)
Amanedes: Greek-language ‘oriental’ urban genre/repertory
Arabesk: Turkish vocal genre with Arabic influences
Ashiki songs: Albanian songs of Ottoman provenance
Baïdouska: Dance and dance song from Thrace
Čalgiya: Urban ensemble/repertory from the eastern Balkans, especially Macedonia
Cântarea României: Romanian National Song Festival: ‘Singing for Romania’
Chalga: Bulgarian ethno-pop genre
Çifteli: Plucked two-string instrument from Albania and Kosovo
Čoček: Dance and musical genre associated especially with Balkan Roma
Copla: Sephardic popular song similar to, but not identical with, the Spanish genre of the same name
Daouli: Large double-headed drum
Doina: Romanian traditional genre, highly ornamented and in free rhythm
Dromos: Greek term for mode/makam (literally, ‘road’)
Duge pjesme: ‘Long songs’ associated especially with South Slav traditional music
Dvojka: Serbian neo-folk genre
Dvojnica: Double flute found in the Balkans
Echos: A mode within the 8-mode system of Byzantine music theory
Entekhno laïko tragoudhi: Popular art song developed in Greece in the 1960s, combining popular musical idioms and sophisticated poetry
Fanfara: Brass ensemble from the Balkans
Fasil: Suite in Ottoman classical music
Floyera: Traditional shepherd’s flute
Gaida: Bagpipes from the Balkan region
Ganga: Type of traditional singing from the Dinaric Alps
Gazel: Traditional vocal genre from Turkey
Gusle: One-string,

RCA Victor International FPM/FSP 100 Series

RCA Discography Part 26 - By David Edwards, Mike Callahan, and Patrice Eyries. © 2018 by Mike Callahan

RCA Victor International FPM/FSP 100 Series

FPM/FSP 100 – Neapolitan Mandolins – Various Artists [1961]
Torna A Surriento/Anema E Core/'A Tazza 'E Caffe/Funiculi Funicula/Voce 'E Notte/Santa Lucia/'Na Marzianina A Napule/'O Sole Mio/Oi Mari/Guaglione/Lazzarella/'O Mare Canta/Marechiaro/Tarantella D'o Pazzariello

FPM/FSP 101 – Josephine Baker – Josephine Baker [1961]
J'ai Deux Amours/Ca C'est Paris/Sur Les Quais Du Vieux Paris/Sous Les Ponts De Paris/La Seine/Mon Paris/C'est Paris/En Avril À Paris, April In Paris, Paris Tour Eiffel/Medley: Sous Les Toits De Paris, Sous Le Ciel De Paris, La Romance De Paris, Fleur De Paris

FPM/FSP 102 – Los Chakachas – Los Chakachas [1961]
Never on Sunday/Chocolate/Ca C’est Du Poulet/Venus/Chouchou/Negra Mi Cha Cha Cha/I Ikosara/Mucho Tequila/Ay Mulata/Guapacha/Pollo De Carlitos/Asi Va La Vida

FPM/FSP 103 – Magic Violins of Villa Fontana – Magic Violins of Villa Fontana [1961]
Reissue of LPM 1291. Un Del di Vedremo/Rubrica de Amor/Gigi/Alborada/Tchaikovsky Piano Concerto No. 1/Medley/Estrellita/Xochimilco/Adolorido/Carousel Waltz/June is Bustin’ Out All Over/If I Loved You/O Sole Mio/Santa Lucia/Tarantella

FPM/FSP 104 – Ave a Go Wiv the Buskers – Buskers [1961]
Glorious Beer/The Sunshine of Paradise Alley/She Told Me to Meet Her at the Gate/Nellie Dean/After the Ball/I Do Like to Be Beside the Seaside//Any Old Iron/Down At the Old Bull and Bush/Boiled Beef and Carrots/The Sunshine of Your Smile/Wot Cher