Design Management and Professional Practice

Total pages: 16

File type: PDF, Size: 1020 KB

Recommended publications

Službeni List Grada Subotice

POSTAGE PAID, PRINTED MATTER, AT POST OFFICE 24000 SUBOTICA. SLUŽBENI LIST GRADA SUBOTICE (Official Gazette of the City of Subotica), NUMBER: 22, YEAR: XLVII, DATE: 25 May 2011, PRICE: 87.00 DIN. Pursuant to Articles 22 and 26 of the Decision on Local Communities (consolidated text) («Službeni list Grada Subotica», No. 17/2009 - consolidated text, and No. 26/2009) and Article 24 of the Instructions for Conducting Elections for Members of Local Community Assemblies («Službeni list Grada Subotica», No. 12/2011), the Election Commission of the City of Subotica, at its session held on 25 May 2011, adopted the CONSOLIDATED ELECTORAL LIST OF CANDIDATES FOR THE ELECTION OF MEMBERS OF THE ASSEMBLY OF THE ALEKSANDROVO LOCAL COMMUNITY. I. The consolidated electoral list of candidates for the election of members of the Assembly of the Aleksandrovo Local Community, to be held on 5 June 2011, consists of the following electoral lists with the following candidates: 1.) Name of the electoral list: DEMOKRATSKA STRANKA – BOŽIDAR KRSTIĆ. Submitter of the electoral list: Demokratska stranka (Democratic Party). Candidates: 1. Božidar Krstić, 1953, electrical engineer, Subotica, Vladimira Rolovića 22 2. Danijel Horvat, 1984, graduate economist, Subotica, Jovana Pačua 43 3. Jadranka Vuković, 1957, grammar school graduate, Subotica, Aksentija Marodića 62 4. Slobodan Bukvić, 1974, mathematical programming associate, Subotica, Josipa Lihta 13 5. Vlado Nimčević, 1964, agricultural technician, Subotica, Kamenička 16 6. Ljiljana Majlat, 1962, economic technician, Subotica, Josipa Lihta 64 7. Dragoljub Vesić, 1942, pensioner, Subotica, Trg Paje Jovanovića 3 8. Savo Marčetić, 1961, bookkeeper, Subotica, Vojislava Ilića 67 9. Judit Radakov, 1969, medical technician (nurse), Subotica, Josipa Lihta 54 10. Vjekoslav Šarčević, 1984, student, Subotica, Jelene Čović 69 11. -

S^Flnoiuersary Sqibcbrfnion $19,99

>4 - EVEWINO HERALD, niiiri.. June U. ItK \ Bolton High School names top seniors BOLTON ^— Adam Cellarte lle r, PhHaChris RinliRich, Rensselaer Math ASt l^ienreScience Award,Award th«the wi._ u#__ ______ j . ___. Misa Manning and Anastasia Morianos went to Laurie Manning for highest poten Teresa Landrey, Martin Fergoaon, and Yale Gub of Hartford Book Award, and and for outstanding service to the senior Lori Sheets have been named as the five was made a member of tbe Sociedad tial in achievement in shorthand; Laurie class, Ann CIscon, Sara Waddell, and Gagnon, outstanding accomplishment in m top scholars in this year’s graduati^ Honoraria Hispanica. Winkler. Winkler was also awarded the class at Bolton High School. The first two Other graduates named as Connecticut advanced accounting and for outstanding outstanding sportsman award. accomplishment in the business education » I hold the title of valedictorian and State Scholars were Ferguson and Miss Named for outstanding efforts and salutatorian. curriculum. Penny Gillum was given the Landrey, Miss Landrey also received the achievement in Spanish II was Heather Teller has also been hbnored by being Brlarwood College Book Award for a .... ; ■ - •: University of Connecticut Alumni Briggs and for excellence in Spanish I, V named a Presidential Scholar and he and Association Award. promising junior planning a career in « . Kate Shorey. business. Rich are National Merit Scholarship Suzanne Fenton was presented with the Sara Waddell was named for four years * winners. Harvard Book Award and Seth Teller was Miss Manning was cited for placing of outstanding achievement in all areas of third in the state shorthand competition Teller was also awarded the Bausch St cited for bis high score on the National home economics and for outstanding im Lomb science award, was cited for Merit Scholarship Qualifying Test. -

2019 FACULTY SCHOLARSHIP REPORT DESIGN and LAYOUT Nick Paulus Keara Mangan Lucy Zhang

2019 FACULTY SCHOLARSHIP REPORT DESIGN AND LAYOUT Nick Paulus Keara Mangan Lucy Zhang PHOTOGRAPHY Elizabeth Torgerson-Lamark/RIT PUBLISHED BY The Wallace Center - RIT Open Access Publishing OFFICE OF THE PROVOST www.rit.edu/provost/ 1 TABLE OF CONTENTS 03 Letter From The Provost Golisano College of Computing 04 & Information Sciences 20 College of Art & Design 32 College of Engineering Technology 40 College of Health Sciences & Technology 46 College of Liberal Arts 66 College of Science 90 Golisano Institute for Sustainability 96 International Campuses 106 Kate Gleason College of Engineering 126 National Technical Institute for the Deaf 150 Saunders College of Business 160 School of Individualized Study Letter from the Provost I am very pleased to present the 2019 Faculty Scholarship Report. This compendium of scholarly work by RIT faculty and students represents the best of who we are as scholars and creative artists. The report provides several examples of exceptional individual and group achievements. It contains a list of the awards won, articles and books published, juried shows exhibited, and editorships undertaken by faculty members and students in 2019. I hope you take a few minutes to review the work and take pride in your own and others’ accomplishments. The scholarly and artistic efforts within this report each contribute to RIT’s growing national reputation, and many have direct and positive impacts on communities here in western New York and around the world. This work, which ranges from developing computational systems that improve care for patients with heart disease, to understanding the role of cultural and linguistic diversity for those who are Deaf and hard-of-hearing, to discovering the closest exoplanet to Earth, puts RIT and its research at the heart of some of the world’s most technical, scientific, and social challenges. -

1919–1930) Veliki Beležnik (1922–1924

Историјски архив Суботица – Istorijski arhiv Subotica – Történelmi Levéltár – The Historical Archives of Subotica. SCHOLARLY AND INFORMATIONAL FINDING AIDS FOR ARCHIVAL HOLDINGS. ANALYTICAL INVENTORY: VELIKI BELEŽNIK (Chief Notary) (1919–1930), VOLUME 3: VELIKI BELEŽNIK (1922–1924). Stevan Mačković, archival adviser. Суботица – Subotica – Szabadka, 2014. CONTENTS: ANALYTICAL DESCRIPTIONS, NAME INDEX, SUBJECT INDEX, GEOGRAPHICAL INDEX. ANALYTICAL DESCRIPTIONS F. 47, 1210, 1211, 1212, 1213, 1214, 1215 Veliki beležnik, 1922–1924 (I, II, III, IV). Serial numbers 1–756. 1. F. 47, IV 39/1922. Petition of the widow of Ištvan Gencel (Göncöl), née Katalina Renčar, residing in Palić, Villa Fuks, for the issuance of a certificate of domicile for the purpose of opting for Hungary. Decision confirming that the petitioner, on the basis of paragraph 6 of Act XXII of 1886, has domicile in Subotica. L:6 2. F. 47, IV 41/1922. Approved petition of Mihajlo Rem (Rehm), coppersmith, for a certificate of domicile for the purpose of opting for citizenship of the KSHSS. L:10 3. F. 47, IV 43/1922. Approved petition of Mihajlo Kladek, teacher, for a certificate of domicile for his son Mirko Kladek, for the purpose of admission as a pharmacist's assistant. L:3 4. F. 47, IV 46/1922. Approved petition of Herman Štern (b. 1895), a native of Mako in Hungary, for a certificate that he voluntarily reported for military service and is entered in the recruitment list under number 1375. L:2 5. F. 47, IV 71/1922. Approved petition of the printing house „Minerva d.d.“ for a certificate that the persons who are shareholders of that firm (dr Franjo Fenjveš, Ljudevit Fenjveš, dr Jako Fišer, Ignjat Fridman, dr Velimir Kalmar, Emil Makai, Sava Janković, Mirko Havas, Arnold Reves, Ljudevit Šreger, Matika Lidert) have not opted for Hungary. -

Please Carefully Review This Objection and the Attachments Hereto to Determine Whether This Objection Affects Your Claim(S)

HEARING DATE AND TIME: February 9, 2011 at 9:45 a.m. (Eastern Time) RESPONSE DEADLINE: February 2, 2011 at 4:00 p.m. (Eastern Time) PLEASE CAREFULLY REVIEW THIS OBJECTION AND THE ATTACHMENTS HERETO TO DETERMINE WHETHER THIS OBJECTION AFFECTS YOUR CLAIM(S) Harvey R. Miller Stephen Karotkin Joseph H. Smolinsky WEIL, GOTSHAL & MANGES LLP 767 Fifth Avenue New York, New York 10153 Telephone: (212) 310-8000 Facsimile: (212) 310-8007 Attorneys for Debtors and Debtors in Possession UNITED STATES BANKRUPTCY COURT SOUTHERN DISTRICT OF NEW YORK In re MOTORS LIQUIDATION COMPANY, et al., f/k/a General Motors Corp., et al., Debtors. Chapter 11 Case No. 09-50026 (REG) (Jointly Administered) NOTICE OF DEBTORS’ 138TH OMNIBUS OBJECTION TO CLAIMS (Eurobond Deutsche Debt Claims) PLEASE TAKE NOTICE that on December 23, 2010, Motors Liquidation Company (f/k/a General Motors Corporation) and its affiliated debtors, as debtors in possession (the “Debtors”), filed their 138th omnibus objection to claims (the “Objection”), and that a hearing (the “Hearing”) to consider the Objection will be held before the Honorable Robert E. Gerber, United States Bankruptcy Judge, in Room 621 of the United States Bankruptcy Court for the Southern District of New York, One Bowling Green, New York, New York 10004, on February 9, 2011 at 9:45 a.m. (Eastern Time), or as soon thereafter as counsel may be heard. This Objection does not affect a Claimant’s ability to receive distributions as a beneficial bondholder under the Debtors’ Amended Joint Chapter 11 Plan (ECF No. -

Hitler Escapou Ileso Do Atentado De Munich

¦¦•¦¦:.-.*•-••¦ • -¦ .... -.--.•¦'-;.¦ •--. •.. -•-•-¦• .:ee.. - •-..-.. •¦- ..¦¦¦¦¦-.-:•¦-¦.•-.-•••JORNAL DO.¦¦ ., BRASIL.•"-¦• -."'...-"..' .,., .- ANOXLIX || N. 266 RIO DE JANEIRO — SEXTA-FEIRA, 10 DE NOVEMBRO DE 1939 || EDIÇÃO -- 20 PAGINAS do empregada DIRETORES í para PREC1SA-SF.todo c serviço, sabendo cozi- Presidente, B. rcrcirn Carneiro. nhar, pura um cisai. Exlgem-.o Tesoureiro, i. Vire. do Hio. refurcnclns: á rua 19 de FeveiMl- Secret.0 Aníbal Freire da Fonseca. ileso atentado de Munich ro n. 138r Botafogo. Tratar das do 8 hs 10 Hitler escapou horas.(24237)3 110-112 AV-.N1DA HIO BRANCO PAdlNA ¦ do uma empregada 22-1818 Meca telefônica PRECISA-SE. de 30 a 35 anos, com pratica, A' noite: Das .3 hs. às 7 boras: multo limpa e de toda a conílon- Redação «-«"£ INUNDAÇÃO DE SUA PRINCIPAL DE ça, para arumadelia c levar umi. Reportagem pollolal .. 22-0310 A HOLANDA INICIA A LINHA DEFESA menina a escola. Deve gostar de Pnmrla 22-1782 PAGINA ¦ lugar sossegado. Estrada da Ga- vea n. 50. Tel. 27-6230. (74614)3 end. telg. JOHBRASIL dc uma mocinha, CHAMBERLAIN DECLARA OS ALIADOS NÃO DEPORÃO, AINDA, AS ARMAS 1VRECISA-SEentre 16 a 18 anos, para aju- ASSINATURAS QÜE dar em serviços leves, om casa d« PAGINA t-trasll, America e Espanha: 1 pequena fnmllla; Avenlda Rio Ano, 908000 Semestre 508000. Branco n. 59. 2° andar. (84415)3 França e Alemanha, Bélgica emprega- lOOSOOO RECISA-SE de uma Portu-jal Semestre 0 MINISTRO DAS FINANÇAS DA FRANÇA AFIRMA QUE SE A NEUTRALIDADE DOS EE. UU. da, á rua Engenho de Dontro Outros países: Semestre 2008000 n. 174, loja_.'(7998B)3 de uma empregada DE PRECISA-SE À assinatura pôde começar em VIER A SER VIOLADA NÂO SERA'POR QUALQUER ATO DE SEU PAIS OU SEUS ALIADOS para todos os serviços, m.no» qualquer dia. -

Bethell Hospice Newsletter Spring 2019 FINAL

FROM THE BETHELL HOSPICE FOUNDATION . SPRING 2019 ISSUE Tree Dedication Marjorie Webster Lamb Marjorie Webster Lamb was a resident of the Morning Glory Room at Bethell Hospice from May 13 to July 21, 2018. Marjorie was a breath of sunshine, and it was evident to everyone that she shared a special bond with her husband, Richard. Marjorie cherished her time at Bethell Hospice. She adored the nursing staff and the excellent care she received, and characterized her stay as one of profound peace. She shared many wonderful conversations and even wrote some lovely poetry, including which is now framed in Bethell Hospice as a tribute to the entire care team. During her stay, Marjorie and Richard decided to make a lasting tribute by dedicating a tree in Marjorie’s memory on the Bethell Hospice grounds. They selected a beautiful Japanese Lilac that reminded Marjorie of the trees in her garden at home. It is only fitting that a poem she wrote to Richard is immortalized on her tree dedication plaque: My promise I will keep to you Green pastures await you Still waters are there Just walk round the corner I wait for you there To find out more about tree dedication opportunities at Bethell Hospice, please contact: Jeannette Vanden Heuvel at 905-838-3534, ext. 2251, or [email protected]. Here when you need us at Bethell Hospice Overwhelming Light & Love Support Hundreds of friends of Bethell Hospice gathered in Inglewood on December 13 to light the Bethell Hospice trees in memory of their loved ones. We are thrilled to announce that our 2018 Celebration of Light & Love raised over $170,000 in support of Bethell Hospice Foundation. -

Title Items-In-Public Relations Files - Luncheons, Dinners and Receptions - Volumes XV, XVI, XVII

UN Secretariat Item Scan - Barcode - Record Title Page 37 Date 08/06/2006 Time 11:11:50 AM S-0864-0006-03-00001 Expanded Number S-0864-0006-03-00001 Title items-in-Public relations files - luncheons, dinners and receptions - Volumes XV, XVI, XVII Date Created 30/07/1969 Record Type Archival Item Container s-0864-0006: Public Relations Files of the Secretary-General: U Thant Print Name of Person Submit Image Signature of Person Submit Protocol and Liaison Luncheon given by the Secretary-General to bid farewell to His Excellency Mr. Mohamed Awad El Kony and His Excellency Mr. Bohdan Tomorowicz Wednesday, 50 July 1969 H.E. Mr. Buffurn M. Chayet Mr. Stavropoulos x x x Sr. Rolz-Bennett c Chief Adebo H.E. M. Quid Daddah c H.E. Mr. Agha Shahi H.E. Mr. Sen £ H.E. Mr. Tomorovica H.E. Mr. El Kony - The Secretary-General H.E. U Soe Tin H.E. M. Huot Sambath ^E. Sr. Cuevas Cancino 3 c H.E. Mr. Ogebe Ogbu Mr. Narasimhan c Mr. Nosek H.E. Mr. Zakharov M. Tabiti Mr. Hildyard SG luncheon to bid farewell to H.E. Mr. Mohamed Awad El Kony and H.E. Mr. Bohdan Tomorowicz, Wednesday, JO July 1969., 1.1$ p.m., 58th floor v/ t^.\ * (1.. v \f HU^ "',. « - .^. ;!-, Vj /H.E. Mr. Mohamed Awad El Kony - UAR /\ i j w'H.E. Mr. Bohdan Tomorowicz - Poland ^ -/'H.E. Mr. William B. Buffiim - United States /H.E. Mr. A.V. Zakharov - USSR .X Mr. David H.T. Hildyard - United Kingdom no H-.-E-.- M-.- -Arfiiattd- -BeVard— i'i-aB.-ee- (away) no HTE. -

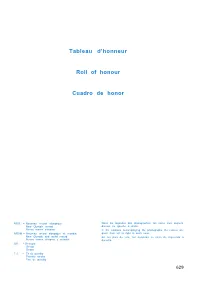

Tableau d’honneur

Tableau d’honneur / Roll of honour / Cuadro de honor. NRO = Nouveau record olympique / New Olympic record / Nueva marca olimpica. NROM = Nouveau record olympique et mondial / New Olympic and world record / Nueva marca olimpica y mundial. GR = Groupe / Group / Grupo. T. S. = Tir de penalty / Penalty stroke / Tiro de penalty. Dans les légendes des photographies, les noms sont toujours donnés de gauche à droite. In the captions accompanying the photographs the names are given from left to right in each case. En los pies de foto, los nombres se citan de izquierda a derecha. Athlétisme / Athletics / Atletismo. 1. Hommes - Men - Hombres ● 100 m 1. Carl Lewis (USA) 9”99 2. Sam Graddy (USA) 10”19 3. Ben Johnson (CAN) 10”22 4. Ron Brown (USA) 10”26 5. Michael Mc Farlane (GBR) 10”27 6. Ray Stewart (JAM) 10”29 ● 5000 m 1. Said Aouita (MAR) (NRO) 13’05”59 2. Markus Ryffel (SUI) 13’07”54 3. Antonio Leitao (POR) 13’09”20 4. Tim Hutchings (GBR) 13’11”50 5. Paul Kipkoech (KEN) 13’14”40 6. Charles Cheruiyot (KEN) 13’18”41 ● 10 000 m 1. Alberto Cova (ITA) 27’47”54 2. Michael Mc Leod (GBR) 28’06”22 3. Mike Musyoki (KEN) 28’06”46 4. Salvatore Antibo (ITA) 28’06”50 5. Christoph Herle (FRG) 28’08”21 6. Sosthenes Bitok (KEN) 28’09”01 ● 110 m haies, hurdles, vallas 1. Roger Kingdom (USA) (NRO) 13”20 2. Greg Foster (USA) 13”23 3. Arto Bryggare (FIN) 13”40 4. Mark McKoy (CAN) 13”45 5. -

Results from the Games of the XXIIIrd Olympic Games

Tableau d’honneur Roll of honour Cuadro de honor NRO = Nouveau record olympique Dans les légendes des photographies, les noms sont toujours New Olympic record donnés de gauche à droite. Nueva marca olimpica. In the captions accompanying the photographs the names are NROM = Nouveau record olympique et mondial given from left to right in each case. New Olympic and world record En los pies de foto, los nombres se citan de izquierda a Nueva marca olimpica y mundial derecha GR = Groupe Group Grupo. T.S. = Tir de penalty Penalty stroke Tiro de penalty. 629 • 5000 m 1. Said Aouita (MAR) (NRO) 13’05”59 Athlétisme 2. Markus Ryffel (SUI) 13'07"54 3. Antonio Leitao (POR) 13’09”20 Athletics 4. Tim Hutchings (GBR) 13’11”50 5. Paul Kipkoech (KEN) 13’14”40 Atletismo 6. Charles Cheruiyot (KEN) 13’18”41 • 10 000 m 1. Alberto Cova (ITA) 27’47”54 • 10 000 m 2. Michael MC Leod (GBR) 28’06”22 3. Mike Musyoki (KEN) 28’06”46 1. Alberto Cova (ITA) 27'47"54 2. 4. Salvatore Antibo (ITA) 28'06"50 Martti Vainio (FIN) 27'51"10 Michael MC Leod (GBR) 5. Christoph Herle (FRG) 28'08"21 3. 28'06"22 4. 6. Sosthenes Bitok (KEN) 28’09”01 Mike Musyoki (KEN) 28'06"46 5. Salvatore Antibo (ITA) 28'06"50 6. Christoph Herle (FRG) 28'08"21 • 100 m 1. Carl Lewis (USA) 9”99 • 110 m haies, hurdles, vallas 2. Sam Graddy (USA) 10”19 1. Roger Kingdom (USA) (NRO) 13”20 3. -

2016 Donacije Srps Engl 16 (2)1

NATIONAL BANK OF SERBIA Foreign Exchange Department SPECIFICATION OF DONATIONS IN FAVOUR OF GOVERNMENT BODIES AND ORGANIZATIONS PAID THROUGH THE NBS ACCOUNTS ABROAD IN 2016 No DONOR AMOUNT IN USD BREAKDOWN BY ORIGINAL CURRENCY; BENEFICIARY 1. APRE - AGENZIA PER LA PROMOZIONE 907.55 EUR 833.00 DELLA RICERCA EUROPEA -Donation to the Ministry of Construction, Transport and Infrastructure, Belgrade VIA CAVOUR 71 00184 ROMA, ITALY January 04, 2016 2. EUROPEAN COMMISSION 7,965.03 EUR 7,310.72 DIRECTORATE GENERAL BUDGET -Donation to the Tourist Organization "Zlatibor", Zlatibor AVENUE D'AUDERGHEM 19 BE-1049 BRUXELLES January 04, 2016 3. EUROPEAN COMMISSION 106,471.08 EUR 97,724.76 DIRECTORATE GENERAL BUDGET -Donation to the Municipality of Bela Palanka AVENUE D'AUDERGHEM 19 BE-1049 BRUXELLES January 04, 2016 4. EUROPEAN COMMISSION 102,289.69 EUR 93,886.86 DIRECTORATE GENERAL BUDGET -Donation to the Municipality of Ruma AVENUE D'AUDERGHEM 19 BE-1049 BRUXELLES January 04, 2016 5. EUROPEAN COMMISSION 132,764.94 EUR 121,858.65 DIRECTORATE GENERAL BUDGET -Donation to the Municipality of Batočina AVENUE D'AUDERGHEM 19 BE-1049 BRUXELLES January 04, 2016 6. EUROPEAN COMMISSION 71,339.76 EUR 65,479.38 DIRECTORATE GENERAL BUDGET -Donation to the City of Valjevo AVENUE D'AUDERGHEM 19 BE-1049 BRUXELLES January 04, 2016 7. EUROPEAN COMMISSION 99,825.94 EUR 91,625.50 DIRECTORATE GENERAL BUDGET -Donation to the Municipality of Varvarin AVENUE D'AUDERGHEM 19 BE-1049 BRUXELLES January 04, 2016 8. EUROPEAN COMMISSION 24,479.23 EUR 22,596.90 DIRECTORATE GENERAL BUDGET -Donation to the Municipality of Stara Pazova AVENUE D'AUDERGHEM 19 BE-1049 BRUXELLES January 05, 2016 9. -

Proquest Dissertations

QUEEN JADWIGA IN HISTORY AND LEGEND by Sigmund John Sluszka A Contribution to the Study of the XIV-XV Century History of Poland A thesis submitted to the Faculty of Arts of the University of Ottawa in partial fulfillment of the requirements for the degree of Doctor of Philosophy. Ottawa, Canada, 1950 UMI Number: DC53563 INFORMATION TO USERS The quality of this reproduction is dependent upon the quality of the copy submitted. Broken or indistinct print, colored or poor quality illustrations and photographs, print bleed-through, substandard margins, and improper alignment can adversely affect reproduction. In the unlikely event that the author did not send a complete manuscript and there are missing pages, these will be noted. Also, if unauthorized copyright material had to be removed, a note will indicate the deletion. UMI® UMI Microform DC53563 Copyright 2011 by ProQuest LLC All rights reserved. This microform edition is protected against unauthorized copying under Title 17, United States Code. ProQuest LLC 789 East Eisenhower Parkway P.O. Box 1346 Ann Arbor, MI 48106-1346 CURRICULUM STUDIORUM Sigmund John Sluszka was born on August 27, 1911, in Hempstead, New York, U.S.A. In June, 1935 the A.B. degree was awarded by Notre Dame University, Notre Dame, Indiana. After one year of graduate study in the Wolna Wszechnica Polska, Warsaw, Poland, a certificate of study was given in June, 1936. The M.A. degree was granted in 193§ by New York University; the Master's thesis submitted was An Estimate of America as Evidenced in Henryk Sienkiewicz's Letters.