Development of a Low-Cost Multi-Camera Star Tracker for Small Satellites

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Small Satellite Platform Imaging X-Ray Polarimetry Explorer (IXPE

Small Satellite Platform Imaging X-Ray Polarimetry Explorer (IXPE) Mission Concept and Implementation (SSC18-V-XX) 32nd Annual Small Satellite Conference, Logan, UT, USA 4-9 August 2018 7 August: 14:00 – 14:15 Presented by: Dr. William Deininger Ball Aerospace This work was authored by employees of Ball Aerospace under Contract No.NNM16581489R with the National Aeronautics and Space Administration. The United States Government retains and the publisher, by accepting the article for publication, acknowledges that the United States Government retains a non-exclusive, paid-up, irrevocable, worldwide license to reproduce, prepare derivative works, distribute copies to the public, and perform publicly and display publicly, or allow others to do so, for United States Government purposes. All other rights are reserved by the copyright owner. CO-AUTHORS: William Kalinowski – IXPE Spacecraft Lead and C&DH Lead, Ball; Scott Mitchell – IXPE Mission Design Lead, Ball; Sarah Schindhelm – IXPE Structural Design Lead, Ball; Jeff Bladt – IXPE ADCS Lead, Ball; Zach Allen – IXPE EPDS and Electrical Systems Lead, Ball; Janice Houston – IXPE Lead Systems Engineer, MSFC; Kyle Bygott – IXPE Spacecraft FSW Lead, Ball; Brian Ramsey – IXPE Deputy Principal Investigator (DPI), MSFC; Brian Smith – IXPE AI&T Manager, Ball; Colin Peterson – IXPE Mission Operations Manager, Ball; Stephen O’Dell – IXPE Project Scientist, MSFC; Michele Foster – IXPE V&V Engineer, MSFC; Spencer Antoniak – IXPE Launch Services, Ball; Ettore Del Monte – IXPE Instrument Systems Engineering Lead, IAPS/INAF; Jim Masciarelli – IXPE Metrology and Payload Systems Lead, Ball; Francesco Santoli – IXPE Instrument Systems Engineering, IAPS/INAF; Jennifer Erickson – IXPE Requirement Lead, Ball; Sandra Johnson – IXPE Telecommunications -

Method to Increase the Accuracy of Large Crankshaft Geometry Measurements Using Counterweights to Minimize Elastic Deformations

applied sciences Article Method to Increase the Accuracy of Large Crankshaft Geometry Measurements Using Counterweights to Minimize Elastic Deformations Leszek Chybowski *, Krzysztof Nozdrzykowski, Zenon Grządziel, Andrzej Jakubowski and Wojciech Przetakiewicz Faculty of Marine Engineering, Maritime University of Szczecin, ul. Willowa 2-4, 71-650 Szczecin, Poland; [email protected] (K.N.); [email protected] (Z.G.); [email protected] (A.J.); [email protected] (W.P.) * Correspondence: [email protected]; Tel.: +48-914-809-412 Received: 26 May 2020; Accepted: 7 July 2020; Published: 9 July 2020 Abstract: Large crankshafts are highly susceptible to flexural deformation that causes them to undergo elastic deformation as they revolve, resulting in incorrect geometric measurements. Additional structural elements (counterweights) are used to stabilize the forces at the supports that fix the shaft during measurements. This article describes the use of temporary counterweights during measurements and presents the specifications of the measurement system and method. The effect of the proposed solution on the elastic deflection of a shaft was simulated with FEA, which showed that the solution provides constant reaction forces and ensures nearly zero deflection at the supported main journals of a shaft during its rotation (during its geometry measurement). The article also presents an example of a design solution for a single counterweight. Keywords: large crankshafts; minimization of elastic deformation; increasing measurement precision; device stabilizing reaction forces; measurement procedures 1. Introduction The geometries of small machine components are easily measured because they are very common in mechanical engineering, and the relevant measurement instruments are available [1,2]. -

Development of the CENTURION Jet Fuel Aircraft Engines at TAE

Development of the CENTURION Jet Fuel Aircraft Engines at TAE 25th April 2003, Friedrichshafen, Germany Thielert Group The Thielert group has been developing and manufacturing components for high performance engines, as well as special parts with complex geometries made from high-quality super alloys for various applications. Our engineers are developing solutions to optimize internal combustion engines for the racing-, prototyping- and the aircraft industry. We specialize in manufacturing, design, analysis, R&D, prototyping and testing. We work with our customers from the initial concept through to production. www.Thielert.com Company Structure Thielert AG Thielert Motoren GmbH Thielert Aircraft Engines GmbH Hamburg Lichtenstein High-Performance Engines, Manufacturing of components and Components and Engine Management parts with complex geometries Systems, Manufacturing of Prototype- and Race Cars Design Organisation according to JAR-21 Subpart JA Production Organisation according to JAR-21 Subpart G Aircraft Engines according to JAR-E Engine installation acc. to JAR-23 [Chart: Turnover, Mio. EUR, 1997–2002; data labels: 3,3; 5,3; 6,9; 9,6; 13,0; 16,2] [Chart: Employees, 1997–2002; data labels: 27; 35; 41; 71; 99; 112] Founded 1989 as a One-Man-Company. Competency has increased steadily due to very low employee turnover.
Company Profile Aviation: Production of jet fuel aircraft engines Production of precision components for the aviation industry (including engine components and structural parts) Development and production of aircraft electronics assemblies (Digital engine control units) Automotive (Prototypes, pilot lots, motor racing): Production of engine components (including high-performance cam-and crank shafts) Development and production of electronic assemblies (Engine control units, control software) Engineering Services (including test facilities, optimisation of engine concepts) Current List of Customers Automotive Bugatti Engineering GmbH, Wolfsburg DaimlerChrysler AG, Untertürkheim Dr. -

Addressing Deep Uncertainty in Space System Development Through Model-Based Adaptive Design by Mark A

Addressing Deep Uncertainty in Space System Development through Model-based Adaptive Design by Mark A. Chodas S.B. Massachusetts Institute of Technology (2012) S.M. Massachusetts Institute of Technology (2014) Submitted to the Department of Aeronautics and Astronautics in partial fulfillment of the requirements for the degree of Doctor of Philosophy at the MASSACHUSETTS INSTITUTE OF TECHNOLOGY June 2019 © Massachusetts Institute of Technology 2019. All rights reserved. Author: Department of Aeronautics and Astronautics, May 23, 2019. Certified by: Olivier L. de Weck, Professor of Aeronautics and Astronautics. Certified by: Rebecca A. Masterson, Principal Research Engineer, Department of Aeronautics and Astronautics. Certified by: Brian C. Williams, Professor of Aeronautics and Astronautics. Certified by: Michel D. Ingham, Jet Propulsion Laboratory. Accepted by: Sertac Karaman, Associate Professor of Aeronautics and Astronautics, Chair, Graduate Program Committee. Addressing Deep Uncertainty in Space System Development through Model-based Adaptive Design by Mark A. Chodas Submitted to the Department of Aeronautics and Astronautics on May 23, 2019, in partial fulfillment of the requirements for the degree of Doctor of Philosophy Abstract When developing a space system, many properties of the design space are initially unknown and are discovered during the development process. Therefore, the problem exhibits deep uncertainty. Deep uncertainty refers to the condition where the full range of outcomes of a decision is not knowable. A key strategy to mitigate deep uncertainty is to update decisions when new information is learned. NASA’s current uncertainty management processes do not emphasize revisiting decisions and therefore are vulnerable to deep uncertainty.
Examples from the development of the James Webb Space Telescope are provided to illustrate these vulnerabilities. -

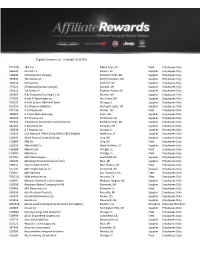

Eligible Company List - Updated 1/21/2021

Eligible Company List - Updated 1/21/2021 F011RQ 18 K Inc Eighty Four, PA Fleet Employees Only S66234 2A USA Inc Auburn, AL Supplier Employees Only S10009 3 Dimensional Services Rochester Hills, MI Supplier Employees Only S65830 3BL Media LLC North Hampton, MA Supplier Employees Only S69510 3D Systems Rock Hill, SC Supplier Employees Only S70521 3R Manufacturing Company Goodell, MI Supplier Employees Only S61313 7th Sense LP Bingham Farms, MI Supplier Employees Only S42897 A & S Industrial Coating Co Inc Warren, MI Supplier Employees Only S73205 A and D Technology Inc Ann Arbor, MI Supplier Employees Only S38187 A Finkl & Sons DBA Finkl Steel Chicago, IL Supplier Employees Only S01250 A G Simpson (USA) Inc Sterling Heights, MI Supplier Employees Only F02130 A G Wassenaar Denver, CO Fleet Employees Only S80904 A J Rose Manufacturing Avon, OH Supplier Employees Only S64720 A P Plasman Inc Fort Payne, AL Supplier Employees Only S62637 A Raymond Tinnerman Automotive Inc Rochester Hills, MI Supplier Employees Only S82162 A Schulman Inc Fairlawn, OH Supplier Employees Only S78336 A T Kearney Inc Chicago, IL Supplier Employees Only S36205 AAA National Office (Only EMPLOYEES Eligible) Heathrow, FL Supplier Employees Only S14541 Aarell Process Controls Group Troy, MI Supplier Employees Only F05894 ABB Inc Cary, NC Fleet Employees Only S10035 Abbott Ball Co West Hartford, CT Supplier Employees Only F66984 Abbott Labs Chicago, IL Fleet Employees Only FOOF92 AbbVie Inc Chicago, IL Fleet Employees Only S57205 ABC Technologies Southfield, MI Supplier -

Development and Implementation of Star Tracker Electronics

DEGREE PROJECT, IN SYSTEMS, CONTROL & ROBOTICS, SECOND LEVEL STOCKHOLM, SWEDEN 2014 Development and Implementation of Star Tracker Electronics MARCUS LINDH KTH ROYAL INSTITUTE OF TECHNOLOGY ELECTRICAL ENGINEERING, SPACE AND PLASMA PHYSICS DEPARTMENT Development and Implementation of Star Tracker Electronics MARCUS LINDH, [email protected] Stockholm 2014 Space and Plasma Physics School of Electrical Engineering Kungliga Tekniska Högskolan XR-EE-SPP 2014:001 Development and Implementation of Star Tracker Electronics Abstract Star trackers are essential instruments commonly used on satellites. They provide precise measurement of the orientation of a satellite and are part of the attitude control system. For cubesats, star trackers need to be small, consume little power and preferably be cheap to manufacture. In this thesis work the electronics for a miniature star tracker have been developed. A star detection algorithm has been implemented in hardware logic, tested and verified. A platform for continued work is presented and future improvements of the current implementation are discussed. Development and Implementation of Electronics for a Star Tracker (Swedish title, translated) Summary (Sammanfattning, translated from Swedish) Star trackers are common instruments on satellites. They provide information about the satellite's orientation with very high precision and are an important part of the satellite's control system. For cubesats these must be small, power-efficient and preferably cheap to manufacture. In this degree project the electronics for such a star tracker have been developed. An algorithm that detects stars has been implemented in hardware, tested and verified. A hardware platform that continued work can build on has been created, and suggestions for improvements are discussed.
Keywords: miniature star tracker, cubesat, real-time blob detection, FPGA image processing, CMOS image sensor, smartfusion2 SoC, attitude control, hardware development Acknowledgements During frustrating and seemingly impossible problems, my supervisors Nicola Schlatter and Nickolay Ivchenko have been of great support. -

Exhibitor List (As of 10/04/2017)

2017 AUSA Annual Meeting & Exposition A Professional Development Forum 9 – 11 October 2017 Walter E. Washington Convention Center | Washington, DC Exhibitor List (as of 10/04/2017) COMPANY BOOTH 3M Company 7243 4C North America 2833 4FRONT Solutions, LLC 8045 A Head For the Future 3926 A.C.S. Industries LTD 2357 AAFMAA 3349 AAR Mobility Systems 3639 Academy of United States Veterans 8330 Accella Tire Fill Systems 3413 Accenture Federal Services 1149 Accenture Federal Services 7543 Accurate Energetic Systems 8043 Adcole Maryland Aerospace, LLC 543 ADS Group Limited 7451 ADS, Inc. 2115 Advanced Composites, Inc. 7811 Advanced Turbine Engine Company 1739 Advantech Corp. 7913 AECOM 3019 AEL JV 7227 AeroGlow International 650 Aerojet Rocketdyne 1405 AEROSERVICES S.A. 7229 AeroVironment, Inc. 201 Agility, Defense & Government Services 1714 Aimpoint, Inc. 3404 Air Radiators 704 Airborne Systems 6114 Airborne Systems Europe 252 AIRBUS 1139 AirTronic USA, LLC 3705 AITECH Defense Systems, Inc. 6015 Al Qabandi United Co. W.L.L. 1666 Alacran 2313 Alaska Structures, Inc. 2243 Allison Transmission, Inc. 1033 ALLJACK Technologies, Inc. 3533 ALPHA SYSTEMS 7221 ALTUS LSA 7231 Alumni Association of ICAF & ES 667 AM General 1439 AMC & ASA(ALT) 7515 Amerex Defense 7211 American Hearing Benefits 307 American Military University 3604 American Red Cross 1531 American-Hellenic Chamber of Commerce 7228 AmeriForce Media, LLC, a SDVOSB 1629 Amphenol 8235 AmSafe Bridport 7015 AmSafe, Inc. 7114 Analog Devices 7922 Antrica 7355 AOA Medical, Inc. 647 AP Lazer 8234 APPI – TECHNOLOGY 1841 Applied Companies 8124 APV Corporation 704 Aqua Innovations Ltd. 7915 AQYR Tech 8011 AR Modular RF 539 Arch Global Precision 1366 Arconic 1949 Argon Corporation 1867 Arlington National Cemetery 969 Armada International/Asian Military Review 3624 Armed Forces Insurance 7910 Armor Australia 704 Armor USA, Inc. -

Mass-Peculiarities: 2017 | an EMPLOYER’S GUIDE to WAGE & HOUR LAW in the BAY STATE

Mass-Peculiarities: 2017 | AN EMPLOYER’S GUIDE TO WAGE & HOUR LAW IN THE BAY STATE 3RD EDITION Authored by the Wage & Hour Litigation Practice Group | Seyfarth Shaw LLP, Boston Office MASSACHUSETTS PECULIARITIES An Employer’s Guide to Wage & Hour Law in the Bay State ____________________________________________________________________________ Third Edition Editors in Chief C.J. Eaton Cindy Westervelt Wage & Hour Litigation Practice Group Seyfarth Shaw LLP Boston, Massachusetts Richard L. Alfred, Chair and Senior Editor Patrick J. Bannon Hillary J. Massey Anne S. Bider Kristin G. McGurn Timothy J. Buckley Barry J. Miller Anthony S. Califano Kelsey P. Montgomery Ariel D. Cudkowicz Molly Clayton Mooney C.J. Eaton Alison H. Silveira Robert A. Fisher Dawn Reddy Solowey Beth G. Foley Lauren S. Wachsman James M. Hlawek Cindy Westervelt Bridget M. Maricich Jean M. Wilson Seyfarth Shaw LLP Two Seaport Lane, Suite 300 Boston, Massachusetts 02210 (617) 946-4800 www.seyfarth.com © 2017 Seyfarth Shaw LLP All Rights Reserved Legal Notice Copyrighted © 2017 SEYFARTH SHAW LLP. All rights reserved. Apart from any fair use for the purpose of private study or research permitted under applicable copyright laws, no part of this publication may be reproduced or transmitted by any means without the prior written permission of Seyfarth Shaw LLP. Important Disclaimer This publication is in the nature of general commentary only. It is not legal advice on any specific issue. The authors disclaim liability to any person in respect of anything done or omitted in reliance upon the contents of this publication. Readers should refrain from acting on the basis of any discussion contained in this publication without obtaining specific legal advice on the particular circumstances at issue. -

Affiliate Rewards Eligible Companies

Affiliate Rewards Eligible Companies Program ID's: 2013MY 2014MY Designated Corporate Customer 28HDR 28HER Fleet Company 28HDH 28HEH Supplier Company 28HDJ 28HEJ Company Name Company Type 3 Point Machine SUPPLIER 3-Dimensional Services SUPPLIER 3M Employee Transportation & Travel FLEET 84 Lumber Company DCC A & R Security Services, Inc. FLEET A G Manufacturing SUPPLIER A G Simpson Automotive Inc SUPPLIER A I M CORPORATION SUPPLIER A M G INDUSTRIES INC SUPPLIER A&D Technology Inc SUPPLIER A&E Television Networks DCC A. Raymond Tinnerman Automotive Inc SUPPLIER A. Schulman Inc SUPPLIER A.J. Rose Manufacturing SUPPLIER A.M Community Credit Union DCC A.T. Kearney, Inc. SUPPLIER A-1 SPECIALIZED SERVICES SUPPLIER AAA East Central DCC AAA National Office (Only EMPLOYEES Eligible, Not Members) SUPPLIER AAA Ohio Auto Club DCC ABB, Inc. FLEET Abbott Ball Co SUPPLIER Abbott Labs FLEET Abbott, Nicholson, Quilter, Esshaki & Youngblood P DCC Abby Farm Supply, Inc DCC ABC GROUP-CANADA SUPPLIER Abednego Environmental Services SUPPLIER Abercrombie & Fitch FLEET ABF Freight System Inc SUPPLIER ABM Industries, Inc. FLEET AboveNet FLEET ABP Induction SUPPLIER ABRASIVE DIAMOND TOOL COMPANY SUPPLIER ABT Electronics, Inc FLEET ACCENTURE SUPPLIER Access Fund SUPPLIER Acciona Energy North America Corporation FLEET Accor North America FLEET Accu-Die & Mold Inc SUPPLIER Accumetric, LLC SUPPLIER ACCURATE MACHINE AND TOOL CORP SUPPLIER Accurate Technologies Inc SUPPLIER Accuride Corporation SUPPLIER Ace Hardware Corporation FLEET ACE PRODUCTS INC SUPPLIER ACG Direct Inc. SUPPLIER ACME GROOVING TOOL COMPANY SUPPLIER ACME MANUFACTURING COMPANY SUPPLIER ACOUSTEK NONWOVENS SUPPLIER ACRO-FEED INDUSTRIES INC SUPPLIER ACS INDUSTRIES INC SUPPLIER ACT ASSOCIATES SUPPLIER Actavis FLEET Actelion Pharmaceuticals US, Inc. -

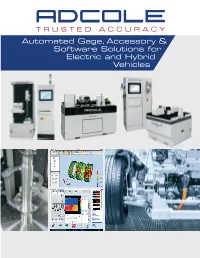

Automated Gage, Accessory & Software

Automated Gage, Accessory & Software Solutions for Electric and Hybrid Vehicles AUTOMATED GAGE AND ACCESSORY SOLUTIONS FOR NEW ENERGY VEHICLE (NEV) MANUFACTURING. Whether you are manufacturing parts for pure battery electric, mild hybrid or plug-in hybrid vehicles, Adcole has automated gaging solutions for your high-performance manufacturing needs. Engineered to provide accurate and repeatable data, Adcole metrology solutions provide electric motor, gearbox/transmission, output, balance, eccentric and other shaft manufacturers certainty that key production specifications can be held. Manufacturing tolerances as low as single-micron (μm) levels can be supported with accuracy capabilities reaching sub-micron performance. With electric motor rotation speeds escalating toward 20,000 RPM and beyond, Adcole gages are uniquely capable of providing the quality control data necessary to build tomorrow’s sophisticated NEVs.
AUTOMATED SIZE, FORM & PROFILE GAGES Model 911 Automated Metrology Systems The Adcole Model 911 helps organizations improve part quality, reduce scrap, and increase manufacturing efficiency. The 911 gage is available in 610mm (24”), 920mm (36”), 1530mm (60”), 2290mm (90”), 2670mm (105”) part capacities, offering an automated metrology solution for every manufacturing need. The versatile 911 gage is durable and ready for use on the shop floor as well as the quality control lab. Benefits of the 911 gage include: • Reduces labor and material costs with superior gage accuracy and reliability • Eliminates operator error with one button testing, concise pass/fail inspection reports, and more • Measures multiple part types and complex geometries • Provides numerical and graphical representation of complex metrology data • Enables manufacturers to measure multiple part types using a flexible gage platform • Available with optional LightSCOPE™ and DiaMetric™ Follower System (see following details) [Figure caption: The Model 911 is available in different length capacities] -

Central Europe Automotive Report

AUSTRIA BULGARIA CZECH REPUBLIC HUNGARY CENTRAL POLAND ROMANIA SLOVAK REPUBLIC SLOVENIA EUROPE COVERING THE AUTOMOTIVE CENTRAL EUROPEAN AUTOMOTIVE INDUSTRY REPORT February 1997 Volume II, Issue 2 ISSN 1088-1123 ™ ture (1995-1997) amounts Romania to USD 500,000,000. As of November 1996, the com- PROFILE pany had 4,800 employees. ROMANIA Commercial Auto Leasing Daewoo & Dacia Battle for Market Daewoo plans to invest Hits Romania an additional USD 550 mil- Share; Suppliers Modernizing lion (USD 77 million in cash and USD 473 million AutoLease is Romania’s first dedicated auto in equipment) in its leasing company. The company will provide Market Highlights Romanian factory. Daewoo hopes to pro- 2-year cross-border finance leases to interna- duce 300,000 cars annually by the year 2000. tional and Romanian companies doing business In early 1997, Romanian truck manufactur- “We are not going to make any compromise in Romania. er Roman S.A. will put into service a new on the quality of the cars produced in flexible crankshaft manufacturing line. The Craiova,” stresses Mr. Dong-Kyu Park, the Mike Morris, line will use machines imported from the company’s Chairman. (See CEAR Vol. 1, Founder and companies Boeringer, Hegenscheidt, Naxos- Issue 3 for a Profile interview of Mr. Park). CEO of Union, and Adcole. Roman also plans to Daewoo also intends to start assembly (SKD) AutoLease, is a begin production of 110-115 hp truck engines of 1-ton light trucks at ARO’s facilities in Harvard MBA that meet Euro-2 standards. The engines are Campulung Muscel. and financier currently being tested at the A.V.L. -

Capstone Headwaters C4ISR Industry MA Coverage Report November

MILITARY READINESS AGAINST NEAR-PEERS DRIVES HEIGHTENED DEMAND FOR C4ISR INDUSTRY C4ISR INDUSTRY UPDATE | NOVEMBER 2020 CONTACTS KEY HIGHLIGHTS Tess Oxenstierna • Resilient industry during COVID-19, as C4ISR serves Managing Director as primary “enabling technologies” across military Aerospace | Defense | Govt | Security air/land/sea/space platforms and the joint networks that allow for interoperability Hilary Morrison • The National Defense Strategy is fueling Vice President modernization requirements to keep pace with Aerospace | Defense | Govt | Security warfighting missions against near-peers • Merger and acquisition activity totals 60 deals through October, keeping pace with 2019 Military Readiness Against Near-Peers Drives Heightened Demand for C4ISR Industry INDUSTRY OUTLOOK TABLE OF CONTENTS C4ISR (Command, Control, Communications, Computers, Intelligence, Surveillance, and Reconnaissance) continues to serve as primary “enabling technologies” across Industry Outlook military air/land/sea/space platforms and the joint networks that allow for Key Trends & Drivers interoperability across these mission critical systems. Many C4ISR companies were deemed essential at the onset of the COVID-19 pandemic and The Defense Defense Budget Request Overview Production Act, invoked in March 2020, further buoyed the sector by authorizing Highlight: U.S. Space Force federal government loans and prioritizing government orders to ensure continuity M&A Activity of supply. These immediate buffers helped mitigate business losses across the Defense