XFORMAL: a Benchmark for Multilingual Formality Style Transfer


Recommended publications

-

JULIANA VILLAGE July 2018

Juliana Village Activities Program – July 2018 JULIANA VILLAGE RESIDENTS’ NEWSLETTER July 2018 Juliana Village Activities Program – July 2018 Mon 2nd - Monthly Shopping Trip to Southgate at 10:30am with Shire Mini Bus. - Renata Nail Appointments - BINGO with Lorna at 1:45pm Tue 3rd - Music at 10:30am & Men’s Shed 10am -12pm - Music Therapy with Jenni at 2:15pm in GC Wed 4th - Podiatry Day - 9:30am in Georges Centre, - Devotion with Tony at 1:45pm Thurs 5th - Hairdresser Day, - Laughter Yoga at 2pm in GC Fri 6th - Painting Therapy with Janine at 10:30am, - Short Bus Trip at 1:15pm with Shire Mini Bus. - Residents Committee Meeting 2pm Mon 9th - BINGO with Lorna at 1:45pm , Tue 10th -Library Day , - Music at 10:30am, -Monthly Lunch Outing to: Bundeena Memorial RSL Club-$30pp, Bus departs at 10:30am with Activus Wed 11th -Devotion with Tony at 1:45pm in GC Thurs 12th - Hairdresser Day, - Birthday Party with Bollywood Dancers at 2:00pm Fri 13th - Painting Therapy with Janine at 10:30am Mon 16th - Renata Nail Appointments - BINGO with Lorna at 1:45pm Tue 17th - Music Sing-a-long at 10:30am & Men’s Shed with Tony 10am-12pm Wed 18th - Podiatry Day - Hairdresser in Today - Devotion with Tony at 1:45pm in GC Thurs 19th - Christmas in July Event at Panorama House, Bus Departs at 10:30am Fri 20th - Painting Therapy with Janine at 10:30am Mon 23rd h - BINGO with Lorna at 1:45pm , - Renata Nail Appointments Tue 24th - Library Day , - Music Sing-a-long & Men’s Shed 10am-12pm Wed 25th Devotion with Tony at 1:45pm in GC Thurs 26th - Hairdresser Day -Happy Hour at 2pm Fri 27th -Painting Therapy with Janine at 10:30am, - Short Shopping Trip to Menai Marketplace at 1:15pm with Activus . -

Memoria Completa

1 AGRADECIMIENTOS Eva López, Juana Jara, Javier Fernández Río, Juan Majada y Pablo Priesca. Edita Caja Rural de Asturias Diseño, maquetación y contenidos Cronistar Fotografías Carlos Rodríguez Imprime Gráficas Eujoa S.A. Depósito Legal AS 2246-2003 www.fundacioncajaruraldeasturias.com 3 ÍNDICE Quiénes somos 4 Carta del Presidente 6 Laboratorio de oncología 8 Con los que avanzan 16 Ingeniero del año 38 Todo por aprender 42 Creciendo juntos 52 Artes plásticas 60 Libros y publicaciones 70 Música 78 Deporte 88 Nunca caminarán solos 102 Nuestro medio rural 122 Desglose económico 140 Órganos de Gobierno 142 5 Quiénes somos Nuestros valores Somos una cooperativa de crédito, una entidad financiera que » Devolver la CONFIANZA que nuestros socios y clientes nos desde 1965 provee de productos y servicios financieros al mer- han confiado. cado asturiano, destinatario de nuestra actuación directa. Ejer- cemos una banca de proximidad sin olvidar que nuestra asocia- » Establecer un COMPROMISO con los valores de nuestra co- ción con otras Cajas Rurales en el Banco Cooperativo Español munidad. nos permite realizar la actividad desde un concepto más global. » Defender un desarrollo basado en la ÉTICA social y empresa- La confianza, aspecto clave en la relación entre una entidad de rial. ahorro y sus clientes, la traducimos en una combinación de bue- nas prácticas bancarias y valores éticos al servicio de los intere- » Vocación de SERVICIO a la sociedad con el objetivo de mejo- ses financieros de nuestros socios respaldada por la fortaleza de rar su calidad de vida. nuestros recursos propios generados con una eficiente gestión. » Potenciar la CERCANÍA para desarrollar actividades destina- das a todos los segmentos de la población con especial dedi- Nuestro compromiso cación al fomento de la economía social y al medio rural. -

Italian – All Elements F–10 and 7–10

Italian – All elements F–10 and 7–10 Copyright statement The copyright material published in this work is subject to the Copyright Act 1968 (Cth) and is owned by ACARA or, where indicated, by a party other than ACARA. This material is consultation material only and has not been endorsed by Australia’s nine education ministers. You may view, download, display, print, reproduce (such as by making photocopies) and distribute these materials in unaltered form only for your personal, non-commercial educational purposes or for the non-commercial educational purposes of your organisation, provided that you make others aware it can only be used for these purposes and attribute ACARA as the source. For attribution details refer to clause 5 in (https://www.australiancurriculum.edu.au/copyright-and-terms-of-use/). ACARA does not endorse any product that uses the Australian Curriculum Review consultation material or make any representations as to the quality of such products. Any product that uses this material should not be taken to be affiliated with ACARA or have the sponsorship or approval of ACARA. TABLE OF CONTENTS F–10 AUSTRALIAN CURRICULUM: LANGUAGES .................................................................................................................................................................... 1 ABOUT THE LEARNING AREA .................................................................................................................................................................................................. 1 Introduction -

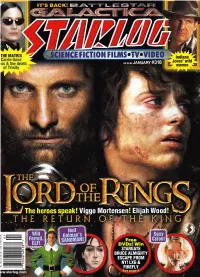

Starlog Magazine

THE MATRIX SCIENCE FICTION FILMS*TV*VIDEO Indiana Carrie-Anne 4 Jones' wild iss & the death JANUARY #318 r women * of Trinity Exploit terrain to gain tactical advantages. 'Welcome to Middle-earth* The journey begins this fall. OFFICIAL GAME BASED OS ''HE I.l'l't'.RAKY WORKS Of J.R.R. 'I'OI.KIKN www.lordoftherings.com * f> Sunita ft* NUMBER 318 • JANUARY 2004 THE SCIENCE FICTION UNIVERSE ^^^^ INSIDEiRicinE THISti 111* ISSUEicri ir 23 NEIL CAIMAN'S DREAMS The fantasist weaves new tales of endless nights 28 RICHARD DONNER SPEAKS i v iu tuuay jfck--- 32 FLIGHT OF THE FIREFLY Creator is \ 1 *-^J^ Joss Whedon glad to see his saga out on DVD 36 INDY'S WOMEN L/UUUy ICLdll IMC dLLIUII 40 GALACTICA 2.0 Ron Moore defends his plans for the brand-new Battlestar 46 THE WARRIOR KING Heroic Viggo Mortensen fights to save Middle-Earth 50 FRODO LIVES Atop Mount Doom, Elijah Wood faces a final test 54 OF GOOD FELLOWSHIP Merry turns grim as Dominic Monaghan joins the Riders 58 THAT SEXY CYLON! Tricia Heifer is the model of mocmodern mini-series menace 62 LANDniun OFAC THETUE BEARDEAD Disney's new animated film is a Native-American fantasy 66 BEING & ELFISHNESS Launch into space As a young man, Will Ferrell warfare with the was raised by Elves—no, SCI Fl Channel's new really Battlestar Galactica (see page 40 & 58). 71 KEYMAKER UNLOCKED! Does Randall Duk Kim hold the key to the Matrix mysteries? 74 78 PAINTING CYBERWORLDS Production designer Paterson STARLOC: The Science Fiction Universe is published monthly by STARLOG GROUP, INC., Owen 475 Park Avenue South, New York, NY 10016. -

Paese Comuneomune OMMARIO

Periodico dell’Amministrazione Comunale di Castelgugliemo - Dicembre 2009 N°10 - Redattori: Camilla Magagnini e Antonio Paese Comuneomune SOMMARIO: La parola al Sindaco P.1 Dal consiglio Comunale P.2 Attività sociali P.5 Cittadini esemplari P.6 Tutti a Scuola P.7 L’impegno delle Associazioni P.8 Dal mondo sportivo P.16 Cari concittadini, questo Natale all’alba del Nuovo Anno è dominato, nell’animo di ciascuno di noi, dall’inquietudine data dalla crisi economica che ormai ha toccato ogni famiglia italiana e del nostro Comune. La sconvolgente crisi finanziaria scoppiata nel corso del 2008 negli Stati Uniti d’America, ha via via investito l’intera economia mondiale. In questo momento è necessario guardare in faccia ai pericoli cui è esposta la nostra società, senza sottovalutarne la gravità : ma senza lasciarcene impaurire. In questo momento di crisi bisogna essere lungimiranti ed impo- stare le premesse di un migliore futuro facendo leva sui punti di forza e sulle più vive energie di cui disponiamo. Ecco perché stiamo alacremente lavorando affinché l’irrealizzato ottimismo che ha prodotto lo scostamento di bilancio del nostro comune, quest’anno, trovi concretizzazione il prossimo anno. La situazione ci impone il massi- mo sforzo di concertazione tra i protagonisti della nostra economia, per definire lo sviluppo dei prossimi anni. Dobbiamo oggi ancor più che in passato favorire le imprese che vogliono insediarsi nel nostro comune e promuovere indirizzi nuovi per il progresso omune omune delle attività produttive con un occhio di riguardo, soprattutto, ma non solo, alle più avanzate tecnologie per l’energia e per l’ambiente. -

TESTING 'THE GIRL with X-RAY EYES' Tsunami Conspiracies and Hollow Moons

TAVRIS ON SEX DIFFERENCES • RANDI ON JOHNNY CARSON • NICKELL ON TURIN SHROUD Skeptical Inquirer THE MAGAZINE FOR SCIENCE A N D R EASON Volume 29, No. 3 • May / June 200 » Testing **, 'The Girl with X-Ray Eyes' •\ •Ray Hyman. Andrew Skolnick Joe Nickell Psychic Swindlers ^H --.T.'.-V*• • Four Myths about Evolution A Librarian's Guide to Critical Thinking Published by the Committee for the Scientific Investigation of Claims of the Paranormal THE COMMITTEE FOR THE SCIENTIFIC INVESTIGATION of Claims off the Paranormal AT THE CENTER FOR INQUIRY-TRANSNATIONAL (ADJACENT TO THE STATE UNIVERSITY OF NEW YORK AT BUFFALO| • AN INTERNATIONAL ORGANIZATION Paul Kurtz, Chairman; professor emeritus of philosophy, State University of New York at Buffalo Barry Karr, Executive Director Joe Nicked, Senior Research Fellow Massimo Polidoro, Research Fellow Richard Wiseman. Research Fellow Lee Nisbet. Special Projects Director FELLOWS James E. Alcock.* psychologist. York Univ., Toronto Saul Green, Ph.D., biochemist, president of ZOL Loren Pankratz. psychologist. Oregon Health jerry Andrus, magician and inventor, Albany, Oregon Consultants, New York. NY Sciences Univ. Marcia Angell. M.D., former editor-in-chief. New Susan Haack. Cooper Senior Scholar in Arts Robert L Park, professor of physics, Univ. of Maryland England Journal of Medicine and Sciences, prof, of philosophy, University John Paulos, mathematician. Temple Univ. Robert A. Baker, psychologist, Univ. of Kentucky of Miami Steven Pinker, cognitive scientist. Harvard Stephen Barrett. M.D., psychiatrist, author. C. E. M. Hansel, psychologist, Univ. of Wales Massimo Polidoro, science writer, author, consumer advocate. Allentown, Pa. David J. Helfand. professor of astronomy, executive director CICAP Italy Willem Betz. -

American Primacy and the Global Media

City Research Online City, University of London Institutional Repository Citation: Chalaby, J. (2016). Drama without drama: The late rise of scripted TV formats. Television & New Media, 17(1), pp. 3-20. doi: 10.1177/1527476414561089 This is the accepted version of the paper. This version of the publication may differ from the final published version. Permanent repository link: https://openaccess.city.ac.uk/id/eprint/5818/ Link to published version: http://dx.doi.org/10.1177/1527476414561089 Copyright: City Research Online aims to make research outputs of City, University of London available to a wider audience. Copyright and Moral Rights remain with the author(s) and/or copyright holders. URLs from City Research Online may be freely distributed and linked to. Reuse: Copies of full items can be used for personal research or study, educational, or not-for-profit purposes without prior permission or charge. Provided that the authors, title and full bibliographic details are credited, a hyperlink and/or URL is given for the original metadata page and the content is not changed in any way. City Research Online: http://openaccess.city.ac.uk/ [email protected] Drama without drama: The late rise of scripted TV formats Author’s name and address: Professor Jean K. Chalaby Department of Sociology City University London London EC1V 0HB Tel: 020 7040 0151 Fax: 020 7040 8558 Email: [email protected] Author biography: Jean K. Chalaby is Professor of International Communication and Head of Sociology at City University London. He is the author of The Invention of Journalism (1998), The de Gaulle Presidency and the Media (2002) and Transnational Television in Europe: Reconfiguring Global Communications Networks (2009). -

Morgan State University Opera Workshop Newsletter Bravi Tutti! Bravi Tutti! Bravi Tuttispring! ISSUE Volume 1, No

Morgan State University Opera Workshop Newsletter Bravi Tutti! Bravi Tutti! Bravi TuttiSPRING! ISSUE Volume 1, No. 2 SPRING 2011 Opera ALA SPRIING 2011OperaGG ALA MMAASSTTEERR CCLLAASSSS SSEERRIIIEESS WWiiissddoomm fffrroomm ttthhee JJoouurrnneeyy 2011 BBaallttiimmoorree SSuummmmeerr OOppeerraa WWoorrkksshhoopp 11 Bravi Tutti! Spring Issue 1 Greetings from the Artistic Director… Happy New Year! The Morgan State University Opera Workshop is delighted that you are taking the time to read this newsletter and stay abreast of the progress, events, and development of our students. We have come a long way in the past seven years. When I first arrived at Morgan there was a small class run by the late Dr. Marilyn Thompson. The students were very enthusiatic and Dr. Thompson was very creative and committed to providing performance opportunities for the students. Our first class was made up of ten very enthusiatic students. Our first event was an evening of opera scenes by Mozart, Purell and Douglas Moore in fall 2004. We mounted our first fully-staged opera in spring 2005 with a production of Gian Carlo Menotti’s The Medium. This was the beginning of a new era of operatic performances here at Morgan. It was also the beginning of a great journey for our students. In this spring edition of Bravi Tutti!...you will read about our events for the semester which include master classes with Washington, DC born international opera singer Denyce Graves and Broadway singer/dancer and the title role of “The Wiz” on Broadway, Kenneth Kamal Scott; “Wisdom from the Journey” guest retired opera singer, Junetta Jones; our program for the spring 2011 Opera Gala with the MSU Opera Workshop and MSU Choir in collaboration once again with Maestro Julien Benichou and the Chesapeake Youth Symphony Orchestra, and updates on some of the Alumni of the Opera Workshop. -

Friday, September 8, 2006

Rose-Hulman Institute of Technology Rose-Hulman Scholar The Rose Thorn Archive Student Newspaper Fall 9-8-2006 Volume 42 - Issue 01 - Friday, September 8, 2006 Rose Thorn Staff Rose-Hulman Institute of Technology, [email protected] Follow this and additional works at: https://scholar.rose-hulman.edu/rosethorn Recommended Citation Rose Thorn Staff, "Volume 42 - Issue 01 - Friday, September 8, 2006" (2006). The Rose Thorn Archive. 183. https://scholar.rose-hulman.edu/rosethorn/183 THE MATERIAL POSTED ON THIS ROSE-HULMAN REPOSITORY IS TO BE USED FOR PRIVATE STUDY, SCHOLARSHIP, OR RESEARCH AND MAY NOT BE USED FOR ANY OTHER PURPOSE. SOME CONTENT IN THE MATERIAL POSTED ON THIS REPOSITORY MAY BE PROTECTED BY COPYRIGHT. ANYONE HAVING ACCESS TO THE MATERIAL SHOULD NOT REPRODUCE OR DISTRIBUTE BY ANY MEANS COPIES OF ANY OF THE MATERIAL OR USE THE MATERIAL FOR DIRECT OR INDIRECT COMMERCIAL ADVANTAGE WITHOUT DETERMINING THAT SUCH ACT OR ACTS WILL NOT INFRINGE THE COPYRIGHT RIGHTS OF ANY PERSON OR ENTITY. ANY REPRODUCTION OR DISTRIBUTION OF ANY MATERIAL POSTED ON THIS REPOSITORY IS AT THE SOLE RISK OF THE PARTY THAT DOES SO. This Book is brought to you for free and open access by the Student Newspaper at Rose-Hulman Scholar. It has been accepted for inclusion in The Rose Thorn Archive by an authorized administrator of Rose-Hulman Scholar. For more information, please contact [email protected]. R OSE -H ULMAN I NSTITUTE OF T ECHNOLOGY T ERRE H AUTE , I NDIANA Friday, September 8, 2006 Volume 42, Issue 1 “I’m here for the students” News Briefs Jakubowski answers questions and concerns at open forum By Ryan Schultz Fred Webber As for making changes Band sounded terrific. -

My Italian Circle

My Italian Circle Beginner Italian Course Vocabulary - Lesson 1 VIDEO TRANSCRIPT Today we’ll go through 20 basic Italian phrases that we use all the 9me, and that will make your Italian sound natural. There’s a bonus expression at the end, so keep watching! Let’s dive right in! 1. CIAO Everybody knows it! It means hello, and it’s used between friends or people of the same age. What you may not know is that it’s beIer not to use it with people who are much older than you! You should rather say… 2. BUONGIORNO! Good morning. It’s formal, but also friendly. Say it when you enter a shop or you meet someone that you don’t know very well. 3. ARRIVEDERCI Goodbye! It literally means Un9l we meet again! but it’s formal. You can say it when you leave a restaurant or a shop. When par9ng from a friend you can say simply CIAO! or CI VEDIAMO! Same meaning. We have a video all about Italian gree9ngs, formal and informal. The link is here in the top right corner and in the descrip9on below, have a look! 4. COME VA? How is it going? It’s impersonal, so you can use it with anybody. Very common, even more than Come stai?, which means How are you? 5. TUTTO BENE All good, everything okay. You can also use it as a ques9on: TuIo bene? Is everything alright? This is also impersonal, so it’s easier to use. 6. PIACERE Nice to meet you. You can say it when you shake hands with someone that you just met, and they will say it back to you. -

Training List Singapore Sunday 02 September 2018 Meeting

TRAINING LIST SINGAPORE SUNDAY 02 SEPTEMBER 2018 MEETING TRAINER C BROWN TRAINER DL FREEDMAN C454 CAMBRIDGE KS B/1600 H193 CROUCHING SUN (l.CROUCHING DRAGON) 4P/1200 H205 CENTENARY DIAMOND R/1400 C324 ELITE EXCALIBUR (l.RIVER WILD) G3/1600 W395 INFANTRY G3/1600 C272 GOLD CITY KS B/1600 H57 KING ZOUSTAR R/1400 E439 GOLD COMPANY M/1200 H60 LIM'S FAIRLY R/1400 H122 GOLD KINGDOM R/1400 H94 LIONROCKSPIRIT KS B/1600 E496 PENNSYLVANIA M/1200 H53 MORPHEUS R/1400 C88 SIR ISAAC KS B/1000 E187 MR CLINT G3/1600 E307 ZAC KASA KS B/1000 R325 MR SPIELBERG G3/1600 TOTAL FOR TRAINER: 9 TOTAL 8 FOR TRAINER CT KUAH TRAINER H TAKAOKA C345 GOLDEN THUNDER 4NP/1200 W461 BOHEMIAN 5/1200 E382 KING OF GLORY M/1200 W302 GOLAZO G3/1600 E277 ULTIMATE KILLER 5/1200 R363 IN BOCCA AL LUPO 5/1200 E224 WILD BEE M/1200 E500 SUN ELIZABETH KS D/1600 W306 SUN HANCOCK 4NP/1200 TOTAL FOR TRAINER: 4 TOTAL 5 TRAINER D HILL R61 KEEP SPINNING KS B/1600 TRAINER HW TAN W510 PRINCE FERDINAND 5/1200 E524 BULL N RUM M/1200 E435 RELIC WARRIOR R/1400 C276 GREY FALCON KS B/1000 C284 MILITARY MIGHT 4NP/1200 TOTAL FOR TRAINER: 3 C274 MY HORSE (l.AFFALO) KS B/1000 TRAINER D KOH W122 NOVA STRIKE G3/1600 C194 SPECIAL RAIN (l.HOLD ON LIL TOMATO) 4NP/1200 E275 BEAUTIFUL DAY M/1200 J379 SUGARTIME JAZZ (l.NEAT) 5/1200 C170 CAVATINA 5/1200 F369 WHITE COFFEE (l.SANTANA) KS D/1600 W12 PEER GYNT KS D/1600 E532 SOLO SUN (l.SOLO HEART) KS B/1600 TOTAL 8 R564 SUN EMPIRE (l.WIND SEEKER) 5/1200 E531 SUN PRINCEPS (l.DUAN ZHIXING) 4P/1200 TRAINER J O'HARA J298 GALLANT HEIGHTS 4NP/1200 E273 SATELLITE CLASSIC -

ML4LT (2016): Assignments - Undergraduate Students

ML4LT (2016) Marina Santini Department of Linguistics and Philology Uppsala University ML4LT (2016): Assignments - Undergraduate Students Refresh the page: Changelog 12 Dec 2016 The Machine Learning for Language Technology course is examined by means of 3 home assignments (check the assignments’ deadline on the course website). TIP: Before starting working on the assignments, review all the lab tasks and build upon the practical experience you gained during the lab sessions. We used several datasets of different nature and composition that highlighted different views and interpretation of data and ML algorithms. The lab documents contain step-by-step instructions, tips and useful references. Assignment 1: Decision Trees and k-Nearest Neighbours This assignment is about learning morphological information, namely the different classes of English verbs. o Remember that accuracy can be a misleading metric to assess the goodness of a classifier. o Use visualization to understand the behaviour of the classifiers. o Tweak parameters and see what happens. o Did any classifier(s) perform statistically worse than the reference classifier? o Did any classifier(s) perform statistically better than the reference classifier? o Which classifier(s) classified most correct instances? o Which classifier(s) classified least correct instances? o How many correct instances did the reference classifier classify (in percent)? o How many incorrect instances did the reference classifier classify (in percent)? o Did any classifier had unclassified instances? o Draw ROC curves, cost/benefit analysis and apply any other evaluation metrics that you think is useful to make the right choice. o Think of the effect of the inductive bias on classification results.