Iconicity As a Pervasive Force in Language: Evidence from Ghanaian Sign

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Sign Language Typology Series

SIGN LANGUAGE TYPOLOGY SERIES. The Sign Language Typology Series is dedicated to the comparative study of sign languages around the world. Individual or collective works that systematically explore typological variation across sign languages are the focus of this series, with particular emphasis on undocumented, underdescribed and endangered sign languages. The scope of the series primarily includes cross-linguistic studies of grammatical domains across a larger or smaller sample of sign languages, but also encompasses the study of individual sign languages from a typological perspective and comparison between signed and spoken languages in terms of language modality, as well as theoretical and methodological contributions to sign language typology.

Interrogative and Negative Constructions in Sign Languages, edited by Ulrike Zeshan. Sign Language Typology Series No. 1 / Interrogative and negative constructions in sign languages / Ulrike Zeshan (ed.) / Nijmegen: Ishara Press 2006. ISBN-10: 90-8656-001-6. ISBN-13: 978-90-8656-001-1. © Ishara Press, Stichting DEF, Wundtlaan 1, 6525XD Nijmegen, The Netherlands. Fax: +31-24-3521213. Email: [email protected]. http://ishara.def-intl.org. Cover design: Sibaji Panda. Printed in the Netherlands. First published 2006. Catalogue copy of this book available at Depot van Nederlandse Publicaties, Koninklijke Bibliotheek, Den Haag (www.kb.nl/depot).

To the deaf pioneers in developing countries who have inspired all my work.

Contents: Preface

(SASL) in a Bilingual-Bicultural Approach in Education of the Deaf

APPLICATION OF SOUTH AFRICAN SIGN LANGUAGE (SASL) IN A BILINGUAL-BICULTURAL APPROACH IN EDUCATION OF THE DEAF. Philemon Abiud Okinyi Akach. August 2010.

By Philemon Abiud Omondi Akach. Thesis submitted in fulfillment of the requirements of the degree PHILOSOPHIAE DOCTOR in the FACULTY OF HUMANITIES (DEPARTMENT OF AFROASIATIC STUDIES, SIGN LANGUAGE AND LANGUAGE PRACTICE) at the UNIVERSITY OF FREE STATE. Promoter: Dr. Annalie Lotriet. Co-promoter: Dr. Debra Aarons. August 2010.

Declaration: I declare that this thesis, which is submitted to the University of Free State for the degree Philosophiae Doctor, is my own independent work and has not previously been submitted by me to another university or faculty. I hereby cede the copyright of the thesis to the University of Free State. Philemon A.O. Akach. Date.

To the deaf children of the continent of Africa; may you grow up using the mother tongue you don’t acquire from your mother?

Acknowledgements: I would like to say thank you to the University of the Free State for opening its doors to a doubly marginalized language, South African Sign Language, to develop and grow not only as an academic subject but as the fastest growing language learning area. Many thanks to my supervisors Dr. A. Lotriet and Dr. D. Aarons for guiding me throughout this study. I also thank my colleagues in the department of Afroasiatic Studies, Sign Language and Language Practice for their support. Thanks to my wife Wilkister Aluoch and children Sophie, Susan, Sylvia and Samuel for affording me space to be able to spend time on this study.

Ugandan Sign Language

Communicating with your deaf child in... Ugandan Sign Language, Book One

Contents: Introduction; Chapter 1 Greetings and Courtesy; Chapter 2 Friends and Family; Chapter 3 Fingerspelling and Numbers; Chapter 4 Going Shopping; Chapter 5 The World and Far Away; Chapter 6 Children’s Rights; Chapter 7 Health; Chapter 8 Express Yourself; Notes

Introduction: This book is for parents, carers, guardians and families with a basic knowledge of Ugandan Sign Language (USL) who want to develop their USL skills to communicate at a more advanced level with their deaf children using simple phrases and sentences. This is the second edition of the book, first published in 2013, now with added amends and content following consultation with users. We want this book to:
• Empower families to improve their communication skills
• Ensure deaf children are included in their family life and in their communities
• Empower deaf children to express their own views and values and develop the social skills they need to lead independent lives
• Allow deaf children to fulfil their potential

How to use this book: Sign language is a visual language using gesture, body movements and facial expressions to communicate. This book has illustrated pictures to show you how to sign different words. The arrows show you the hand movements needed to make the sign correctly. This book is made up of eight chapters, each covering different topic areas. Each chapter has a section on useful vocabulary followed by activities to enable you to practise phrases using the words learned in the chapter.

UNIVERSITY of CALIFORNIA, SAN DIEGO Telling, Writing And

UNIVERSITY OF CALIFORNIA, SAN DIEGO. Telling, Writing and Reading Number Tales in ASL and English Academic Languages: Acquisition and Maintenance of Mathematical Word Problem Solving Skills. A thesis submitted in partial satisfaction of the requirements for the degree Master of Arts in Teaching and Learning: Bilingual Education (ASL-English), by Alexander Zernovoj. Committee in charge: Tom Humphries, Chair; Bobbie M. Allen; Claire Ramsey. 2005. Copyright Alexander Zernovoj, 2005. All rights reserved. The thesis of Alexander Zernovoj is approved. University of California, San Diego, 2005.

DEDICATION: It is difficult to imagine how I would have gone this far without the support of my family, friends, professors, teachers and classmates. They have encouraged me to pursue lifelong teaching and learning. They also have inspired me, helped me, supported me, and pushed me to become the best teacher I can be. This thesis is dedicated to them.

TABLE OF CONTENTS: Signature Page; Dedication; Table of Contents; List of Figures; List of Tables; Abstract; I. Introduction and Overview; II. The Need for Bilingual Approaches to Education; III. Assessment of Need; IV. Review of Existing Materials and Curricula

A Lexical Comparison of South African Sign Language and Potential Lexifier Languages

A lexical comparison of South African Sign Language and potential lexifier languages, by Andries van Niekerk. Thesis presented in partial fulfilment of the requirements for the degree Masters in General Linguistics at the University of Stellenbosch. Supervisors: Dr Kate Huddlestone & Prof Anne Baker. Faculty of Arts and Social Sciences, Department of General Linguistics. March, 2020. Stellenbosch University https://scholar.sun.ac.za

DECLARATION: By submitting this thesis electronically, I declare that the entirety of the work contained therein is my own, original work, that I am the sole author thereof (save to the extent explicitly otherwise stated), that reproduction and publication thereof by Stellenbosch University will not infringe any third party rights and that I have not previously in its entirety or in part submitted it for obtaining any qualification. Andries van Niekerk, March 2020. Copyright © 2020 University of Stellenbosch. All rights reserved.

ABSTRACT: South Africa’s history of segregation was a large contributing factor in the emergence of lexical variation in South African Sign Language (SASL). Foreign sign languages certainly had a presence in the history of deaf education; however, the degree of influence foreign sign languages have on SASL today is what this study has aimed to determine. There have been very limited studies on the presence of loan signs in SASL and none have included extensive variation. This study investigates signs from 20 different schools for the deaf and compares them with signs from six other sign languages and the Paget Gorman Sign System (PGSS). A list of lemmas was created that included the commonly used list of lemmas from Woodward (2003).

Chapter 4 Deaf Children's Teaching and Learning

A University of Sussex DPhil thesis. Available online via Sussex Research Online: http://sro.sussex.ac.uk/ This thesis is protected by copyright which belongs to the author. This thesis cannot be reproduced or quoted extensively from without first obtaining permission in writing from the Author. The content must not be changed in any way or sold commercially in any format or medium without the formal permission of the Author. When referring to this work, full bibliographic details including the author, title, awarding institution and date of the thesis must be given. Please visit Sussex Research Online for more information and further details.

TEACHING DEAF LEARNERS IN KENYAN CLASSROOMS. Cecilia Wangari Kimani. Submitted to the University of Sussex for the degree of Doctor of Philosophy, February 2012.

I hereby declare that this thesis has not been and will not be submitted in whole or in part to another university for the award of any other degree. Signature: ………………………

Table of Contents: Summary; Acknowledgements; Dedication; List of tables; List of figures

African Sign Languages Workshop Program

WORLD CONGRESS OF AFRICAN LINGUISTICS 10 (WOCAL10), LEIDEN UNIVERSITY. AFRICAN SIGN LANGUAGES WORKSHOP PROGRAM. More info: http://sign.wocal.net. Schedule is in timezone of conference site (Central European Time; GMT+2 in DST). This includes most of Europe, South Africa, Egypt, Malawi, Sudan, Rwanda, Zambia, etc. For more timezones, see Page 2.

RECEPTION: 6:00-7:30PM SATURDAY, JUNE 5, 2021. DAY 1: MONDAY, JUNE 7, 2021. DAY 2: TUESDAY, JUNE 8, 2021. DAY 3: WEDNESDAY, JUNE 9, 2021. DAY 4: THURSDAY, JUNE 10, 2021. DAY 5: FRIDAY, JUNE 11, 2021.

Schedule entries (start time, event): Workshop opening: introductions (moderator, interpreters, authors), Zoom protocols & instructions, announcements. 3:00PM Plenary: Woinshet Girma. 3:40PM Break before sessions start. 4:00PM Daily opening. 4:00PM TBD. WOCAL Committee on African Sign Languages meeting (all participants invited).

Themes: Documentation and language comparisons; Language origins and language of expression; African sign languages in use: across borders and in the home; African sign languages and deaf students in the classroom; Lexical variation and new methods.

Talks listed (4:10PM slots): Annie Risler, Alain Gebert • The Dictionary for Seychellois Sign Language: A tool for the preservation, transmission and development of Seychelles' heritage. Ingeborg Groen • Anger expression in Ghanaian Sign Language (GSL). Amandine le Maire • Mobilities / Immobilities of deaf people in Kakuma. Anne Baker, Andries van Niekerk, Kate Huddlestone • Studying lexical variation. Yede Adama Sanogo • Sign language. Hope E. Morgan, Evans N. Burichani, Jared O.

The Deaf of South Sudan: The South Sudanese Sign Language Community

Profile Year: 2015. People and Language Detail Report. Language Name: South Sudan Sign Language. ISO Language Code: not yet. Primary Religion: Non-religious. Photo by DOOR International.

The Deaf of South Sudan: The South Sudanese Sign Language Community

South Sudan achieved its independence in 2011 from the Republic of Sudan to its north. Prior to that, Sudan suffered under two civil wars. The second, which began in 1983, pitted the Sudanese government against the Sudan People’s Liberation Army (SPLA) and lasted for over twenty years. These wars have led to significant suffering among the Sudanese and South Sudanese people, including major gaps in infrastructure development and significant displacement of various people groups. All of this war has traumatized the people of Sudan, and the Deaf in particular.

The country appears to have a very small middle class, while a vast majority of its citizens are either very wealthy or extremely poor. The Deaf in South Sudan tend to be the poorest of the poor. Some cannot afford food and must stay at home with families (even though the home environment often means that no one can communicate with them).

There are currently no Deaf schools in South Sudan. Deaf schools are typically the center of language and cultural development for the Deaf of a country. A lack of Deaf schools means that there is a need for a central cultural organization among the Deaf. Deaf churches could function in this role if they were well-established. There is currently only one Deaf church in South Sudan. This church meets in Juba, and has fewer than 50 members.

Prayer Cards | Joshua Project

Pray for the Nations. www.joshuaproject.net. "Declare his glory among the nations." Psalm 96:3

Anii in Benin. Population: 47,000. World Popl: 66,000. Total Countries: 2. People Cluster: Guinean. Main Language: Anii. Main Religion: Islam. Status: Unreached. Evangelicals: 1.00%. Chr Adherents: 2.00%. Scripture: Unspecified. Source: Kerry Olson.

Dendi, Dandawa in Benin. Population: 274,000. World Popl: 414,700. Total Countries: 3. People Cluster: Songhai. Main Language: Dendi. Main Religion: Islam. Status: Unreached. Evangelicals: 0.03%. Chr Adherents: 0.07%. Scripture: New Testament. Source: Jacques Taberlet.

Foodo in Benin. Population: 45,000. World Popl: 46,100. Total Countries: 2. People Cluster: Guinean. Main Language: Foodo. Main Religion: Islam. Status: Unreached. Evangelicals: 0.01%. Chr Adherents: 0.02%. Scripture: Portions. Source: Bethany World Prayer Center.

Fulani, Gorgal in Benin. Population: 43,000. World Popl: 43,000. Total Countries: 1. People Cluster: Fulani / Fulbe. Main Language: Fulfulde, Western Niger. Main Religion: Islam. Status: Unreached. Evangelicals: 0.00%. Chr Adherents: 0.00%. Scripture: New Testament. Source: Bethany World Prayer Center.

Fulfulde, Borgu in Benin. Population: 650,000. World Popl: 767,700.

Gbe, Seto in Benin. Population: 40,000. World

Cross-Linguistic Variation in Space-Based Distance for Size Depiction in the Lexicons of Six Sign Languages

Cross-linguistic variation in space-based distance for size depiction in the lexicons of six sign languages. Victoria Nyst, University of Leiden.

This paper is a semiotic study of the distribution of a type of size depiction in lexical signs in six sign languages. Recently, a growing number of studies are focusing on the distribution of two representation techniques, i.e. the use of entity handshapes and handling handshapes for the depiction of hand-held tools (e.g. Ortega et al. 2014). Padden et al. (2013) find that there is cross-linguistic variation in the use of this pair of representation techniques. This study looks at variation in a representation technique that has not been systematically studied before, i.e. the delimitation of a stretch of space to depict the size of a referent, or space-based distance for size depiction. It considers the question whether the cross-linguistic variation in the use of this representation technique is governed by language-specific patterning as well (cf. Padden et al. 2013). This study quantifies and compares the occurrence of space-based distance for size depiction in the lexicons of six sign languages, three of Western European origin, and three of West African origin. It finds that sign languages differ significantly from each other in their frequency of use of this depiction type. This result thus corroborates that the selection and distribution of representation techniques does not solely depend on features of the depicted image, but also on language-specific patterning in the distribution of representation techniques, and it adds another dimension of iconic depiction in which sign languages may vary from each other (in addition to the entity/handling handshape distinction).

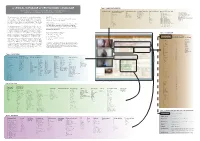

A Lexical Database of Kenyan Sign Language

A LEXICAL DATABASE of KENYAN SIGN LANGUAGE. Hope E. Morgan • University of California San Diego • [email protected]. ‘Digging into Signs’ Workshop; UCLondon, March 30 - April 1, 2015.

This poster presents an ongoing project to code the lexical properties of signs in Kenyan Sign Language (KSL)* including phonetic, phonemic, iconic, semantic, grammatical, and etymological, with the focus here on phonetic properties. The ultimate future goal is to link these lexical records to a full corpus of KSL. The database was created in FileMaker Pro, allowing for maximum flexibility during coding (see data entry screen in Fig.

METHODS. Participants: 28 deaf signers in total, though the majority of signs were produced by 3 signers. Coder: author. Language genres: (1) elicitation of citation forms by author with deaf Kenyan participant, (2) dyadic exchanges between deaf Kenyans doing communicative tasks, (3) group

Table 1. HAND CONFIGURATION. Column headings: number of hands; main articulator (primary), secondary articulator; handshape symmetry; movement symmetry; SignType (Battison); geometry of the two hands. Row values as extracted:
1 | same | simultaneous | X | mirror | end-stacked; tips same
2 | hand | different | simult; connected | 0 | mirror; alternating | end-stacked; tips opposite
1 or 2 | hand (stiff wrist) | same, same | simult; h2 stationary | 0/X | mirror; alternating; asymmetric | end-stacked; tips perpendicular
forearm | different, same | alternating | 1 | mirror; offset | adjacent; ranked prox-dist
arm | same, different | H2 stationary | 2 | mirror; asymmetric | perpendicular plane
head | complex | 3 | stacked; tips same | same plane; adjacent; tips perpendicular
face | 3-minimal | stacked; tips opposite | h2 base
eyes | 3-move | stacked; tips perpendicular | h1 under h2
mouth | 4 | stacked; facing; tips same | n/a
tongue | C | stacked; facing; tips opposite
teeth | unsure | stacked; facing; tips perpendicular
whole body | stacked; opposite facing; tips perpendicular
upper body | side-stacked; tips same
clothes | side-stacked; tips opposite
not sure | side-stacked; tips perpendicular


Ugandan Sign Language [ugn] (A Language of Uganda)

“Ugandan Sign Language [ugn] (A language of Uganda)
• Alternate Names: USL
• Population: 160,000 (2008 WFD). 160,316–840,000 deaf (2008 WFD, citing various sources). 528,000–800,000 deaf (Lule and Wallin 2010, citing various sources). Over 700,000 deaf adults (Oluoch 2010, citing 2002 Uganda Bureau of Statistics). Figures range from 0.5%–2.7% of the general population of approx. 31,000,000.
• Location: Scattered, mainly in urban areas.
• Language Status: 5 (Developing). Recognized language (1995, Constitution, Article XXIV(d)).
• Classification: Deaf sign language
• Dialects: None known. Historical influence from British Sign Language [bfi], American Sign Language [ase] and Kenyan Sign Language [xki], but clearly distinct from all three. Influence from English [eng] in grammar, mouthing, initialization, fingerspelling (both one-handed and two-handed systems), especially among young, urban Deaf. Some mouthing from Luganda [lug] and Swahili [swa] (Lule and Wallin 2010).
• Typology: One-handed and two-handed fingerspelling.
• Language Use: Schools for deaf children since 1959. 8 primary schools and 2 secondary for the deaf; mixture of bilingual education and Total Communication (WFD Regional Secretariat for Southern and Eastern Africa 2008). Sign language in classrooms tends to be Signed English, especially by hearing teachers; some teachers are Deaf. Some schools are residential; education at preschool through vocational and university levels, but not available to all deaf children; many are in mainstream settings (Lule and Wallin 2010). Interpreters available for university, social, medical and religious services, courts, parliament, etc. (WFD Regional Secretariat for Southern and Eastern Africa 2008). Positive attitudes towards USL among Deaf; negative attitudes still common among hearing (Lule and Wallin 2010).