Academic Press


Recommended publications

Integrated Circuit

PREMLILA VITHALDAS POLYTECHNIC, S. N. D. T. Women’s University, Juhu Campus, Santacruz (West), Mumbai - 400 049, Maharashtra (INDIA).

Prepared by: Miss. Rohini A. Mane (G. R. No.: 15070113), Miss. Anjali J. Maurya (G. R. No.: 15070114), Miss. Tejal S. Mejari (G. R. No.: 15070115). Diploma in Electronics: Semester VII (June - November 2018)

Introduction: An integrated circuit is a thin slice of silicon, or sometimes another material, that has been specially processed so that a tiny electric circuit is etched on its surface. The circuit can have many millions of microscopic individual elements, including transistors, resistors, capacitors, and conductors, all electrically connected in a certain way to perform some useful function.

Figure 1: Integrated Circuit

An integrated circuit (IC), sometimes called a chip or microchip, is a semiconductor wafer on which thousands or millions of tiny resistors, capacitors, and ...

History: Separately manufactured components such as resistors, capacitors, diodes, and transistors are joined by wires or by printed circuit boards (PCBs) to form a circuit. These circuits are called discrete circuits, and they have the following disadvantages.

1. In a large electronic circuit there may be a very large number of components, and as a result the discrete assembly will occupy a very large space.
2. They are formed by soldering, which causes a problem of reliability.

To overcome these problems of space and reliability, the integrated circuit (IC) was developed.

Figure 2: The first integrated circuit

The first integrated circuits were based on the idea that the same process used to make clusters of transistors on silicon wafers might be used to make a functional circuit, such as an amplifier circuit or a computer logic circuit. Slices of the semiconductor materials silicon and germanium were already being printed with patterns, the exposed surfaces etched with chemicals, and then the pattern removed, leaving dozens of individual transistors, ready to be sliced up and packed individually.

Transistors to Integrated Circuits

... resistance and collector capacity are found to be necessary. The relative advantages of linear and circular structures are considered both for base resistance and for collector capacity. Parameters, which are expected to affect the frequency behavior, are considered, including emitter depletion layer capacity, collector depletion layer capacity and diffusion transit time. Finally the parameters which might be obtainable are compared with those needed for a few typical switching applications."

The Planar Process

The development of oxide masking by Frosch and Derick [9,10] on silicon deserves special attention inasmuch as they anticipated planar, oxide-protected device processing. Silicon is the key ingredient and its oxide paved the way for MOSFET integrated electronics [22].

... commercial silicon controlled rectifier, today's thyristor. This later work also was based on our 1956 research [19].

In the process of diffusing the p-type substrate wafer into an n-p-n configuration for the first stage of p-n-p-n construction, particularly in the redistribution "drive-in" phase of the donor diffusion at higher temperature in a dry gas ambient (typically > 1100°C in H2), Frosch would seriously damage our wafers. The wafer surface would be eroded and pitted, or even totally destroyed. Every time this happened the loss was apparent by the expression on Frosch's face, not to mention, on ours (N.H.). We would make some adjustments, get more silicon wafers ready, and try again.

In the early Spring of 1955, Frosch commented ...

ELEC3221 Digital IC & Systems Design Iain McNally Koushik Maharatna Basel Halak ELEC3221 / ELEC6241 Digital IC & Systems D

ELEC3221 / ELEC6241 - module merge for 2016/2017

Digital IC & Systems Design
SoC Design Techniques

Iain McNally ≈ 10 lectures
Koushik Maharatna ≈ 12 lectures
Basel Halak ≈ 12 lectures

Assessment
• 10% Coursework: L-Edit Gate Design (BIM)
• 90% Examination

Books
• Integrated Circuit Design, a.k.a. Principles of CMOS VLSI Design - A Circuits and Systems Perspective, Neil Weste & David Harris, Pearson, 2011
• Digital System Design with SystemVerilog, Mark Zwolinski, Pearson Prentice-Hall, 2010

Integrated Circuit Design (Iain McNally)

Content
– Introduction
– Overview of Technologies
– Layout
– CMOS Processing
– Design Rules and Abstraction
– Cell Design and Euler Paths
– System Design using Standard Cells
– Wider View

Notes & Resources
• http://users.ecs.soton.ac.uk/bim/notes/icd

History
1947 First Transistor: John Bardeen, Walter Brattain, and William Shockley (Bell Labs)
1952 Integrated Circuits Proposed: Geoffrey Dummer (Royal Radar Establishment) - prototype failed...
1958 First Integrated Circuit: Jack Kilby (Texas Instruments) - Co-inventor
1959 First Planar Integrated Circuit: Robert Noyce (Fairchild) - Co-inventor
1961 First Commercial ICs: Simple logic functions from TI and Fairchild
1965 Moore’s Law: Gordon Moore (Fairchild) observes the trends in integration.

History: 1947 Point Contact Transistor (figure labels: Collector, Emitter, Base)

Computer History a Look Back Contents

Contents

1 Computer
  1.1 Etymology
  1.2 History
    1.2.1 Pre-twentieth century
    1.2.2 First general-purpose computing device
    1.2.3 Later analog computers
    1.2.4 Digital computer development
    1.2.5 Mobile computers become dominant
  1.3 Programs
    1.3.1 Stored program architecture
    1.3.2 Machine code
    1.3.3 Programming language
    1.3.4 Fourth Generation Languages
    1.3.5 Program design
    1.3.6 Bugs
  1.4 Components
    1.4.1 Control unit
    1.4.2 Central processing unit (CPU)
    1.4.3 Arithmetic logic unit (ALU)
    1.4.4 Memory
    1.4.5 Input/output (I/O)
    1.4.6 Multitasking
    1.4.7 Multiprocessing

The Transistor Revolution (Part 1)

Feature by Dr Bruce Taylor HB9ANY, E-mail: [email protected]

Dr Bruce Taylor HB9ANY describes the invention of the tiny device that changed the course of radio history.

At Christmas 1938, working in their small rented garage in Palo Alto, California, two enterprising young men called Bill Hewlett and Dave Packard finished designing a novel wide-range Wien bridge VFO. They took pictures of the instrument sitting on the mantelpiece in their house, made 25 sales brochures and sent them to potential customers. Thus began the electronics company that by 1995 employed over 100,000 people worldwide and generated annual sales of $31 billion.

The oscillator used five thermionic valves, the active devices that had been the mainstay of wireless communications for over 25 years. But less than a decade after HP’s first product went on sale, two engineers working on the other side of the continent at Murray Hill, New Jersey, made an invention that was destined to eclipse the valve and change wireless and electronics forever. On December 23rd 1947, John Bardeen and Walter Brattain at Bell Telephone Laboratories (the research arm of AT&T) succeeded in making the device that set in motion a technological revolution beyond their wildest dreams. It consisted of two gold contacts pressed on a pinhead of semi-conductive material on a metallic base.

The regular News of Radio item in the 1948 New York Times was far from being a blockbuster column. Relegated to page 46, a short article in the edition of July 1st reported that CBS would be starting two new shows for the summer season, “Mr Tutt” and “Our Miss Brooks”, and that “Waltz Time” would be broadcast for a full hour on three successive Fridays.

Second Industrial Revolution – Computer Safety and Security

Copyright Jim Thomson 2013 Safety In Engineering Ltd

“Programming today is a race between software engineers striving to build bigger and better idiot-proof programs, and the Universe trying to produce bigger and better idiots. So far, the Universe is winning.” Rick Cook

“Measuring programming progress by lines of code is like measuring aircraft building progress by weight.” Bill Gates

“The digital revolution is far more significant than the invention of writing or even of printing.” Douglas Engelbart

“Complex systems almost always fail in complex ways.” Columbia Accident Investigation Board Report, August 2003

In the twenty-first century, it is difficult to imagine a world without computers. Almost every aspect of our lives is now tied to computers in some way or other. They are used throughout industry for the control of industrial plant and processes, manufacturing, aircraft, automobiles, washing machines, telephones and telecommunications, etcetera, etcetera. Most domestic electrical hardware now contains some sort of microprocessor control.

Microprocessors are now so completely ubiquitous, and so much of our lives is completely dependent on them, that it is a continuous source of surprise to me that the people and the laboratories that led their invention and development are not household names in the same way as, say, Newton’s discovery of gravity or Darwin’s theory of evolution. Microprocessors have caused an ongoing revolution in our lives.

The first industrial revolution in the nineteenth century led to steam power for factories, ships, and trains, and also to the ready availability of substances like steel, which transformed the lives of our forebears.

ELEC3221 Digital IC & Systems Design Iain McNally Koushik Maharatna Digital IC & Systems Design • Assessment • Books D

ELEC3221 Digital IC & Systems Design

Iain McNally ≈ 15 lectures
Koushik Maharatna ≈ 15 lectures

Integrated Circuit Design (Iain McNally)

Content
– Introduction
– Overview of Technologies
– Layout
– CMOS Processing
– Design Rules and Abstraction
– Cell Design and Euler Paths
– System Design using Standard Cells
– Wider View

Notes & Resources
• https://secure.ecs.soton.ac.uk/notes/bim/notes/icd/

Assessment
• 10% Coursework: L-Edit Gate Design (BIM)
• 90% Examination

Books
• Integrated Circuit Design, a.k.a. Principles of CMOS VLSI Design - A Circuits and Systems Perspective, Neil Weste & David Harris, Pearson, 2011
• Digital System Design with SystemVerilog, Mark Zwolinski, Pearson Prentice-Hall, 2010

History
1947 First Transistor: John Bardeen, Walter Brattain, and William Shockley (Bell Labs)
1952 Integrated Circuits Proposed: Geoffrey Dummer (Royal Radar Establishment) - prototype failed...
1958 First Integrated Circuit: Jack Kilby (Texas Instruments) - Co-inventor
1959 First Planar Integrated Circuit: Robert Noyce (Fairchild) - Co-inventor
1961 First Commercial ICs: Simple logic functions from TI and Fairchild
1965 Moore’s Law: Gordon Moore (Fairchild) observes the trends in integration.

History: Moore’s Law; a Self-fulfilling Prophesy
The whole industry uses the Moore’s Law curve to plan new fabrication facilities.
• Slower - wasted investment
• Faster - too costly
Must keep up with the Joneses.

History: Moore’s Law
Predicts exponential growth in the number of components per chip.

1965 - 1975 Doubling Every Year
In 1965 Gordon Moore observed that the number of components per chip had doubled every year since 1959 and predicted that the trend would continue through to 1975. Moore describes his initial growth predictions as “ridiculously precise”.
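The "doubling every year" rule in the Moore's Law notes above can be made concrete with a little arithmetic. The sketch below is illustrative only: the function name `components_per_chip` and the baseline of one component are assumptions chosen for the example, not figures from the slides. Only the rule itself (one doubling per year, 1965 to 1975) comes from the text.

```python
def components_per_chip(baseline: float, start_year: int, year: int,
                        doubling_period_years: float = 1.0) -> float:
    """Project a component count under a fixed doubling period.

    baseline is the count in start_year; the count doubles once per
    doubling_period_years (1.0 matches the 1965 prediction in the notes).
    """
    elapsed_years = year - start_year
    return baseline * 2 ** (elapsed_years / doubling_period_years)


# Growth factor over the predicted decade, using a hypothetical
# baseline of 1 so the result reads directly as a multiplier.
growth_factor = components_per_chip(1, 1965, 1975)
print(growth_factor)  # ten doublings, i.e. 2 ** 10
```

Ten annual doublings multiply the component count by 2^10, roughly a thousandfold, which gives a sense of why a curve this steep mattered for planning fabrication capacity.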

Of Components, Packaging and Manufacturing Technology

50 Years of Components, Packaging and Manufacturing Technology

IEEE CPMT Society, 445 Hoes Lane, PO Box 1331, Piscataway, NJ 08855; telephone: +1 732 562 5529; fax: +1 732 981 1769; email: [email protected]

IEEE History Center, Rutgers University, 39 Union Street, New Brunswick, NJ 08901; telephone: +1 732 932 1066; fax: +1 732 932 1193; email: [email protected]

The IEEE CPMT Society and its Technologies, 1950 - 2000

Contents

1950s
• Components and the Search for Reliability
• AIEE, IRE, EIA Symposium on Improved Quality Electronic Components
• Dummer’s Prediction
• Standards
• Formation of IRE Professional Group on Component Parts
• Circuit Board Developments
• Miniaturization, Heat Dissipation, Hybrid Technologies

1960s
• IRE Professional Group on Product Engineering and Production
• Commercial Applications
• Increasing Density on the Circuit Board
• Pure Molding Compounds
• VLSI Workshops
• Hybrid Circuit
• Darnell Report on Reliability
• Telstar

Acknowledgements

Many people gave unstintingly of their time and expertise to help with this project. My special thanks go to Jack Balde, Ron Gedney, Charles Eldon, Mauro Walker, and Paul Wesling for their insights; Dimitry Grabbe, who provided access to his first-class components collection; and Gus Shapiro, who shared early material on the ...

Integrated Circuit - Wikipedia, the Free Encyclopedia

Integrated circuit

From Wikipedia, the free encyclopedia

In electronics, an integrated circuit (also known as IC, microcircuit, microchip, silicon chip, or chip) is a miniaturized electronic circuit (consisting mainly of semiconductor devices, as well as passive components) that has been manufactured in the surface of a thin substrate of semiconductor material. Integrated circuits are used in almost all electronic equipment in use today and have revolutionized the world of electronics.

A hybrid integrated circuit is a miniaturized electronic circuit constructed of individual semiconductor devices, as well as passive components, bonded to a substrate or circuit board.

This article is about monolithic integrated circuits.

Figure: Integrated circuit of Atmel Diopsis 740 System on Chip showing memory blocks, logic and input/output pads around the periphery.

Figure: Microchips (EPROM memory) with a transparent window, showing the integrated circuit inside. Note the fine silver-colored wires that connect the integrated circuit to the pins of the package. The window allows the memory contents of the chip to be erased, by exposure to strong ultraviolet light in an eraser device.

Contents

■ 1 Introduction
■ 2 Invention
■ 3 Generations
  ■ 3.1 SSI, MSI and LSI
  ■ 3.2 VLSI
  ■ 3.3 ULSI, WSI, SOC and 3D-IC
■ 4 Advances in integrated circuits
■ 5 Popularity of ICs
■ 6 Classification
■ 7 Manufacture
  ■ 7.1 Fabrication
  ■ 7.2 Packaging
  ■ 7.3 Chip labeling and manufacture date
■ 8 Legal protection of semiconductor chip layouts
■ 9 Other developments
■ 10 Silicon graffiti
■ 11 Key industrial and academic data
  ■ 11.1 Notable ICs
  ■ 11.2 Manufacturers
  ■ 11.3 VLSI conferences
  ■ 11.4 VLSI journals
■ 12 Branch pages
■ 13 See also
■ 14 References
■ 15 External links

Introduction

Integrated Circuit

integrated circuit

Dictionary: integrated circuit n. A complex set of electronic components and their interconnections that are etched or imprinted onto a tiny slice of semiconducting material.

integrated circuitry n.

Britannica Concise Encyclopedia: integrated circuit

Assembly of microscopic electronic components (transistors, diodes, capacitors, and resistors) and their interconnections fabricated as a single unit on a wafer of semiconducting material, especially silicon. Early ICs of the late 1950s consisted of about 10 components on a chip 0.12 in. (3 mm) square. Very large-scale integration (VLSI) vastly increased circuit density, giving rise to the microprocessor. The first commercially successful IC chip (Intel, 1974) had 4,800 transistors; Intel's Pentium (1993) had 3.2 million, and more than a billion are now achievable.

How Products are Made: How is an integrated circuit made?

Background

An integrated circuit, commonly referred to as an IC, is a microscopic array of electronic circuits and components that has been diffused or implanted onto the surface of a single crystal, or chip, of semiconducting material such as silicon. It is called an integrated circuit because the components, circuits, and base material are all made together, or integrated, out of a single piece of silicon, as opposed to a discrete circuit in which the components are made separately from different materials and assembled later.

The MOS Silicon Gate Technology and the First Microprocessors

Federico Faggin

This is a preprint of the article published in La Rivista del Nuovo Cimento, Società Italiana di Fisica, Vol. 38, No. 12, 2015

1. – Introduction

There are a few key technological inventions in human history that have come to characterize an era. For example, the animal-pulled plow was the invention that enabled efficient agriculture, thus gradually ending nomadic culture and creating a new social order that in time produced another seminal invention. This was the steam engine which gave rise to the industrial revolution and created the environment out of which the electronic computer emerged – the third seminal invention that started the information revolution that is now defining our time.

All such inventions have deep roots and a long evolution. Engines powered by water or wind were used for many centuries before being replaced by steam engines. Steam engines were then replaced by internal combustion engines, and finally electric motors became prevalent; each generation of engines being more powerful, more efficient, more versatile, and more convenient than the preceding one. Similarly, the origin of our present computers dates back to the abacus, a computational tool that was used for several millennia before being replaced by mechanical calculators in the 19th century, by electronic computers in the 1950s, and by microchips toward the end of the 20th century.

The invention of the electronic computer was originally motivated by the need for a much faster computational tool than was possible with electromechanical calculators operated by human beings. This improvement was accomplished not only by performing the four elementary operations considerably faster than previously possible with calculators, but even more importantly, by adding the ability to program a long sequence of arithmetic operations that could be executed automatically, without human intervention.

Integrated Circuit (Circuito integrado)

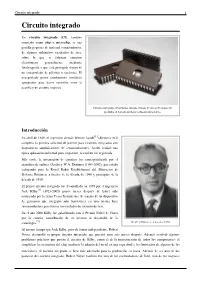

An integrated circuit (IC), also known as a chip or microchip, is a small die of semiconductor material, a few square millimetres in area, on which electronic circuits are fabricated, generally by photolithography, and which is protected inside a plastic or ceramic package. The package has metal conductors suitable for connecting the die to a printed circuit board.

Figure: Memory integrated circuits with a quartz glass window that allows them to be erased by ultraviolet radiation.

Introduction

In April 1949, the German engineer Werner Jacobi[1] (Siemens AG) completed the first patent application for integrated circuits with semiconductor amplifying devices. Jacobi described a typical industrial application for his patent, which was not registered. Later, circuit integration was conceptualized by the radar scientist Geoffrey W.A. Dummer (1909-2002), who was working for the Royal Radar Establishment of the British Ministry of Defence, in the late 1940s and early 1950s.

The first integrated circuit was developed in 1958 by the engineer Jack Kilby[1] (1923-2005), a few months after he was hired by Texas Instruments. It was a germanium device that integrated six transistors on a single semiconductor base to form a phase-shift oscillator. In 2000 Kilby was awarded the Nobel Prize in Physics for the enormous contribution of his invention to the development of technology.[2]

Figure: Geoffrey Dummer in the 1950s.

At the same time as Jack Kilby, but independently, Robert Noyce developed his own integrated circuit, which he patented about six months later.