Flounder: an RL Chess Agent

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Games Ancient and Oriental and How to Play Them, Being the Games Of

CO CD CO GAMES ANCIENT AND ORIENTAL AND HOW TO PLAY THEM. BEING THE GAMES OF THE ANCIENT EGYPTIANS THE HIERA GRAMME OF THE GREEKS, THE LUDUS LATKUNCULOKUM OF THE ROMANS AND THE ORIENTAL GAMES OF CHESS, DRAUGHTS, BACKGAMMON AND MAGIC SQUAEES. EDWARD FALKENER. LONDON: LONGMANS, GEEEN AND Co. AND NEW YORK: 15, EAST 16"' STREET. 1892. All rights referred. CONTENTS. I. INTRODUCTION. PAGE, II. THE GAMES OF THE ANCIENT EGYPTIANS. 9 Dr. Birch's Researches on the games of Ancient Egypt III. Queen Hatasu's Draught-board and men, now in the British Museum 22 IV. The of or the of afterwards game Tau, game Robbers ; played and called by the same name, Ludus Latrunculorum, by the Romans - - 37 V. The of Senat still the modern and game ; played by Egyptians, called by them Seega 63 VI. The of Han The of the Bowl 83 game ; game VII. The of the Sacred the Hiera of the Greeks 91 game Way ; Gramme VIII. Tlie game of Atep; still played by Italians, and by them called Mora - 103 CHESS. IX. Chess Notation A new system of - - 116 X. Chaturanga. Indian Chess - 119 Alberuni's description of - 139 XI. Chinese Chess - - - 143 XII. Japanese Chess - - 155 XIII. Burmese Chess - - 177 XIV. Siamese Chess - 191 XV. Turkish Chess - 196 XVI. Tamerlane's Chess - - 197 XVII. Game of the Maharajah and the Sepoys - - 217 XVIII. Double Chess - 225 XIX. Chess Problems - - 229 DRAUGHTS. XX. Draughts .... 235 XX [. Polish Draughts - 236 XXI f. Turkish Draughts ..... 037 XXIII. }\'ci-K'i and Go . The Chinese and Japanese game of Enclosing 239 v. -

Inside Russia's Intelligence Agencies

EUROPEAN COUNCIL ON FOREIGN BRIEF POLICY RELATIONS ecfr.eu PUTIN’S HYDRA: INSIDE RUSSIA’S INTELLIGENCE SERVICES Mark Galeotti For his birthday in 2014, Russian President Vladimir Putin was treated to an exhibition of faux Greek friezes showing SUMMARY him in the guise of Hercules. In one, he was slaying the • Russia’s intelligence agencies are engaged in an “hydra of sanctions”.1 active and aggressive campaign in support of the Kremlin’s wider geopolitical agenda. The image of the hydra – a voracious and vicious multi- headed beast, guided by a single mind, and which grows • As well as espionage, Moscow’s “special services” new heads as soon as one is lopped off – crops up frequently conduct active measures aimed at subverting in discussions of Russia’s intelligence and security services. and destabilising European governments, Murdered dissident Alexander Litvinenko and his co-author operations in support of Russian economic Yuri Felshtinsky wrote of the way “the old KGB, like some interests, and attacks on political enemies. multi-headed hydra, split into four new structures” after 1991.2 More recently, a British counterintelligence officer • Moscow has developed an array of overlapping described Russia’s Foreign Intelligence Service (SVR) as and competitive security and spy services. The a hydra because of the way that, for every plot foiled or aim is to encourage risk-taking and multiple operative expelled, more quickly appear. sources, but it also leads to turf wars and a tendency to play to Kremlin prejudices. The West finds itself in a new “hot peace” in which many consider Russia not just as an irritant or challenge, but • While much useful intelligence is collected, as an outright threat. -

1999/1 Layout

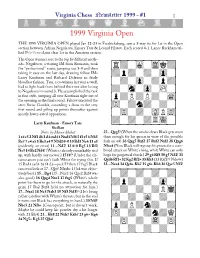

Virginia Chess Newsletter 1999 - #1 1 1999 Virginia Open THE 1999 VIRGINIA OPEN played Jan 22-24 in Fredricksburg, saw a 3-way tie for 1st in the Open section between Adrian Negulescu, Emory Tate & Leonid Filatov. Each scored 4-1. Lance Rackham tal- 1 1 lied 5 ⁄2- ⁄2 to claim clear 1st in the Amateur section. The Open winners rose to the top by different meth- ‹óóóóóóóó‹ ods. Negulescu, a visiting IM from Rumania, took õÏ›‹Ò‹ÌÙ›ú the “professional” route, jumping out 3-0 and then taking it easy on the last day, drawing fellow IMs õ›‡›‹›‹·‹ú Larry Kaufman and Richard Delaune in fairly bloodless fashion. Tate, a co-winner last year as well, õ‹›‹·‹›‡›ú had to fight back from behind this time after losing to Negulescu in round 3. He accomplished the task õ›‹Â‹·‹„‹ú in fine style, jumping all over Kaufman right out of õ‡›fi›fi›‹Ôú the opening in the final round. Filatov executed the semi Swiss Gambit, conceding a draw in the very õfl‹›‰›‹›‹ú first round and piling up points thereafter against mostly lower-rated opposition. õ‹fl‹›‹Áfiflú õ›‹›‹›ÍÛ‹ú Larry Kaufman - Emory Tate Sicilian ‹ìììììììì‹ Notes by Macon Shibut 25...Qxg5! (When the smoke clears Black gets more 1 e4 c5 2 Nf3 d6 3 d4 cxd4 4 Nxd4 Nf6 5 f3 e5 6 Nb3 than enough for his queen in view of the possible Be7 7 c4 a5 8 Be3 a4 9 N3d2 0-0 10 Bd3 Nc6 11 a3 fork on e4) 26 Qxg5 Rxf2 27 Rxf2 Nxf2 28 Qxg6 (evidently an error) 11...Nd7! 12 0-0 Bg5 13 Bf2 Nfxe4 (Now Black will regroup his pieces for a com- Nc5 14 Bc2 Nd4! (White is already remarkably tied bined attack on White’s king, while White can only up, with hardly any moves.) 15 f4!? (Under the cir- hope for perpetual check.) 29 g4 Rf8 30 g5 Nd2! 31 cumstances you can’t fault White for trying this. -

Proposal to Encode Heterodox Chess Symbols in the UCS Source: Garth Wallace Status: Individual Contribution Date: 2016-10-25

Title: Proposal to Encode Heterodox Chess Symbols in the UCS Source: Garth Wallace Status: Individual Contribution Date: 2016-10-25 Introduction The UCS contains symbols for the game of chess in the Miscellaneous Symbols block. These are used in figurine notation, a common variation on algebraic notation in which pieces are represented in running text using the same symbols as are found in diagrams. While the symbols already encoded in Unicode are sufficient for use in the orthodox game, they are insufficient for many chess problems and variant games, which make use of extended sets. 1. Fairy chess problems The presentation of chess positions as puzzles to be solved predates the existence of the modern game, dating back to the mansūbāt composed for shatranj, the Muslim predecessor of chess. In modern chess problems, a position is provided along with a stipulation such as “white to move and mate in two”, and the solver is tasked with finding a move (called a “key”) that satisfies the stipulation regardless of a hypothetical opposing player’s moves in response. These solutions are given in the same notation as lines of play in over-the-board games: typically algebraic notation, using abbreviations for the names of pieces, or figurine algebraic notation. Problem composers have not limited themselves to the materials of the conventional game, but have experimented with different board sizes and geometries, altered rules, goals other than checkmate, and different pieces. Problems that diverge from the standard game comprise a genre called “fairy chess”. Thomas Rayner Dawson, known as the “father of fairy chess”, pop- ularized the genre in the early 20th century. -

Chess Mag - 21 6 10 16/11/2020 17:49 Page 3

01-01 Cover - December 2020_Layout 1 16/11/2020 18:39 Page 1 03-03 Contents_Chess mag - 21_6_10 16/11/2020 17:49 Page 3 Chess Contents Founding Editor: B.H. Wood, OBE. M.Sc † Executive Editor: Malcolm Pein Editorial....................................................................................................................4 Editors: Richard Palliser, Matt Read Malcolm Pein on the latest developments in the game Associate Editor: John Saunders Subscriptions Manager: Paul Harrington 60 Seconds with...Bruce Pandolfini ............................................................7 We discover all about the famous coach and Queen’s Gambit adviser Twitter: @CHESS_Magazine Twitter: @TelegraphChess - Malcolm Pein A Krushing Success .............................................................................................8 Website: www.chess.co.uk Irina Krush and Wesley So were victorious in the U.S. Championships Subscription Rates: Escapism!..............................................................................................................14 United Kingdom Matthew Lunn headed for the Dolomites along with some friends 1 year (12 issues) £49.95 2 year (24 issues) £89.95 Magnusficent......................................................................................................18 3 year (36 issues) £125 Magnus Carlsen has produced the odd instructive effort of late Europe 1 year (12 issues) £60 How Good is Your Chess?..............................................................................22 2 year (24 issues) £112.50 -

Playing the Petroff Swapnil Dhopade

Playing the Petroff By Swapnil Dhopade Quality Chess www.qualitychess.co.uk Contents Key to Symbols used & Bibliography 4 Preface 5 Third Move Alternatives 1 3.¥c4?! & 3.¤c3 7 2 3.d4 21 3 6.¤xd7 55 3.¤xe5 d6 4 4th Move Alternatives 75 4.¤f3 ¤xe4 5 5.¥d3 & 5.c4 102 6 5.d3 123 7 5.£e2 139 Old Main Line 8 5.d4 154 9 9.£c2 169 10 9.¤c3 186 Modern Main Line 11 5.¤c3 200 12 11.¢b1 & 11.¦he1 221 Avoiding the Petroff 13 Centre Game 240 14 King’s Gambit 264 15 Vienna Game 287 16 Bishop’s Opening 301 Appendix: Konguvel Ponnuswamy – Swapnil Dhopade 317 Variation Index 320 Preface Welcome, dear readers, to my first opening book – on the Petroff Defence. The idea for this book first occurred during the 2018 Olympiad in Batumi, Georgia, an event which marked a turning point in my career. Having become a grandmaster in 2016, I had been coaching talented youngsters (including GM Raunak Sadhwani, who became a GM at just 13 years of age!) for quite a while and preparing opening files for them. In 2018, I was given the opportunity to work as part of a team of seconds, headed by GM Jacob Aagaard, helping the Indian Women’s team at the Olympiad. Performing this work at a world-class competition required me not only to prepare opening ideas at a more intricate level, but also to supplement my ChessBase analysis with brief but lucid comments at critical moments, to help the players retain the most important information. -

Saturday Enrichment Fall 2019 Introduction to Chess: Exercising Your Brain

SATURDAY ENRICHMENT FALL 2019 INTRODUCTION TO CHESS: EXERCISING YOUR BRAIN Instructor: Cary R. Easterday Instructor Email: [email protected] Location: Loew Hall 118 Course Description A healthy lifestyle includes physical and mental fitness. Playing chess improves memory, concentration, foresight, math skills, reading skills, creativity, and problem-solving skills. Each week the instructor will provide puzzles, lessons, videos, and one-on-one play, monitor student progress, and help with questions. Lessons include setup, piece movements, strategy, tactics, openings, middlegame, endgame, chess notation, popular variants, and history. No experience is necessary. Learning Outcomes By the end of this course, students should be able to: • Play standard chess, including such moves as castle, en passant, and pawn promotion. • Demonstrate chess tactics such as forks, pins, skewers, batteries, discovered attacks, undermining, overloading, deflection, and sacrifices. • Record games using algebraic and descriptive chess notations. • Play popular chess variants such as Bughouse, Chess960, Transcendental, King of the Hill, Upside-down, Atomic, Pawns Game, Peasants’ Revolt, and four-player Quad Kingdom. • Play in a chess tournament, if they choose (optional participation). Instructional Strategies • The instructor uses inquiry-based instruction to inspire student creativity and problem- solving abilities. • Students play chess games via cooperative learning and differentiation. • Each student receives a gold membership to Chesskid.com and has access to technology (computers, notebooks, phones) to solve chess puzzles, take self-paced chess lessons, and play online chess games with computer bots or other students. Student Assessment There is no formal assessment for this course. The instructor will observe one-on-one play each week and will informally assess a student’s progress as they answer questions, solve puzzles, and progress in lessons/ranking on Chesskid.com. -

The Petroff Defence Cyrus Lakdawala

opening repertoire the Petroff defence Cyrus Lakdawala www.everymanchess.com About the Author is an International Master, a former National Open and American Open Cyrus Lakdawala Champion, and a six-time State Champion. He has been teaching chess for over 30 years, and coaches some of the top junior players in the U.S. Also by the Author: Play the London System A Ferocious Opening Repertoire The Slav: Move by Move 1...d6: Move by Move The Caro-Kann: Move by Move The Four Knights: Move by Move Capablanca: Move by Move The Modern Defence: Move by Move Kramnik: Move by Move The Colle: Move by Move The Scandinavian: Move by Move Botvinnik: Move by Move The Nimzo-Larsen Attack: Move by Move Korchnoi: Move by Move The Alekhine Defence: Move by Move The Trompowsky Attack: Move by Move Carlsen: Move by Move The Classical French: Move by Move Larsen: Move by Move 1...b6: Move by Move Bird’s Opening: Move by Move Petroff Defence: Move by Move Fischer: Move by Move Anti-Sicilians: Move by Move Opening Repertoire: ...c6 First Steps: the Modern Caruana: Move by Move Contents About the Author 3 Bibliography 5 Introduction 6 1 The Cochrane Gambit 14 2 The Scotch Petroff 32 3 The Main Line Petroff 98 4 The Main Line Sidelines 190 5 The New Main Line 251 6 The Three Knights Petroff 303 Introduction W________W [rhb1kgW4] [0p0pDp0p] [WDWDWhWD] [DWDW0WDW] [WDWDPDWD] [DWDWDNDW] [P)P)W)P)] [$NGQIBDR] W--------W What is Your Opening Utopia? In the foolishness of youth I took a vast dislike to the Petroff, a hateful creature with no perceivable reason to exist, other than to annoy 1 e4 players. -

An Analysis of the Ideologies, Strategies and Applications of Chess and Martial Arts with Respect to Transferable Skills Torriente Toliver Langston University

Langston University Digital Commons @ Langston University McCabe Thesis Collection Student Works 5-2008 An Analysis of the Ideologies, Strategies and Applications of Chess and Martial Arts With Respect to Transferable Skills Torriente Toliver Langston University Follow this and additional works at: http://dclu.langston.edu/mccabe_theses Part of the Other Arts and Humanities Commons, Sociology Commons, and the Sports Studies Commons Recommended Citation Toliver, Torriente, "An Analysis of the Ideologies, Strategies and Applications of Chess and Martial Arts With Respect to Transferable Skills" (2008). McCabe Thesis Collection. Paper 46. This Thesis is brought to you for free and open access by the Student Works at Digital Commons @ Langston University. It has been accepted for inclusion in McCabe Thesis Collection by an authorized administrator of Digital Commons @ Langston University. For more information, please contact [email protected]. AN ANALYSIS OF THE IDEOLOGIES, STRATEGIES, AND APPLICATIONS OF CHESS AND MARTIAL ARTS WITH RESPECT TO TRANSFERABLE SKILLS By Torriente Toliver Acknowledgements This work would not be possible without the training in the skills that I learned to transfer. I want to thank Allen Hammond, my high school chess coach and world history teacher, who taught me the value of information, research, and critical thinking. I would also like to thank the staff of Beverly Pagoda Martial Arts Academy who taught me to put my critical thinking skills to use with practical application and not to waste them on pontification. I would like to thank Sensei Kates Jr. specifically because it was he that taught me about transferable skills and inspired me to create an effective way to analyze my skills and teach others to do the same. -

Excellent Tool for Standardized Test Preparation!

Excellent Tool for Standardized Test Preparation! • Latin and Greek roots • Figurative language • Reading comprehension • Fact and opinion • Predicting outcomes • Answer key Reading Grade 6 Frank Schaffer Publications® Spectrum is an imprint of Frank Schaffer Publications. Printed in the United States of America. All rights reserved. Except as permitted under the United States Copyright Act, no part of this publication may be reproduced or distributed in any form or by any means, or stored in a database or retrieval system, without prior written permission from the publisher, unless otherwise indicated. Frank Schaffer Publications is an imprint of School Specialty Publishing. Copyright © 2007 School Specialty Publishing. Send all inquiries to: Frank Schaffer Publications 8720 Orion Place Columbus, Ohio 43240-2111 Spectrum Reading—grade 6 ISBN 978-0-76823-826-6 Index of Skills Reading Grade 6 Numerals indicate the exercise pages on which these skills appear. Vocabulary Skills Drawing Conclusions 3, 7, 17, 23, 25, 29, 31, 33, 41, 43, 47, 51, 61, 65, 75, 79, 87, 89, 93, 95, 97, 99, 101, Abbreviations 5, 11, 15, 27, 39, 59, 61, 69, 79, 81, 103, 107, 109, 113, 117, 121, 123, 127, 133, 135, 111 139, 143, 151 Affixes 3, 9, 21, 29, 35, 51, 59, 65, 71, 77, 89, 95, Fact and Opinion 7, 31, 45, 53, 71, 83, 99, 115 109, 111, 117, 123, 125 Facts and Details all activity pages Antonyms 13, 31, 45, 53, 61, 67, 83, 91, 105, 135, 141 Fantasy and Reality 39, 57, 125, 143 Classification 5, 21, 41, 55, 125, 137, 151 Formulates Ideas and Opinions 103, 107, -

A Beginner's Guide to Coaching Scholastic Chess

A Beginner’s Guide To Coaching Scholastic Chess by Ralph E. Bowman Copyright © 2006 Foreword I started playing tournament Chess in 1962. I became an educator and began coaching Scholastic Chess in 1970. I became a tournament director and organizer in 1982. In 1987 I was appointed to the USCF Scholastic Committee and have served each year since, for seven of those years I served as chairperson or co-chairperson. With that experience I have had many beginning coaches/parents approach me with questions about coaching this wonderful game. What is contained in this book is a compilation of the answers to those questions. This book is designed with three types of persons in mind: 1) a teacher who has been asked to sponsor a Chess team, 2) parents who want to start a team at the school for their child and his/her friends, and 3) a Chess player who wants to help a local school but has no experience in either Scholastic Chess or working with schools. Much of the book is composed of handouts I have given to students and coaches over the years. I have coached over 600 Chess players who joined the team knowing only the basics. The purpose of this book is to help you to coach that type of beginning player. What is contained herein is a summary of how I run my practices and what I do with beginning players to help them enjoy Chess. This information is not intended as the one and only method of coaching. In all of my college education classes there was only one thing that I learned that I have actually been able to use in each of those years of teaching. -

A Conversation with a Ten Year Old Author

YOUNG GUNS Oliver Boydell came up with a simple plan: language because my goal was to teach the 1. Pick exciting games from the past important chess concepts 2. Make the notes breezy and brief and this is best done when 3. Use lots of diagrams the language is clear and 4. Have questions for the reader with answers everyone – kids and adults – in the back of the book can understand. 5. Have a “lesson” or two, a concept, to take with them that they should remember How do you go through a about playing good chess game? Do you take notes? 6. Give a favorite move – one that might Do you use a chess engine stick with the reader. as you go? I take notes on what I think For the miserable types that give a is interesting. I might go disapproving “harumph” to all this, you’ve over it several times to By Pete Tamburro forgotten what it is to be a kid. make sure I didn’t make any mistakes, like missing an Our chat was fun and we share it here. obvious tactic. I would use the engine several times, even different engines, to make sure I agreed with ack in 2010, I was assigned to How does it feel to be an the evaluation. I also look write a review of a 14-year- author? for certain ideas I can write old boy’s first chess book, I’m proud of myself and am about, like doubling rooks Mastering Positional Chess. really excited. I had never attacking games.