Identifying Dependencies Among Delays

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Getting to PTB in Braunschweig

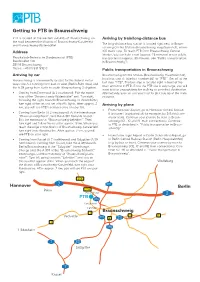

Getting to PTB in Braunschweig PTB is located on the western outskirts of Braunschweig, on Arriving by train/long-distance bus the road between the districts of Braunschweig-Kanzlerfeld The long-distance bus station is located right next to Braun- and Braunschweig-Watenbüttel. schweig Central Station (Braunschweig Hauptbahnhof), where Address ICE trains stop. To reach PTB from Braunschweig Central Station, you can take a taxi (approx. 15 minutes) or use public Physikalisch-Technische Bundesanstalt (PTB) transportation (approx. 30 minutes, see “Public transportation Bundesallee 100 in Braunschweig”). 38116 Braunschweig Phone: +49 (0) 531 592-0 Public transportation in Braunschweig Arriving by car Braunschweig Central Station (Braunschweig Hauptbahnhof), local bus stop A: take bus number 461 to “PTB”. Get off at the Braunschweig is conveniently located for the federal motor- last stop “PTB”. The bus stop is located right in front of the ways: the A 2 running from east to west (Berlin-Ruhr Area) and main entrance to PTB. Since the PTB site is very large, you will the A 39 going from north to south (Braunschweig-Salzgitter). want to plan enough time for walking to your final destination. • Coming from Dortmund (A 2 eastbound): Exit the motor- Alternatively, you can ask your host to pick you up at the main way at the “Braunschweig-Watenbüttel” exit. Turn right, entrance. following the signs towards Braunschweig. In Watenbüttel, turn right at the second set of traffic lights. After approx. 2 Arriving by plane km, you will see PTB‘s entrance area on your left. • From Hannover Airport, go to Hannover Central Station • Coming from Berlin (A 2 westbound): At the interchange (Hannover Hauptbahnhof) for example, by S-Bahn (com- “Braunschweig-Nord”, take the A 391 towards Kassel. -

Folgende Stores Sind Auch Weiterhin Für

FOLGENDE STORES SIND AUCH WEITERHIN FÜR EUCH GEÖFFNET! BERLIN BERLIN BAHNHOF ALEXANDERPLATZ: Mo bis Fr 6:00 - 22:00 Uhr / Sa & So 7:00 - 22:00 Uhr BERLIN BRANDENBURGER TOR: Mo bis So 8:00 - 21:00 Uhr BERLIN BAHNHOF SPANDAU: Mo bis Fr 6:00 - 21:00 Uhr Sa 7:00 - 21:00 Uhr / So 8:00 - 21:00 Uhr BERLIN BAHNHOF FRIEDRICHSTRASSE: Mo bis Fr 6:00 - 21:00 Uhr Sa 7:00 - 21:00 Uhr / So 8:00 - 21:00 Uhr BERLIN GESUNDBRUNNEN CENTER: Mo bis Sa 8:00 - 20:00 Uhr So GESCHLOSSEN BERLIN HAUPTBAHNHOF: Mo bis So 8:00 - 21:00 Uhr BERLIN HERMANNPLATZ: Mo bis Fr 8:00 - 20:00 Uhr Sa 9:00 - 20:00 Uhr / So 10:00 - 20:00 Uhr BERLIN KAISERDAMM: Mo bis Fr 8:00 - 20:00 Uhr Sa 9:00 - 20:00 Uhr / So 10:00 - 20:00 Uhr BERLIN OSTBAHNHOF: Mo bis Fr 7:00 - 21:00 Uhr / Sa & So 8:00 - 21:00 Uhr BERLIN RATHAUSPASSAGEN: Mo bis So 8:00 - 22:00 Uhr BERLIN RING CENTER: Mo bis Sa 8:00 - 20:00 Uhr / So 10:00 - 18:00 Uhr BERLIN SONY CENTER: Mo bis So 8:00 - 21:00 Uhr BERLIN WILMERSDORFER: Mo bis Sa 8:00 - 20:00 Uhr / So 10:00 - 18:00 Uhr BERLIN ZOOM: Mo bis So 7:00 - 22:00 Uhr / Sa & So 8:00 - 22:00 Uhr LEIPZIG LEIPZIG HAUPTBAHNHOF: Mo bis So 8:00 - 20:00 Uhr LEIPZIG NOVA EVENTIS: Mo bis Sa 11:00 - 18:00 Uhr So GESCHLOSSEN LEIPZIG PAUNSDORF: Mo bis So 8:00 - 20:00 Uhr So GESCHLOSSEN 1/5 NRW BOCHUM RHEIN-RUHR PARK: Mo bis Fr 10:00 - 20:00 Uhr Sa 9:00 - 20:00 Uhr So GESCHLOSSEN BONN POSTSTRASSE: Mo bis Sa 7:00 - 22:00 Uhr So 9:00 - 22:00 Uhr DORTMUND HAUPTBAHNHOF: Mo bis Fr 6:00 - 22:00 Uhr Sa & So 8:00 - 22:00 Uhr DORTMUND WESTENHELLWEG: Mo bis So 10:00 - 20:00 Uhr DÜSSELDORF HAUPTBAHNHOF: -

Was Kann Jetzt Noch Kommen? Über Neue Herausforderungen Und Das Nächste Sommermärchen

SOMMER 2018 PHILIPP LAHM WAS KANN JETZT NOCH KOMMEN? ÜBER NEUE HERAUSFORDERUNGEN UND DAS NÄCHSTE SOMMERMÄRCHEN NACHTZUG NACH MOSKAU SOMMER-SCHMINKTIPPS KOLUMNE VON KAMINER Reisereportage: von GNTM-Model Der Bestsellerautor über Deutschland nach Russland Mandy Bork verrät ihre die Menschen an seinen in 21 Stunden. Beauty-Geheimnisse. Lieblingsbahnhöfen. ALLES SCHÖN FRISCH Liebe Leserinnen, liebe Leser, Sie haben es sicherlich sofort bemerkt: Wir haben den Look unseres Magazins aufgefrischt und diese erste Ausgabe in ein sommerliches Gelb getaucht. Und nicht nur das: Sie finden im Heft auch neue Story-Formate – mal mitreißend, mal augenzwin- kernd und immer unterhaltend. Dazu bieten wir Ihnen Tipps zu Lifestyle, Shopping, Essen, Beauty und natürlich den Einkaufsbahn- höfen. Unser Credo: „Mein Bahnhof“ soll Ihnen Spaß machen. In dieser Ausgabe widmen wir uns den Hauptdarstellern des Sommers: dem Fußball, gutem Essen vom Grill und dem perfekten Style für laue Abende. Passend zur WM haben wir eine Fußball- legende getroffen: Ex-Nationalspieler Philipp Lahm, der als DFB-Botschafter bei der Weltmeisterschaft in Russland der deut- schen Mannschaft zur Seite steht. Mit ihm haben wir über Erfolge, Niederlagen und Zukunftsträume gesprochen. Einen kleinen Einblick in die Eigenheiten des WM-Gastgeberlandes liefert unser Reiseautor. Er berichtet von 21 Stunden im Nachtzug von Berlin nach Moskau. Für all jene, die den Sommer in Deutschland verbringen, haben wir die besten Veranstaltungen zusammengestellt – erstmals auch speziell für Ihre Region. Und wem es zu heiß wird, der er- fährt, wie er vom Bahnhof zum nächsten Badesee kommt. Dort können Sie eine der Grundzutaten eines gelungenen Sommer- tages genießen: ein Eis am Stiel. Am besten während der Lektüre unseres Magazins essen – das bringt doppelte Erfrischung. -

Cards Im GVH Regionaltarif 2015

Cards im GVH Regionaltarif 2015 Für Celle, Peine, Schaumburg, Heidekreis, Hameln-Pyrmont, Nienburg/Weser, Hildesheim und Gifhorn gvh.de Stand: 01.01.2015 Bahn frei für den Regionaltarif Mit GVH MobilCards im Regionaltarif können Sie günstig auf Bahnstrecken aus allen Landkreisen rund um die Region Hannover in das GVH Gebiet hineinfahren – und umgekehrt. Die Anschlussfahrten mit üstra und RegioBus im GVH Gebiet sind inklusive. Der besondere Vorteil Mit einer GVH MobilCard im Regionaltarif können Sie z. B. aus Nienburg nicht nur nach Hannover fahren, sondern auch weiter nach Hildesheim oder Peine. Mit einer übertragbaren GVH MobilCard im Regionaltarif sind Sie einen Monat lang günstig unterwegs und können innerhalb der von Ihnen gewählten Zonen fahren, so oft Sie möchten. Die GVH MobilCard ist ab dem aufgestempelten Datum bis zum Vortag des Folgemonats gültig, z. B. vom 17. Mai bis zum 16. Juni. Rundum sorglos mit dem GVH Abo Noch mehr sparen Sie mit einer GVH MobilCard im Jahres- Abo. Ihre GVH MobilCard im Abo kommt bequem per Post zu Ihnen nach Hause. Die monatlichen Beträge werden von Ihrem Konto abgebucht. Sie können den Jahresbetrag auch im Voraus zahlen. Dann erhalten Sie 2 % Rabatt und sind ein Jahr lang sicher vor Preiserhöhungen. Die Mitnahmeregelung Der Familienausflug ist mit drin: Mit IhrerGVH MobilCard können Sie zusätzlich einen Erwachsenen und bis zu drei Kinder unter 18 Jahren mitnehmen: montags bis freitags von 19.00 Uhr bis 03.00 Uhr des nächsten Tages samstags, sonn- und feiertags ganztägig am 24. und 31.12. ganztägig Die Mitnahmeregelung gilt für GVH MobilCard, auch im Abonnement, nicht jedoch für GVH MobilCards 60plus und nicht bei Cards für Schüler und Auszubildende. -

How to Find Us Pwc Legal in Hanover

How to find us PwC Legal in Hanover By car • Leave the Messeschnellweg at the Please note that you are only permitted to Weidetor/Medizinische Hochschule exit drive into Hanover city if you have a valid and follow Weidetorkreisel until you reach environmental badge for your vehicle Karl-Wiechert-Allee. (Umweltplakette). • Continue along Karl-Wiechert-Allee (approximately 2 kilometres) and then From the A7 Hamburg/Kassel turn right into Berckhusenstraße (signs • Take the A7 towards Hannover and towards Kleefeld). continue to the Hannover-Kirchhorst exit. • Follow the curving priority road. • Turn right onto the A37 and take the • Then take the first turning on the right Messeschnellweg(express way) B3/B6 into Fuhrberger Straße. towards Messe (exhibition centre). • PwC Legal is on the left. • Leave Messeschnellweg at the Weidetor/ Medizinische Hochschule exit and follow From Hannover Mitte (Hanover city Weidetorkreisel until you reach Karl- centre) Wiechert-Allee. • Follow the signs towards Messe PricewaterhouseCoopers Legal AG 52° 22´ 43.28´´ N • Continue along Karl-Wiechert-Allee (exhibition centre). Rechtsanwaltsgesellschaft 9° 48´ 14.44´´ E (approximately 2 kilometres) and then • Follow the signs towards Hannover Fuhrberger Straße 5 turn right into Berckhusenstraße (signs Kleefeld. Continue on Berckhusenstraße 30625 Hannover Tel: +49 511 5357-5745 towards Kleefeld). until you reach Hannover Heideviertel. Fax: +49 511 5357-5300 • Follow the curving priority road. • Turn left into Fuhrberger Straße. www.pwclegal.de • Then take the first turning on the right • PwC Legal is on the left. into Fuhrberger Straße. • PwC Legal is on the left. From the airport • Approximately 20 minutes by taxi. • PwC Legal is on the left (approximately You are now at the corner of Berckhusen- From the A2 Berlin/Dortmund • If you prefer to use public transport, take 3 minutes on foot). -

Coffee House Straße PLZ Stadt 1 Aachen Markt Markt 35 52062 Aachen 2 Sony-Center Potsdamer Str

Coffee House Straße PLZ Stadt 1 Aachen Markt Markt 35 52062 Aachen 2 Sony-Center Potsdamer Str. 10785 Berlin 3 Bahnhof Alexanderplatz Dircksenstr. 2 10179 Berlin 4 Schloßstr. 129 Schloßstr. 129 12163 Berlin 5 Berlin Hauptbahnhof Europaplatz 1 10557 Berlin 6 Pariser Platz Pariser Platz 4A 10117 Berlin 7 IHZ - Friedrichstrasse 96 Friedrichstr. 96 10117 Berlin 8 Friedrichstrasse 61 Friedrichstr. 61 10117 Berlin 9 Kurfuerstendamm 231 Kurfürstendamm 231 10719 Berlin 10 Potsdamer Arkaden Alte Potsdamer Str. 7 10785 Berlin 11 Friedrichstrasse 210 Friedrichstr. 210 10969 Berlin 12 Potsdamer Platz 5 Potsdamer Platz 5 10785 Berlin 13 Kurfuerstendamm 26a Kurfürstendamm 26A 10719 Berlin 14 Alexanderplatz Panoramastr. 1A 10178 Berlin 15 Alexa Shopping Centre Grunerstr. 20 10179 Berlin 16 Kurfuerstendamm 61 Kurfürstendamm 61 10707 Berlin 17 TXL - Tegel - Terminal A Flughafen Tegel 13405 Berlin 18 Wilmersdorfer Arcaden Wilmersdorfer Str. 53-54 10627 Berlin 19 Rewe Ackerstraße Invalidenstrasse 158 10115 Berlin 20 Niedernstrasse 41 Niedernstr. 6 33602 Bielefeld 21 Dr.Ruer Platz Dr.-Ruer-Platz 44787 Bochum 22 Sternstrasse 67 Sternstr. 67 53111 Bonn 23 T-Mobile Hauptverwaltung Landgrabenweg 151 53227 Bonn 24 Muensterplatz Münsterplatz 21 53111 Bonn 25 Bremen Hauptbahnhof Bahnhofsplatz 15 28195 Bremen 26 Marktstrasse 3 Marktstr. 3 28195 Bremen 27 Waterfront C02 AG-Weser-Str. 28237 Bremen 28 Waterfront Kiosk Use Akschen 4 28237 Bremen 29 Luisenplatz 4 Luisenplatz 4 64283 Darmstadt 30 Thier Galerie Westenhellweg 102-106 44137 Dortmund 31 Markt 6 Markt 6 44137 Dortmund 32 Ostenhellweg 19 Kleppingstr. 2 44135 Dortmund 33 Centrum Galerie Prager Str. 15 1069 Dresden 34 Altmarkt Altmarkt 7 1067 Dresden 35 Dresden Hauptbahnhof Wiener Platz 4 1069 Dresden 36 City Palais Königstr. -

Coffee House Straße PLZ Stadt Aachen Markt Markt 35 52062 Aachen Sony-Center Potsdamer Str

Coffee House Straße PLZ Stadt Aachen Markt Markt 35 52062 Aachen Sony-Center Potsdamer Str. 10785 Berlin Bahnhof Alexanderplatz Dircksenstr. 2 10179 Berlin Schloßstr. 129 Schloßstr. 129 12163 Berlin Berlin Hauptbahnhof Europaplatz 1 10557 Berlin Pariser Platz Pariser Platz 4A 10117 Berlin IHZ - Friedrichstrasse 96 Friedrichstr. 96 10117 Berlin Friedrichstrasse 61 Friedrichstr. 61 10117 Berlin Kurfuerstendamm 231 Kurfürstendamm 231 10719 Berlin Potsdamer Arkaden Alte Potsdamer Str. 7 10785 Berlin Friedrichstrasse 210 Friedrichstr. 210 10969 Berlin Potsdamer Platz 5 Potsdamer Platz 5 10785 Berlin Kurfuerstendamm 26a Kurfürstendamm 26A 10719 Berlin Alexanderplatz Panoramastr. 1A 10178 Berlin Alexa Shopping Centre Grunerstr. 20 10179 Berlin Kurfuerstendamm 61 Kurfürstendamm 61 10707 Berlin TXL - Tegel - Terminal A Flughafen Tegel 13405 Berlin Wilmersdorfer Arcaden Wilmersdorfer Str. 53-54 10627 Berlin Rewe Ackerstraße Invalidenstrasse 158 10115 Berlin Niedernstrasse 41 Niedernstr. 6 33602 Bielefeld Dr.Ruer Platz Dr.-Ruer-Platz 44787 Bochum Sternstrasse 67 Sternstr. 67 53111 Bonn T-Mobile Hauptverwaltung Landgrabenweg 151 53227 Bonn Muensterplatz Münsterplatz 21 53111 Bonn Kohlmarkt 18 Kohlmarkt 18 38100 Braunschweig Bremen Hauptbahnhof Bahnhofsplatz 15 28195 Bremen Marktstrasse 3 Marktstr. 3 28195 Bremen Waterfront C02 AG-Weser-Str. 28237 Bremen Waterfront Kiosk Use Akschen 4 28237 Bremen Luisenplatz 4 Luisenplatz 4 64283 Darmstadt Thier Galerie Westenhellweg 102-106 44137 Dortmund Markt 6 Markt 6 44137 Dortmund Ostenhellweg 19 Kleppingstr. 2 44135 Dortmund Centrum Galerie Prager Str. 15 1069 Dresden Altmarkt Altmarkt 7 1067 Dresden Dresden Hauptbahnhof Wiener Platz 4 1069 Dresden City Palais Königstr. 39 47051 Duisburg Duisburg Hauptbahnhof Mercatorstr. 19-25 47051 Duisburg Forum Königstr. 48 47051 Duisburg Erkrather Strasse Erkrather Str. 364 40231 Düsseldorf DUS - Flughafen Terminal B LandsideFlughafenstr. -

Challenges of Urban and Rural Transformation

20th International Symposium on Society and Resource Management Challenges of Urban and Rural Transformation June 9-13,2014 Hannover, Germany Program ISSRM 2014 Hannover Contents Welcome to the ISSRM 2014 in Hannover 3 About our Partners 4 Conference Planning Committee & Others 5 Conference Venue 6 Getting Around in Hannover 8 Conference Logistics 9 Canteen 10 Conference Dinner 11 In-Conference Field Trips 12 Post-Conference Field Trips 14 Workshops 16 Key Note Speeches 18 Student Events 20 Schedule Overview 21 Detailed Schedule 26 Poster Session 46 Session List 48 Author & Presenter List 50 2 Welcome to the ISSRM 2014 in Hannover! Challenges of Urban and Rural Transformation Dear ISSRM Participants: On behalf of IASNR and the local organizing team from Leibniz University Hannover (LUH), we would like to extend a warm welcome to you! We have tried to deliver an exciting scientific program at the always dynamic interface of natural resources and society along with social events that enable you to enjoy some of the sights of Hannover and Northern Germany. Although traditionally strong in engineering, LUH has had a remarkable track record in the field of natural resource management and society. Eduard Pestel, who would have celebrared his 100th birthday just the week prior to the ISSRM conference, was not only one of the founders of the Club of Rome, but also a professor at this university. In addition, LUH is considered the birthplace of modern landscape planning and nature conservation concepts after 1945, influencing research in the field that decades later was named „ecosystem services.“ The City of Hannover with its manyfold approaches to become one of the world‘s most sustainable cities provides a great backdrop for all of this. -

DB Station&Service AG Geschäftsbericht 2003

DB Station&Service AG Geschäftsbericht 2003 DB Station &AG Service Geschäftsbericht 2003 Entwicklung im Geschäftsjahr 2003 Umsatzerlöse Betriebliches Ergebnis Brutto-Investitionen in Mio. € nach Zinsen in Mio. € in Mio. € 811 851 –215 40 591 647 1.200 100 750 1.000 0 600 800 450 –100 600 300 400 – 200 150 200 – 300 2002 2003 2002 2003 2002 2003 2002 zu 2003: +4,9% 2002 zu 2003: 2002 zu 2003: +9,5% +255 Mio.€ Wesentliche Kennzahlen Veränd. in Mio. € 2003 2002 in % Umsatz 851 811 + 4,9 Ergebnis der gewöhnlichen Geschäftstätigkeit 1) 37 – 251 – EBITDA vor Altlastenerstattungen 178 – 91 – EBITDA 2) 178 – 88 – EBIT 3) 77 – 185 – Betriebliches Ergebnis nach Zinsen 4) 40 – 215 – Return on Capital Employed in % 3,5 – 8,0 – Bilanzsumme 2.842 2.645 + 7,4 Anlagevermögen 2.606 2.476 + 5,2 Eigenkapital 1.202 1.202 – Zinspflichtige Verbindlichkeiten 835 881 – 5,2 Cashflow vor Steuern 138 – 152 – Brutto-Investitionen 647 591 + 9,5 Netto-Investitionen 5) 279 285 – 2,2 Mitarbeiter per 31.12. 5.066 5.255 – 3,7 Veränd. Leistungskennzahlen 2003 2002 in % Anzahl der Bahnhöfe 5.443 5.580 – 2,5 1) Die DB Station&Service AG hat einen Ergebnisabführungsvertrag mit der Deutschen Bahn AG 2) Betrieblich ermitteltes Ergebnis vor Steuern, Zinsen sowie Abschreibungen (bereinigt um Sonderfaktoren) 3) Betrieblich ermitteltes Ergebnis vor Steuern und Zinsen (bereinigt um Sonderfaktoren) 4) Betrieblich ermittelte Ergebnisgröße (bereinigt um Sonderfaktoren) 5) Brutto-Investitionen abzüglich Baukostenzuschüssen von Dritten Inhalt 2 Vorwort des Vorstandsvorsitzenden 6 Lagebericht 28 Jahresabschluss 45 Bestätigungsvermerk 47 Organe 50 Bericht des Aufsichtsrats 54 Bahnhöfe Vorwort des Vorstandsvorsitzenden Sehr geehrte Damen und Herren, das Jahr 2003 war ein sehr erfolgreiches Jahr für die DB Station&Service AG. -

Promoting Intermodal Connectivity at California's High-Speed Rail Stations

MTI Funded by U.S. Department of Services Transit Census California of Water 2012 Transportation and California Promoting Intermodal Department of Transportation Connectivity at California’s High-Speed Rail Stations MTI ReportMTI 12-02 MTI Report 12-47 December 2012 MINETA TRANSPORTATION INSTITUTE MTI FOUNDER Hon. Norman Y. Mineta The Mineta Transportation Institute (MTI) was established by Congress in 1991 as part of the Intermodal Surface Transportation Equity Act (ISTEA) and was reauthorized under the Transportation Equity Act for the 21st century (TEA-21). MTI then successfully MTI BOARD OF TRUSTEES competed to be named a Tier 1 Center in 2002 and 2006 in the Safe, Accountable, Flexible, Efficient Transportation Equity Act: A Legacy for Users (SAFETEA-LU). Most recently, MTI successfully competed in the Surface Transportation Extension Act of 2011 to Founder, Honorable Norman Joseph Boardman (Ex-Officio) Diane Woodend Jones (TE 2016) Michael Townes* (TE 2017) be named a Tier 1 Transit-Focused University Transportation Center. The Institute is funded by Congress through the United States Mineta (Ex-Officio) Chief Executive Officer Principal and Chair of Board Senior Vice President Department of Transportation’s Office of the Assistant Secretary for Research and Technology (OST-R), University Transportation Secretary (ret.), US Department of Amtrak Lea+Elliot, Inc. Transit Sector, HNTB Transportation Centers Program, the California Department of Transportation (Caltrans), and by private grants and donations. Vice Chair Anne Canby (TE 2017) Will Kempton (TE 2016) Bud Wright (Ex-Officio) Hill & Knowlton, Inc. Director Executive Director Executive Director OneRail Coalition Transportation California American Association of State The Institute receives oversight from an internationally respected Board of Trustees whose members represent all major surface Honorary Chair, Honorable Bill Highway and Transportation Officials transportation modes. -

Informationen Zu Dem Veranstaltungsort

Informationen zu dem Veranstaltungsort Veranstaltungsort Hannover Congress Centrum (HCC) Theodor-Heuss-Platz 1-3 30175 Hannover Ihr Weg zum Veranstaltungsort: Anreise mit der Bahn: Der Hannover Hauptbahnhof liegt direkt im Zentrum Hannovers. Er verfügt über eine hervorragende Anbindung an das HCC. Öffentliche Verkehrsmittel, Taxen und Mietwagen stehen zur Verfügung. Anfahrt mit öffentlichen Verkehrsmitteln: Das HCC ist direkt an die Haltestelle U-Hannover Congress Centrum (Linie 128 und 134) angebunden. Ab Hauptbahnhof mit der Buslinie 128 oder 134 Richtung Peiner Straße direkt bis zur Station Hannover Congress Centrum. Zeitdauer: ca. 10 Minuten. Ab Kröpcke mit der Stadtbahn Linie 11 (Zoo) bis zur Station Hannover Congress Centrum. Zeitdauer: ca. 10 Minuten. Anreise mit dem Flugzeug: Der Flughafen Hannover verfügt über exzellente nationale, kontinentale und interkontinentale Flugverbindungen. Öffentliche Verkehrsmittel, Taxen und Mietwagen stehen dort zur Verfügung. Anfahrt mit öffentlichen Verkehrsmitteln vom Flughafen Hannover: Vom Flughafen mit der S-Bahn Linie 5 bis zum Hauptbahnhof. Zeitdauer: 25 Minuten. Ab Hauptbahnhof mit der Buslinie 128 oder 134 Richtung Peiner Straße direkt bis zur Station Hannover Congress Centrum. Zeitdauer: 10 Minuten. Anfahrt mit dem PKW: Bitte geben Sie in das Navigationsgerät „Schillstraße“ Hannover ein, da einige Navigationsgeräte den Theodor-Heuss-Platz nicht kennen. Aus Richtung Süden auf der BAB A7 bis Autobahndreieck Hannover-Süd über die A37/ Messeschnellweg bis zur Ausfahrt H.-Kleefeld. Links abbiegen, an der 1. Ampel wieder rechts in die Clausewitzstraße. Parkplatz: Schackstraße oder Parkhaus am HCC. Aus Richtung Norden auf der BAB A7 bis Autobahnkreuz Hannover-Kirchhorst, weiter über die A37/ Messeschnellweg. An der Ausfahrt H.-Kleefeld rechts, 1. Ampel wieder rechts in die Clausewitzstraße. -

Geschäftsbericht 2004 DB AG Als Management-Holding Vertikal Integrierte Konzernstruktur 24,0 Portfolio Fokussiert Auf Kerngeschäft Mrd

Geschäftsbericht 2004 DB AG als Management-Holding Vertikal integrierte Konzernstruktur 24,0 Portfolio fokussiert auf Kerngeschäft Mrd. € Konzernumsatz Exzellentes Rating: Aa1/AA Wirtschaftliche Entwicklung nach HGB S. 46 Konzernlagebericht nach IFRS S. 66 Nr.1 im europäischen Schienenpersonenverkehr 11,2 Nr.1 im europäischen ÖPNV Mrd. € Umsatz im Personenverkehr Nr.1 im Busverkehr in Deutschland Personenverkehr S.128 Nr.1 im europäischen Schienengüterverkehr 11,6 Nr.1 im europäischen Landverkehr Mrd. € Umsatz im Bereich Nr. 3 in der weltweiten Seefracht Transport und Logistik Transport und Logistik S.136 Nr. 5 in der weltweiten Luftfracht Attraktive Bahnhöfe Größtes europäisches Schienennetz 290 Funktionierender Wettbewerb konzernfremde Bahnen nutzen den auf der Schiene offenen Zugang zu unserer Infrastruktur Personenbahnhöfe S.142 Fahrweg S.148 Service für interne 31.500 und externe Kunden Mitarbeiter im Bereich Dienstleistungen Dienstleistungen S.156 Ergänzend zum geprüften IFRS-Abschluss stellen wir Ihnen auch die Entwicklung unter HGB vor. Für die wirtschaftlichen Fortschritte seit Beginn der Bahnreform ist die Entwicklung des EBITDA ein geeigneter Maßstab. Im Jahr 2001 hatten wir für 2004 die Rückkehr zu positiven Betrieblichen Ergebnissen nach Zinsen prognostiziert. Dies wurde in schwierigem Markt- und Wettbewerbsumfeld erreicht. Umsatzerlöse EBITDA vor Betriebliches Ergebnis Brutto-Investitionen in Mio.€ Altlastenerstattungen nach Zinsen in Mio.€ in Mio.€ in Mio.€ 28.228 23.963 3.092 3.509 –172 253 9.121 7.232 25.000 4.000 250 10.000 200 20.000 150 8.000 3.000 100 15.000 6.000 50 2.000 0 10.000 4.000 – 50 1.000 5.000 – 100 2.000 – 150 – 200 2003 2004 2003 2004 2003 2004 2003 2004 2003 zu 2004: 2003 zu 2004: 2003 zu 2004: 2003 zu 2004: –15,1% +13,5% +425 Mio.€ –20,7% Wesentliche Kennzahlen HGB HGB Veränd.