Future Trends Research V6.Pdf

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


1 Music Article from a Homeless Street Musician in Nashville to a Top

Music article: From a homeless street musician in Nashville to a top-selling artist in Sweden - The remarkable story of Doug Seegers (by J. Granstrom). In the previous newsletter, we covered Jill Johnson’s highly celebrated TV show “Jills veranda (Jill’s Porch)”, where she invites Swedish artists to visit her in Nashville to make music and learn more about different aspects of life in the American Deep South. In one of the most popular episodes during the first season of the show in 2014, Johnson and her guest Magnus Carlson - singer of the popular Swedish band “Weeping Willows”, which has collaborated with Oasis member Andy Bell on several occasions - met the 62-year-old homeless street musician Doug Seegers, who was performing on a park bench in Nashville. A street vendor recommended that Johnson and Carlson check out Seegers, and both were immediately impressed by Seegers’s voice. He tells them that he “lives in a place where he doesn’t have to pay rent - under a bridge”, and then brings them out to a church where homeless people are offered free food and clothes. During the visit, Seegers plays his own song “Going Down To The River”, which impressed Johnson and Carlson so much that they returned some time later and offered to record the song with Seegers in Johnny Cash’s old studio in Nashville. Seegers became an overnight sensation in Sweden after the episode was broadcast, and “Going Down To The River” was number 1 on the Swedish iTunes charts for 12 consecutive days. [Photo captions: Seegers with Johnson at the Swedish TV show “Allsång på Skansen”; Seegers with Johnson on the cover of their joint album “In Tandem”]

ENTERTAINMENT Page 17 Technique • Friday, March 24, 2000 • 17

Fu Manchu on the road: The next time you head off down the highway and are looking for some tunes, try King of the Road. Page 19

On shoulders of giants: Oasis returns after some troubling years with a new release and new hope for the future of the band. Page 21

Passion from the mouthpiece all the way to the back row

By Alan Back, Living at a 15-degree tilt

Spend five minutes, or even five seconds, talking with trumpeter Arturo Sandoval and you get an idea of just how dedicated he is to his art. Whether working as a recording artist, composer, professor, or amateur actor, he knows full well where his inspiration comes from and does everything in his power to pass that spark along to anyone who will listen.

... Havana, Cuba, in 1949, Sandoval began studying classical trumpet at the age of 12, and his early experience included a three-year enrollment at the Cuba National ...

... of jazz, classical idioms, and traditional Cuban styles. During a 1977 visit to Cuba, bebop master and Latin music enthusiast Dizzy Gillespie heard the young upstart performing with Irakere and decided to begin mentoring him. In the following years, Sandoval left the group in order to tour with both Gillespie’s United Nation Orchestra and an ensemble of his own. The two formed a friendship that lasted until the older ...

[Pull quote: “I don’t have to ask permission to anybody to do what I have to.” Arturo Sandoval]

“All Politicians Are Crooks and Liars”

Blur EXCLUSIVE Alex James on Cameron, Damon & the next album 2 MAY 2015 Is protest music dead? Noel Gallagher Enter Shikari Savages “All politicians are Matt Bellamy crooks and liars” The Horrors HAVE THEIR SAY The GEORGE W BUSH Prodigy + Speedy Ortiz STILL STARTING FIRES A$AP Rocky Django Django “They misunderestimated me” David Byrne THE PAST, PRESENT & FUTURE OF MUSIC Palma Violets 2 MAY 2015 | £2.50 US$8.50 | ES€3.90 | CN$6.99 BAND LIST NEW MUSICAL EXPRESS | 2 MAY 2015 Anna B Savage 23 Matthew E White 51 A$AP Rocky 10 Mogwai 35 Best Coast 43 Muse 33 REGULARS The Big Moon 22 Naked 23 FEATURES Black Rebel Motorcycle Nicky Blitz 24 Club 17 Noel Gallagher 33 4 Blanck Mass 44 Oasis 13 SOUNDING OFF Blur 36 Paddy Hanna 25 6 26 Breeze 25 Palma Violets 34, 42 ON REPEAT The Prodigy Brian Wilson 43 Patrick Watson 43 Braintree’s baddest give us both The Britanys 24 Passion Pit 43 16 IN THE STUDIO Broadbay 23 Pink Teens 24 Radkey barrels on politics, heritage acts and Caribou 33 The Prodigy 26 the terrible state of modern dance Carl Barât & The Jackals 48 Radkey 16 17 ANATOMY music. Oh, and eco light bulbs… Chastity Belt 45 Refused 6, 13 Coneheads 23 Remi Kabaka 15 David Byrne 12 Ride 21 OF AN ALBUM De La Soul 7 Rihanna 6 Black Rebel Motorcycle Club 32 Protest music Django Django 15, 44 Rolo Tomassi 6 – ‘BRMC’ Drenge 33 Rozi Plain 24 On the eve of the general election, we Du Blonde 35 Run The Jewels 6

Marxman Mary Jane Girls Mary Mary Carolyne Mas

Key - $ = US Number One (1959-date), ✮ UK Million Seller, ➜ Still in Top 75 at this time. A line in red indicates a Number 1, a line in blue indicates a Top 10 hit.

12 Dec 98 Take Me There (Blackstreet & Mya featuring Mase & Blinky Blink) 7 9
10 Jul 99 Get Ready 32 4
20 Nov 04 Welcome Back/Breathe Stretch Shake 29 2
Total Hits : 8 Total Weeks : 45

MARXMAN
Anglo-Irish male rap/vocal/DJ group - Stephen Brown, Hollis Byrne, Oisin Lunny and DJ K One
06 Mar 93 All About Eve 28 4
01 May 93 Ship Ahoy 64 1
Total Hits : 2 Total Weeks : 5

MARY JANE GIRLS
American female vocal group, protégées of Rick James, made up of Cheryl Ann Bailey, Candice Ghant, Joanne McDuffie, Yvette Marine & Kimberley Wuletich although McDuffie was the only singer who appeared on the records
21 May 83 Candy Man 60 4
25 Jun 83 All Night Long 13 9
08 Oct 83 Boys 74 1
18 Feb 95 All Night Long (Remix) 51 1
Total Hits : 4 Total Weeks : 15

MARY MARY
American female vocal duo - sisters Erica (born 29/4/72) & Trecina (born 1/5/74) Atkins-Campbell
10 Jun 00 Shackles (Praise You)

MASH
American male session vocal group - John Bahler, Tom Bahler, Ian Freebairn-Smith and Ron Hicklin
10 May 80 Theme From M*A*S*H (Suicide Is Painless) 1 12
Total Hits : 1 Total Weeks : 12

MASH!
Anglo-American male/female vocal group
21 May 94 U Don't Have To Say U Love Me 37 2
04 Feb 95 Let's Spend The Night Together 66 1
Total Hits : 2 Total Weeks : 3

MASON
Dutch male DJ/producer Iason Chronis, born 17/1/80
27 Jan 07 Perfect (Exceeder) (Mason vs Princess Superstar) 3 16
Total Hits : 1 Total Weeks : 16

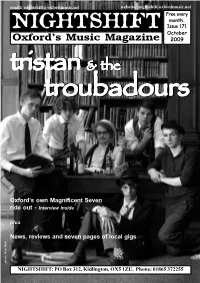

Issue 171.Pmd

NIGHTSHIFT: Oxford’s Music Magazine. Issue 171, October 2009. Free every month. email: [email protected] website: nightshift.oxfordmusic.net

Tristan & the Troubadours: Oxford’s own Magnificent Seven ride out - Interview inside, plus news, reviews and seven pages of local gigs. Photo: Marc West

NIGHTSHIFT: PO Box 312, Kidlington, OX5 1ZU. Phone: 01865 372255

NEWS

THIS MONTH’S OX4 FESTIVAL will feature a special Music Unconvention alongside its other attractions. The mini-convention, featuring a panel of local music people, will discuss, amongst other musical topics, the idea of keeping things local. OX4 takes place on Saturday 10th October at venues the length of Cowley Road, including the O2 Academy, the Bullingdon, East Oxford Community Centre, Baby Simple, Trees Lounge, Café Tarifa, Café Milano, the Brickworks and the Restore Garden Café. The all-day event has been organised by Truck and local gig promoters You! Me! Dancing! Bands already confirmed include hotly-tipped electro-pop outfit The Big Pink, improvisational hardcore collective Action Beat and experimental hip hop outfit Dälek, plus a host of local acts. Catweazle Club and the Oxford Folk Festival will also be hosting acoustic music sessions.

SWERVEDRIVER play their first Oxford gig in over a decade next month. The one-time Oxford favourites, who relocated to London in the early-90s, play at the O2 Academy on Thursday 26th November. The band, who signed to Creation Records shortly after Ride in 1990, split in 1999 but reformed in 2008, still fronted by Adam Franklin and Jimmy ...

Sidestep Programme - Complete 1 - By Title

Title | Composer (Performer) | Year
24K Magic | Bruno Mars, Philip Lawrence, Christopher Brody Brown (Bruno Mars) | 2016
500 Miles (I'm Gonna Be) | The Proclaimers | 1988
After Dark | Steve Huffsteter, Tito Larriva (Tito & Tarantula) | 1987/1996
Água De Beber | Antonio Carlos Jobim, Vinícius de Moraes | 1963
Ain't Misbehavin' | Fats Waller, Harry Brooks, Andy Razaf (Fats Waller, Louis Armstrong, Ella Fitzgerald, et al.) | 1929
Ain't Nobody | David Wolinski "Hawk" (Chaka Khan & Rufus) | 1983
Ain't No Mountain High Enough | N. Ashford, V. Simpson (Marvin Gaye & Tammi Terrell, Diana Ross et al.) | 1967
Ain't No Sunshine | Bill Withers | 1971
Ain't She Sweet | Milton Ager, Jack Yellen | 1927
Alf Theme Song | Alf Clausen | 1986
All Around the World | Lisa Stansfield, Ian Devaney, Andy Morris (Lisa Stansfield) | 1989
All I Have To Do Is Dream | Felice & Boudleaux Bryant (The Everly Brothers) | 1958
All I Need | Nicolas Godin, Jean-Benoît Dunckel, Beth Hirsch (Air) | 1998
All I Want For Christmas Is You | Mariah Carey, Walter Afanasieff (Mariah Carey) | 1994
All My Loving | John Lennon, Paul McCartney (The Beatles) | 1963
All Night Long | Lionel Richie | 1983
All Of Me | Gerald Marks, Seymour Simons | 1931
All Of Me (John) | John Stephens, Tony Gad (John Legend) | 2013
All Shook Up | Otis Blackwell (Elvis Presley) | 1957
All That She Wants | Ace Of Base | 1992
All The Things You Are | Oscar Hammerstein II, Jerome Kern | 1939
All You Need Is Love | John Lennon, Paul McCartney (The Beatles) | 1967
Alright | Jason Kay, Toby Smith, Rob Harris (Jamiroquai) | 1996
Altitude 7000 Russian Standard (Valeri

Towards a Sustainable Ocean Economy

EDITORIAL

Towards a sustainable ocean economy

As the recent analysis of the High Level Panel for a Sustainable Ocean Economy shows, humankind can sustainably produce six times more food from the ocean, generate 40 times more renewable energy, and close around 20 per cent of the carbon gap towards the Paris Agreement’s more ambitious goal of keeping global warming to a maximum of 1.5 °C [1]. However, all these imperatives are ocean-science intensive.

Can the present ocean science rise to the challenge? Not quite yet.

... Implementation Plan [2] calls on us to achieve, by 2030, the seven societal outcomes of the Decade, representing the desired qualities not only of the ocean but also of people. The 10 initial challenges of the Decade are the domains where efforts will be concentrated. They are aimed at addressing pollution, restoring ocean ecosystems, sustainably generating more food and wealth from the ocean, resolving the host of issues in the ocean–climate nexus, reducing the risk of disasters, observing the ocean and representing its state digitally, developing capacity ...

CONTENTS

ANALYSIS 25: A source of nutritious food. Colin Moffat considers current marine food production and the necessary actions for the ocean to continue to provide us with good-quality food.

FEATURE 42: Where warming land meets warming sea. Katharine R. Hendry and Christian März give an overview of the complexities of biogeochemical processes in polar coastal regions.

FEATURE 58: Building resilience to coastal hazards using tide gauges. Angela Hibbert describes the instrumentation that helps to warn of tsunamis and tidal surges.

CASE STUDY 84

Rare Beatles Memorabilia Offered at Christie's

For Immediate Release 31 August 2005 Contact: Rhiannon Bevan-John 020.7752.3120 [email protected] RARE BEATLES MEMORABILIA OFFERED AT CHRISTIE’S LONDON IN SEPTEMBER Rare first working draft of lyrics for I’m Only Sleeping written in John Lennon’s hand (Estimate in excess of £200,000) Pop Memorabilia Wednesday, 28 September 2005 at 2pm South Kensington – Leading Christie's Pop Memorabilia sale on 28 September 2005 is a rare first working draft of the lyrics for “I’m Only Sleeping” penned by John Lennon in 1966 for the groundbreaking Revolver album, which is estimated to fetch in excess of £200,000. This popular sale features over 230 lots of memorabilia relating to pop royalty from The Beatles through to Oasis, with estimates ranging from £200 up to £200,000. The Beatles / John Lennon Given personally by John Lennon to the current owner, the first draft of the lyrics for “I’m Only Sleeping” has remained in a private collection for over 30 years and appears at auction for the first time. The 17 lines were mostly written in blue pen on the reverse of a final demand from the GPO for Lennon’s car phone bill of £12.3.0 dated 25 April 1966. “I’m Only Sleeping” is John’s first song on the groundbreaking 1966 Revolver album. Mark Lewisohn describes Revolver as the album which “shows the Beatles at peak of their creativity…one of those rare albums which is able to retain its original freshness and vitality. Quite simply…a masterpiece.” In a Rolling Stone interview in 1968 with John Cott, John Lennon said of “I’m Only Sleeping” and other of his classics such as “Lucy in the Sky” and “Strawberry Fields” that they were songs “that really meant something to me”.

Monde.20020719.Pdf

www.lemonde.fr - 58th year - No. 17878 - €1.20 - Metropolitan France - Friday 19 July 2002. Founder: Hubert Beuve-Méry - Director: Jean-Marie Colombani

Why capitalism is sick

Enron, WorldCom: our investigation into the mechanics of the financial crisis. Corporate governance, stock options, auditing of accounts: the flaws in the system. Thirteen players from the economic world propose their remedies.

As financial scandals multiply in the United States and the stock markets are destabilised by an unprecedented crisis of confidence, a great deal of thinking is under way on both sides of the Atlantic with a view to achieving better regulation of the economic system. On 9 July the American president, George W. Bush, accordingly announced a first series of measures, notably to improve corporate governance. In France, an employers' working group (Medef and AFEP) led by Daniel Bouton, chairman and chief executive of Société Générale, is expected to publish its reform proposals on the same subject shortly. The investigation carried out by Le Monde shows that eight main questions are at the heart of this debate: corporate governance, accounting standards, stock options, the role of analysts, auditors and agencies ... regulation. To shed light on what is at stake in these matters, we questioned thirteen prominent figures, who share their lines of thought, ... States in France, Serge Weinberg, chairman of the management board of the PPR group, or Gérard Rameix, director general of the COB. ... interviews with business and political leaders to feed this debate, whose stakes concern ...

Series / Major reports: Allende, 1970. Marcel Niedergang recounts Allende's Chile, p. 10

Inisic Wee for Everyone in the Business of Music 23 SEPTEMBER

inisic wee For Everyone in the Business of Music 23 SEPTEMBER 1995 £3.10 Go! Dises plans EP Lyttleton axed by PRS follow-uptoHelp I this week as a follow-up to t ibumumber Help. one War Child char in overwhelming vote Andy Macdonald, managi the job of modernisation tl wingrush therecord suc- Maverick PRS director Trevor theto the PRS élection board whichtwo years put agoLyttleton when heon corne,"The Lyttletonshe says. vote oversl :h debuted at ety'sLyttleton board. bas been thrown off the soci- attracted a record number of votes. The writer/publisher's sacking was long debate in which Lyttleton depicted rack lifted from the album fo Rightdecided Society in a agmvote lastat theThursday, Performing after himselfup the society. as a lone "Do voice we want trying the to PRS clean to ÎP, which is scheduled for rel accusations that Lyttleton had engaged be a society in which directors are performance, membership ai mdm October tracks were 9. Détails still being of the fina ai unmask supposed deficiencies in the gagged?"But PRS he asked.board member Andrew ïs Music Week went to press. organisation. PRS estimâtes Potter said, "It seems to me that PRS chairman WayneBick liscussedOther possible include follow-ups more EPs, l; Lyttleton'sety £100,000. campaign has cost the Soci- with[Lyttleton] the society." wants to fmd things wrong the agm that 1994 was anotl by some of the featured artists resolutionThe meeting to remove voted 62%Lyttleton, in favour which of a andMomentum Music Publishers'Music managing Association director memberhad been Pete tabled Waterman by former and councilwhich chairmancouncil's unanimity Andy Heath and says, my own "Given impres- the raised around £50,000 for the char had the overwhelming support of 6 ofity each by donating copy of Help £2.35 to from War theChild. -

Gallagher Claims Liam Quit Beady Eye by Text Message

Friday, December 21, 2018 - Lifestyle | Gossip - page 24

Liam Gallagher quit Beady Eye by text message, his estranged brother Noel Gallagher has claimed. The ‘Greedy Soul’ singer formed the band with the other remaining members of Oasis - Gem Archer, Andy Bell and Chris Sharrock - after Noel quit the group in August 2009 following a backstage bust-up between the brothers before a headline appearance at a Paris music festival. Beady Eye disbanded in October 2014 after releasing two albums, ‘Different Gear, Still Speeding’ and ‘BE’, and now guitarist Gem and drummer Chris play in Noel’s touring band the High Flying Birds. The ‘Wonderwall’ songwriter has accused Liam of not having the guts to tell his friends he was walking away from the group to their faces or even in a phone call and instead sent them ...

... Man, he didn’t even have the balls to phone his bandmates. Because he got a solo deal, it was, See you later, lads. Then when he got his deal with Live Nation, no one was telling him, ‘Don’t do any Oasis songs, do your new stuff, that’s what you’re good at.’” Once he knew that Beady Eye was finished Noel had no hesitation in welcoming Gem and Chris into his group and the timing was perfect because two of his members had decided to quit the High Flying Birds the same year. The rock legend said: “When I first started [as a solo artist], I had Tim [Smith, guitar] and Jeremy [Stacey, drums], and the great thing about session musicians is that when you’re not sure what you’re doing, they’re bang on it, they make it sound like the record.

Poetry Is More Than News Nathaniel Siegel’S World of Political Engagement and Simple Acts of Love

BOOG CITY: A COMMUNITY NEWSPAPER FROM A GROUP OF ARTISTS AND WRITERS BASED IN AND AROUND NEW YORK CITY’S EAST VILLAGE. ISSUE 32, FREE

ART Laura Cinti. BOOKS Jen Benka, Christopher Rizzo. MUSIC Belowsky, The Poetry of Rock. POETRY Anne Boyer, Jeremy Bushnell, Karen Weiser

Poetry is More Than News: Nathaniel Siegel’s World of Political Engagement and Simple Acts of Love

INTERVIEW BY DEANNA ZANDT

“It is difficult/ to get the news from poems/ yet men die miserably every day/ for lack/ of what is found there.” - William Carlos Williams

I met Nathaniel Siegel in the spring of 2004, right around the first anniversary of the start of the Iraq War. He was organizing poets to march in the name of peace with a contingent of artists, and what first struck me about him were his wide-eyed energy and his utter openness to the world around him. We became fast activist-buddies, but it wasn’t until shortly before the debacle that was Election 2004 that we became comrades in arms.

... or vigil, and it was that energy. You didn’t know what was going to happen. We were more than the police could handle. We took to the streets, and traffic had to be stopped … I remember people stepping off the curb, and then people getting arrested. They ...

... read poems and engage people on the street.

What kind of response do you get?

When you say that, it makes me think of handing out poems in Central Park. We did something called “Poets at The Gates” when Christo had his saffron installation there. We were going to read poems in the park, and I printed out these little love ...

[Pull quote: There’s an absolute and direct connection ...]