Application of Sentence-Level Text Analysis: the Role of Emotion in An

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

An Artificial Neural Network Approach for Sentence Boundary Disambiguation in Urdu Language Text

The International Arab Journal of Information Technology, Vol. 12, No. 4, July 2015, p. 395. An Artificial Neural Network Approach for Sentence Boundary Disambiguation in Urdu Language Text. Shazia Raj, Zobia Rehman, Sonia Rauf, Rehana Siddique, and Waqas Anwar, Department of Computer Science, COMSATS Institute of Information Technology, Pakistan. Abstract: Sentence boundary identification is an important step for text processing tasks, e.g., machine translation, POS tagging, and text summarization. In this paper, we present an approach comprising a Feed Forward Neural Network (FFNN) along with part-of-speech information of the words in a corpus. The proposed adaptive system has been tested after training it with varying sizes of data and threshold values. The best results our system produced are 93.05% precision, 99.53% recall and 96.18% f-measure. Keywords: Sentence boundary identification, feed forward neural network, back propagation learning algorithm. Received April 22, 2013; accepted September 19, 2013; published online August 17, 2014. 1. Introduction. Sentence boundary disambiguation is a problem in natural language processing that decides about the beginning and end of a sentence. Almost all language processing applications need their input text split into sentences for certain reasons. Sentence boundary detection is a difficult task as very often ambiguous punctuation marks are used in the text. In such conditions it would be difficult for a machine to differentiate sentence terminators from ambiguous punctuation. Urdu language processing is in its infancy and application development for it is much slower for a number of reasons. One of these reasons is the lack of an Urdu text corpus, either tagged or untagged. However, -
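
The three figures reported in this abstract are consistent with each other if the f-measure is the balanced harmonic mean of precision and recall. The excerpt does not state which formula the authors used, so that is an assumption here, and the function name `f_measure` is only illustrative. A minimal check in Python:

```python
def f_measure(precision: float, recall: float) -> float:
    """Balanced F-measure: harmonic mean of precision and recall.

    Assumption: the excerpt does not give the formula, so the standard
    F1 definition is used here.
    """
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Precision and recall as reported in the abstract, in percent.
score = f_measure(93.05, 99.53)
print(round(score, 2))  # 96.18
```

Under that assumption the computed value rounds to the 96.18% given in the abstract.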

A Comparison of Knowledge Extraction Tools for the Semantic Web

A Comparison of Knowledge Extraction Tools for the Semantic Web. Aldo Gangemi (1, 2). 1 LIPN, Université Paris 13-CNRS-Sorbonne Cité, France; 2 STLab, ISTC-CNR, Rome, Italy. Abstract. In the last years, basic NLP tasks (NER, WSD, relation extraction, etc.) have been configured for Semantic Web tasks including ontology learning, linked data population, entity resolution, NL querying to linked data, etc. Some assessment of the state of art of existing Knowledge Extraction (KE) tools when applied to the Semantic Web is then desirable. In this paper we describe a landscape analysis of several tools, either conceived specifically for KE on the Semantic Web, or adaptable to it, or even acting as aggregators of extracted data from other tools. Our aim is to assess the currently available capabilities against a rich palette of ontology design constructs, focusing specifically on the actual semantic reusability of KE output. 1 Introduction. We present a landscape analysis of the current tools for Knowledge Extraction from text (KE), when applied on the Semantic Web (SW). Knowledge Extraction from text has become a key semantic technology, and has become key to the Semantic Web as well (see e.g. [31]). Indeed, interest in ontology learning is not new (see e.g. [23], which dates back to 2001, and [10]), and an advanced tool like Text2Onto [11] was set up already in 2005. However, interest in KE was initially limited in the SW community, which preferred to concentrate on manual design of ontologies as a seal of quality. Things started changing after the linked data bootstrapping provided by DBpedia [22], and the consequent need for substantial population of knowledge bases, schema induction from data, natural language access to structured data, and in general all applications that make joint exploitation of structured and unstructured content. -

ACL 2019 Social Media Mining for Health Applications (#SMM4H)

ACL 2019 Social Media Mining for Health Applications (#SMM4H) Workshop & Shared Task. Proceedings of the Fourth Workshop, August 2, 2019, Florence, Italy. © 2019 The Association for Computational Linguistics. Order copies of this and other ACL proceedings from: Association for Computational Linguistics (ACL), 209 N. Eighth Street, Stroudsburg, PA 18360, USA. Tel: +1-570-476-8006, Fax: +1-570-476-0860, [email protected] ISBN 978-1-950737-46-8. Preface. Welcome to the 4th Social Media Mining for Health Applications Workshop and Shared Task - #SMM4H 2019. The total number of users of social media continues to grow worldwide, resulting in the generation of vast amounts of data. Popular social networking sites such as Facebook, Twitter and Instagram dominate this sphere. According to estimates, 500 million tweets and 4.3 billion Facebook messages are posted every day 1. According to the latest Pew Research Report 2, nearly half of adults worldwide and two-thirds of all American adults (65%) use social networking. The report states that of the total users, 26% have discussed health information, and, of those, 30% changed behavior based on this information and 42% discussed current medical conditions. Advances in automated data processing, machine learning and NLP present the possibility of utilizing this massive data source for biomedical and public health applications, if researchers address the methodological challenges unique to this media. In its fourth iteration, the #SMM4H workshop takes place in Florence, Italy, on August 2, 2019, and is co-located with the -

Full-Text Processing: Improving a Practical NLP System Based on Surface Information Within the Context

Full-text processing: improving a practical NLP system based on surface information within the context. Tetsuya Nasukawa, IBM Research, Tokyo Research Laboratory, 1623-14, Shimotsuruma, Yamato-shi, Kanagawa-ken 242, Japan. nasukawa@trl.vnet.ibm.com. Abstract: Rich information for resolving ambiguities in sentence analysis, including various context-dependent problems, can be obtained by analyzing a simple set of parsed trees of each sentence in a text without constructing a precise model of the context through deep semantic analysis. Thus, processing a group of sentences together makes it possible to improve the accuracy of a [...] machine translation [...] text. Without constructing a precise model of the context through deep semantic analysis, our framework refers to a set of parsed trees (results of syntactic analysis) of each sentence in the text as context information. Thus, our context model consists of parsed trees that are obtained by using an existing general syntactic parser. Except for information on the sequence of sentences, our framework does not consider any discourse structure such as the discourse segments, focus space stack, or dominant hierarchy [...] -

Natural Language Processing

Chowdhury, G. (2003) Natural language processing. Annual Review of Information Science and Technology, 37. pp. 51-89. ISSN 0066-4200 http://eprints.cdlr.strath.ac.uk/2611/ This is an author-produced version of a paper published in The Annual Review of Information Science and Technology ISSN 0066-4200 . This version has been peer-reviewed, but does not include the final publisher proof corrections, published layout, or pagination. Strathprints is designed to allow users to access the research output of the University of Strathclyde. Copyright © and Moral Rights for the papers on this site are retained by the individual authors and/or other copyright owners. Users may download and/or print one copy of any article(s) in Strathprints to facilitate their private study or for non-commercial research. You may not engage in further distribution of the material or use it for any profitmaking activities or any commercial gain. You may freely distribute the url (http://eprints.cdlr.strath.ac.uk) of the Strathprints website. Any correspondence concerning this service should be sent to The Strathprints Administrator: [email protected] Natural Language Processing Gobinda G. Chowdhury Dept. of Computer and Information Sciences University of Strathclyde, Glasgow G1 1XH, UK e-mail: [email protected] Introduction Natural Language Processing (NLP) is an area of research and application that explores how computers can be used to understand and manipulate natural language text or speech to do useful things. NLP researchers aim to gather knowledge on how human beings understand and use language so that appropriate tools and techniques can be developed to make computer systems understand and manipulate natural languages to perform the desired tasks. -

What the Neurocognitive Study of Inner Language Reveals About Our Inner Space Hélène Loevenbruck

What the neurocognitive study of inner language reveals about our inner space. Hélène Loevenbruck. To cite this version: Hélène Loevenbruck. What the neurocognitive study of inner language reveals about our inner space. Epistémocritique, épistémocritique : littérature et savoirs, 2018, Langage intérieur - Espaces intérieurs / Inner Speech - Inner Space, 18. hal-02039667. HAL Id: hal-02039667, https://hal.archives-ouvertes.fr/hal-02039667. Submitted on 20 Sep 2019. HAL is a multi-disciplinary open access archive for the deposit and dissemination of scientific research documents, whether they are published or not. The documents may come from teaching and research institutions in France or abroad, or from public or private research centers. Preliminary version produced by the author. In Lœvenbruck H. (2018). Épistémocritique, n° 18 : Langage intérieur - Espaces intérieurs / Inner Speech - Inner Space, Stéphanie Smadja, Pierre-Louis Patoine (eds.) [http://epistemocritique.org/what-the-neurocognitive-study-of-inner-language-reveals-about-our-inner-space/] - hal-02039667. What the neurocognitive study of inner language reveals about our inner space. Hélène Lœvenbruck, Université Grenoble Alpes, CNRS, Laboratoire de Psychologie et NeuroCognition (LPNC), UMR 5105, 38000, Grenoble, France. Abstract: Our inner space is furnished, and sometimes even stuffed, with verbal material. The nature of inner language has long been under the careful scrutiny of scholars, philosophers and writers, through the practice of introspection. The use of recent experimental methods in the field of cognitive neuroscience provides a new window of insight into the format, properties, qualities and mechanisms of inner language. -

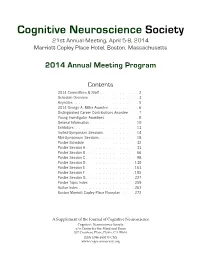

CNS 2014 Program

Cognitive Neuroscience Society 21st Annual Meeting, April 5-8, 2014, Marriott Copley Place Hotel, Boston, Massachusetts. 2014 Annual Meeting Program. Contents: 2014 Committees & Staff; Schedule Overview; Keynotes; 2014 George A. Miller Awardee; Distinguished Career Contributions Awardee; Young Investigator Awardees; General Information; Exhibitors; Invited-Symposium Sessions; Mini-Symposium Sessions; Poster Schedule; Poster Sessions A to G; Poster Topic Index; Author Index; Boston Marriott Copley Place Floorplan. A Supplement of the Journal of Cognitive Neuroscience. Cognitive Neuroscience Society, c/o Center for the Mind and Brain, 267 Cousteau Place, Davis, CA 95616. ISSN 1096-8857. © CNS. www.cogneurosociety.org. 2014 Committees & Staff. Mini-Symposium Committee: David Badre, Ph.D., Brown University (Chair); Adam Aron, Ph.D., University of California, San Diego; Lila Davachi, Ph.D., New York University; Elizabeth Kensinger, Ph.D., Boston College; Gina Kuperberg, Ph.D., Harvard University; Thad Polk, Ph.D., University of Michigan. Governing Board: Roberto Cabeza, Ph.D., Duke University; Marta Kutas, Ph.D., University of California, San Diego; Helen Neville, Ph.D., University of Oregon; Daniel Schacter, Ph.D., Harvard University; Michael S. Gazzaniga, Ph.D., University of California, Santa Barbara (ex officio); George R. Mangun, Ph.D., University of California, -

Entity Extraction: from Unstructured Text to Dbpedia RDF Triples Exner

Entity extraction: From unstructured text to DBpedia RDF triples. Exner, Peter; Nugues, Pierre. Published in: Proceedings of the Web of Linked Entities Workshop in conjunction with the 11th International Semantic Web Conference (ISWC 2012), 2012. Link to publication. Citation for published version (APA): Exner, P., & Nugues, P. (2012). Entity extraction: From unstructured text to DBpedia RDF triples. In Proceedings of the Web of Linked Entities Workshop in conjunction with the 11th International Semantic Web Conference (ISWC 2012) (pp. 58-69). CEUR. http://ceur-ws.org/Vol-906/paper7.pdf Total number of authors: 2. General rights: Unless other specific re-use rights are stated the following general rights apply: Copyright and moral rights for the publications made accessible in the public portal are retained by the authors and/or other copyright owners and it is a condition of accessing publications that users recognise and abide by the legal requirements associated with these rights. • Users may download and print one copy of any publication from the public portal for the purpose of private study or research. • You may not further distribute the material or use it for any profit-making activity or commercial gain. • You may freely distribute the URL identifying the publication in the public portal. Read more about Creative commons licenses: https://creativecommons.org/licenses/ Take down policy: If you believe that this document breaches copyright please contact us providing details, and we will remove access to the work immediately and investigate your claim. LUND UNIVERSITY, PO Box 117, 221 00 Lund, +46 46-222 00 00. Entity Extraction: From Unstructured Text to DBpedia RDF Triples. Peter Exner and Pierre Nugues, Department of Computer Science, Lund University, [email protected] [email protected] Abstract. -

Talking to Computers in Natural Language

Talking to Computers in Natural Language. Natural language understanding is as old as computing itself, but recent advances in machine learning and the rising demand of natural-language interfaces make it a promising time to once again tackle the long-standing challenge. By Percy Liang. DOI: 10.1145/2659831. As you read this sentence, the words on the page are somehow absorbed into your brain and transformed into concepts, which then enter into a rich network of previously-acquired concepts. This process of language understanding has so far been the sole privilege of humans. But the universality of computation, as formalized by Alan Turing in the early 1930s (which states that any computation could be done on a Turing machine), offers a tantalizing possibility that a computer could understand language as well. Later, Turing went on in his seminal 1950 article, “Computing Machinery and Intelligence,” to propose the now-famous Turing test, a bold and speculative method to evaluate whether a computer actually understands language (or more broadly, is “intelligent”). While this test has led to the development of amusing chatbots that attempt to fool human judges by engaging in light-hearted banter, the grand challenge of developing serious programs that can truly understand language in useful ways remains wide [...] artificial intelligence at the time. Daniel Bobrow built a system for his Ph.D. thesis at MIT to solve algebra word problems found in high-school algebra books, for example: "If the number of customers Tom gets is twice the square of 20% of the number of advertisements he runs, and the number of advertisements is 45, then what is the numbers of customers Tom gets?" [1]. [...] Figure 1). SHRDLU could both answer questions and execute actions, for example: "Find a block that is taller than the one you are holding and put it into the box." In this case, SHRDLU would first have to understand that the blue block is the referent and then perform the action by moving the small green block out of the way and then lifting the -
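
The algebra word problem quoted in this excerpt can be worked through directly. The sketch below only translates the sentence into arithmetic by hand; it is not a reconstruction of Bobrow's system, and the function name `customers` is hypothetical.

```python
def customers(advertisements: int) -> float:
    """Hand translation of the quoted word problem (illustrative only)."""
    # "20% of the number of advertisements he runs"
    share = advertisements * 20 / 100
    # "twice the square of" that quantity
    return 2 * share ** 2


# "the number of advertisements is 45"
print(customers(45))  # 162.0
```

With 45 advertisements, 20% is 9, its square is 81, and twice that gives 162 customers.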

Revealing the Language of Thought Brent Silby 1

Revealing the Language of Thought. An e-book by Brent Silby. This paper was produced at the Department of Philosophy, University of Canterbury, New Zealand. Copyright © Brent Silby 2000. Contents: Abstract. Chapter 1: Introduction. Chapter 2: Thinking Sentences (1. Preliminary Thoughts; 2. The Language of Thought Hypothesis; 3. The Map Alternative; 4. Problems with Mentalese). Chapter 3: Installing New Technology: Natural Language and the Mind (1. Introduction; 2. Language... what's it for?; 3. Natural Language as the Language of Thought; 4. What can we make of the evidence?). Chapter 4: The Last Stand... Don't Replace The Old Code Yet (1. The Fight for Mentalese; 2. Pinker's Resistance; 3. Pinker's Continued Resistance; 4. A Concluding Thought about Thought). Chapter 5: A Direction for Future Thought (1. The Review; 2. The Conclusion; 3. Expanding the mind beyond the confines of the biological brain). References / Acknowledgments. Abstract: Language of thought theories fall primarily into two views. The first view sees the language of thought as an innate language known as mentalese, which is hypothesized to operate at a level below conscious awareness while at the same time operating at a higher level than the neural events in the brain. The second view supposes that the language of thought is not innate. Rather, the language of thought is natural language. So, as an English speaker, my language of thought would be English. My goal is to defend the second view. -

Natural Language Processing (NLP) for Requirements Engineering: a Systematic Mapping Study

Natural Language Processing (NLP) for Requirements Engineering: A Systematic Mapping Study Liping Zhao† Department of Computer Science, The University of Manchester, Manchester, United Kingdom, [email protected] Waad Alhoshan Department of Computer Science, The University of Manchester, Manchester, United Kingdom, [email protected] Alessio Ferrari Consiglio Nazionale delle Ricerche, Istituto di Scienza e Tecnologie dell'Informazione "A. Faedo" (CNR-ISTI), Pisa, Italy, [email protected] Keletso J. Letsholo Faculty of Computer Information Science, Higher Colleges of Technology, Abu Dhabi, United Arab Emirates, [email protected] Muideen A. Ajagbe Department of Computer Science, The University of Manchester, Manchester, United Kingdom, [email protected] Erol-Valeriu Chioasca Exgence Ltd., CORE, Manchester, United Kingdom, [email protected] Riza T. Batista-Navarro Department of Computer Science, The University of Manchester, Manchester, United Kingdom, [email protected] † Corresponding author. Context: NLP4RE – Natural language processing (NLP) supported requirements engineering (RE) – is an area of research and development that seeks to apply NLP techniques, tools and resources to a variety of requirements documents or artifacts to support a range of linguistic analysis tasks performed at various RE phases. Such tasks include detecting language issues, identifying key domain concepts and establishing traceability links between requirements. Objective: This article surveys the landscape of NLP4RE research to understand the state of the art and identify open problems. Method: The systematic mapping study approach is used to conduct this survey, which identified 404 relevant primary studies and reviewed them according to five research questions, cutting across five aspects of NLP4RE research, concerning the state of the literature, the state of empirical research, the research focus, the state of the practice, and the NLP technologies used. -

Detecting Politeness in Natural Language by Michael Yeomans, Alejandro Kantor, Dustin Tingley

CONTRIBUTED RESEARCH ARTICLES 489. The politeness Package: Detecting Politeness in Natural Language, by Michael Yeomans, Alejandro Kantor, Dustin Tingley. Abstract: This package provides tools to extract politeness markers in English natural language. It also allows researchers to easily visualize and quantify politeness between groups of documents. This package combines and extends prior research on the linguistic markers of politeness (Brown and Levinson, 1987; Danescu-Niculescu-Mizil et al., 2013; Voigt et al., 2017). We demonstrate two applications for detecting politeness in natural language during consequential social interactions: distributive negotiations and speed dating. Introduction: Politeness is a universal dimension of human communication (Goffman, 1967; Lakoff, 1973; Brown and Levinson, 1987). In practically all settings, a speaker can choose to be more or less polite to their audience, and this can have consequences for the speaker's social goals. Politeness is encoded in a discrete and rich set of linguistic markers that modify the information content of an utterance. Sometimes politeness is an act of commission (for example, saying “please” and “thank you”) and sometimes it is an act of omission (for example, declining to be contradictory). Furthermore, the mapping of politeness markers often varies by culture, by context (work vs. family vs. friends), by a speaker’s characteristics (male vs. female), or by the speaker's goals (buyer vs. seller). Many branches of social science might be interested in how (and when) people express politeness to one another, as one mechanism by which social co-ordination is achieved. The politeness package is designed to make it easier to detect politeness in English natural language, by quantifying relevant characteristics of polite speech, and comparing them to other data about the speaker.
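
To make the idea of counting politeness markers concrete, here is a toy sketch in Python. It is not the politeness package (which is written in R) and does not reproduce its API or feature set. The names `MARKERS` and `count_markers` are hypothetical, and the two patterns cover only the "please" and "thank you" examples mentioned in the excerpt.

```python
import re
from collections import Counter

# Hypothetical marker patterns, limited to the two examples named in the
# excerpt; a real politeness detector uses a far richer feature set.
MARKERS = {
    "please": re.compile(r"\bplease\b", re.IGNORECASE),
    "gratitude": re.compile(r"\bthank(?:s| you)\b", re.IGNORECASE),
}


def count_markers(text: str) -> Counter:
    """Count how often each illustrative marker occurs in the text."""
    return Counter(
        {name: len(pattern.findall(text)) for name, pattern in MARKERS.items()}
    )


counts = count_markers("Please send the file. Thank you, and thanks again!")
print(counts["please"], counts["gratitude"])  # 1 2
```

Counts like these could then be compared across groups of documents, which is the kind of analysis the excerpt describes.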