Making the Invisible Visible: When Supercomputers Simulate Processes


Recommended publications


MeteoSwiss Good to Know: Postdoc on Climate Change and Heat Stress

Federal Department of Home Affairs FDHA, Federal Office of Meteorology and Climatology MeteoSwiss

MeteoSwiss Good to know

The Swiss Federal Office of Meteorology and Climatology MeteoSwiss is the Swiss National Weather Service. We record, monitor and forecast weather and climate in Switzerland and thus make a sustainable contribution to the well-being of the community and to the benefit of industry, science and the environment. The Climate Department carries out statistical analyses of observed and modelled climate data and is responsible for providing the results to users and customers. Within the Climate Prediction team we currently have a job opening for the following post:

Postdoc on climate change and heat stress

Your main task is to calculate potential heat stress for the current and future climate over Europe, which will serve as a basis for assessing the impact of climate change on the health of workers. You derive complex heat indices from climate model output and validate them against observational datasets. You will further investigate the predictability of heat stress several weeks ahead on the basis of long-range weather forecasts. In close collaboration with international partners of the EU H2020 project Heat-Shield, you will set up a prototype system of climate services, including an early warning system. Your work thus contributes substantially to a heat-based risk assessment for different key industries and to estimates of potential productivity losses across Europe. The results will be a central basis for policy making and for planning climate adaptation measures. Your responsibilities will further include publishing results in scientific journals and reports, reporting on and coordinating our contribution to the European project, and presenting results at national and international conferences.

Switzerland Welcomes Foreign Investment and Accords It National Treatment

Executive Summary

Switzerland welcomes foreign investment and accords it national treatment. Foreign investment is not hampered by significant barriers. The Swiss Federal Government adopts a relaxed attitude of benevolent noninterference towards foreign investment, allowing the 26 cantons to set major policy and confining itself to creating and maintaining general conditions favorable to both Swiss and foreign investors. Such factors include economic and political stability, a transparent legal system, reliable and extensive infrastructure, efficient capital markets and an excellent quality of life in general. Many US firms base their European or regional headquarters in Switzerland, drawn to the country's low corporate tax rates, exceptional infrastructure, and productive and multilingual work force.

In 2013, Switzerland was ranked the world's most competitive economy in the World Economic Forum's Global Competitiveness Report. The high ranking reflects the country's sound institutional environment, excellent infrastructure, efficient markets and high levels of technological innovation. Switzerland has a developed infrastructure for scientific research; companies spend generously on R&D; intellectual property protection is generally strong; and the country's public institutions are transparent and stable.

Many of Switzerland's cantons make significant use of fiscal incentives to attract investment to their jurisdictions. Some of the more aggressive cantons have occasionally waived taxes for new firms for up to ten years, but this practice has been criticized by the European Union, which has requested its abolition. Individual income tax rates vary widely across the 26 cantons. Corporate taxes vary depending on the many different tax incentives. Zurich, which is sometimes used as a reference point for corporate location tax calculations, has a rate of around 25%, which includes municipal, cantonal, and federal tax.

Dealing with Inconsistent Weather Warnings: Effects on Warning Quality and Intended Actions

Research Collection, Journal Article

Dealing with inconsistent weather warnings: effects on warning quality and intended actions

Author(s): Weyrich, Philippe; Scolobig, Anna; Patt, Anthony
Publication Date: 2019-10
Permanent Link: https://doi.org/10.3929/ethz-b-000291292
Originally published in: Meteorological Applications 26(4), http://doi.org/10.1002/met.1785
Rights / License: Creative Commons Attribution 4.0 International

Received: 11 July 2018 | Revised: 12 December 2018 | Accepted: 31 January 2019 | Published on: 28 March 2019
DOI: 10.1002/met.1785

RESEARCH ARTICLE

Philippe Weyrich | Anna Scolobig | Anthony Patt

Climate Policy Group, Department of Environmental Systems Science, Swiss Federal Institute of Technology (ETH Zurich), Zurich, Switzerland

Correspondence: Philippe Weyrich, Climate Policy Group, Department of Environmental Systems Science, Swiss Federal Institute of Technology (ETH Zurich), 8092 Zurich, Switzerland

In the past four decades, the private weather forecast sector has developed alongside National Meteorological and Hydrological Services, resulting in additional weather providers. This plurality has led to a critical duplication of public weather warnings. For a specific event, different providers disseminate warnings that are more or less severe, or that are visualized differently, leading to inconsistent information that could affect perceived warning quality and response. So far, research has not studied the influence of inconsistent information from multiple providers. This knowledge gap is addressed here.

List of Participants

WMO Symposium on Impact-Based Forecasting and Warning Services, Met Office, United Kingdom, 2-4 December 2019

LIST OF PARTICIPANTS

| No. | Name | Organisation |
|---|---|---|
| 1 | Abdoulaye Diakhete | National Agency of Civil Aviation and Meteorology |
| 2 | Angelia Guy | National Meteorological Service of Belize |
| 3 | Brian Golding | Met Office Science Fellow - WMO HIWeather WCRP |
| 4 | Byungwoo Jung | Impact based Forecast Team, Korea Meteorological Administration |
| 5 | Carolina Gisele Cerrudo | National Meteorological Service Argentina |
| 6 | Caroline Zastiral | British Red Cross |
| 7 | Catalina Jaime | Red Cross Climate Centre |
| 8 | Chiara Proietti | Directorate for Space, Security and Migration, Disaster Risk Management Unit |
| 9 | Chris Tubbs | Met Office, UK |
| 10 | Christophe Isson | Météo France |
| 11 | Christopher John Noble | Met Service, New Zealand |
| 12 | Dan Beardsley | National Weather Service |
| 13 | Daniel Muller | NOAA/National Weather Service, International Affairs Office |
| 14 | David Rogers | World Bank GFDRR |
| 15 | Dr. Frederiek Sperna Weiland | Deltares |
| 16 | Dr. Xu Tang | Weather & Disaster Risk Reduction Service, WMO |
| 17 | Du Duc Tien | National center for hydro-meteorological forecasting, Viet Nam |
| 18 | Elizabeth May Webster | South African Weather Service |
| 19 | Elizabeth Page | UCAR/COMET |
| 20 | Elliot Jacks | NOAA |
| 21 | Gerald Fleming | Public Weather Service Delivery for WMO |
| 22 | Germund Haugen | Met No |
| 23 | Haleh Kootval | World Bank Group |
| 24 | Helen Bye | Met Office, UK |
| 25 | Helene Correa | Météo-France |
| 26 | Hyo Jin Han | Impact based Forecast Team, Korea Meteorological Administration |
| 27 | Inhwa Ham | Impact based Forecast Team, Korea Meteorological Administration |
| … | … | … |

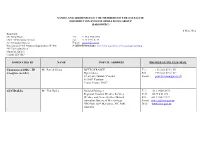

Names and Addresses of the Members of the Satellite Distribution System Operations Group (SADISOPSG)

NAMES AND ADDRESSES OF THE MEMBERS OF THE SATELLITE DISTRIBUTION SYSTEM OPERATIONS GROUP (SADISOPSG), 8 May 2014

Secretary: Mr. Greg Brock, Chief, Meteorology Section, Air Navigation Bureau, International Civil Aviation Organization (ICAO), 999 University Street, Montréal, Québec, Canada H3C 5H7. Tel: +1 514 954 8194; Fax: +1 514 954 6759. SADISOPSG website: http://www.icao.int/safety/meteorology/sadisopsg

| Nominated by | Name | Postal address | Contact |
|---|---|---|---|
| Chairman of DMG - FP (ex-officio member) | Mr. Patrick Simon | METEO FRANCE, Dprévi/Aéro, 42, avenue Gustave Coriolis, F-31057 Toulouse Cedex, France | Tel.: +33 5 61 07 81 50; Fax: +33 5 61 07 81 09 |
| AUSTRALIA | Mr. Tim Hailes, National Manager, Regional Aviation Weather Services | Weather and Ocean Services Branch, Australian Bureau of Meteorology, GPO Box 1289, Melbourne VIC 3001, Australia | Tel.: +61 3 9669 4273; Cell: +0427 840 175; Fax: +61 3 9662 1222; Web: www.bom.gov.au |
| CHINA | Ms. Zou Juan, Engineer | MET Division, Air Traffic Management Bureau, CAAC, 12 East San-huan Road Middle, Chaoyang District, Beijing 100022, China | Tel.: +86 10 87786828; Fax: +86 10 87786820 |
| CHINA | Ms. Lu Xin Ping, Engineer, Advisor | Meteorological Center, North China ATMB, Beijing Capital International Airport, Beijing 100621, China | Tel.: +86 10 64598450 |
| CÔTE D'IVOIRE | Mr. Konan Kouakou, Head of the Meteorological Operations Service | 15 B.P. … | Tel.: +225 21 21 58 90 or +225 05 85 35 13 |

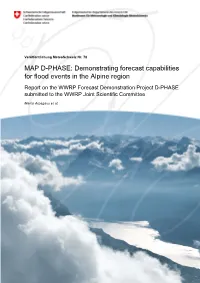

Demonstrating Forecast Capabilities for Flood Events in the Alpine Region

MeteoSwiss Publication No. 78 (Veröffentlichung MeteoSchweiz Nr. 78), ISSN: 1422-1381

MAP D-PHASE: Demonstrating forecast capabilities for flood events in the Alpine region. Report on the WWRP Forecast Demonstration Project D-PHASE submitted to the WWRP Joint Scientific Committee. D-PHASE, a WWRP Forecast Demonstration Project.

Marco Arpagaus (1), Mathias W. Rotach (1), Paolo Ambrosetti (1), Felix Ament (1), Christof Appenzeller (1), Hans-Stefan Bauer (2), Andreas Behrendt (2), François Bouttier (3), Andrea Buzzi (4), Matteo Corazza (5), Silvio Davolio (4), Michael Denhard (6), Manfred Dorninger (7), Lionel Fontannaz (1), Jacqueline Frick (8), Felix Fundel (1), Urs Germann (1), Theresa Gorgas (7), Giovanna Grossi (9), Christoph Hegg (8), Alessandro Hering (1), Simon Jaun (10), Christian Keil (11), Mark A. Liniger (1), Chiara Marsigli (12), Ron McTaggart-Cowan (13), Andrea Montani (12), Ken Mylne (14), Luca Panziera (1), Roberto Ranzi (9), Evelyne Richard (15), Andrea Rossa (16), Daniel Santos-Muñoz (17), Christoph Schär (10), Yann Seity (3), Michael Staudinger (18), Marco Stoll (1), Stephan Vogt (19), Hans Volkert (11), André Walser (1), Yong Wang (18), Johannes Werhahn (20), Volker Wulfmeyer (2), Claudia Wunram (21), and Massimiliano Zappa (8).

(1) Federal Office of Meteorology and Climatology MeteoSwiss, Switzerland; (2) University of Hohenheim, Germany; (3) Météo-France, France; (4) Institute of Atmospheric Sciences …

RUAG Ammotec Leading Ammunition Technology

One target, one round, one choice. RUAG Ammotec.

RUAG Ammotec stands for highly sophisticated ammunition technology. Our professionals develop and manufacture high-performance, small-calibre standard and special ammunition for Hunting & Sports and Defence & Law Enforcement, as well as pyrotechnic elements and components for industry in general. Based on our values of high performance, visionary thinking and collaboration, our company ensures that you can rely fully on RUAG Ammotec and its products in any situation. The mutual trust and satisfaction of our customers are the result of precision work, high quality standards and, last but not least, a personal, honest relationship. More than 2,200 dedicated employees and 180 sales partners make sure that we are on the spot to meet your individual requirements. Together with you, we work out solutions that meet future demands. We look ahead with confidence and are flexible and ready to take new, even unusual approaches. You aim at a target, and we offer you the right ammunition to reach it. In the interest of your success, we hope to be your first choice. We want you to measure us against this daily promise, and we intend to achieve success together with you, our customer, partner or fellow worker. Welcome to RUAG Ammotec.

Christoph M. Eisenhardt, CEO RUAG Ammotec

Who we are: About RUAG Ammotec

Based upon a 150-year heritage of quality and innovation, RUAG Ammotec has become a trusted technology leader and strategic partner in the global ammunition industry.

Climate Services to Support Adaptation and Livelihoods

Climate-Smart Land Use Insight Brief No. 3: Climate services to support adaptation and livelihoods

Key Messages

- Climate services – the translation, tailoring, packaging and communication of climate data to meet users' needs – play a key role in adaptation to climate change. For farmers, they provide vital information about the onset of seasons, temperature and rainfall projections, and extreme weather events, as well as longer-term trends they need to understand to plan and adapt.
- In ASEAN Member States, where agriculture is highly vulnerable to climate change, governments already recognise the importance of climate services. National meteorological and hydrological institutes provide a growing array of data, disseminated online, on broadcast media and via SMS, and through agricultural extension services and innovative programmes such as farmer field schools. Still, there are significant capacity and resource gaps that need to be filled.
- It is not enough to tailor climate services to a specific context; equity and inclusion require paying attention to the differentiated needs of men and women, Indigenous Peoples, ethnic minorities, and other groups. Within a single community, perspectives on climate risks, information needs, preferences for how to receive climate information, and capacities to use it may vary, even just reflecting the different roles that men and women may play in agriculture.
- Delivering high-quality climate services to all who need them is a significant challenge. Given the urgent need to adapt to climate change and to support the most vulnerable populations and sectors, it is crucial to address resource gaps and build capacity in key institutions, so they can continue to improve climate information services.

Annual Report 2009 on the Activity of the Swiss Federal Audit Office

EIDGENÖSSISCHE FINANZKONTROLLE | CONTRÔLE FÉDÉRAL DES FINANCES | CONTROLLO FEDERALE DELLE FINANZE | SWISS FEDERAL AUDIT OFFICE

Editorial

The Swiss Federal Audit Office, a politically neutral specialist authority, is the supreme financial supervisory body of the Confederation. Its aim is to advocate proper and lawful financial conduct by the Administration. In an age of results-oriented public management, it is increasingly committed to improving the effectiveness of government activity through comprehensive audits and analyses. The Swiss Federal Audit Office seeks to locate deficiencies and weaknesses by taking a critical view, not only to achieve temporary improvements but also to fundamentally optimize government action through professional advocacy. Consequently, the Swiss Federal Audit Office focuses on dialogue with those audited in order to ensure that its recommendations are willingly accepted.

… out its duties in a highly dedicated and professional manner. The deficiencies listed in this report are not intended to call this opinion into question. I would like to take this opportunity to thank the Finance Delegation and the Federal Council, which understand the role of the Swiss Federal Audit Office as an independent, critical audit authority. I would also like to express my gratitude to the numerous employees in the audited offices who readily supported the work of the Swiss Federal Audit Office in the interests of the cause at hand. Finally, I would like to thank the employees of the Swiss Federal Audit Office, who go about their demanding work with dedication and motivation in the interests of taxpayers.

RUAG Completes Acquisition of Cyber Security Company Clearswift

Media release

Bern (Switzerland), Theale (UK), 23 January 2017. RUAG Defence has successfully completed the acquisition of the British cyber security company Clearswift announced in December 2016. This acquisition enables RUAG to enhance key aspects of its current product and service portfolio in the area of cyber security. The acquisition of Clearswift increases the number of employees at RUAG's new Cyber Security business unit, established at the beginning of 2017, to over 230 cyber security experts at locations in Switzerland, the UK, Germany, the USA, Australia and Japan.

Clearswift is a global cyber security company with a product portfolio in the areas of data loss prevention and deep content inspection, with more than 2,300 customers in over 70 countries. In 2016 it generated sales exceeding GBP 23 million.

“Clearswift's products for the protection of critical data are the perfect complement to RUAG's network expertise. Clearswift also has a strong research and development department, a global service organisation and established international sales channels”, says Urs Breitmeier, CEO of RUAG. In turn, the merger provides Clearswift with access to RUAG's customer base, local and national authorities, the military and operators of critical infrastructures. “With the new Cyber Security business unit we want to position ourselves as a provider of high-quality specialist solutions and services for the customers worldwide in various sectors”, Urs Breitmeier adds.

The headquarters of the Cyber Security business unit are in Switzerland. The UK site will be the centre of excellence for software business in the combined cyber security business, and the “Clearswift” brand will be retained.

Annual Report 2018 Eidgenössische Finanzkontrolle Contrôle Fédéral Des Finances Controllo Federale Delle Finanze Swiss Federal Audit Office

EIDGENÖSSISCHE FINANZKONTROLLE | CONTRÔLE FÉDÉRAL DES FINANCES | CONTROLLO FEDERALE DELLE FINANZE | SWISS FEDERAL AUDIT OFFICE

2018 ANNUAL REPORT, BERN, MAY 2019

SWISS FEDERAL AUDIT OFFICE, Monbijoustrasse 45, 3003 Bern, Switzerland. T. +41 58 463 11 11, F. +41 58 453 11 00, twitter @EFK_CDF_SFAO, WWW.SFAO.ADMIN.CH

DIRECTOR'S FOREWORD: FROM WHISTLEBLOWING TO PARTICIPATORY AUDITING

In 2008, federal employees were not legally required to report the felonies they encountered to the courts. The experts of GRECO, a Council of Europe anti-corruption body, pointed out this shortcoming at that time in their evaluation report on Switzerland. To remedy it, the Federal Office of Justice, in close cooperation with the Federal Office of Personnel and the Swiss Federal Audit Office (SFAO), introduced the new Article 22a of the Federal Personnel Act on 1 January 2011, together with the obligation to report felonies and misdemeanours prosecuted ex officio. This is when whistleblowing was launched at the federal administrative level.

… form www.whistleblowing.admin.ch. It is now the IT system that ensures the anonymous processing of reports. These reports come from federal employees, but also from third parties who have witnessed irregularities.

For the SFAO, processing this information is not simple. It is necessary to sift through the information and critically verify onsite whether it is plausible. Some reports may actually be intended to harm someone. It is then necessary to identify the appropriate time to initiate possible criminal proceedings and to avoid obstructing them by alerting the perpetrators of an offence. In any case, nothing that could put the whistleblower in …

European Security & Defence

European Security & Defence (ES&D), 10/2019, October 2019. International Security and Defence Journal, ISSN 1617-7983, www.euro-sd.com. Politics · Armed Forces · Procurement · Technology

In this issue: US Army Priorities · The US and NATO · European Combat Helicopter Acquisition · EU Defence Cooperation · Surface-to-Air Missile Developments · New Risks of Digitised Wars · Italy's Fleet Renewal Programme · Light Tactical Vehicles · UGVs for Combat Support · Defence Procurement in Denmark · Taiwan's Defence Market · Manned-Unmanned Teaming · European Mortar Industry

LIFETIME EXCELLENCE. At MTU Aero Engines, we always have your goals in mind. As a reliable partner for military engines, our expertise covers the entire engine lifecycle. And our tailored services guarantee the success of your missions. All systems go! www.mtu.de

Editorial: Juncker's Heritage

The end of October marks the conclusion of the term of office of Jean-Claude Juncker as President of the European Commission. His legacy to his successor Ursula von der Leyen is largely a heap of dust and ashes. Five years ago he came to power with a fanfare for the future. The European Union was to be given a new burst of vitality, become closer to its citizens, at last put an end to its constant preoccupation with itself, and work towards solving the real problems of our times. None of these good intentions have been transformed into reality, not even notionally. Instead, the situation has become worse, a whole lot worse. This is due not least to the fact that the United Kingdom is on the verge of leaving the European Union.