An Ensemble of Deep Learning Models for Weather Nowcasting Based on Radar Products’ Values Prediction

Total Page:16

File Type:pdf, Size:1020Kb

Load more

Recommended publications

-

Meteoswiss Good to Know Postdoc on Climate Change and Heat Stress

Federal Department of Home Affairs FDHA Federal Office of Meteorology and Climatology MeteoSwiss MeteoSwiss Good to know The Swiss Federal Office for Meteorology and Climatology MeteoSwiss is the Swiss National Weather Service. We record, monitor and forecast weather and climate in Switzerland and thus make a sus- tainable contribution to the well-being of the community and to the benefit of industry, science and the environment. The Climate Department carries out statistical analyses of observed and modelled cli- mate data and is responsible for providing the results for users and customers. Within the team Cli- mate Prediction we currently have a job opening for the following post: Postdoc on climate change and heat stress Your main task is to calculate potential heat stress for current and future climate over Europa that will serve as a basis for assessing the impact of climate change on the health of workers. You derive complex heat indices from climate model output and validate them against observational datasets. You will further investigate the predictability of heat stress several weeks ahead on the basis of long- range weather forecasts. In close collaboration with international partners of the EU H2020 project Heat-Shield you will setup a prototype system of climate services, including an early warning system. Your work hence substantially contributes to a heat-based risk assessment for different key industries and potential productivity losses across Europe. The results will be a central basis for policy making and to plan climate adaptation measures. Your responsibilities will further include publishing results in scientific journals and reports, reporting and coordinating our contribution to the European project and presenting results at national and international conferences. -

Dealing with Inconsistent Weather Warnings: Effects on Warning Quality and Intended Actions

Research Collection Journal Article Dealing with inconsistent weather warnings: effects on warning quality and intended actions Author(s): Weyrich, Philippe; Scolobig, Anna; Patt, Anthony Publication Date: 2019-10 Permanent Link: https://doi.org/10.3929/ethz-b-000291292 Originally published in: Meteorological Applications 26(4), http://doi.org/10.1002/met.1785 Rights / License: Creative Commons Attribution 4.0 International This page was generated automatically upon download from the ETH Zurich Research Collection. For more information please consult the Terms of use. ETH Library Received: 11 July 2018 Revised: 12 December 2018 Accepted: 31 January 2019 Published on: 28 March 2019 DOI: 10.1002/met.1785 RESEARCH ARTICLE Dealing with inconsistent weather warnings: effects on warning quality and intended actions Philippe Weyrich | Anna Scolobig | Anthony Patt Climate Policy Group, Department of Environmental Systems Science, Swiss Federal In the past four decades, the private weather forecast sector has been developing Institute of Technology (ETH Zurich), Zurich, next to National Meteorological and Hydrological Services, resulting in additional Switzerland weather providers. This plurality has led to a critical duplication of public weather Correspondence warnings. For a specific event, different providers disseminate warnings that are Philippe Weyrich, Climate Policy Group, Department of Environmental Systems Science, more or less severe, or that are visualized differently, leading to inconsistent infor- Swiss Federal Institute of Technology (ETH mation that could impact perceived warning quality and response. So far, past Zurich), 8092 Zurich, Switzerland. research has not studied the influence of inconsistent information from multiple Email: [email protected] providers. This knowledge gap is addressed here. -

List of Participants

WMO Sypmposium on Impact Based Forecasting and Warning Services Met Office, United Kingdom 2-4 December 2019 LIST OF PARTICIPANTS Name Organisation 1 Abdoulaye Diakhete National Agency of Civil Aviation and Meteorology 2 Angelia Guy National Meteorological Service of Belize 3 Brian Golding Met Office Science Fellow - WMO HIWeather WCRP Impact based Forecast Team, Korea Meteorological 4 Byungwoo Jung Administration 5 Carolina Gisele Cerrudo National Meteorological Service Argentina 6 Caroline Zastiral British Red Cross 7 Catalina Jaime Red Cross Climate Centre Directorate for Space, Security and Migration Chiara Proietti 8 Disaster Risk Management Unit 9 Chris Tubbs Met Office, UK 10 Christophe Isson Météo France 11 Christopher John Noble Met Service, New Zealand 12 Dan Beardsley National Weather Service NOAA/National Weather Service, International Affairs Office 13 Daniel Muller 14 David Rogers World Bank GFDRR 15 Dr. Frederiek Sperna Weiland Deltares 16 Dr. Xu Tang Weather & Disaster Risk Reduction Service, WMO National center for hydro-meteorological forecasting, Viet Nam 17 Du Duc Tien 18 Elizabeth May Webster South African Weather Service 19 Elizabeth Page UCAR/COMET 20 Elliot Jacks NOAA 21 Gerald Fleming Public Weather Service Delivery for WMO 22 Germund Haugen Met No 23 Haleh Kootval World Bank Group 24 Helen Bye Met Office, UK 25 Helene Correa Météo-France Impact based Forecast Team, Korea Meteorological 26 Hyo Jin Han Administration Impact based Forecast Team, Korea Meteorological 27 Inhwa Ham Administration Meteorological Service -

Innovations Across the Grid Partnerships Transforming the Power Sector

VOLUME II INNOVATIONS ACROSS THE GRID PARTNERSHIPS TRANSFORMING THE POWER SECTOR The Edison Foundation INSTITUTE for ELECTRIC INNOVATION The Edison Foundation INSTITUTE for ELECTRIC INNOVATION VOLUME II INNOVATIONS ACROSS THE GRID PARTNERSHIPS TRANSFORMING THE POWER SECTOR December 2014 © 2014 by the Institute for Electric Innovation All rights reserved. Published 2014. Printed in the United States of America. No part of this publication may be reproduced or transmitted in any form or by any means, electronic or mechanical, including photocopying, recording, or any information storage or retrieval system or method, now known or hereinafter invented or adopted, without the express prior written permission of the Institute for Electric Innovation. Attribution Notice and Disclaimer This work was prepared by the Edison Foundation Institute for Electric Innovation (IEI). When used as a reference, attribution to IEI is requested. IEI, any member of IEI, and any person acting on IEI's behalf (a) does not make any warranty, express or implied, with respect to the accuracy, completeness or usefulness of the information, advice, or recommendations contained in this work, and (b) does not assume and expressly disclaims any liability with respect to the use of, or for damages resulting from the use of any information, advice, or recommendations contained in this work. The views and opinions expressed in this work do not necessarily reflect those of IEI or any member of IEI. This material and its production, reproduction, and distribution by IEI does -

Management Console Reference Guide

Secure Web Gateway Management Console Reference Guide Release 10.0 • Manual Version 1.01 M86 SECURITY SETUP AND CONFIGURATION GUIDE © 2010 M86 Security All rights reserved. 828 W. Taft Ave., Orange, CA 92865, USA Version 1.01, published November 2010 for SWG software release 10.0 This document may not, in whole or in part, be copied, photo- copied, reproduced, translated, or reduced to any electronic medium or machine readable form without prior written con- sent from M86 Security. Every effort has been made to ensure the accuracy of this document. However, M86 Security makes no warranties with respect to this documentation and disclaims any implied war- ranties of merchantability and fitness for a particular purpose. M86 Security shall not be liable for any error or for incidental or consequential damages in connection with the furnishing, performance, or use of this manual or the examples herein. Due to future enhancements and modifications of this product, the information described in this documentation is subject to change without notice. Trademarks Other product names mentioned in this manual may be trade- marks or registered trademarks of their respective companies and are the sole property of their respective manufacturers. II M86 SECURITY, Management Console Reference Guide CONTENT INTRODUCTION TO THE SECURE WEB GATEWAY MANAGEMENT CONSOLE .................................................................... 1 WORKING WITH THE MANAGEMENT CONSOLE................ 3 Management Console . 3 Main Menu . 4 Using the Management Console . 6 Management Wizard . 10 User Groups Wizard . 11 Log Entry Wizard . 28 DASHBOARD............................................................... 33 Dashboard Console . 33 Functionality. 34 Device Gauges . 35 Performance Graphs . 38 Messages (SNMP). 40 Device Utilization Graphs. 41 USERS ...................................................................... -

Accurate Weather Forecasting Through Locality Based Collaborative Computing

Accurate Weather Forecasting Through Locality Based Collaborative Computing Bard˚ Fjukstad John Markus Bjørndalen Otto Anshus Department of Computer Science Department of Computer Science Department of Computer Science Faculty of Science and Technology Faculty of Science and Technology Faculty of Science and Technology University of Tromsø, Norway University of Tromsø, Norway University of Tromsø, Norway Email: [email protected] Email: [email protected] and the Norwegian Meteorological Institute Forecasting Division for Northern Norway Email: [email protected] Abstract—The Collaborative Symbiotic Weather Forecasting of collaboration is when users use forecasts from the national (CSWF) system lets a user compute a short time, high-resolution weather services to produce short-term, small area, and higher forecast for a small region around the user, in a few minutes, resolution forecasts. There is no feedback of these forecasts on-demand, on a PC. A collaborated forecast giving better uncertainty estimation is then created using forecasts from other from the users to the weather services. These forecasts take users in the same general region. A collaborated forecast can be minutes to compute on a single multi-core PC. The third is visualized on a range of devices and in a range of styles, typically a symbiotic collaboration where users share on-demand their as a composite of the individual forecasts. CSWF assumes locality locally produced forecasts with each other. between forecasts, regions, and PCs. Forecasts for a region are In a complex terrain like the fjords and mountains of Nor- computed by and stored on PCs located within the region. To locate forecasts, CSWF simply scans specific ports on public IP way, the topography have a significant impact on the weather addresses in the local area. -

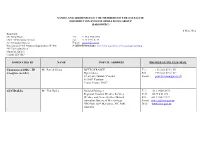

Names and Addresses of the Members of the Satellite Distribution System Operations Group (Sadisopsg)

NAMES AND ADDRESSES OF THE MEMBERS OF THE SATELLITE DISTRIBUTION SYSTEM OPERATIONS GROUP (SADISOPSG) 8 May 2014 Secretary: Mr. Greg Brock Tel: +1 514 954 8194 Chief, Meteorology Section Fax: +1 514 954 6759 Air Navigation Bureau E-mail: [email protected] International Civil Aviation Organization (ICAO) SADISOPSG website: http://www.icao.int/safety/meteorology/sadisopsg 999 University Street Montréal, Québec Canada H3C 5H7 NOMINATED BY NAME POSTAL ADDRESS PHONE/FAX/TELEX/E-MAIL Chairman of DMG - FP Mr. Patrick Simon METEO FRANCE Tel. : +33 5 61 07 81 50 (ex-officio member) Dprévi/Aéro Fax : +33 5 61 07 81 09 42, avenue Gustave Coriolis E-mail : [email protected] F-31057 Toulouse Cedex, France 31057 AUSTRALIA Mr. Tim Hailes National Manager Tel.: +61 3 9669 4273 Regional Aviation Weather Services Cell: +0427 840 175 Weather and Ocean Services Branch Fax: +61 3 9662 1222 Australian Bureau of Meteorology E-mail: [email protected] GPO Box 1289 Melbourne VIC 3001 Web: www.bom.gov.au Australia NOMINATED BY NAME POSTAL ADDRESS PHONE/FAX/TELEX/E-MAIL CHINA Ms. Zou Juan Engineer Tel:: + 86 10 87786828 MET Division Fax: +86 10 87786820 Air Traffic Management Bureau, CAAC E-mail: [email protected] 12 East San-huan Road Middle or [email protected] Chaoyang District, Beijing 100022 China Tel: +86 10 64598450 Ms. Lu Xin Ping Engineer E-mail: [email protected] Advisor Meteorological Center, North China ATMB Beijing Capital International Airport Beijing 100621 China CÔTE D'IVOIRE Mr. Konan Kouakou Chef du Service de l’Exploitation de la Tel.: + 225-21-21-58 90 Meteorologie or + 225 05 85 35 13 15 B.P. -

Demonstrating Forecast Capabilities for Flood Events in the Alpine Region

Veröffentlichung MeteoSchweiz Nr. 78 MAP D-PHASE: Demonstrating forecast capabilities for flood events in the Alpine region Report on the WWRP Forecast Demonstration Project D-PHASE submitted to the WWRP Joint Scientific Committee Marco Arpagaus et al. D-PHASE a WWRP Forecast Demonstration Project Veröffentlichung MeteoSchweiz Nr. 78 ISSN: 1422-1381 MAP D-PHASE: Demonstrating forecast capabilities for flood events in the Alpine region Report on the WWRP Forecast Demonstration Project D-PHASE submitted to the WWRP Joint Scientific Committee Marco Arpagaus1), Mathias W. Rotach1), Paolo Ambrosetti1), Felix Ament1), Christof Appenzeller1), Hans-Stefan Bauer2), Andreas Behrendt2), François Bouttier3), Andrea Buzzi4), Matteo Corazza5), Silvio Davolio4), Michael Denhard6), Manfred Dorninger7), Lionel Fontannaz1), Jacqueline Frick8), Felix Fundel1), Urs Germann1), Theresa Gorgas7), Giovanna Grossi9), Christoph Hegg8), Alessandro Hering1), Simon Jaun10), Christian Keil11), Mark A. Liniger1), Chiara Marsigli12), Ron McTaggart-Cowan13), Andrea Montani12), Ken Mylne14), Luca Panziera1), Roberto Ranzi9), Evelyne Richard15), Andrea Rossa16), Daniel Santos-Muñoz17), Christoph Schär10), Yann Seity3), Michael Staudinger18), Marco Stoll1), Stephan Vogt19), Hans Volkert11), André Walser1), Yong Wang18), Johannes Werhahn20), Volker Wulfmeyer2), Claudia Wunram21), and Massimiliano Zappa8). 1) Federal Office of Meteorology and Climatology MeteoSwiss, Switzerland, 2) University of Hohenheim, Germany, 3) Météo-France, France, 4) Institute of Atmospheric Sciences -

Climate Services to Support Adaptation and Livelihoods

In cooperation with: Published by: Climate-Smart Land Use Insight Brief No. 3 Climate services to support adaptation and livelihoods Key Messages f Climate services – the translation, tailoring, f It is not enough to tailor climate services to a packaging and communication of climate data to specifc context; equity and inclusion require meet users’ needs – play a key role in adaptation paying attention to the differentiated needs of to climate change. For farmers, they provide vital men and women, Indigenous Peoples, ethnic information about the onset of seasons, temperature minorities, and other groups. Within a single and rainfall projections, and extreme weather community, perspectives on climate risks, events, as well as longer-term trends they need to information needs, preferences for how to receive understand to plan and adapt. climate information, and capacities to use it may vary, even just refecting the different roles that f In ASEAN Member States, where agriculture is men and women may play in agriculture. highly vulnerable to climate change, governments already recognise the importance of climate f Delivering high-quality climate services to all services. National meteorological and hydrological who need them is a signifcant challenge. Given institutes provide a growing array of data, the urgent need to adapt to climate change and disseminated online, on broadcast media and via to support the most vulnerable populations and SMS, and through agricultural extension services sectors, it is crucial to address resource gaps and and innovative programmes such as farmer feld build capacity in key institutions, so they can schools. Still, there are signifcant capacity and continue to improve climate information services resource gaps that need to be flled. -

Bankovní Institut Vysoká Škola Praha

Bankovní institut vysoká škola Praha Katedra informačních technologií a elektronického obchodování Moderní servery a technologie Servery a superservery, moderní technologie a využití v praxi Bakalářská práce Autor: Jan Suchý Informační technologie a management Vedoucí práce: Ing. Vladimír Beneš Praha Duben, 2007 Prohlášení: Prohlašuji, ţe jsem bakalářskou práci zpracoval samostatně a s pouţitím uvedené literatury. podpis autora V Praze dne 14.4.2007 Jan Suchý 2 Anotace práce: Obsahem této práce je popis moderní technologie v oblasti procesorového vývoje a jeho nasazení do provozu v oblasti superpočítačových systémů. Vědeckotechnický pokrok je dnes významně urychlován právě specializovanými prototypy superpočítačů určenými pro nejnáročnější úkoly v oblastech jako jsou genetika, jaderná fyzika, termodynamika, farmacie, geologie, meteorologie a mnoho dalších. Tato bakalářská práce se v krátkosti zmiňuje o prvopočátku vzniku mikroprocesoru, víceprocesorové architektury aţ po zajímavé projekty, jako jsou IBM DeepBlue, Deep Thunder nebo fenomenální IBM BlueGene/L, který je v současné době nejvýkonnějším superpočítačem na světě. Trend zvyšování výkonu a důraz na náklady související s provozováním IT, vedl zároveň k poţadavku na efektivnější vyuţívání IT systémů. Technologie virtualizaci a Autonomic Computingu se tak staly fenoménem dnešní doby. 3 Obsah 1 ÚVOD 6 2 PROCESORY 7 2.1 HISTORIE A VÝVOJ 7 2.2 NOVÉ TECHNOLOGIE 8 2.2.1 Silicon Germanium - SiGe (1989) 8 2.2.2 První měděný procesor (1997) 8 2.2.3 Silicon on Insulator - SOI (1998) 9 2.2.4 -

Modeling and Distributed Computing of Snow Transport And

Modeling and distributed computing of snow transport and delivery on meso-scale in a complex orography Modelitzaci´oi computaci´odistribu¨ıda de fen`omens de transport i dip`osit de neu a meso-escala en una orografia complexa Thesis dissertation submitted in fulfillment of the requirements for the degree of Doctor of Philosophy Programa de Doctorat en Societat de la Informaci´oi el Coneixement Alan Ward Koeck Advisor: Dr. Josep Jorba Esteve Distributed, Parallel and Collaborative Systems Research Group (DPCS) Universitat Oberta de Catalunya (UOC) Rambla del Poblenou 156, 08018 Barcelona – Barcelona, 2015 – c Alan Ward Koeck, 2015 Unless otherwise stated, all artwork including digital images, sketches and line drawings are original creations by the author. Permission is granted to copy, distribute and/or modify this document under the terms of the Creative Commons BY-SA License, version 4.0 or ulterior at the choice of the reader/user. At the time of writing, the license code was available at: https://creativecommons.org/licenses/by-sa/4.0/legalcode Es permet la lliure c`opia, distribuci´oi/o modificaci´od’aquest document segons els termes de la Lic`encia Creative Commons BY-SA, versi´o4.0 o posterior, a l’escollida del lector o usuari. En el moment de la redacci´o d’aquest text, es podia accedir al text de la llic`encia a l’adre¸ca: https://creativecommons.org/licenses/by-sa/4.0/legalcode 2 In memoriam Alan Ward, MA Oxon, PhD Dublin 1937-2014 3 4 Acknowledgements A long-term commitment such as this thesis could not prosper on my own merits alone. -

Internet of Things and Big Data Analytics Toward Next-Generation Intelligence Studies in Big Data

Studies in Big Data 30 Nilanjan Dey Aboul Ella Hassanien Chintan Bhatt Amira S. Ashour Suresh Chandra Satapathy Editors Internet of Things and Big Data Analytics Toward Next-Generation Intelligence Studies in Big Data Volume 30 Series editor Janusz Kacprzyk, Polish Academy of Sciences, Warsaw, Poland e-mail: [email protected] About this Series The series “Studies in Big Data” (SBD) publishes new developments and advances in the various areas of Big Data- quickly and with a high quality. The intent is to cover the theory, research, development, and applications of Big Data, as embedded in the fields of engineering, computer science, physics, economics and life sciences. The books of the series refer to the analysis and understanding of large, complex, and/or distributed data sets generated from recent digital sources coming from sensors or other physical instruments as well as simulations, crowd sourcing, social networks or other internet transactions, such as emails or video click streams and other. The series contains monographs, lecture notes and edited volumes in Big Data spanning the areas of computational intelligence incl. neural networks, evolutionary computation, soft computing, fuzzy systems, as well as artificial intelligence, data mining, modern statistics and Operations research, as well as self-organizing systems. Of particular value to both the contributors and the readership are the short publication timeframe and the world-wide distribution, which enable both wide and rapid dissemination of research output. More information about this series at http://www.springer.com/series/11970 Nilanjan Dey • Aboul Ella Hassanien Chintan Bhatt • Amira S. Ashour Suresh Chandra Satapathy Editors Internet of Things and Big Data Analytics Toward Next-Generation Intelligence 123 Editors Nilanjan Dey Amira S.