Massively Parallel Processors

Total Page:16

File Type:pdf, Size:1020Kb


Recommended publications


Historical Perspective and Further Reading

2.21 Historical Perspective and Further Reading
This section surveys the history of instruction set architectures over time, and we give a short history of programming languages and compilers. ISAs include accumulator architectures, general-purpose register architectures, stack architectures, and a brief history of ARMv7 and the x86. We also review the controversial subjects of high-level-language computer architectures and reduced instruction set computer architectures. The history of programming languages includes Fortran, Lisp, Algol, C, Cobol, Pascal, Simula, Smalltalk, C++, and Java, and the history of compilers includes the key milestones and the pioneers who achieved them.
Accumulator Architectures
Hardware was precious in the earliest stored-program computers. Consequently, computer pioneers could not afford the number of registers found in today’s architectures. In fact, these architectures had a single register for arithmetic instructions. Since all operations would accumulate in one register, it was called the accumulator, and this style of instruction set is given the same name. For example, EDSAC in 1949 had a single accumulator.
[Margin note] accumulator: Archaic term for register. On-line use of it as a synonym for “register” is a fairly reliable indication that the user has been around quite a…
The three-operand format of RISC-V suggests that a single register is at least two registers shy of our needs. Having the accumulator as both a source operand and the destination of the operation fills part of the shortfall, but it still leaves us one operand short. That final operand is found in memory. -
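The accumulator style described above can be illustrated with a minimal Python sketch. The opcode names (`LOAD`, `ADD`, `SUB`, `STORE`), the symbolic memory addresses, and the `run_accumulator` helper are assumptions made for illustration; they are not EDSAC's actual instruction set. Each instruction names only one memory operand, because the accumulator is implicitly both the other source and the destination.

```python
def run_accumulator(program, memory):
    """Run (opcode, address) pairs on a hypothetical one-register machine."""
    acc = 0  # the single arithmetic register
    for opcode, address in program:
        if opcode == "LOAD":
            acc = memory[address]        # acc <- memory operand
        elif opcode == "ADD":
            acc = acc + memory[address]  # acc is a source and the destination
        elif opcode == "SUB":
            acc = acc - memory[address]
        elif opcode == "STORE":
            memory[address] = acc        # the result goes back to memory
        else:
            raise ValueError(f"unknown opcode: {opcode}")
    return memory


# a = b + c takes three one-operand instructions here,
# where a three-operand ISA would use a single add.
memory = {"a": 0, "b": 7, "c": 5}
program = [("LOAD", "b"), ("ADD", "c"), ("STORE", "a")]
run_accumulator(program, memory)
```

The trade-off is visible in the program length: the instruction encoding stays small, but every operand other than the accumulator costs a memory access.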

Computer Organization EECC 550 • Introduction: Modern Computer Design Levels, Components, Technology Trends, Register Transfer Notation (RTN)

Computer Organization EECC 550 Week 1 • Introduction: Modern Computer Design Levels, Components, Technology Trends, Register Transfer Notation (RTN). [Chapters 1, 2] • Instruction Set Architecture (ISA) Characteristics and Classifications: CISC Vs. RISC. [Chapter 2] Week 2 • MIPS: An Example RISC ISA. Syntax, Instruction Formats, Addressing Modes, Encoding & Examples. [Chapter 2] • Central Processor Unit (CPU) & Computer System Performance Measures. [Chapter 4] Week 3 • CPU Organization: Datapath & Control Unit Design. [Chapter 5] Week 4 – MIPS Single Cycle Datapath & Control Unit Design. – MIPS Multicycle Datapath and Finite State Machine Control Unit Design. Week 5 • Microprogrammed Control Unit Design. [Chapter 5] – Microprogramming Project Week 6 • Midterm Review and Midterm Exam Week 7 • CPU Pipelining. [Chapter 6] • The Memory Hierarchy: Cache Design & Performance. [Chapter 7] Week 8 • The Memory Hierarchy: Main & Virtual Memory. [Chapter 7] Week 9 • Input/Output Organization & System Performance Evaluation. [Chapter 8] Week 10 • Computer Arithmetic & ALU Design. [Chapter 3] If time permits. Week 11 • Final Exam. EECC550 - Shaaban #1 Lec # 1 Winter 2005 11-29-2005 Computing System History/Trends + Instruction Set Architecture (ISA) Fundamentals • Computing Element Choices: – Computing Element Programmability – Spatial vs. Temporal Computing – Main Processor Types/Applications • General Purpose Processor Generations • The Von Neumann Computer Model • CPU Organization (Design) • Recent Trends in Computer Design/performance • Hierarchy -

Phaser 600 Color Printer User Manual

WELCOME! This is the home page of the Phaser 600 Color Printer User Manual. See Tips on using this guide, or go immediately to the Contents. Phaser® 600 Wide-Format Color Printer
Tips on using this guide
■ Use the navigation buttons in Acrobat Reader to move through the document: [Figure: toolbar buttons numbered 1 through 6] 1 and 4 are the First Page and Last Page buttons. These buttons move to the first or last page of a document. 2 and 3 are the Previous Page and Next Page buttons. These buttons move the document backward or forward one page at a time. 5 and 6 are the Go Back and Go Forward buttons. These buttons let you retrace your steps through a document, moving to each page or view in the order visited.
■ For best results, use the Adobe Acrobat Reader version 2.1 to read this guide. Version 2.1 of the Acrobat Reader is supplied on your printer’s CD-ROM.
■ Click on the page numbers in the Contents on the following pages to open the topics you want to read.
■ You can click on the page numbers following keywords in the Index to open topics of interest.
■ You can also use the Bookmarks provided by the Acrobat Reader to navigate through this guide. If the Bookmarks are not already displayed at the left of the window, select Bookmarks and Page from the View menu.
■ If you have difficulty reading any small type or seeing the details in any of the illustrations, you can use the Acrobat Reader’s Magnification feature. -

RISC-V Geneology

RISC-V Geneology Tony Chen David A. Patterson Electrical Engineering and Computer Sciences University of California at Berkeley Technical Report No. UCB/EECS-2016-6 http://www.eecs.berkeley.edu/Pubs/TechRpts/2016/EECS-2016-6.html January 24, 2016 Copyright © 2016, by the author(s). All rights reserved. Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. To copy otherwise, to republish, to post on servers or to redistribute to lists, requires prior specific permission.
Introduction
RISC-V is an open instruction set designed along RISC principles developed originally at UC Berkeley and is now set to become an open industry standard under the governance of the RISC-V Foundation (www.riscv.org). Since the instruction set architecture (ISA) is unrestricted, organizations can share implementations as well as open source compilers and operating systems. Designed for use in custom systems on a chip, RISC-V consists of a base set of instructions called RV32I along with optional extensions for multiply and divide (RV32M), atomic operations (RV32A), single-precision floating point (RV32F), and double-precision floating point (RV32D). The base and these four extensions are collectively called RV32G. This report discusses the historical precedents of RV32G. We look at 18 prior instruction set architectures, chosen primarily from earlier UC Berkeley RISC architectures and major proprietary RISC instruction sets. Among the 122 instructions in RV32G:
● 6 instructions do not have precedents among the selected instruction sets,
● 98 instructions of the 116 with precedents appear in at least three different instruction sets. -

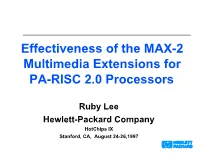

Effectiveness of the MAX-2 Multimedia Extensions for PA-RISC 2.0 Processors

Effectiveness of the MAX-2 Multimedia Extensions for PA-RISC 2.0 Processors Ruby Lee Hewlett-Packard Company HotChips IX Stanford, CA, August 24-26, 1997
Outline: Introduction; PA-RISC MAX-2 features and examples (Mix, Permute; Multiply with Shift&Add; Conditionals with Saturation Arith, e.g., Absolute Values); Performance Comparison with / without MAX-2
General-Purpose Workloads will include Increasing Amounts of Media Processing [Figure: functionality versus time (1980, 1990, 2000); labels: Computation Tool, Communications Tool, Distributed Multimedia Real-time Information Access Tool]
Multimedia Extensions for General-Purpose Processors: MAX-1 for HP PA-RISC (product Jan '94); VIS for Sun Sparc (H2 '95); MAX-2 for HP PA-RISC (product Mar '96); MMX for Intel x86 (chips Jan '97); MDMX for SGI MIPS-V (tbd); MVI for DEC Alpha (tbd)
Ideally, different media streams map onto both the integer and floating-point datapaths of microprocessors [Figure: PA-RISC 2.0 Processor Datapath; labels: images, video, graphics, audio, GR, FP, ALU, SMU, FMAC, Mem]
Subword Parallelism in a General-Purpose Processor with Multimedia Extensions [Figure: General Regs. holding subwords x1 to x8 and y1 to y8 feed two partitionable 64-bit ALUs; 8 ops / cycle]
Subword Parallel MAX-2 Instructions in PA-RISC 2.0: Parallel Add (modulo or saturation); Parallel Subtract (modulo or saturation); Parallel Shift Right (1, 2 or 3 bits) and Add; Parallel Shift Left (1, 2 or 3 bits) and Add; Parallel Average; Parallel Shift Right (n bits); Parallel Shift Left (n bits); Mix; Permute
MAX-2 Leverages Existing Processing Resources FP: INTEGER FLOAT GR: 16x64 General Regs. -
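To make the "Parallel Add (modulo or saturation)" entry concrete, here is a small Python sketch of subword-parallel addition on a 64-bit word. It assumes four 16-bit lanes per word and shows only unsigned saturation; the function names are hypothetical, and the lane-by-lane loop merely simulates what a partitionable ALU would do in a single operation.

```python
LANES = 4                           # assumed: four subwords per 64-bit register
LANE_BITS = 16                      # assumed: 16-bit subwords
LANE_MASK = (1 << LANE_BITS) - 1    # 0xFFFF


def unpack(word):
    """Split a 64-bit word into its lanes, lowest lane first."""
    return [(word >> (LANE_BITS * i)) & LANE_MASK for i in range(LANES)]


def pack(lanes):
    """Combine lanes (lowest first) back into one 64-bit word."""
    word = 0
    for i, lane in enumerate(lanes):
        word |= (lane & LANE_MASK) << (LANE_BITS * i)
    return word


def parallel_add_modulo(x, y):
    """Each lane wraps around on overflow; no carry crosses a lane boundary."""
    return pack([(a + b) & LANE_MASK for a, b in zip(unpack(x), unpack(y))])


def parallel_add_saturate(x, y):
    """Each lane clamps at the largest unsigned value instead of wrapping."""
    return pack([min(a + b, LANE_MASK) for a, b in zip(unpack(x), unpack(y))])


x = pack([0xFFFF, 1, 2, 3])
y = pack([1, 1, 1, 1])
wrapped = unpack(parallel_add_modulo(x, y))     # lane 0 wraps to 0
clamped = unpack(parallel_add_saturate(x, y))   # lane 0 stays at 0xFFFF
```

Saturation matters for media data such as pixel values, where wrapping a bright value around to zero is visibly wrong while clamping is not.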

Design of the RISC-V Instruction Set Architecture

Design of the RISC-V Instruction Set Architecture Andrew Waterman Electrical Engineering and Computer Sciences University of California at Berkeley Technical Report No. UCB/EECS-2016-1 http://www.eecs.berkeley.edu/Pubs/TechRpts/2016/EECS-2016-1.html January 3, 2016 Copyright © 2016, by the author(s). All rights reserved. Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. To copy otherwise, to republish, to post on servers or to redistribute to lists, requires prior specific permission. Design of the RISC-V Instruction Set Architecture by Andrew Shell Waterman A dissertation submitted in partial satisfaction of the requirements for the degree of Doctor of Philosophy in Computer Science in the Graduate Division of the University of California, Berkeley Committee in charge: Professor David Patterson, Chair Professor Krste Asanović Associate Professor Per-Olof Persson Spring 2016 Abstract The hardware-software interface, embodied in the instruction set architecture (ISA), is arguably the most important interface in a computer system. Yet, in contrast to nearly all other interfaces in a modern computer system, all commercially popular ISAs are proprietary. -

Computer Architectures an Overview

Computer Architectures An Overview PDF generated using the open source mwlib toolkit. See http://code.pediapress.com/ for more information. PDF generated at: Sat, 25 Feb 2012 22:35:32 UTC Contents Articles Microarchitecture 1 x86 7 PowerPC 23 IBM POWER 33 MIPS architecture 39 SPARC 57 ARM architecture 65 DEC Alpha 80 AlphaStation 92 AlphaServer 95 Very long instruction word 103 Instruction-level parallelism 107 Explicitly parallel instruction computing 108 References Article Sources and Contributors 111 Image Sources, Licenses and Contributors 113 Article Licenses License 114
Microarchitecture
In computer engineering, microarchitecture (sometimes abbreviated to µarch or uarch), also called computer organization, is the way a given instruction set architecture (ISA) is implemented on a processor. A given ISA may be implemented with different microarchitectures.[1] Implementations might vary due to different goals of a given design or due to shifts in technology.[2] Computer architecture is the combination of microarchitecture and instruction set design.
Relation to instruction set architecture
[Figure: Intel Core microarchitecture]
The ISA is roughly the same as the programming model of a processor as seen by an assembly language programmer or compiler writer. The ISA includes the execution model, processor registers, address and data formats among other things. The microarchitecture includes the constituent parts of the processor and how these interconnect and interoperate to implement the ISA. The microarchitecture of a machine is usually represented as (more or less detailed) diagrams that describe the interconnections of the various microarchitectural elements of the machine, which may be everything from single gates and registers, to complete arithmetic logic units (ALUs) and even larger elements. -

Pre-Award Documentation for Solicitation No. RS-AED

SCIENTECH Cost Proposal To United States Nuclear Regulatory Commission For Revised Request for Proposal (RFP) No. RS-AED-00-300 Emergency Response Data System (ERDS) Maintenance 2000 - 2003, Plus Two Optional Years Revision 0 SCIENTECH Proposal Number 99000-0001-055 January 3, 2000 This proposal or quotation includes data that shall not be disclosed outside the U.S. Nuclear Regulatory Commission or the Government and shall not be duplicated, used, or disclosed, in whole or in part, for any purpose other than to evaluate this proposal or quotation. If, however, a contract is awarded to this Offeror or quoter as a result of, or in connection with, the submission of this data, the NRC and the Government shall have the right to duplicate, use, or disclose the data to the extent provided in the resulting contract. This restriction does not limit the NRC's or the Government's right to use information contained in this data if it is obtained from another source without restriction. The data subject to this restriction are contained in all sheets. SCIENTECH Inc., Proprietary and Confidential ADM02 Template=ADM-008 ERDS Maintenance, Rev. 0
TABLE OF CONTENTS
1. INTRODUCTION (page 1)
2. SF 1449 SOLICITATION/CONTRACT/ORDER FOR COMMERCIAL ITEMS (page 2)
3. COST ESTIMATE BY CLIN (page 6)
4. ORGANIZATIONAL CONFLICT OF INTEREST (page 7)
5. OFFEROR REPRESENTATIONS AND CERTIFICATIONS - COMMERCIAL ITEMS (page 9)
Attachment A SF 1449
Attachment B Offeror Representations and Certifications
Attachment C SF 3881
1. Introduction SCIENTECH, Inc., is pleased to submit this Cost Proposal in response to U. -

The Symbolics Virtual Lisp Machine or Using the DEC Alpha as a Programmable Micro-Engine

THE SYMBOLICS VIRTUAL LISP MACHINE OR USING THE DEC ALPHA AS A PROGRAMMABLE MICRO-ENGINE [1] (EXTENDED ABSTRACT) P.T. Withington, Scott McKay, Gary Palter [2] Symbolics Inc. 6 Concord Farms Concord, MA 01742-2727, U.S.A. [email protected] 1+ 508 287 1000 Paul Robertson [2] Dynamic Object Language Group 2 Parsonage Hill Haverhill, MA 01832, U.S.A.
INTRODUCTION
Symbolics’ Genera system represents the accumulation of nearly three and a half decades of software engineering tools, written in several dialects of the Lisp language and several object-oriented extensions to Lisp. Traditionally, Genera has run on a “Lisp Machine”, a line of custom hardware workstations designed specifically to execute the Lisp language. Because of the limited market, this custom hardware has never been able to take advantage of cutting-edge technology such as is available in commodity, RISC-based workstations. At the same time, non-standard hardwares such as the Lisp Machine have fallen into economic disfavor. Nonetheless, Lisp’s (and the Lisp Machine’s) capability in prototyping, evolutionary problem solving, and super-complex problems has retained a small but dedicated market.
In response to market pressure to provide Genera’s capabilities on commodity hardware, Symbolics chose to implement an “emulator” that would execute the Lisp Machine instruction set on a standard platform, rather than to port the -
[1] Symbolics® and Genera® are registered trademarks and Virtual Lisp Machine™ and VLM™ are trademarks of Symbolics, Inc. DEC® is a registered trademark and Alpha™ and AXP™ are trademarks of Digital Equipment Corporation.
[2] Author’s electronic mail addresses are ptw@Riverside.SCRC.Symbolics.COM, [email protected].

Weak Memory Models with Matching Axiomatic and Operational Definitions

Weak Memory Models with Matching Axiomatic and Operational Definitions Sizhuo Zhang¹, Muralidaran Vijayaraghavan¹, Dan Lustig², Arvind¹ (¹MIT CSAIL, ²NVIDIA) {szzhang, vmurali, arvind}@csail.mit.edu [email protected]
Abstract
Memory consistency models are notorious for being difficult to define precisely, to reason about, and to verify. More than a decade of effort has gone into nailing down the definitions of the ARM and IBM Power memory models, and yet there still remain aspects of those models which (perhaps surprisingly) remain unresolved to this day. In response to these complexities, there has been somewhat of a recent trend in the (general-purpose) architecture community to limit new memory models to being (multicopy) atomic: where store values can be read by the issuing processor before being advertised to other processors. TSO is the most notable example, used in the past by IBM 370 and SPARC-TSO, and currently used in x86. Recently (in March 2017) ARM has also switched to a multicopy atomic memory model, and the new RISC-V ISA and recent academic proposals such as WMM are pushing to do the same. In this paper, we show that when memory models are atomic, it becomes much easier to produce axiomatic definitions, operational definitions, and proofs of equivalence than doing the same under non-atomic models. The increased ease with which these definitions can be produced in turn allows architects to build processors much more confidently, and yet the relaxed nature of the models we propose still allows most or all of the performance of non-atomic models to be retained. -
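The phrase "store values can be read by the issuing processor before being advertised to other processors" is often pictured as a private FIFO store buffer per processor, as in TSO. The Python sketch below is a toy model under that assumption; the `TsoProcessor` class and its methods are hypothetical names, not the paper's formal operational definition, and `drain` publishes the whole buffer at once for brevity.

```python
class TsoProcessor:
    """Toy processor with a private FIFO store buffer over shared memory."""

    def __init__(self, memory):
        self.memory = memory      # shared dict: address -> value
        self.store_buffer = []    # pending (address, value) pairs, oldest first

    def store(self, address, value):
        # The store is visible only to this processor until drained.
        self.store_buffer.append((address, value))

    def load(self, address):
        # Forward from the youngest matching buffered store, if any.
        for buffered_address, value in reversed(self.store_buffer):
            if buffered_address == address:
                return value
        return self.memory[address]

    def drain(self):
        # Publish buffered stores to shared memory in program order.
        for address, value in self.store_buffer:
            self.memory[address] = value
        self.store_buffer.clear()


# Store-buffering litmus test: both processors store, then load the other's
# location before either buffer drains.
shared = {"x": 0, "y": 0}
p0, p1 = TsoProcessor(shared), TsoProcessor(shared)
p0.store("x", 1)
p1.store("y", 1)
own = p0.load("x")   # p0 sees its own store early
r0 = p0.load("y")    # p1's store is not yet visible to p0
r1 = p1.load("x")    # p0's store is not yet visible to p1
p0.drain()
p1.drain()
```

In this model a store becomes visible to every other processor at the same instant it reaches shared memory, which is the multicopy-atomic behavior the abstract refers to; the outcome `r0 == 0 and r1 == 0` is nonetheless allowed, which sequential consistency would forbid.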

E Cient Software-Based Fault Isolation Abstract 1 Introduction

Efficient Software-Based Fault Isolation Robert Wahbe, Steven Lucco, Thomas E. Anderson, Susan L. Graham Computer Science Division University of California Berkeley, CA
Abstract
One way to provide fault isolation among cooperating software modules is to place each in its own address space. However, for tightly-coupled modules, this solution incurs prohibitive context switch overhead. In this paper, we present a software approach to implementing fault isolation within a single address space. Our approach has two parts. First, we load the code and data for a distrusted module into its own fault domain, a logically separate portion of the application's address space. Second, we modify the object code of a distrusted module to prevent it from writing or jumping to an address outside its fault domain. Both these software operations are portable and programming lan…
1 Introduction
Application programs often achieve extensibility by incorporating independently developed software modules. However, faults in extension code can render a software system unreliable, or even dangerous, since such faults could corrupt permanent data. To increase the reliability of these applications, an operating system can provide services that prevent faults in distrusted modules from corrupting application data. Such fault isolation services also facilitate software development by helping to identify sources of system failure. For example, the postgres database manager includes an extensible type system [Sto]. Using this facility, postgres queries can refer to general-purp -
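The second part of the approach, preventing writes or jumps outside a fault domain, can be sketched with simple bit masking (a technique usually called address sandboxing). The Python sketch below is illustrative only: the 32-bit address width, the 8-bit segment identifier in the upper bits, and the names `sandbox` and `sandboxed_store` are assumptions, and a real system would insert the equivalent machine instructions before each unsafe store or jump in the object code.

```python
ADDRESS_BITS = 32                        # assumed address width
OFFSET_BITS = 24                         # assumed: low bits index within a domain
OFFSET_MASK = (1 << OFFSET_BITS) - 1
SEGMENT_MAX = (1 << (ADDRESS_BITS - OFFSET_BITS)) - 1


def sandbox(address, segment_id):
    """Force an address into the fault domain identified by segment_id.

    The upper bits are overwritten rather than checked, so an out-of-domain
    address is silently redirected inside the domain instead of trapping.
    """
    if not 0 <= segment_id <= SEGMENT_MAX:
        raise ValueError("segment_id does not fit in the upper address bits")
    return (segment_id << OFFSET_BITS) | (address & OFFSET_MASK)


def sandboxed_store(memory, address, value, segment_id):
    """Perform a store whose target is sandboxed first."""
    memory[sandbox(address, segment_id)] = value


memory = {}
sandboxed_store(memory, 0x34000010, 1, segment_id=0x34)   # already in domain
sandboxed_store(memory, 0x12ABCDEF, 2, segment_id=0x34)   # redirected into domain
```

Overwriting the segment bits costs only a mask and an or per protected access, but it does not report the fault; checking the bits and trapping instead would pinpoint the offending instruction at the price of extra work per access.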

A Method for Comparative Analysis of Trusted Execution Environments

Portland State University PDXScholar Dissertations and Theses 6-8-2021 A Method for Comparative Analysis of Trusted Execution Environments Stephano Cetola Portland State University Follow this and additional works at: https://pdxscholar.library.pdx.edu/open_access_etds Part of the Electrical and Computer Engineering Commons Let us know how access to this document benefits you. Recommended Citation Cetola, Stephano, "A Method for Comparative Analysis of Trusted Execution Environments" (2021). Dissertations and Theses. Paper 5720. https://doi.org/10.15760/etd.7593 This Thesis is brought to you for free and open access. It has been accepted for inclusion in Dissertations and Theses by an authorized administrator of PDXScholar. Please contact us if we can make this document more accessible: [email protected]. A Method for Comparative Analysis of Trusted Execution Environments by Stephano Cetola A thesis submitted in partial fulfillment of the requirements for the degree of Master of Science in Electrical and Computer Engineering Thesis Committee: John M. Acken, Chair Roy Kravitz Tom Schubert Portland State University 2021 © 2021 Stephano Cetola This work is licensed under a Creative Commons “Attribution 4.0 International” license.
Abstract
The problem of secure remote computation has become a serious concern of hardware manufacturers and software developers alike. Trusted Execution Environments (TEEs) are a solution to the problem of secure remote computation in applications ranging from “chip and pin” financial transactions [40] to intellectual property protection in modern gaming systems [17]. While extensive literature has been published about many of these technologies, there exists no current model for comparing TEEs.