Effectiveness of Social Media Analytics on Detecting Service Quality Metrics in the U.S

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Automated Flight Statistics Report For

DENVER INTERNATIONAL AIRPORT TOTAL OPERATIONS AND TRAFFIC March 2014

| Category | March 2014 | March 2013 | Incr./Decr. | % Incr./Decr. | % of Grand Total 2014 | YTD 2014 | YTD 2013 | Incr./Decr. | % Incr./Decr. | % of Grand Total 2014 |
|---|---|---|---|---|---|---|---|---|---|---|
| OPERATIONS (1) | | | | | | | | | | |
| Air Carrier | 36,129 | 35,883 | 246 | 0.7% | 74.2% | 99,808 | 101,345 | (1,537) | -1.5% | 73.5% |
| Air Taxi | 12,187 | 13,754 | (1,567) | -11.4% | 25.0% | 34,884 | 38,400 | (3,516) | -9.2% | 25.7% |
| General Aviation | 340 | 318 | 22 | 6.9% | 0.7% | 997 | 993 | 4 | 0.4% | 0.7% |
| Military | 15 | 1 | 14 | 1400.0% | 0.0% | 18 | 23 | (5) | -21.7% | 0.0% |
| TOTAL | 48,671 | 49,956 | (1,285) | -2.6% | 100.0% | 135,707 | 140,761 | (5,054) | -3.6% | 100.0% |
| PASSENGERS (2) | | | | | | | | | | |
| International (3): Inbound | 68,615 | 58,114 | 10,501 | 18.1% | | 176,572 | 144,140 | 32,432 | 22.5% | |
| International (3): Outbound | 70,381 | 56,433 | 13,948 | 24.7% | | 174,705 | 137,789 | 36,916 | 26.8% | |
| International (3): TOTAL | 138,996 | 114,547 | 24,449 | 21.3% | 3.1% | 351,277 | 281,929 | 69,348 | 24.6% | 2.8% |
| International/Pre-cleared: Inbound | 42,848 | 36,668 | 6,180 | 16.9% | | 121,892 | 102,711 | 19,181 | 18.7% | |
| International/Pre-cleared: Outbound | 48,016 | 39,505 | 8,511 | 21.5% | | 132,548 | 108,136 | 24,412 | 22.6% | |
| International/Pre-cleared: TOTAL | 90,864 | 76,173 | 14,691 | 19.3% | 2.0% | 254,440 | 210,847 | 43,593 | 20.7% | 2.1% |
| Majors (4): Inbound | 1,698,200 | 1,685,003 | 13,197 | 0.8% | | 4,675,948 | 4,662,021 | 13,927 | 0.3% | |
| Majors (4): Outbound | 1,743,844 | 1,713,061 | 30,783 | 1.8% | | 4,724,572 | 4,700,122 | 24,450 | 0.5% | |
| Majors (4): TOTAL | 3,442,044 | 3,398,064 | 43,980 | 1.3% | 75.7% | 9,400,520 | 9,362,143 | 38,377 | 0.4% | 75.9% |
| National (5): Inbound | 50,888 | 52,095 | (1,207) | -2.3% | | 139,237 | 127,899 | 11,338 | 8.9% | |
| National (5): Outbound | 52,409 | 52,888 | (479) | -0.9% | | 139,959 | 127,940 | 12,019 | 9.4% | |
| National (5): TOTAL | 103,297 | 104,983 | (1,686) | -1.6% | 2.3% | 279,196 | 255,839 | 23,357 | 9.1% | 2.3% |
| Regionals (6): Inbound | 382,759 | 380,328 | 2,431 | 0.6% | | 1,046,306 | 1,028,865 | 17,441 | 1.7% | |
| Regionals (6): Outbound | | | | | | | | | | |

-

Impact of US Low Cost Carriers Entrance to the Airline Market in Colombia, South America

International Journal of Aviation, Aeronautics, and Aerospace Volume 4 Issue 1 Article 1 1-1-2017 Impact of US Low Cost Carriers Entrance to the Airline Market in Colombia, South America Carolina Anderson Embry-Riddle Aeronautical University – Daytona Beach, [email protected] Tamilla Curtis Embry Riddle Aeronautical University, [email protected] Robert F. Moss ERAU, [email protected] MaryJo O. Smith Ypsilon Associates, [email protected] Gerson Mey [email protected] Follow this and additional works at: https://commons.erau.edu/ijaaa Part of the Business Commons, and the Management and Operations Commons Scholarly Commons Citation Anderson, C., Curtis, T., Moss, R. F., Smith, M. O., & Mey, G. (2017). Impact of US Low Cost Carriers Entrance to the Airline Market in Colombia, South America. International Journal of Aviation, Aeronautics, and Aerospace, 4(1). https://doi.org/10.15394/ijaaa.2017.1133 This Article is brought to you for free and open access by the Journals at Scholarly Commons. It has been accepted for inclusion in International Journal of Aviation, Aeronautics, and Aerospace by an authorized administrator of Scholarly Commons. For more information, please contact [email protected]. Following a political and economic crisis in the late 1990s, many Colombian citizens decided to leave the country in search of opportunities, economic stability and most importantly, a secure place to raise a family. Most Colombian immigrants live in Florida, particularly in the Miami and the Orlando metropolitan areas. With the increase in the Colombian population in the US, and the improvement of the economic and political situation between the two countries, US LCCs such as Spirit Airlines, Inc. -

November 2017 Newsletter

Pilots For Kids Organization: PROUDLY CELEBRATING 34 YEARS! Pilots For Kids℠ Organization, Helping Hospitalized Children Since 1983. Newsletter, November 2017. Want to join in this year’s holiday visits? See pages 8-9 to contact the coordinator in your area! PFK volunteers have been visiting youngsters at Texas Children’s Hospital for 23 years. Thirteen volunteers representing United, Delta and Jet Blue joined together and had another very successful visit on June 13th. Sign up for holiday visits in your area by contacting your coordinator! “100% of our donations go to the kids” visit us at: pilotsforkids.org CITY: LAX/Los Angeles, CA COORDINATOR: Vasco Rodriques PARTICIPANTS: Alaska Airlines The volunteers from the LAX Alaska Airlines Pilots for Kids Chapter visited with 400 kids at the Miller Children’s Hospital in Long Beach. This was during their 2-day “Beach Carnival Day”. The crews made and flew paper airplanes with the kids. When the kids landed their creations on “Runway 25L”, they got rewarded with some cool wings! President’s Corner... Dear Members, Progress is a word everyone likes. The definition of progress can be described as growth, development, or some form of improvement. During the last year we experienced continual growth in membership and also added more locations where our visits take place. Another sign of our growth has been our need to add a second “Captain Baldy” mascot due to his popularity. Along with growth comes workload. To solve this challenge we have continually looked for ways to reduce our workload and cost through increased automation. -

November 2015 Newsletter

Pilots For Kids Organization: PROUDLY CELEBRATING 32 YEARS! Pilots For Kids℠ Organization, Helping Hospitalized Children Since 1983. Newsletter, November 2015. Want to join in this year’s holiday visits? See pages 8-9 to contact the coordinator in your area! PFK volunteers from ORF made their first visit to the Children’s Hospital of the King’s Daughters (CHKD). This group from Delta/VFC-12 and UAL enjoyed their inaugural visit in October and volunteers plan more visits through the holiday season. “100% of our donations go to the kids” visit us at: pilotsforkids.org President’s Corner... Dear Members, According to Webster’s Dictionary, the definition of fortunate is “bringing some good not foreseen.” Considering that definition, our organization is indeed fortunate on many levels. We are fortunate to have members who passionately support our vision, financially support our work, and volunteer their valuable time to benefit hospitalized children. Because of this good fortune, we stand out among many creditable charitable organizations. More Success for MCO Golf: Captain Baldy was joined by an enthusiastic group of golfers at Rio Pinar Country Club in Orlando on Saturday, October 24th. The golf event was followed by lunch and a silent auction that raised additional funds for Orlando area children. Special thanks to all of the businesses who donated to make the auction a huge success. The group of generous donors included the Orlando Magic, JetBlue, Flight Safety, SeaWorld/Aquatica, i-FLY, Embassy Suites, Hyatt Regency, Wingate, Doubletree, Renaissance, Sonesta Suites, LaQuinta, the -

Laguardia Airport

Aviation Department Traffic Statistics: D.Wilson, J. Cuneo THE PORT AUTHORITY OF NY & NJ JUNE 2007 TRAFFIC REPORT Current month, 12 months ending, year-to-date totals. Showing percentage change from prior year period.

| LGA | Month: Current | Month: % | Year-to-date: Current | Year-to-date: % | 12 Months Ending: Current | 12 Months Ending: % |
|---|---|---|---|---|---|---|
| PASSENGERS | | | | | | |
| Domestic | 2,094,634 | -5.1 | 11,718,462 | -4.2 | 23,986,727 | -2.1 |
| International | 109,583 | -5.8 | 589,898 | -6.6 | 1,272,111 | -6.2 |
| Total Revenue Passengers | 2,204,217 | -5.1 | 12,308,360 | -4.3 | 25,258,838 | -2.3 |
| Non Revenue Passengers | 64,627 | -7.7 | 341,790 | -10.8 | 718,978 | -10.9 |
| Note: Commuter - Regional Pax incl. in above | 463,366 | 0.7 | 2,500,200 | 4.6 | 5,085,284 | 7.0 |
| FLIGHTS | | | | | | |
| Domestic | 28,253 | -5.9 | 177,628 | -1.6 | 360,527 | -0.6 |
| International | 1,768 | -6.5 | 10,442 | -4.5 | 21,483 | -3.7 |
| General Aviation | 1,208 | 2.0 | 7,322 | -1.0 | 14,318 | -3.1 |
| Total | 31,229 | -5.7 | 195,392 | -1.8 | 396,328 | -0.8 |
| Note: Commuter - Regional Flights incl. in above | 14,256 | -3.4 | 91,055 | 3.0 | 184,743 | 4.2 |
| FREIGHT (in short tons) | | | | | | |
| Domestic | 709 | -46.0 | 4,948 | -36.0 | 10,966 | -31.6 |
| International | 16 | -20.0 | 114 | -13.0 | 229 | -29.3 |
| Total | 725 | -45.6 | 5,062 | -35.6 | 11,195 | -31.6 |
| MAIL (in short tons) | | | | | | |
| Total | 137 | -79.5 | 1,020 | -78.7 | 1,956 | -79.0 |
| Ground Transportation | | | | | | |
| Paid Parked Cars | 168,554 | -10.5 | 937,956 | -11.7 | 1,967,853 | -9.4 |
| Ground Transpo. Counter Passengers | 11,405 | -19.1 | 65,725 | -22.5 | 132,761 | -13.4 |
| Airport Coach Passengers | 37,000 | -31.1 | 186,174 | -10.5 | 390,968 | -6.6 |
| Taxis Dispatched | 324,894 | -2.0 | 1,898,376 | 2.3 | 3,769,281 | -0.2 |
| Air Transport Association Carriers (USA) | | | | | | |
| Passengers: Domestic Enplaned (000) | 44,217 | 2.3 | 243,391 | 1.2 | 486,615 | 1.5 |
| Passengers: International Enplaned (000) | 6,384 | 2.3 | | | | |

-

The Unfriendly Skies

The Unfriendly Skies Five Years of Airline Passenger Complaints to the Department of Transportation Laura Murray U.S. PIRG Education Fund April 2014 Acknowledgements The author would like to thank Kendall Creighton and Paul Hudson of FlyersRights.org for their expert review of this report. Additionally, thank you to Ed Mierzwinski, U.S. PIRG Education Fund Federal Consumer Program Director, for his expertise and guidance in developing this report, and to my intern Julia Christensen for her research assistance. U.S. PIRG Education Fund thanks the Colston Warne Program of Consumers Union for making this report possible. The authors bear responsibility for any factual errors. The recommendations are those of U.S. PIRG Education Fund. The views expressed in this report are those of the authors and do not necessarily reflect the views of our funders or those who provided review. 2014 U.S. PIRG Education Fund. Some Rights Reserved. This work is licensed under a Creative Commons Attribution Non-Commercial No Derivatives 3.0 Unported License. To view the terms of this license, visit creativecommons.org/licenses/by-nc-nd/3.0. With public debate around important issues often dominated by special interests pursuing their own narrow agendas, U.S. PIRG Education Fund offers an independent voice that works on behalf of the public interest. U.S. PIRG Education Fund, a 501(c)(3) organization, works to protect consumers and promote good government. We investigate problems, craft solutions, educate the public and offer Americans meaningful opportunities for civic participation. -

Spirit Airlines, Inc

UNITED STATES OF AMERICA DEPARTMENT OF TRANSPORTATION OFFICE OF THE SECRETARY WASHINGTON, D.C. Issued by the Department of Transportation on May 5, 2020 NOTICE OF ACTION TAKEN -- DOCKET DOT-OST-2020-0037 On March 27, 2020, the President signed the Coronavirus Aid, Recovery, and Economic Security Act (CARES Act) into law. Sections 4005 and 4114(b) of the CARES Act authorize the Secretary of the U.S. Department of Transportation (Secretary) to require, “to the extent reasonable and practicable,” an air carrier receiving financial assistance under the act to maintain scheduled air transportation service as the Secretary deems necessary to ensure services to any point served by that air carrier before March 1, 2020 (Service Obligation). By Order 2020-4-2, the U.S. Department of Transportation established the parameters for implementation of the authority granted to the Secretary under the CARES Act, including an exemption process as described at pages 9-10 and in Appendix D of that order. This serves as notice to the public of an action taken by a Department official with regard to exemption(s) from Order 2020-4-2. No additional confirming order will be issued in this matter. Applicant: Spirit Airlines, Inc. (Spirit) 1. XX Service Level Exemption (large hub)1 Date Filed: April 27, 2020 In its April 27, 2020 request for exemption, Spirit Airlines requests that the Department approve a temporary suspension of service to the following covered points from its Service Obligation under the provisions of Order 2020-4-2: (1) Charlotte, NC (4) Phoenix, AZ (2) Denver, CO (5) Portland, OR (3) Minneapolis/St. -

Air Travel Consumer Report

U.S. Department of Transportation Air Travel Consumer Report A Product Of The OFFICE OF AVIATION ENFORCEMENT AND PROCEEDINGS Aviation Consumer Protection Division Issued: March 2018 Flight Delays1 January 2018 Mishandled Baggage1 January 2018 Oversales1 4th. Quarter 2017 January – December 2017 Consumer Complaints2 January 2018 (Includes Disability and Discrimination Complaints) Airline Animal Incident Reports4 January 2018 Customer Service Reports to 3 the Dept. of Homeland Security January 2018 1 Data collected by the Bureau of Transportation Statistics. Website: http://www.bts.gov 2 Data compiled by the Aviation Consumer Protection Division. Website: http://www.transportation.gov/airconsumer 3 Data provided by the Department of Homeland Security, Transportation Security Administration 4 Data collected by the Aviation Consumer Protection Division 2 TABLE OF CONTENTS Section Section Page Page Flight Delays (continued) Introduction Table 11 26 2 List of Regularly Scheduled Flights with Tarmac Flight Delays Delays Over 3 Hours, By Carrier Explanation 3 Table 11A 27 Table 1 4 List of Regularly Scheduled International Flights with Overall Percentage of Reported Flight Tarmac Delays Over 4 Hours, By Carrier Operations Arriving On Time, by Carrier Table 12 28 Table 1A 5 Number and Percentage of Regularly Scheduled Flights Overall Percentage of Reported Flight With Tarmac Delays of 2 Hours or More, By Carrier Operations Arriving On Time and Carrier Rank, Footnotes 29 by Month, Quarter, and Data Base to Date Appendix 30 Table 2 6 Number of Reported Flight Arrivals and Percentage Mishandled Baggage Arriving On Time, by Carrier and Airport Consumer Complaints Table 3 9 Explanation 31 Percentage of All Carriers' Reported Flight Operations Ranking— January 2018 32 Arriving On Time, by Airport and Time of Day Table 4 11 Oversales Percentage of All Carriers' Reported Flight Operations Explanation 33 Departing On Time, by Airport and Time of Day Ranking — 4th. -

Air Travel Consumer Report

1 Air Travel Consumer Report A Product Of The OFFICE OF AVIATION ENFORCEMENT AND PROCEEDINGS Aviation Consumer Protection Division Issued: February 2019 Flight Delays1 December 2018 Mishandled Baggage, Wheelchairs and Scooters1 December 2018 January – November 2018 Oversales1 4th. Quarter 2018 January - December 2018 Consumer Complaints2 December 2018 (Includes Disability and January - December 2018 Discrimination Complaints) Airline Animal Incident Reports4 December 2018 January - December 2018 Customer Service Reports to the Dept. of Homeland Security3 December 2018 1 Data collected by the Bureau of Transportation Statistics. Website: http://www.bts.gov 2 Data compiled by the Aviation Consumer Protection Division. Website: http://www.transportation.gov/airconsumer 3 Data provided by the Department of Homeland Security, Transportation Security Administration 4 Data collected by the Aviation Consumer Protection Division 2 TABLE OF CONTENTS Section Section Page Page Flight Delays (continued) Introduction Table 8 31 3 List of Regularly Scheduled Domestic Flights with Tarmac Flight Delays Delays Over 3 Hours, By Marketing/Operating Carrier Explanation 4 Table 8A 32 Branded Codeshare Partners 5 List of Regularly Scheduled International Flights with Table 1 6 Tarmac Delays Over 4 Hours, By Marketing/Operating Carrier Overall Percentage of Reported Flight Appendix 33 Operations Arriving On-Time, by Marketing Carrier Mishandled Baggage Table 1A 7 Explanation 34 Overall Percentage of Reported Flight December 4-31—New Method by Operating Carrier -

NACC Contact List July 2015 Update

ID POC Name POC Email Office Cell Filer Other Comments ABS Jets (Czech Republic) ABS Michal Pazourek (Chf Disp) [email protected] +420 220 111 388 + 420 602 205 (LKPRABPX & LKPRABY) [email protected] 852 ABX Air ABX Alain Terzakis [email protected] 937-366-2464 937-655-0703 (800) 736-3973 x62450 KILNABXD Ron Spanbauer [email protected] 937-366-2435 (937) 366-2450 24hr. AeroMexico AMX Raul Aguirre (FPF) [email protected] 011 (5255) 9132-5500 (281) 233-3406 Files thru HP/EDS Air Berlin BER Recep Bayindir [email protected] 49-30-3434-3705 EDDTBERA [email protected] AirBridgeCargo Airlines ABW Dmitry Levushkin [email protected] Chief Flight Dispatcher 7 8422 590370 Also see Volga-Dnepr Airlines Volga-Dnepr Airlines 7 8422 590067 (VDA) Air Canada ACA Richard Steele (Mgr Flt Supt) [email protected] 905 861 7572 647 328-3895 905 861 7528 CYYZACAW thru LIDO Rod Stone [email protected] 905 861 7570 Air China CCA Weston Li (Mgr. American Ops) [email protected] 604-233-1682 778-883-3315 Zhang Yuenian [email protected] Air Europa AEA Bernardo Salleras [email protected] Flight Ops [email protected] 34 971 178 281 (Ops Mgr) Air France AFR Thierry Vuillaume Thierry Vuillaume <[email protected]> +33 (0)1 41 56 78 65 LFPGAFRN Air India AIC Puneet Kataria [email protected] 718-632-0125 917-9811807 + 91-22-66858028 KJFKAICO [email protected] 718-632-0162direct Use SABRE for flights Files thru HP/EDS arriving/departing USA Air New Zealand -

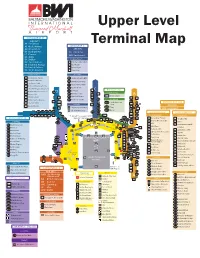

Upper Level Terminal

[Terminal map: Upper Level, showing Concourses A, B, C, and D and the B/C Connector, with legends listing airlines, food/beverages, shopping, and services for each concourse.] -

January 25, 2021 The Honorable Steven G. Bradbury Deputy Secretary & General Counsel Department of Transportation 1200

January 25, 2021 The Honorable Steven G. Bradbury Deputy Secretary & General Counsel Department of Transportation 1200 New Jersey Ave. SE Washington, DC 20590 Re: Request for Public Interest Review of the U.S. Department of Transportation’s Approval of the American Airlines and JetBlue Northeast Alliance Dear Deputy Secretary Bradbury: The American Antitrust Institute (AAI) writes to request a public interest review of the U.S. Department of Transportation’s (DOT’s) recent approval of the American Airlines Inc. (American) and JetBlue Airways Corporation (JetBlue) “Northeast Alliance” cooperative agreement.1 AAI is concerned that the public was not afforded any opportunity to comment on the public interest implications of the DOT’s approval of the cooperative agreement, which was pushed through with only 10 days remaining in the Trump administration. AAI takes no position on the merits of Complainant Spirit Airlines’ claim that implementation of the cooperative agreement constitutes an unfair method of competition under 49 U.S.C. 41712.2 Rather, AAI’s comments highlight that the DOT’s approval of the cooperative agreement itself raises significant competitive concerns. Namely, the remedies contained in DOT’s agreement may be inadequate to restore lost competition. These issues warranted public input and commentary that would have ensured an appropriate review of the American-JetBlue cooperative agreement. I. Interest of the American Antitrust Institute AAI is an independent, nonprofit organization.3 AAI’s mission is to promote competition that protects consumers, businesses, and society. We serve the public through research, education, and advocacy on the benefits of competition and the use of antitrust enforcement as a vital component of competition policy.