Comparing Colleges' Graduation Rates

Total Pages: 16

File Type: pdf, Size: 1020Kb


Recommended publications


The Red Flag Campaign

objectives
• Red Flag Campaign development process
• Core elements of the campaign
• How the campaign uses prevention messages to emphasize and promote healthy dating relationships
• Campus implementation ideas
prevalence
• Women age 16 to 24 experience the highest per capita rate of intimate partner violence. C. Rennison and S. Welchans, “Intimate Partner Violence,” U.S. Department of Justice Bureau of Justice Statistics, May 2000.
• In 1 in 5 college dating relationships, one of the partners is being abused. C. Sellers and M. Bromley, “Violent Behavior in College Student Dating Relationships,” Journal of Contemporary Criminal Justice (1996)
key players
• Advisory committee
• College student focus groups
development process: preliminary focus groups
• March 2006, four focus groups held with college students
• Two women’s groups; two men’s groups
• Students said they were willing to intervene with friends who are being victimized by or acting abusively towards their dates
• Students also indicated they would be receptive to hearing intervention and prevention messages from their friends
developing core messages
• Target college students who are friends/peers of victims and perpetrators of dating violence
– Educate friends/peers about ‘red flags’ (warning indicators) of dating violence
– Encourage friends/peers to ‘say something’ (intervene in the situation)
Social Ecological Model
• Change in person’s knowledge, attitude, behavior
• Address influence of peers and intimate partners
• Address norms or customs or people’s experience with local institutions
• Address broad social forces, such as inequality, oppression, and broad public policy changes.
focus group: example of edits
“He told me I was fat and stupid and no one else would want me … … maybe he’s right.” “I told her ‘That’s wrong. -

Nomination Guidelines for the 2022 Virginia Outstanding Faculty Awards

Nomination Guidelines for the 2022 Virginia Outstanding Faculty Awards Full and complete nomination submissions must be received by the State Council of Higher Education for Virginia no later than 5 p.m. on Friday, September 24, 2021. Please direct questions and comments to: Ms. Ashley Lockhart, Coordinator for Academic Initiatives State Council of Higher Education for Virginia James Monroe Building, 10th floor 101 N. 14th St., Richmond, VA 23219 Telephone: 804-225-2627 Email: [email protected] Sponsored by Dominion Energy VIRGINIA OUTSTANDING FACULTY AWARDS To recognize excellence in teaching, research, and service among the faculties of Virginia’s public and private colleges and universities, the General Assembly, Governor, and State Council of Higher Education for Virginia established the Outstanding Faculty Awards program in 1986. Recipients of these annual awards are selected based upon nominees’ contributions to their students, academic disciplines, institutions, and communities. 2022 OVERVIEW The 2022 Virginia Outstanding Faculty Awards are sponsored by the Dominion Foundation, the philanthropic arm of Dominion. Dominion’s support funds all aspects of the program, from the call for nominations through the award ceremony. The selection process will begin in October; recipients will be notified in early December. Deadline for submission is 5 p.m. on Friday, September 24, 2021. The 2022 Outstanding Faculty Awards event is tentatively scheduled to be held in Richmond sometime in February or March 2022. Further details about the ceremony will be forthcoming. At the 2022 event, at least 12 awardees will be recognized. Included among the awardees will be two recipients recognized as early-career “Rising Stars.” At least one awardee will also be selected in each of four categories based on institutional type: research/doctoral institution, masters/comprehensive institution, baccalaureate institution, and two-year institution. -

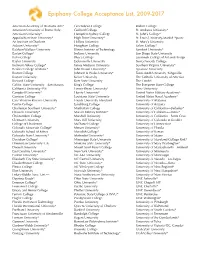

Epiphany Comprehensive College List

Epiphany College Acceptance List, 2009-2017 American Academy of Dramatic Arts* Greensboro College Rollins College American University of Rome (Italy) Guilford College St. Andrews University* American University* Hampden-Sydney College St. John’s College* Appalachian State University* High Point University* St. Louis University-Madrid (Spain) Art Institute of Charlotte Hollins University St. Mary’s University Auburn University* Houghton College Salem College* Baldwin Wallace University Illinois Institute of Technology Samford University* Barton College* Indiana University San Diego State University Bates College Ithaca College Savannah College of Art and Design Baylor University Jacksonville University Sierra Nevada College Belmont Abbey College* James Madison University Southern Virginia University* Berklee College of Music* John Brown University* Syracuse University Boston College Johnson & Wales University* Texas A&M University (Kingsville) Boston University Keiser University The Catholic University of America Brevard College Kent State University The Citadel Califor. State University—San Marcos King’s College The Evergreen State College California University (PA) Lenoir-Rhyne University* Trine University Campbell University* Liberty University* United States Military Academy* Canisius College Louisiana State University United States Naval Academy* Case Western Reserve University Loyola University Maryland University of Alabama Centre College Lynchburg College University of Arizona Charleston Southern University* Manhattan College University -

Catalog 2008-2009

Sweet Briar College Catalog 2008-2009
2008-2009 College Calendar
Fall Semester 2008
August 23, 2008: New students arrive
August 27, 2008: Opening Convocation
August 28, 2008: Classes begin
September 26, 2008: Founders’ Day
September 25-27, 2008: Homecoming Weekend
October 2-3, 2008: Reading Days
October 17-19, 2008: Families Weekend
November 5, 2008: Registration for Spring Term Begins
November 21, 2008: Thanksgiving vacation begins, 5:30 p.m. (Residence Halls close November 22 at 8 a.m.)
December 1, 2008: Classes resume
December 12, 2008: Classes End
December 13, 2008: Reading Day
December 14-19, 2008: Examinations
December 19, 2008: Winter break begins, 5:30 p.m. (Residence Halls close December 19 at 5:30 p.m.)
Spring Semester 2009
January 21, 2009: Spring Term begins
March 13, 2009: Spring vacation begins, 5:30 p.m. (Residence Halls close March 14 at 8 a.m.)
March 23, 2009 -

Emory and Henry College 01/30/1989

VLR Listed: 1/18/1983 NPS Form 10-900 NRHP Listed: 1/30/1989 OMB No. 1024-0018 (3-82) Exp. 10-31-84 United States Department of the Interior National Park Service For NPS use only National Register of Historic Places received OCT 1 ( I935 Inventory—Nomination Form date entered See instructions in How to Complete National Register Forms Type all entries—complete applicable sections 1. Name historic Emory and Henry College (VHLD File No. 95-98) and or common Same 2. Location street & number VA State Route 609 n/a not for publication Emory city, town X vicinity of 51 state Virginia Washington code county 3. Classification Category Ownership Status Present Use X district public X occupied agriculture museum building(s) X private unoccupied commercial park structure both work in progress educational private residence site Public Acquisition Accessible entertainment -X religious object in process X yes: restricted government scientific being considered yes: unrestricted industrial transportation n/a no military other: 4. Owner of Property name The Holston Conference Colleges Board of Trustees, c/o Dr. Heisse Johnson street & number P.O. Box 1176 city, town Johnson City n/a vicinity of state Tennessee 37601 5. Location of Legal Description courthouse, registry of deeds, etc. Washington County Courthouse street & number Main Street city, town Abingdon state Virginia 24210 6. Representation in Existing Surveys title Virginia Historic Landmarks has this property been determined eligible? yes X no Division Survey File No. 95-98 date 1982 federal X state county local depository for survey records Virginia Historic Landmarks Division - 221 Governor Street city, town Richmond state Virginia 23219 7. -

Forging a New Path

FORGING A NEW PATH, SWEET BRIAR TURNS TO THE FUTURE Dear Sweet Briar Alumnae, Throughout this spring semester, distinguished women musicians, writers and policy makers have streamed to the campus, in a series dubbed “At the Invitation of the President.” As you will read in this issue, the series started in January with a remarkable all-women ensemble of scholar-performers dedicated to excavating little-known string trios from the 17th and 18th century, and it ended the semester with a lecture by Bettina Ring, the secretary of agriculture and forestry for the Commonwealth. Sweet Briar was a working farm for most of its history, a fact that does not escape the secretary, both as an important legacy we share and cherish, but also as a resurgent possibility for the future — for Sweet Briar and Central Virginia. Through this series, one learns stunning things about women who shape history. A graduate of Sweet Briar, Delia Taylor Sinkov ’34 was a top code breaker who supervised a group of women who worked silently — under an “omerta” never to be betrayed in one’s lifetime — to break the Japanese navy and army codes and eventually to help win the Battle of Midway. Ultimately, the number of code breakers surpassed 10,000. While America is a country that loves and shines light on its heroes, women have often stayed in the shadow of that gleaming light; they are history’s greatest omission. “Do you like doing the crossword puzzle?” Navy recruiters would ask the potential code breakers. “And are you engaged to be married?” If the answer to the former was a “yes” and to the latter a “no,” then the women were recruited to the first wave of large-scale intelligence work upon which the nation would embark. -

Sweet Briar College Magazine – Spring 2019

Dear Sweet Briar alumnae and friends, Friendship and family have been on my mind lately, and so I want to tell you about some new friends I’ve been making this semester. One of them is Ray, who is tall and smart and also known as Love Z, and the other is Blues, who is very personable and a wonderful teacher. My friendship with Ray has grown over time; frankly, I didn’t seem to make much of an impression on him at first. My friendship with Blues blossomed immediately; we seem to be simpatico. As I joked with Merrilee “Mimi” Wroten, the director of Sweet Briar’s acclaimed riding program, maybe I bonded faster with Blues because he’s rather short, and so am I. And if you haven’t already figured it out, Ray, a chestnut warmblood, and Blues, a gray quarter horse, are members of the College’s equine family, and along with Mimi, they’ve been teaching me how to ride. As Sweet Briar’s president, it’s vital for me to learn as much as I can about the College, its programs and its people. That’s why I taught a course in our inaugural three-week session in the fall of 2018; that’s why I have just instituted collegial gatherings of faculty and staff every Monday evening (called Sweet Briar Hour); and that’s why I’m taking riding lessons, so that I can better understand our equestrian program, its accomplishments and its needs. Riding also gives me a connection with many members of our Sweet Briar family; a full third of our students identify as riders, whether for competition or recreation. -

A TWO-DAY SYMPOSIUM Randolph-Macon Woman's College and Sweet Briar College February 28 and March 1, 1985

A TWO-DAY SYMPOSIUM Randolph-Macon Woman's College and Sweet Briar College February 28 and March 1, 1985 In Celebration of Virginia Women: Their Cultural Heritage A SYMPOSIUM Thursday, February 28, 1985 Friday, March 1, 1985 SWEET BRIAR COLLEGE RANDOLPH-MACON WOMAN'S COLLEGE Tyson Auditorium Maier Museum of Art 2:00 PM OPENING SESSION 9:30 AM COFFEE College Communities and Visible Leaders: Their Contributions to Virginia Women's Education 10:00 AM PANEL MODERATOR Virginia Women Writers Elizabeth R. Baer, Associate Dean of Student Academic Affairs, Sweet Briar College MODERATOR PARTICIPANTS Elizabeth J. Lipscomb, Associate Professor of English, Claudia Chang, Assistant Professor of Anthropology, Randolph-Macon Woman's College Sweet Briar College, and Students PARTICIPANTS Finds from Archaeological Digs on Sweet Briar Campus Elizabeth R. Baer, Sweet Briar College Gerald M. Berg, Associate Professor of History, Sweet Briar College, and Students Women Diarists Presentation of Historical Research Margaret I. Raynal, Professor of English, Randolph-Macon Woman's College Anne Spencer: Rebel and Poet ORAL HISTORY Anne Jones, Visiting Associate Professor, Graduate Institute of Liberal Arts, Emory University Videotapes The Southern Lady and the Virginia Woman Writer: Glasgow and Johnston Lorraine Robertson and Helen White, Randolph-Macon Woman's College Housekeepers INTERVIEWERS 2:00 PM DISCUSSION Carolyn Wilkerson Bell, Associate Professor of English, Randolph-Macon Woman's College Mary Guthrow, Director of Academic Resource Center, Sweet Briar College Virginia Women Artists Meta Glass, President of Sweet Briar College, 1925-1946, MODERATOR Ellen Schall, Curator, The Maier Museum of Art at and Randolph-Macon Woman's College alumna, class of Randolph-Macon Woman's College 1899. -

Academic Catalog

2020-2021 ACADEMIC CATALOG One Hundred and Twenty-Eighth Session Lynchburg, Virginia The contents of this catalog represent the most current information available at the time of publication. During the period of time covered by this catalog, it is reasonable to expect changes to be made without prior notice. Thus, the provisions of this catalog are not to be regarded as an irrevocable contract between the College and the student. The Academic Catalog is produced by the Registrar’s Office in cooperation with various other offices.
Academic Calendar, 2020-2021 Undergraduate (UG) Programs (Dates subject to change)
FALL 2020
AUGUST
Thurs 13: SUPER Program begins
Fri 14: STAR Program begins
Mon 17: Summer grades due
Thurs 20: Move-in for First Years begins at 9:00 am
Thurs-Sat 20-23: New Student Orientation
Sat 22: Move-in for all other students
Mon 24: Fall UG classes begin
Wed 26: Summer Incomplete work due from students
Fri 28: End of add period for full semester and 1st quarter (UG classes); Last day to file Fall Independent Study forms
SEPTEMBER
Fri 4: End of 1st quarter drop period for UG classes; Last day for students w/ Spring Incompletes to submit required work
Fri 11: Grades due for Spring Incompletes; Last day for seniors to apply for graduation in May 2021
Fri 18: End of full semester drop period and audit period
OCTOBER
Fri 2: End of 1st quarter “W” period (UG classes); Spring 2021 course schedules due by noon (all programs)
Fri 9: End of 1st quarter UG classes
Mon 12: 2nd quarter UG classes begin
Wed 14: Midterm grades due by 10:00 am for full-semester -

Sweet Briar College Campus Reopening Plan

Sweet Briar College Campus Reopening Plan
SBC.EDU | 434.381.6100 | [email protected] | 134 CHAPEL ROAD | SWEET BRIAR, VA 24595 | JULY 17, 2020
Table of Contents
RETURNING TO SWEET BRIAR RESIDENTIAL LEARNING (p. 1)
THE SWEET BRIAR LIVING COMMUNITY (p. 2)
Campus Status (p. 2)
General Protocol (p. 2)
Orientation and Move-In Plans (p. 2)
Residence Life (p. 4)
Dining Services and Meriwether Godsey (p. 4)
Student Activities and Events (p. 5)
Athletics (p. 5)
LEARNING (p. 5)
Fall Planning -

Alumnae Magazine Volume 79

ALUMNAE MAGAZINE VOLUME 79 NUMBER 1 WINTER 2007/2008 NOTE FROM THE ALUMNAE ASSOCIATION PRESIDENT When I was a high school student looking at colleges, I was dragged kicking and screaming to look at Sweet Briar. Sweet Briar touted the refrain that women’s colleges offered far more opportunities than coeducational schools did for women to become leaders. I was one of those students who matriculated despite the fact, not because it offered single-sex education, and I took the leadership mantra with a grain of salt. After all, I attended a small Catholic high school where everyone seemed to be involved in everything and I could not imagine that women, especially me, needed help with anything. (This was the early 1980s, and I was only 17!) Not until I attended law school did I really begin to recognize what Sweet Briar had given me. Then, as now, College leaders led by example and provided ample opportunities for the students to take a lead somewhere, somehow. I was involved in all sorts of campus activities as a student and remained involved with Sweet Briar in some capacity since my graduation because I believe so strongly in the Sweet Briar Promise. In my years on the Alumnae Association Board, I have served under the leadership of two fabulous presidents, each of whom I admire for her wisdom, diplomacy, and dedication to our alma mater. I feel privileged to have been given the opportunity to follow in their footsteps. Although I have served on several boards in my professional and residential communities, Sweet Briar’s Alumnae Board is special to me in the way that Sweet Briar is special to each of us, but for reasons we cannot always articulate. Being a leader does not mean that you have to be in the position at the -

Catalog 2004-2005

Sweet Briar College Catalog 2004-2005
2004-2005 College Calendar
Fall Semester 2004
August 21, 2004: New students arrive
August 25, 2004: Registration, Opening Convocation
August 26, 2004: Classes begin
September 24, 2004: Founders’ Day
Sept. 30-Oct. 1, 2004: Reading Days
October 15-17, 2004: Families Weekend
November 10, 2004: Registration for Spring Term
November 19, 2004: Thanksgiving vacation begins, 5 p.m. (Residence Halls close at 8 a.m. November 20)
November 29, 2004: Classes resume
December 10, 2004: Classes End
December 11, 2004: Reading Day
December 12-17, 2004: Examinations
December 17, 2004: Winter break begins, 5 p.m. (Residence Halls close at 8 a.m. December 18)
Spring Semester 2005
January 20, 2005: Spring Term begins
March 11, 2005: Spring vacation begins, 5 p.m. (Residence Halls close at 8 a.m. March 12)
March 21, 2005: Classes resume
April 6, 2005: Registration