Datasets and Baselines for Armenian Named Entity Recognition

Total Page:16

File Type:pdf, Size:1020Kb

Recommended publications

Glossaries of Words 30 1

ENGLISH, ARABIC, PERSIAN, TURKISH, ARMENIAN, KURDISH, SYRIAC. By the Geographical Section of the Naval Intelligence Division, Naval Staff, Admiralty. LONDON: PUBLISHED BY HIS MAJESTY'S STATIONERY OFFICE. To be purchased through any Bookseller or directly from H.M. STATIONERY OFFICE at the following addresses: IMPERIAL HOUSE, KINGSWAY, LONDON, W.C.2, and 28 ABINGDON STREET, LONDON, S.W.1; 37 PETER STREET, MANCHESTER; 1 ST. ANDREW'S CRESCENT, CARDIFF; 23 FORTH STREET, EDINBURGH; or from E. PONSONBY LTD., 116 GRAFTON STREET, DUBLIN. 1920. Printed under the authority of His Majesty's Stationery Office by Frederick Hall at the University Press, Oxford. -

Al-Hadi Yahya b. al-Husayn: An Introduction, Newly Edited Text and Translation with Detailed Annotation

Durham E-Theses. Ghayat al-amani and the life and times of al-Hadi Yahya b. al-Husayn: an introduction, newly edited text and translation with detailed annotation. Eagle, A.B.D.R. How to cite: Eagle, A.B.D.R. (1990) Ghayat al-amani and the life and times of al-Hadi Yahya b. al-Husayn: an introduction, newly edited text and translation with detailed annotation, Durham theses, Durham University. Available at Durham E-Theses Online: http://etheses.dur.ac.uk/6185/ Use policy: The full-text may be used and/or reproduced, and given to third parties in any format or medium, without prior permission or charge, for personal research or study, educational, or not-for-profit purposes provided that: • a full bibliographic reference is made to the original source • a link is made to the metadata record in Durham E-Theses • the full-text is not changed in any way. The full-text must not be sold in any format or medium without the formal permission of the copyright holders. Please consult the full Durham E-Theses policy for further details. Academic Support Office, Durham University, University Office, Old Elvet, Durham DH1 3HP e-mail: [email protected] Tel: +44 0191 334 6107 http://etheses.dur.ac.uk ABSTRACT Eagle, A.B.D.R. M.Litt., University of Durham. 1990. "Ghayat al-amani and the life and times of al-Hadi Yahya b. al-Husayn: an introduction, newly edited text and translation with detailed annotation." The thesis is anchored upon a text extracted from an important 11th / 17th century Yemeni historical work. -

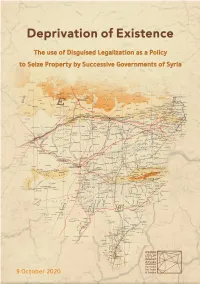

Deprivation of Existence: The Use of Disguised Legalization as a Policy to Seize Property by Successive Governments of Syria

Deprivation of Existence: The Use of Disguised Legalization as a Policy to Seize Property by Successive Governments of Syria. A special report sheds light on discrimination projects aiming at radical demographic changes in areas historically populated by Kurds. Acknowledgment and Gratitude: The present report is the result of a joint cooperation that extended from the second half of 2018 until August 2020, and it could not have been produced without the invaluable assistance of witnesses and victims who had the courage to provide us with official documents proving ownership of their seized property. This report adds to the research, books, articles and efforts made to address this subject over the past decades by Syrian/Kurdish human rights organizations, individuals, male and female researchers and parties of the Kurdish movement in Syria. Syrians for Truth and Justice (STJ) would like to thank all researchers who contributed to documenting and recording testimonies, together with the editors who worked hard to produce this first edition, which is open for amendments and updates if new credible information is made available. To give feedback or send corrections or any additional documents supporting any part of this report, please contact us on [email protected] About Syrians for Truth and Justice (STJ): STJ started as a humble project to tell the stories of Syrians experiencing enforced disappearances and torture; it grew into an established organization committed to unveiling human rights violations of all sorts committed by all parties to the conflict. Convinced that the diversity that has historically defined Syria is a wealth, our team of researchers and volunteers works with dedication at uncovering human rights violations committed in Syria, regardless of their perpetrator and victims, in order to promote inclusiveness and ensure that all Syrians are represented, and their rights fulfilled. -

Exploring Reproductive Roles and Attitudes in Saudi Arabia Azizah Linjawi

Florida State University Libraries. Electronic Theses, Treatises and Dissertations. The Graduate School. 2005. Exploring Reproductive Roles and Attitudes in Saudi Arabia. Azizah Linjawi. Follow this and additional works at the FSU Digital Library. For more information, please contact [email protected] THE FLORIDA STATE UNIVERSITY COLLEGE OF SOCIAL SCIENCES. EXPLORING REPRODUCTIVE ROLES AND ATTITUDES IN SAUDI ARABIA. By AZIZAH LINJAWI. A Dissertation submitted to the Department of Sociology in partial fulfillment of the requirements for the degree of Doctor of Philosophy. Degree awarded: Fall semester 2005. The members of the committee approve the dissertation of Azizah Linjawi defended on August 4th 2005: Elwood Carlson, Professor Directing Dissertation; Rebecca Miles, Outside Committee Member; Graham Kinloch, Committee Member; Isaac Eberstein, Committee Member. Approved: Patricia Martin (Chair - Sociology); Graham Kinloch (College of Social Sciences). The office of Graduate studies has verified and approved the above named committee members. ACKNOWLEDGEMENT Praise be to almighty Allah, the most merciful one, for his blessings to me which enabled me to finish my study at FSU. I write this acknowledgement with special gratitude and thanks to my Professor Elwood Carlson for his great teaching and his unlimited patience. I really appreciate his commitment to teaching me and imparting knowledge and encouragement. I have great respect for him. I would like to express my deepest appreciation to Professor Isaac Eberstein for his tremendous encouragement and advisement. I also wish to convey much thanks to Professor Rebecca Miles for sharing with me her own experience in research related to the Arab world and for always being willing to teach. -

“The Role of Islamic Organizations in the Development and Implementation of Islamic Law in Indonesia” Sri Sultarini Rahayu & Riska Angriani

“The Role of Islamic Organizations in the Development and Implementation of Islamic Law in Indonesia” Sri Sultarini Rahayu & Riska Angriani. CHAPTER I INTRODUCTION 1.1. Background. Indonesia is the largest Muslim country in the world, followed in order by Pakistan, India, Bangladesh and Turkey. As the largest Muslim country, Indonesia plays an important role in the Islamic world, so its position carries considerable weight. Indonesia's emergence as a new force on the international stage is also supported by historical reality, evidenced by the emergence of Islamic mass organizations (ormas Islam) in Indonesia, most of which existed even before Indonesia's independence. The history of Islamic mass organizations is very long. They have been present across many eras: from Dutch colonialism and the Japanese occupation, through the post-independence Old Order (Orde Lama) and the development era of the New Order (Orde Baru), to the present democratic Reformasi period. Through these ever-changing times, one thing is certain: Islamic mass organizations have made a major contribution to the flourishing of Islam in Indonesia. The dynamics of Islamic law in Indonesia cannot be separated from the role and contribution of Islamic mass organizations in promoting its development and implementation. Islamic law has developed rapidly thanks to the role of Islamic mass organizations, realized through activities in various fields such as education, health and politics. Therefore, in this paper we will discuss further the role of Islamic organizations in the development and implementation of Islamic law in Indonesia. CHAPTER II DISCUSSION 2.1. Definition of Islamic Mass Organizations. Organization -

Thesis Draft 8 with Corrections

Fuṣḥá, ‘āmmīyah, or both?: Towards a theoretical framework for written Cairene Arabic. Saussan Khalil. Submitted in accordance with the requirements for the degree of Doctor of Philosophy. The University of Leeds, Arabic, Islamic and Middle Eastern Studies, School of Languages, Cultures and Societies. September 2018. I confirm that the work submitted is my own and that appropriate credit has been given where reference has been made to the work of others. This copy has been supplied on the understanding that it is copyright material and that no quotation from the thesis may be published without proper acknowledgement. The right of Saussan Khalil to be identified as Author of this work has been asserted by her in accordance with the Copyright, Designs and Patents Act 1988. © 2018 The University of Leeds and Saussan Khalil. Acknowledgements: I would like to firstly thank my supervisor, Professor James Dickins for his support and encouragement throughout this study, and his endless patience and advice in guiding me on this journey. From the University of Leeds, I would like to thank Mrs Karen Priestley for her invaluable support and assistance with all administrative matters, however great or small. From the University of Cambridge, I would like to thank my colleagues Professor Amira Bennison, Dr Rachael Harris and Mrs Farida El-Keiy for their encouragement and allowing me to take the time to complete this study. I would also like to thank Dr Barry Heselwood, Professor Rex Smith, Dr Marco Santello and Dr Serge Sharoff for their input and guidance on specific topics and relevant research areas to this study. -

Doing Business Guide 2021: Understanding Saudi Arabia's Tax Position

Doing business guide 2021: Understanding Saudi Arabia’s tax position. Contents: About the Kingdom of Saudi Arabia; Market overview; Industries of opportunity; Entering the market. About the Kingdom of Saudi Arabia: A country located in the Arabian Peninsula, the Kingdom of Saudi Arabia (KSA, Saudi Arabia or The Kingdom) is the largest oil-producing country in the world. Throughout this guide, we have provided our comments with respect to KSA, unless noted otherwise. Government type: Monarchy. Population (2019): 34.2 million. GDP (2019): US$ 793 billion. GDP growth (2019): 0.33%. Inflation (2019): -2.09%. Labor force (2019): 14.38 million. Key industries: crude oil production, petroleum refining, basic petrochemicals, ammonia, industrial gases, sodium hydroxide (caustic soda), cement, fertilizer, plastics, metals, commercial ship repair, commercial aircraft repair, construction. Source: World Bank, General Authority of Statistics. Market overview: • Saudi Arabia is an oil-based economy with the largest proven crude oil reserves in the world. -

Education in Danger Monthly News Brief May 2019

Education in Danger Monthly News Brief, May 2019. This monthly digest comprises threats and incidents of violence as well as protests and other events affecting education. It is prepared by Insecurity Insight and draws on information collected by Insecurity Insight and the Global Coalition to Protect Education from Attack (GCPEA) from information available in open sources. GCPEA provided technical guidance on definitions of attacks on education. Data from the Education in Danger Monthly News Brief is available on HDX Insecurity Insight. Attacks on education: The section aligns with the definition of attacks on education used by the Global Coalition to Protect Education from Attack (GCPEA) in Education under Attack 2018. Africa. Cameroon. 21 May 2019: In Bamenda town, Mezam region, Ambazonian separatists reportedly killed and dismembered a teacher. No further details specified. Source: ACLED. 24 May 2019: In Bakweri town, Buea region, three teachers were reportedly abducted by Ambazonian separatists. A ransom was demanded for their release. Source: ACLED. Mozambique. 09 May 2019: In Mocimboa da Praia town, Cabo Delgado province, insurgents from the militia group ASQJ reportedly beheaded a teacher. No further details specified. Source: ACLED. Nigeria. 29 April 2019: In Katari village, Kagarko local government area, Kaduna state, unidentified perpetrators blocking the Kaduna-Abuja Highway abducted the chairman of the Universal Basic Education Commission and his daughter, killing the driver in the process. Sources: Punch and The Guardian Nigeria. 30 April 2019: In Abuja, unidentified gunmen broke into the Taraba State University and kidnapped the Deputy Registrar, subsequently calling the brother of the victim to request a ransom. -

Errata E&C 31

ERRATA for Extensions & Corrections to the UDC, 31 (2009). Revised UDC Tables (pp. 49-159). This document lists typographical errors found in the Revised UDC Tables of Extensions & Corrections to the UDC, 31 (2009), as well as some other changes introduced during the MRF 09 database update. The column on the right ('should read') shows the correct way in which records were entered in the UDC MRF09. Parts of the text that were corrected are marked in bold. Because of the systematic text checks in the new database, there are a number of additional corrections that were not published in the E&C 31 but are introduced in the MRF09. For instance, upon the introduction of the field IN (General Information note) this year, the text formerly stored in the Editorial Note (administrative field 955) was moved to the IN field. These changes were not published in the E&C 31 but are all listed below. All UDC numbers that were not present in E&C 31 but were updated in the process of the MRF09 production are introduced with the symbol # (number sign). APPEARS IN E&C 31: "# +, / Table 1a. Connecting symbols. Coordination. Extensions". SHOULD READ: "# +, / Table 1a - Connecting symbols. Coordination. Extension" [remainder is the same]. APPEARS IN E&C 31: "! + Coordination. Addition. SN: The coordination sign + (plus) connects two or more separated (non-consecutive, non-related) UDC numbers, to denote a compound subject for which no single number exists. Example(s) of combination: (44+460) France and Spain; (470+571) Russia." SHOULD READ: "! + Coordination. Addition. SN: The coordination sign + (plus) (Table 1a) connects two or more separated (non-consecutive) UDC numbers, to denote a compound subject for which no single number exists. Example(s) of combination:" -

Metu Jfa 2019/1 (36:1) 281-283

METU JFA 2019/1 (36:1) 281-283. DOI: 10.4305/METU.JFA.2019.1.10. BOOK REVIEW: ISLAMIC ARCHITECTURE AND ART IN CROATIA: OTTOMAN AND CONTEMPORARY HERITAGE. Zlatko Karač, Alen Žunić (Faculty of Architecture, University of Zagreb, UPI-2M PLUS, Zagreb, 2018. 432 pages). ISBN: 978-953-7703-35-6. Rifat ALİHODŽİĆ*. The scientific monograph co-authored by dr.sc. Zlatko Karač and dr.sc. Alen Žunić is a comprehensive study, published at the end of 2018. It represents the first significant affirmation of the Islamic heritage in Croatia. It presents not only the almost unknown Islamic heritage from the 16th and 17th centuries, but also the contemporary architecture of the 20th and 21st centuries. The book quickly drew the attention of the academic community, winning the international Award Ranko Radović (Belgrade, Serbia) for the best theoretical work on architecture and the city in 2018, which was soon followed by the Radovan Ivančević Award, awarded by the Society of Historians of Croatia and the prestigious City of Zagreb Award. [...] Croatia for a long time, even though there was enough historical distance for this to be corrected earlier. In the professional and especially general public, this cultural heritage was regarded as irrelevant, strange and fragmentary. Having not been viewed within its entire social context and historical dimension, this heritage became neglected, which has been corrected to a significant extent with the appearance of this monograph. There is scarce heritage of Ottoman monuments in Croatia now, but even in those places where there are some remains, they are very often not recognized and revalorized. Unpleasant historical memory, antagonisms, destruction out of revenge and the -

Saudi-Iranian Relations Since the Fall of Saddam

This PDF document was made available from www.rand.org as a public service of the RAND Corporation. The RAND Corporation is a nonprofit research organization providing objective analysis and effective solutions that address the challenges facing the public and private sectors around the world. Limited Electronic Distribution Rights: This document and trademark(s) contained herein are protected by law as indicated in a notice appearing later in this work. This electronic representation of RAND intellectual property is provided for non-commercial use only. Unauthorized posting of RAND PDFs to a non-RAND Web site is prohibited. RAND PDFs are protected under copyright law. Permission is required from RAND to reproduce, or reuse in another form, any of our research documents for commercial use. For information on reprint and linking permissions, please see RAND Permissions. This product is part of the RAND Corporation monograph series. RAND monographs present major research findings that address the challenges facing the public and private sectors. All RAND monographs undergo rigorous peer review to ensure high standards for research quality and objectivity. Saudi-Iranian Relations Since the Fall of Saddam: Rivalry, Cooperation, and Implications for U.S. -

A Cultural Policy for Arab-Israeli Partnership

RECLAMATION: A CULTURAL POLICY FOR ARAB-ISRAELI PARTNERSHIP. JOSEPH BRAUDE. THE WASHINGTON INSTITUTE FOR NEAR EAST POLICY, www.washingtoninstitute.org. The opinions expressed in this Policy Focus are those of the author and not necessarily those of The Washington Institute, its Board of Trustees, or its Board of Advisors. Policy Focus 158, January 2019. All rights reserved. Printed in the United States of America. No part of this publication may be reproduced or transmitted in any form or by any means, electronic or mechanical, including photocopy, recording, or any information storage and retrieval system, without permission in writing from the publisher. ©2019 by The Washington Institute for Near East Policy. The Washington Institute for Near East Policy, 1111 19th Street NW, Suite 500, Washington, DC 20036. Cover design: John C. Koch. Text design: 1000colors.org. Contents: Acknowledgments. Introduction: A Call for Reclamation. PART I, A FRAUGHT LEGACY: 1. The Story of a Cultural Tragedy; 2. The Moroccan Anomaly. PART II, A NEW HOPE: 3. Arab Origins of the Present Opportunity; 4. Communication from the Outside In: Israel & the United States. PART III, AN UNFAIR FIGHT: 5. Obstacles to a Cultural Campaign. PART IV, CONCLUSION & RECOMMENDATIONS: 6. A Plan for Reclamation. About the Author. ACKNOWLEDGMENTS: THE AUTHOR WISHES TO THANK the leadership, team, and community of the Washington Institute for Near East Policy for their friendship, encouragement, and patience, over twenty-five years and counting. Joseph Braude, January 2019. INTRODUCTION: A Call for Reclamation. A RANGE OF ARAB LEADERS and institutions have recently signaled greater openness toward the state of Israel and Jews generally.