Achieving Reliability and Fairness in Online Task Computing Environments


Recommended publications

-

October 18 - November 28, 2019 Your Movie! Now Serving!

Grab a Brew with October 18 - November 28, 2019 your Movie! Now Serving! 905-545-8888 • 177 SHERMAN AVE. N., HAMILTON ,ON • WWW.PLAYHOUSECINEMA.CA WINNER Highest Award! Atwood is Back at the Playhouse! Cannes Film Festival 2019! - Palme D’or New documentary gets up close & personal Tiff -3rd Runner - Up Audience Award! with Canada’ international literary star! This black comedy thriller is rated 100% on Rotten Tomatoes. Greed and class discrimination threaten the newly formed symbiotic re- MARGARET lationship between the wealthy Park family and the destitute Kim clan. ATWOOD: A Word After a Word “HHHH, “Parasite” is unquestionably one of the best films of the year.” After a Word is Power. - Brian Tellerico Rogerebert.com ONE WEEK! Nov 8-14 Playhouse hosting AGH Screenings! ‘Scorcese’s EPIC MOB PICTURE is HEADED to a Best Picture Win at Oscars!” - Peter Travers , Rolling Stone SE LAYHOU 2! AGH at P 27! Starts Nov 2 Oct 23- OPENS Nov 29th Scarlett Johansson Laura Dern Adam Driver Descriptions below are for films playing at BEETLEJUICE THE CABINET OF DR. CALIGARI Dir.Tim Burton • USA • 1988 • 91min • Rated PG. FEAT. THE VOC HARMONIC ORCHESTRA the Playhouse Cinema from October 18 2019, Dir. Paul Downs Colaizzo • USA • 2019 • 103min • Rated STC. through to and including November 28, 2019. TIM BURTON’S HAUNTED CLASSIC “Beetlejuice, directed by Tim Burton, is a ghost story from the LIVE MUSICAL ACCOMPANIMENT • CO-PRESENTED BY AGH haunters' perspective.The drearily happy Maitlands (Alec Baldwin and Iconic German Expressionist silent horror - routinely cited as one of Geena Davis) drive into the river, come up dead, and return to their the greatest films ever made - presented with live music accompani- Admission Prices beloved, quaint house as spooks intent on despatching the hideous ment by the VOC Silent Film Harmonic, from Kitchener-Waterloo. -

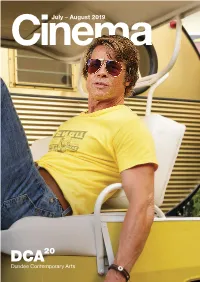

Cinema, on the Big Screen, with an Audience, Midsommar 6 Something That We Know Quentin Feels As Once Upon a Time In

CinJuly – Ae ugust 2019ma One of the most anticipated films of the summer, Contents Quentin Tarantino’s latest offering is a perfect New Films summer movie. Once Upon a Time in... Hollywood Blinded by the Light 9 o is full of energy, laughs, buddies and baddies, and The Chambermaid 8 the kind of bravado filmmaking we’ve come to love l The Dead Don’t Die 7 from this director. It is also, without a shadow of Ironstar: Summer Shorts 28 l a doubt, the kind of film that begs to be seen in The Lion King 27 a cinema, on the big screen, with an audience, Midsommar 6 something that we know Quentin feels as Once Upon a Time in... Hollywood 10 e passionately about as we do. It is a real thrill to Pain and Glory 11 be able to say Once Upon a Time in... Hollywood Photograph 8 will be shown at DCA exclusively on 35mm. We Robert the Bruce 5 can’t wait to fire up our Centurys, hear the whirr h Spider-Man: Far From Home 4 of the projectors in the booth and experience the Tell It To The Bees 7 magic of celluloid again. If you’ve never seen a film The Cure – Anniversary 13 on film, now is your chance! 197 8–2018 Live in Hyde Park Vita & Virginia 4 Another master of cinema, Pedro Almodóvar, also Documentary returns to our screens with an introspective, tender Marianne & Leonard: Words of Love 13 look at the life of an artist facing up to his regrets Of Fish and Foe 12 and the past loves who shaped him. -

Con-Scripting the Masses: False Documents and Historical Revisionism in the Americas

University of Massachusetts Amherst ScholarWorks@UMass Amherst Open Access Dissertations 2-2011 Con-Scripting the Masses: False Documents and Historical Revisionism in the Americas Frans Weiser University of Massachusetts Amherst Follow this and additional works at: https://scholarworks.umass.edu/open_access_dissertations Part of the Comparative Literature Commons Recommended Citation Weiser, Frans, "Con-Scripting the Masses: False Documents and Historical Revisionism in the Americas" (2011). Open Access Dissertations. 347. https://scholarworks.umass.edu/open_access_dissertations/347 This Open Access Dissertation is brought to you for free and open access by ScholarWorks@UMass Amherst. It has been accepted for inclusion in Open Access Dissertations by an authorized administrator of ScholarWorks@UMass Amherst. For more information, please contact [email protected]. CON-SCRIPTING THE MASSES: FALSE DOCUMENTS AND HISTORICAL REVISIONISM IN THE AMERICAS A Dissertation Presented by FRANS-STEPHEN WEISER Submitted to the Graduate School of the University of Massachusetts Amherst in partial fulfillment Of the requirements for the degree of DOCTOR OF PHILOSOPHY February 2011 Program of Comparative Literature © Copyright 2011 by Frans-Stephen Weiser All Rights Reserved CON-SCRIPTING THE MASSES: FALSE DOCUMENTS AND HISTORICAL REVISIONISM IN THE AMERICAS A Dissertation Presented by FRANS-STEPHEN WEISER Approved as to style and content by: _______________________________________________ David Lenson, Chair _______________________________________________ -

Anna Magnani

5 JUL 19 5 SEP 19 1 | 5 JUL 19 - 5 SEP 19 88 LOTHIAN ROAD | FILMHOUSECinema.COM FILMS WORTH TALKING ABOUT HOME OF THE EDINBURGH INTERNATIONAL FILM FESTIVAL Filmhouse, Summer 2019, Part II Luckily I referred to the last issue of this publication as the early summer double issue, which gives me the opportunity to call this one THE summer double issue, as once again we’ve been able to cram TWO MONTHS (July and August) of brilliant cinema into its pages. Honestly – and honesty about the films we show is one of the very cornerstones of what we do here at Filmhouse – should it become a decent summer weather-wise, please don’t let it put you off coming to the cinema, for that would be something of an, albeit minor in the grand scheme of things, travesty. Mind you, a quick look at the long-term forecast tells me you’re more likely to be in here hiding from the rain. Honestly…? No, I made that last bit up. I was at a world-renowned film festival on the south coast of France a few weeks back (where the weather was terrible!) seeing a great number of the films that will figure in our upcoming programmes. A good year at that festival invariably augurs well for a good year at this establishment and it’s safe to say 2019 was a very good year. Going some way to proving that statement right off the bat, one of my absolute favourites comes our way on 23 August, Pedro Almodóvar’s Pain and Glory which is simply 113 minutes of cinematic pleasure; and, for you, dear reader, I put myself through the utter bun-fight that was getting to see Quentin Tarantino’s Once Upon a Time.. -

Bruce Jackson & Diane Christian Video Introduction To

Virtual May 5, 2020 (XL:14) Pedro Almodóvar: PAIN AND GORY (2019, 113m) Spelling and Style—use of italics, quotation marks or nothing at all for titles, e.g.—follows the form of the sources. Bruce Jackson & Diane Christian video introduction to this week’s film Click here to find the film online. (UB students received instructions how to view the film through UB’s library services.) Videos: “Pedro Almodóvar and Antonio Banderas on Pain and Glory (New York Film Festival, 45:00 min.) “How Antonio Banderas Became Pedro Almodóvar in ‘Pain & Glory’” (Variety, 4:40) “PAIN AND GLORY Cast & Crew Q&A” (TIFF 2019, 24:13) CAST Antonio Banderas...Salvador Mallo “Pedro Almodóvar on his new film ‘Pain and Glory, Penélope Cruz...Jacinta Mallo Penelope Cruz and his sexuality” (27:23) Raúl Arévalo...Venancio Mallo Leonardo Sbaraglia...Federico Delgado DIRECTOR Pedro Almodóvar Asier Etxeandia...Alberto Crespo WRITER Pedro Almodóvar Cecilia Roth...Zulema PRODUCERS Agustín Almodóvar, Ricardo Marco Pedro Casablanc...Doctor Galindo Budé, and Ignacio Salazar-Simpson Nora Navas...Mercedes CINEMATOGRAPHER José Luis Alcaine Susi Sánchez...Beata EDITOR Teresa Font Julieta Serrano...Jacinta Mallo (old age) MUSIC Alberto Iglesias Julián López...the Presenter Paqui Horcajo...Mercedes The film won Best Actor (Antonio Banderas) and Best Rosalía...Rosita Composer and was nominated for the Palme d'Or and Marisol Muriel...Mari the Queer Palm at the 2019 Cannes Film Festival. It César Vicente...Eduardo was also nominated for two Oscars at the 2020 Asier Flores...Salvador Mallo (child) Academy Awards (Best Performance by an Actor for Agustín Almodóvar...the priest Banderas and Best International Feature Film). -

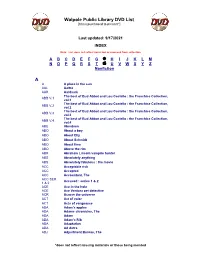

Walpole Public Library DVD List A

Walpole Public Library DVD List [Items purchased to present*] Last updated: 9/17/2021 INDEX Note: List does not reflect items lost or removed from collection A B C D E F G H I J K L M N O P Q R S T U V W X Y Z Nonfiction A A A place in the sun AAL Aaltra AAR Aardvark The best of Bud Abbot and Lou Costello : the Franchise Collection, ABB V.1 vol.1 The best of Bud Abbot and Lou Costello : the Franchise Collection, ABB V.2 vol.2 The best of Bud Abbot and Lou Costello : the Franchise Collection, ABB V.3 vol.3 The best of Bud Abbot and Lou Costello : the Franchise Collection, ABB V.4 vol.4 ABE Aberdeen ABO About a boy ABO About Elly ABO About Schmidt ABO About time ABO Above the rim ABR Abraham Lincoln vampire hunter ABS Absolutely anything ABS Absolutely fabulous : the movie ACC Acceptable risk ACC Accepted ACC Accountant, The ACC SER. Accused : series 1 & 2 1 & 2 ACE Ace in the hole ACE Ace Ventura pet detective ACR Across the universe ACT Act of valor ACT Acts of vengeance ADA Adam's apples ADA Adams chronicles, The ADA Adam ADA Adam’s Rib ADA Adaptation ADA Ad Astra ADJ Adjustment Bureau, The *does not reflect missing materials or those being mended Walpole Public Library DVD List [Items purchased to present*] ADM Admission ADO Adopt a highway ADR Adrift ADU Adult world ADV Adventure of Sherlock Holmes’ smarter brother, The ADV The adventures of Baron Munchausen ADV Adverse AEO Aeon Flux AFF SEAS.1 Affair, The : season 1 AFF SEAS.2 Affair, The : season 2 AFF SEAS.3 Affair, The : season 3 AFF SEAS.4 Affair, The : season 4 AFF SEAS.5 Affair, -

Index to Volume 29 January to December 2019 Compiled by Patricia Coward

THE INTERNATIONAL FILM MAGAZINE Index to Volume 29 January to December 2019 Compiled by Patricia Coward How to use this Index The first number after a title refers to the issue month, and the second and subsequent numbers are the page references. Eg: 8:9, 32 (August, page 9 and page 32). THIS IS A SUPPLEMENT TO SIGHT & SOUND SUBJECT INDEX Film review titles are also Akbari, Mania 6:18 Anchors Away 12:44, 46 Korean Film Archive, Seoul 3:8 archives of television material Spielberg’s campaign for four- included and are indicated by Akerman, Chantal 11:47, 92(b) Ancient Law, The 1/2:44, 45; 6:32 Stanley Kubrick 12:32 collected by 11:19 week theatrical release 5:5 (r) after the reference; Akhavan, Desiree 3:95; 6:15 Andersen, Thom 4:81 Library and Archives Richard Billingham 4:44 BAFTA 4:11, to Sue (b) after reference indicates Akin, Fatih 4:19 Anderson, Gillian 12:17 Canada, Ottawa 4:80 Jef Cornelis’s Bruce-Smith 3:5 a book review; Akin, Levan 7:29 Anderson, Laurie 4:13 Library of Congress, Washington documentaries 8:12-3 Awful Truth, The (1937) 9:42, 46 Akingbade, Ayo 8:31 Anderson, Lindsay 9:6 1/2:14; 4:80; 6:81 Josephine Deckers’s Madeline’s Axiom 7:11 A Akinnuoye-Agbaje, Adewale 8:42 Anderson, Paul Thomas Museum of Modern Art (MoMA), Madeline 6:8-9, 66(r) Ayeh, Jaygann 8:22 Abbas, Hiam 1/2:47; 12:35 Akinola, Segun 10:44 1/2:24, 38; 4:25; 11:31, 34 New York 1/2:45; 6:81 Flaherty Seminar 2019, Ayer, David 10:31 Abbasi, Ali Akrami, Jamsheed 11:83 Anderson, Wes 1/2:24, 36; 5:7; 11:6 National Library of Scotland Hamilton 10:14-5 Ayoade, Richard -

51ST ANNUAL CONVENTION March 5–8, 2020 Boston, MA

Northeast Modern Language Association 51ST ANNUAL CONVENTION March 5–8, 2020 Boston, MA Local Host: Boston University Administrative Sponsor: University at Buffalo SUNY 1 BOARD OF DIRECTORS President Carole Salmon | University of Massachusetts Lowell First Vice President Brandi So | Department of Online Learning, Touro College and University System Second Vice President Bernadette Wegenstein | Johns Hopkins University Past President Simona Wright | The College of New Jersey American and Anglophone Studies Director Benjamin Railton | Fitchburg State University British and Anglophone Studies Director Elaine Savory | The New School Comparative Literature Director Katherine Sugg | Central Connecticut State University Creative Writing, Publishing, and Editing Director Abby Bardi | Prince George’s Community College Cultural Studies and Media Studies Director Maria Matz | University of Massachusetts Lowell French and Francophone Studies Director Olivier Le Blond | University of North Georgia German Studies Director Alexander Pichugin | Rutgers, State University of New Jersey Italian Studies Director Emanuela Pecchioli | University at Buffalo, SUNY Pedagogy and Professionalism Director Maria Plochocki | City University of New York Spanish and Portuguese Studies Director Victoria L. Ketz | La Salle University CAITY Caucus President and Representative Francisco Delgado | Borough of Manhattan Community College, CUNY Diversity Caucus Representative Susmita Roye | Delaware State University Graduate Student Caucus Representative Christian Ylagan | University -

57Th New York Film Festival September 27- October 13, 2019

57th New York Film Festival September 27- October 13, 2019 filmlinc.org AT ATTHE THE 57 57TH NEWTH NEW YORK YORK FILM FILM FESTIVAL FESTIVAL AndAnd over over 40 40 more more NYFF NYFF alums alums for for your your digitaldigital viewing viewing pleasure pleasure on onthe the new new KINONOW.COM/NYFFKINONOW.COM/NYFF Table of Contents Ticket Information 2 Venue Information 3 Welcome 4 New York Film Festival Programmers 6 Main Slate 7 Talks 24 Spotlight on Documentary 28 Revivals 36 Special Events 44 Retrospective: The ASC at 100 48 Shorts 58 Projections 64 Convergence 76 Artist Initiatives 82 Film at Lincoln Center Board & Staff 84 Sponsors 88 Schedule 89 SHARE YOUR FESTIVAL EXPERIENCE Tag us in your posts with #NYFF filmlinc.org @FilmLinc and @TheNYFF /nyfilmfest /filmlinc Sign up for the latest NYFF news and ticket updates at filmlinc.org/news Ticket Information How to Buy Tickets Online filmlinc.org In Person Advance tickets are available exclusively at the Alice Tully Hall box office: Mon-Sat, 10am to 6pm • Sun, 12pm to 6pm Day-of tickets must be purchased at the corresponding venue’s box office. Ticket Prices Main Slate, Spotlight on Documentary, Special Events*, On Cinema Talks $25 Member & Student • $30 Public *Joker: $40 Member & Student • $50 Public Directors Dialogues, Master Class, Projections, Retrospective, Revivals, Shorts $12 Member & Student • $17 Public Convergence Programs One, Two, Three: $7 Member & Student • $10 Public The Raven: $70 Member & Student • $85 Public Gala Evenings Opening Night, ATH: $85 Member & Student • $120 Public Closing Night & Centerpiece, ATH: $60 Member & Student • $80 Public Non-ATH Venues: $35 Member & Student • $40 Public Projections All-Access Pass $140 Free Events NYFF Live Talks, American Trial: The Eric Garner Story, Holy Night All free talks are subject to availability. -

WIFF-2019-Program-FULL-1.Pdf

HOW TO WIFF 2 TICKET INFO 3 WHERE TO WIFF 4 WIFF VILLAGE/WIFF ALLEY 5 WHO WE ARE 6-7 SALUTE TO OUR SPONSORS 8-21 SPECIAL THANKS 22 A MESSAGE FROM VINCENT 23 A MESSAGE FROM LYNNE 24 A MESSAGE FROM THE MAYOR 25 FESTIVAL FACTS 26-27 MIDNIGHT MADNESS 28 SPECIAL SCREENINGS 29 WIFF PRIZE IN CANADIAN FILM 30 MARK BOSCARIOL 48-HOUR FLICKFEST 31 WIFF LOCAL 32 WOMEN OF WIFF 33 HOTDOCS SHOWCASE 34 SPOTLIGHT ON ARCHITECTURE 35 LES FILMS FRANCOPHONES 36 IN CELEBRATION OF MUSIC 37 TRIXIE MATTEL 38 LGBTQ2+ 39 SPOTLIGHT AWARD 40 TAKE IT FROM VINCENT 41 OPENING NIGHT FILM 42 CLOSING NIGHT FILM 43 FILM SYNOPSES 44-127 SCREENING CALENDAR 128-137 INDEX BY COUNTRY 138-139 1. PICK YOUR FILMS Films and showtimes can be found online at windsorfilmfestival.com, in the program book or at the box office. Film synopses are listed by title in alphabetical order, with their countries of origin noted. 2. TYPES OF TICKETS If you plan on seeing several films, consider a festival pass for an unlimited movie experience. Show your valid student card for discounted single tickets or festival passes. If you want to catch a show with a large group of 20+, our group sales packages will help you save. If tickets are sold out in advance a limited number of standby tickets will be available at the venue just before showtime. 3. BUY YOUR TICKETS Online – Purchase through your phone or computer by visiting our website. Print them off at home or bring them up on your mobile device. -

Mayor De San Andres University School of Humanities and Education Sciences Department of Linguistics and Languages

MAYOR DE SAN ANDRES UNIVERSITY SCHOOL OF HUMANITIES AND EDUCATION SCIENCES DEPARTMENT OF LINGUISTICS AND LANGUAGES THESIS LINGUISTIC RESOURCES EMPLOYED BY THE POLITICAL PARTY M.A.S. AS A STRATEGY IN VERBAL GOVERNMENT PROPAGANDA TO BRING FORWARD A DOMINANT DISCURSIVE IDEOLOGY IN THE BOLIVIAN SOCIETY A thesis presented for the requirements of Licenciatura Degree SUBMITTED BY: Eugenio Walter Ajoruro Alanoca SUPERVISOR: Elizabeth Rojas Candia, Ph.D. LA PAZ – BOLIVIA 2020 MAYOR DE SAN ANDRES UNIVERSITY SCHOOL OF HUMANITIES AND EDUCATION SCIENCES DEPARTMENT OF LINGUISTICS AND LANGUAGES THESIS LINGUISTIC RESOURCES EMPLOYED BY THE POLITICAL PARTY M.A.S. AS A STRATEGY IN VERBAL GOVERNMENT PROPAGANDA TO BRING FORWARD A DOMINANT DISCURSIVE IDEOLOGY IN THE BOLIVIAN SOCIETY © 2020 Eugenio Walter Ajoruro Alanoca A thesis presented for the requirements of Licenciatura Degree. ………………………………………………………………………………………………… ………………………………………………………………………………………………… ………………………………………………………………………………………………… Head of Department: Lic. Maria Virginia Ferrufino Loza Supervisor: Elizabeth Rojas Candia Ph.D. Committee: Lic. Maria Virginia Coronado Conde Committee: M.Sc. Roberto Quina Mamani Date………………………………………... / 2020 i ABSTRACT This research aims to examine five government propaganda developed by the Ministry of Communication in July and August 2017 in Bolivia. The analysis is focused on Fairclough’s (1995) model of Critical Discourse Analysis, contending that there is a close link across text, discourse practice, and socio-cultural practice. It also employs Walton´s (1997) observation of Propaganda by using Discourse Analysis as a method which claims that propaganda is an art of persuasion and re-education of the audience, even of manipulating the social attitude encouraged by specific objectives. Likewise, the research analysis takes van Dijk´s (1998) ideological structures, which, according to him, are being developed to legitimate power or social inequality. -

16 May 2021 Spanishfilmfestival.Com

20 APRIL - 16 MAY 2021 SPANISHFILMFESTIVAL.COM SPANISH FILM FESTIVAL SPANISHFILMFEST SPONSORS THANK YOU TO OUR GENEROUS SPONSORS PRESENTED BY NAMING RIGHTS PARTNER CULTURAL PARTNERS OFFICIAL BEER PARTNER HOTEL PARTNERS SUPPORTING PARTNERS CATERING PARTNERS 2 SPONSORS 2 REEL LATINO OPENING NIGHT Ema .................................................. 33 Go Youth! .......................................... 34 Rosa’s Wedding .............................. 4-5 Spider ............................................... 35 ARGENTINIAN NIGHT The Adopters ..................................... 36 CONTENTS Heroic Losers ................................. 6-7 The Good Intentions ........................... 37 The Moneychanger ............................ 38 YOUNG AT HEART presents White on White .................................. 39 Bye Bye Mr. Etxebeste! ................... 8-9 SESSIONS AND TICKETING CENTREPIECE MELBOURNE/SYDNEY While at War ................................ 10-11 Ticket Information .............................. 40 Melbourne Session Times ............. 41-45 SPECIAL EVENTS Sydney Session Times ................... 46-49 Latigo .......................................... 12-13 Wishlist ........................................ 14-15 CANBERRA Ticket Information .............................. 50 SPECIAL PRESENTATION Session Times .............................. 51-52 A Thief’s Daughter ............................. 17 BYRON BAY REEL ESPAÑOL Ticket Information .............................. 53 Cross the Line ...................................