IBM xSeries 380: 733 MHz and 800 MHz Intel Itanium Enterprise Server with Microsoft Windows Advanced Server, Limited Edition

Total Pages: 16

File Type: PDF, Size: 1020 KB

Recommended publications

HP Z620 Memory Configurations and Optimization

HP recommends Windows® 7. HP Z620 Memory Configurations and Optimization. The purpose of this document is to provide an overview of the memory configuration for the HP Z620 Workstation and to provide recommendations to optimize performance. Supported Memory Modules: The types of memory supported on a HP Z620 are: • 2 GB and 4 GB PC3-12800E 1600MHz DDR3 Unbuffered ECC DIMMs • 4 GB and 8 GB PC3-12800R 1600MHz DDR3 Registered DIMMs • 1.35V and 1.5V DIMMs are supported, but the system will operate the DIMMs at 1.5V only. • 2 Gb and 4 Gb based DIMMs are supported. See the Memory Technology White Paper for additional technical information. Memory Features: ECC is supported on all of our supported DIMMs. • Single-bit errors are automatically corrected. • Multi-bit errors are detected and will cause the system to immediately reboot and halt with an F1 prompt error message. Non-ECC memory does not detect or correct single-bit or multi-bit errors which can cause instability, or corruption of data, in the platform. See the Memory Technology White Paper for additional technical information. Command and Address parity is supported with Registered DIMMs. Platform Capabilities: Maximum capacity • Single processor: 64 GB • Dual processors: 96 GB Total of 12 memory sockets • 8 sockets on the motherboard: 4 channels per processor and 2 sockets per channel • 4 sockets on the 2nd CPU and memory module: 4 channels per processor and 1 [...] Optimize Performance: Generally, maximum memory performance is achieved by evenly distributing total desired memory capacity across all operational channels. Proper individual DIMM capacity selection is essential to maximizing performance. On the second CPU, installing the same amount of memory as the first CPU will optimize performance. -

Interfacing EPROM to the 8088

Chapter 10: Memory Interface. Introduction: Simple or complex, every microprocessor-based system has a memory system. Almost all systems contain two main types of memory: read-only memory (ROM) and random access memory (RAM) or read/write memory. This chapter explains how to interface both memory types to the Intel family of microprocessors. Memory Devices: Before attempting to interface memory to the microprocessor, it is essential to understand the operation of memory components. In this section, we explain functions of the four common types of memory: read-only memory (ROM), Flash memory (EEPROM), static random access memory (SRAM), and dynamic random access memory (DRAM). Memory Pin Connections: address inputs; data outputs or input/outputs; some type of selection input; at least one control input to select a read or write operation. Figure 10–1: A pseudomemory component illustrating the address, data, and control connections. Address Connections: Memory devices have address inputs to select a memory location within the device. These are almost always labeled from A0, the least significant address input, to An, where subscript n is always one less than the total number of address pins. A memory device with 10 address pins has its address pins labeled from A0 to A9. The number of address pins on a memory device is determined by the number of memory locations found within it. Today, common memory devices have between 1K (1024) and 1G (1,073,741,824) memory locations, with 4G and larger devices on the horizon. A 1K memory device has 10 address pins; therefore, 10 address inputs are required to select any of its 1024 memory locations. It takes a 10-bit binary number to select any single location on a 1024-location device. -

Benchmarking the Intel FPGA SDK for OpenCL Memory Interface

The Memory Controller Wall: Benchmarking the Intel FPGA SDK for OpenCL Memory Interface Hamid Reza Zohouri*†1, Satoshi Matsuoka*‡ *Tokyo Institute of Technology, †Edgecortix Inc. Japan, ‡RIKEN Center for Computational Science (R-CCS) {zohour.h.aa@m, matsu@is}.titech.ac.jp Abstract: Supported by their high power efficiency and recent advancements in High Level Synthesis (HLS), FPGAs are quickly finding their way into HPC and cloud systems. Large amounts of work have been done so far on loop and area optimizations for different applications on FPGAs using HLS. However, a comprehensive analysis of the behavior and efficiency of the memory controller of FPGAs is missing in literature, which becomes even more crucial when the limited memory bandwidth of modern FPGAs compared to their GPU counterparts is taken into account. In this work, we will analyze the memory interface generated by Intel FPGA SDK for OpenCL with different configurations for input/output arrays, vector size, interleaving, kernel programming model, on-chip channels, operating frequency, padding, and multiple types of overlapped blocking. Our results point to multiple shortcomings in the memory controller of Intel FPGAs, especially with respect to memory access alignment, that can hinder the programmer’s [...] efficiency on Intel FPGAs with different configurations for input/output arrays, vector size, interleaving, kernel programming model, on-chip channels, operating frequency, padding, and multiple types of blocking. • We outline one performance bug in Intel’s compiler, and multiple deficiencies in the memory controller, leading to significant loss of memory performance for typical applications. In some of these cases, we provide work-arounds to improve the memory performance. II. METHODOLOGY A. Memory Benchmark Suite: For our evaluation, we develop an open-source benchmark suite called FPGAMemBench, available at https://github.com/zohourih/FPGAMemBench. -

A Modern Primer on Processing in Memory

A Modern Primer on Processing in Memory. Onur Mutlu (a,b), Saugata Ghose (b,c), Juan Gómez-Luna (a), Rachata Ausavarungnirun (d). SAFARI Research Group. (a) ETH Zürich, (b) Carnegie Mellon University, (c) University of Illinois at Urbana-Champaign, (d) King Mongkut’s University of Technology North Bangkok. Abstract: Modern computing systems are overwhelmingly designed to move data to computation. This design choice goes directly against at least three key trends in computing that cause performance, scalability and energy bottlenecks: (1) data access is a key bottleneck as many important applications are increasingly data-intensive, and memory bandwidth and energy do not scale well, (2) energy consumption is a key limiter in almost all computing platforms, especially server and mobile systems, (3) data movement, especially off-chip to on-chip, is very expensive in terms of bandwidth, energy and latency, much more so than computation. These trends are especially severely-felt in the data-intensive server and energy-constrained mobile systems of today. At the same time, conventional memory technology is facing many technology scaling challenges in terms of reliability, energy, and performance. As a result, memory system architects are open to organizing memory in different ways and making it more intelligent, at the expense of higher cost. The emergence of 3D-stacked memory plus logic, the adoption of error correcting codes inside the latest DRAM chips, proliferation of different main memory standards and chips, specialized for different purposes (e.g., graphics, low-power, high bandwidth, low latency), and the necessity of designing new solutions to serious reliability and security issues, such as the RowHammer phenomenon, are an evidence of this trend. -

Itanium-Based Solutions by HP

Itanium-based solutions by hp: an overview of the Itanium™-based hp rx4610 server. A white paper from hewlett-packard, june 2001. Table of contents: executive summary; why Itanium is the future of computing; rx4610 at a glance; rx4610 product specifications; rx4610 physical and environmental specifications; the rx4610 and the hp server lineup; rx4610 architecture; 64-bit address space and memory capacity; I/O subsystem design; special features of the rx4610 server; multiple upgrade and migration paths for investment protection; high availability and manageability; advanced error detection, correction, and containment; baseboard management controller (BMC); redundant, hot-swap power supplies; redundant, hot-swap cooling; hot-plug disk drives; hot-plug PCI I/O slots; internal removable media; system control panel; ASCII console for hp-ux; space-saving rack density; complementary design and packaging; how hp makes the Itanium transition easy; binary compatibility; hp-ux operating system; seamless transition, even for home-grown applications; transition help from hp; Itanium quick start service; partner technology access centers; upgrades and financial incentives; conclusion; for more information; appendix: Itanium advantages in your computing future; hp’s CPU roadmap; Itanium processor architecture; predication enhances parallelism; speculation minimizes the effect of memory latency; inherent scalability delivers easy expansion; what this means in a server. Executive summary: The Itanium™ Processor Family is the next great stride in computing--and it’s here today. -

Advanced x86

Advanced x86: BIOS and System Management Mode Internals Input/Output Xeno Kovah && Corey Kallenberg LegbaCore, LLC All materials are licensed under a Creative Commons “Share Alike” license. http://creativecommons.org/licenses/by-sa/3.0/ Attribution condition: You must indicate that derivative work "Is derived from John Butterworth & Xeno Kovah’s ’Advanced Intel x86: BIOS and SMM’ class posted at http://opensecuritytraining.info/IntroBIOS.html” Input/Output (I/O) I/O, I/O, it’s off to work we go… 2 Types of I/O 1. Memory-Mapped I/O (MMIO) 2. Port I/O (PIO) – Also called Isolated I/O or port-mapped IO (PMIO) • X86 systems employ both types of I/O • Both methods map peripheral devices • Address space of each is accessed using instructions – typically requires Ring 0 privileges – Real-Addressing mode has no implementation of rings, so no privilege escalation needed • I/O ports can be mapped so that they appear in the I/O address space or the physical-memory address space (memory mapped I/O) or both – Example: PCI configuration space in a PCIe system – both memory-mapped and accessible via port I/O. We’ll learn about that in the next section • The I/O Controller Hub contains the registers that are located in both the I/O Address Space and the Memory-Mapped address space Memory-Mapped I/O • Devices can also be mapped to the physical address space instead of (or in addition to) the I/O address space • Even though it is a hardware device on the other end of that access request, you can operate on it like it's memory: – Any of the processor’s instructions -

Motorola MPC107 PCI Bridge/Integrated Memory Controller

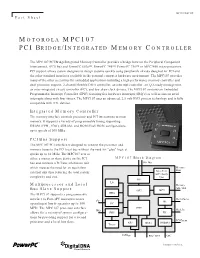

MPC107FACT/D Fact Sheet MOTOROLA MPC107 PCI BRIDGE/INTEGRATED MEMORY CONTROLLER The MPC107 PCI Bridge/Integrated Memory Controller provides a bridge between the Peripheral Component Interconnect (PCI) bus and PowerPC 603e™, PowerPC 740™, PowerPC 750™ or MPC7400 microprocessors. PCI support allows system designers to design systems quickly using peripherals already designed for PCI and the other standard interfaces available in the personal computer hardware environment. The MPC107 provides many of the other necessities for embedded applications including a high-performance memory controller and dual processor support, 2-channel flexible DMA controller, an interrupt controller, an I2O-ready message unit, an inter-integrated circuit controller (I2C), and low skew clock drivers. The MPC107 contains an Embedded Programmable Interrupt Controller (EPIC) featuring five hardware interrupts (IRQs) as well as sixteen serial interrupts along with four timers. The MPC107 uses an advanced, 2.5-volt HiP3 process technology and is fully compatible with TTL devices. Integrated Memory Controller: The memory interface controls processor and PCI interactions to main memory. It supports a variety of programmable timing supporting DRAM (FPM, EDO), SDRAM, and ROM/Flash ROM configurations, up to speeds of 100 MHz. PCI Bus Support: The MPC107 PCI interface is designed to connect the processor and memory buses to the PCI local bus without the need for "glue" logic at speeds up to 66 MHz. The MPC107 acts as either a master or slave device on the PCI bus and contains a PCI bus arbitration unit which reduces the need for an equivalent external unit, thus reducing the total system complexity and cost. [Figure: MPC107 Block Diagram (60x Bus, Memory Data, Data Path, ECC / Parity)] -

The Impulse Memory Controller

IEEE TRANSACTIONS ON COMPUTERS, VOL. 50, NO. 11, NOVEMBER 2001 The Impulse Memory Controller John B. Carter, Member, IEEE, Zhen Fang, Student Member, IEEE, Wilson C. Hsieh, Sally A. McKee, Member, IEEE, and Lixin Zhang, Student Member, IEEE Abstract: Impulse is a memory system architecture that adds an optional level of address indirection at the memory controller. Applications can use this level of indirection to remap their data structures in memory. As a result, they can control how their data is accessed and cached, which can improve cache and bus utilization. The Impulse design does not require any modification to processor, cache, or bus designs since all the functionality resides at the memory controller. As a result, Impulse can be adopted in conventional systems without major system changes. We describe the design of the Impulse architecture and how an Impulse memory system can be used in a variety of ways to improve the performance of memory-bound applications. Impulse can be used to dynamically create superpages cheaply, to dynamically recolor physical pages, to perform strided fetches, and to perform gathers and scatters through indirection vectors. Our performance results demonstrate the effectiveness of these optimizations in a variety of scenarios. Using Impulse can speed up a range of applications from 20 percent to over a factor of 5. Alternatively, Impulse can be used by the OS for dynamic superpage creation; the best policy for creating superpages using Impulse outperforms previously known superpage creation policies. Index Terms: Computer architecture, memory systems. 1 INTRODUCTION Since 1987, microprocessor performance has improved at a rate of 55 percent per year; in contrast, DRAM latencies have improved by only 7 percent per year and DRAM [...] memory. By giving applications control (mediated by the OS) over the use of shadow addresses, Impulse supports application-specific optimizations that restructure data. -

Optimizing Thread Throughput for Multithreaded Workloads on Memory Constrained CMPs

Optimizing Thread Throughput for Multithreaded Workloads on Memory Constrained CMPs Major Bhadauria and Sally A. McKee Computer Systems Lab Cornell University Ithaca, NY, USA [email protected], [email protected] ABSTRACT Multi-core designs have become the industry imperative, replacing our reliance on increasingly complicated micro-architectural designs and VLSI improvements to deliver increased performance at lower power budgets. Performance of these multi-core chips will be limited by the DRAM memory system: we demonstrate this by modeling a cycle-accurate DDR2 memory controller with SPLASH-2 workloads. Surprisingly, benchmarks that appear to scale well with the number of processors fail to do so when memory is accurately modeled. We frequently find that the most efficient configuration is not the one with the most threads. By choosing the most efficient number of threads for each benchmark, average energy delay efficiency improves by a factor of 3.39, and performance improves by 19.7%, on average. 1. INTRODUCTION Power and thermal constraints have begun to limit the maximum operating frequency of high performance processors. The cubic increase in power from increases in frequency and higher voltages required to attain those frequencies has reached a plateau. By leveraging increasing die space for more processing cores (creating chip multiprocessors, or CMPs) and larger caches, designers hope that multi-threaded programs can exploit shrinking transistor sizes to deliver equal or higher throughput as single-threaded, single-core predecessors. The current software paradigm is based on the assumption that multi-threaded programs with little contention for shared data scale (nearly) linearly with the number of processors, yielding power-efficient data through- [...] -

COSC 6385 Computer Architecture - Multi-Processors (IV) Simultaneous Multi-Threading and Multi-Core Processors Edgar Gabriel Spring 2011

COSC 6385 Computer Architecture - Multi-Processors (IV) Simultaneous multi-threading and multi-core processors Edgar Gabriel Spring 2011 Moore’s Law • Long-term trend on the number of transistors per integrated circuit • Number of transistors doubles every ~18 months Source: http://en.wikipedia.org/wki/Images:Moores_law.svg What do we do with that many transistors? • Optimizing the execution of a single instruction stream through – Pipelining • Overlap the execution of multiple instructions • Example: all RISC architectures; Intel x86 underneath the hood – Out-of-order execution: • Allow instructions to overtake each other in accordance with code dependencies (RAW, WAW, WAR) • Example: all commercial processors (Intel, AMD, IBM, SUN) – Branch prediction and speculative execution: • Reduce the number of stall cycles due to unresolved branches • Example: (nearly) all commercial processors What do we do with that many transistors? (II) – Multi-issue processors: • Allow multiple instructions to start execution per clock cycle • Superscalar (Intel x86, AMD, …) vs. VLIW architectures – VLIW/EPIC architectures: • Allow compilers to indicate independent instructions per issue packet • Example: Intel Itanium series – Vector units: • Allow for the efficient expression and execution of vector operations • Example: SSE, SSE2, SSE3, SSE4 instructions Limitations of optimizing a single instruction -

IMME128M64D2SOS8AG (Die Revision E) 1GByte (128M x 64 Bit)

Product Specification | Rev. 1.0 | 2015 IMME128M64D2SOS8AG (Die Revision E) 1GByte (128M x 64 Bit) 1GB DDR2 Unbuffered SO-DIMM By ECC DRAM RoHS Compliant Product www.intelligentmemory.com Version: Rev. 1.0, FEB 2015 1.0 - Initial release Remark: Please refer to the last page of the i) Contents ii) List of Table iii) List of Figures. We Listen to Your Comments: Any information within this document that you feel is wrong, unclear or missing at all? Your feedback will help us to continuously improve the quality of this document. Please send your proposal (including a reference to this document) to: [email protected] Features: 200-Pin Unbuffered Small Outline Dual-In-Line Memory Module; Capacity: 1GB; Maximum Data Transfer Rate: 6.40 GB/Sec; JEDEC-Standard; Built by ECC DRAM Chips; Power Supply: VDD, VDDQ = 1.8 ± 0.1 V; Bi-directional Differential Data-Strobe (Single-ended data-strobe is an optional feature); 64 Bit Data Bus Width without ECC; Programmable CAS Latency (CL): PC2-6400: 4, 5, 6 and PC2-5300: 4, 5; Programmable Additive Latency (Posted /CAS): 0, CL-2 or CL-1 (Clock); Write Latency (WL) = Read Latency (RL) - 1; Posted /CAS; On-Die Termination (ODT); Off-Chip Driver (OCD) Impedance Adjustment; Burst Type (Sequential & Interleave); Burst Length: 4, 8; Refresh Mode: Auto and Self; 8192 Refresh Cycles / 64ms; Serial Presence Detect (SPD) with EEPROM; SSTL_18 Interface; Gold Edge Contacts; 100% RoHS-Compliant; Standard Module Height: 30.00mm (1.18 inch). ECC DRAM Introduction: Special Features (ECC Functionality) - Embedded error correction code (ECC) functionality corrects single bit errors within each 64 bit memory-word. -

WP127: "Embedded System Design Considerations" V1.0 (03/06/2002)

White Paper: Virtex-II Series WP127 (v1.0) March 6, 2002 Embedded System Design Considerations By: David Naylor Embedded systems see a steadily increasing bandwidth mismatch between raw processor MIPS and surrounding components. System performance is not solely dependent upon processor capability. While a processor with a higher MIPS specification can provide incremental system performance improvement, eventually the lagging surrounding components become a system performance bottleneck. This white paper examines some of the factors contributing to this. The analysis of bus and component performance leads to the conclusion that matching of processor and component performance provides a good cost-performance trade-off. © 2002 Xilinx, Inc. All rights reserved. All Xilinx trademarks, registered trademarks, patents, and disclaimers are as listed at http://www.xilinx.com/legal.htm. All other trademarks and registered trademarks are the property of their respective owners. All specifications are subject to change without notice. www.xilinx.com 1-800-255-7778 Introduction: Today’s systems are composed of a hierarchy, or layers, of subsystems with varying access times. Figure 1 depicts a layered performance pyramid with slower system components in the wider, lower layers and faster components nearer the top. The upper six layers represent an embedded system. In descending order of speed, the layers in this pyramid are as follows: 1. CPU 2. Cache memory 3. Processor Local Bus (PLB) 4. Fast and small Static Random Access Memory (SRAM) subsystem 5. Slow and large Dynamic Random Access Memory (DRAM) subsystem 6.