MT005-Hamaker

Total pages: 16

File type: PDF, size: 1020 KB


Recommended publications


GEO and GEOSS……

GEO - GEOSS Support of the CBD 2010 Targets CBD - SBSTTA Paris, 4 July 2007 Douglas Muchoney, GEO Secretariat © GEO Secretariat The Earth is a complex system of systems Any Single Problem Requires Many Data Sets A Single Data Set Will Serve Many Communities Solar Energy GEOSS Implementation is a Non-binding, Voluntary Process • Relies on the Goodwill of Members and Participating Organizations • Efficient for Contribution of Components • Not a Funding Mechanism • GEO implements GEOSS GEO Goal Improve and Coordinate Observation Systems Provide Easier & More Open Data Access Foster Use (Science, Applications, Capacity Bldg) … to answer Society’s need for informed decision making GEOSS: A Global, Coordinated, Comprehensive and Sustained System of Observing Systems GEOSS will Address Nine Societal Benefit Areas 1. Reduction and Prevention of Disasters 2. Human Health and Epidemiology 3. Energy Management 4. Climate Variability & Change 5. Water Management 6. Weather Forecasting 7. Ecosystems 8. Agriculture 9. Biodiversity GEOSS achievement through 5 Transverse Areas 1. Architecture 2. Data Management 3. User Engagement 4. Capacity Building 5. Outreach Envisat SORCE Aura/Aqua/Terra Sage QuikScat IKONOS CBERS SeaWiFS SPOT 4, 5 SPIN-2 SeaWinds Orbview 2, 3 TRMM DMC ACRIMSAT EROS A1 ERBS Radarsat ALOS Toms-EP QuickBird Grace Landsat 7 Jason UARS Space Observation Systems In Situ Observation Systems GEO Societal Benefit Areas 1. Reduction and Prevention of Disasters 2. Human Health 3. Energy Management 4. Climate Change 5. Water Management 6. Weather Forecasting 7. Ecosystems 8. Agriculture 9. -

Highlights in Space 2010

International Astronautical Federation Committee on Space Research International Institute of Space Law 94 bis, Avenue de Suffren c/o CNES 94 bis, Avenue de Suffren UNITED NATIONS 75015 Paris, France 2 place Maurice Quentin 75015 Paris, France Tel: +33 1 45 67 42 60 Fax: +33 1 42 73 21 20 Tel. + 33 1 44 76 75 10 E-mail: [email protected] E-mail: [email protected] Fax. + 33 1 44 76 74 37 URL: www.iislweb.com OFFICE FOR OUTER SPACE AFFAIRS URL: www.iafastro.com E-mail: [email protected] URL: http://cosparhq.cnes.fr Highlights in Space 2010 Prepared in cooperation with the International Astronautical Federation, the Committee on Space Research and the International Institute of Space Law The United Nations Office for Outer Space Affairs is responsible for promoting international cooperation in the peaceful uses of outer space and assisting developing countries in using space science and technology. United Nations Office for Outer Space Affairs P. O. Box 500, 1400 Vienna, Austria Tel: (+43-1) 26060-4950 Fax: (+43-1) 26060-5830 E-mail: [email protected] URL: www.unoosa.org United Nations publication Printed in Austria USD 15 Sales No. E.11.I.3 ISBN 978-92-1-101236-1 ST/SPACE/57 V.11-80239 - January 2011 - 775 UNITED NATIONS OFFICE FOR OUTER SPACE AFFAIRS UNITED NATIONS OFFICE AT VIENNA Progress in space science, technology and applications, international cooperation and space law UNITED NATIONS New York, 2011 United Nations publication Sales No. -

Type Here Your Title

GEO-GLAM GLOBAL AGRICULTURAL MONITORING Jai Singh Parihar for the GEOGLAM group and GEO Agriculture Community of Practice Envisat SORCE Aura/Aqua/Terra Sage QuikScat IKONOS CBERS SeaWiFS SPOT 4, 5 SPIN-2 SeaWinds Orbview 2, 3 TRMM DMC ACRIMSAT EROS A1 ERBS Radarsat ALOS Toms-EP QuickBird Grace Landsat 7 Jason UARS © GEO Secretariat Cereal grain production per hectare (Source: SAGE, Univ. of Wisconsin, Madison, Wisc., USA) 5th GEOSS-Asia Pacific Symposium, Tokyo, April 2-4, 2012 1. The GEO-GLAM Initiative: Objectives To reinforce the international community’s capacity to produce and disseminate relevant, timely and accurate forecasts of agricultural production at national, regional and global scales. Agronomy Earth Observation Agro-economy Cultivated area / crop type area Meteorology Crop yield forecast 1. The GEO-GLAM Initiative: Background (G20 2011) G20 Final Declaration (Nov. 2011) 44. We commit to improve market information and transparency in order to make international markets for agricultural commodities more effective. To that end, we launched: • The "Agricultural Market Information System" (AMIS) in Rome on September 15, 2011, to improve information on markets ...; • The "Global Agricultural Geo-monitoring Initiative" (GEOGLAM) in Geneva on September 22-23, 2011, to coordinate satellite monitoring observation systems … to enhance crop production projections ... 2. -

Precision Optics and Assemblies

PRECISION OPTICS AND ASSEMBLIES Image: NASA Wide Field Infrared Survey Telescope (WFIRST) High-performance, reliable optics for ground and space-based observation Dazzling details of Earth and insights into the mysteries of the universe have one thing in common: L3Harris precision optics. High-performance optical components and assemblies have been integral to the world’s most sophisticated ground- and space-based telescopes and satellites for more than 50 years. BENEFITS > Meet the most demanding requirements for spatial frequency, mounting and application-specific needs > Provide end-to-end capabilities with cost-effective fabrication > Create surface finish to less than two-nanometer root mean square (RMS) on glass and glass ceramic materials L3Harris provides solutions for high-performance systems, including: > Ground- and space-based telescopes > Solid and lightweight optics for autocollimating systems > Lightweight fast-steering mirrors > High-energy laser optics > Optical test systems > Relay mirrors > Observation and vacuum compatible viewing windows SPECIALIZING IN CUSTOM, LARGE-SCALE OPTICS L3Harris provides optomechanical design, optical fabrication, test and assembly capabilities for precision optics solutions and systems. The company manufactures large optical components, including lightweight and solid mirrors in most geometric shapes, to meet low surface microroughness and tight surface figure error specifications. This experience, along with a rigorous metrology and testing program and state-of-the-art in-house facilities, -

Platforms & Sensors

PLATFORMS & SENSORS Platform: the vehicle carrying the remote sensing device Sensor: the remote sensing device recording wavelengths of energy e.g. Aerial photography - the plane and the camera Satellite image example: Platform: Landsat (1, 5, 7 etc..) Sensor: Multispectral Sensor (MSS) or Thematic Mapper (TM) Selected satellite remote sensing systems NASA Visible Earth: long list Wim Bakker's website http://members.home.nl/wim.h.bakker http://earthobservatory.nasa.gov/IOTD/view.php?id=52174 1. Satellite orbits “Sun-synchronous” “Geostationary” Land monitoring Weather satellites ~ 700 km altitude ~ 30,000 km altitude Satellite orbits Geostationary / geosynchronous : 36,000 km above the equator, stays vertically above the same spot, rotates with earth - weather images, e.g. GOES (Geostat. Operational Env. Satellite) Sun-synchronous satellites: 700-900 km altitude, rotates at circa 81-82 degree angle to equator: captures imagery approx the same time each day (10am +/- 30 minutes) - Landsat path: earthnow Sun-synchronous Graphic: http://ccrs.nrcan.gc.ca/resource/tutor/datarecept/c1p2_e.php 700-900 km altitude rotates at ~ 81-82 ° angle to the equator (near polar): captures imagery the same time each day (10.30am +/- 30 minutes) - for earth mapping Orbit every 90-100 minutes produces similar daytime lighting Geostationary satellites capture a (rectangular) scene, sun-synchronous satellites capture a continuous swath, … which is broken into rectangular scenes. 2. Scanner types Whiskbroom (mirror/ cross-track): a small number of sensitive diodes for each band sweep perpendicular to the path or swath, centred directly under the platform, i.e. at 'nadir' e.g. LANDSAT MSS /TM Pushbroom (along-track): an array of diodes (one for each column of pixels) is 'pointed' in a selected direction, nadir or off-nadir, on request, usually 0-30 degrees (max.), e.g. -

EDITOR's in This Issue

Earth Observing System THE EARTH OBSERVER May/June 2001 Vol. 13 No. 3 In this issue: SCIENCE MEETINGS Joint Advanced Microwave Scanning Radiometer (AMSR) Science Team Meeting; Clouds and The Earth’s Radiant Energy System (CERES) Science Team Meeting; The ACRIMSAT/ACRIM3 Experiment: Extending the Precision, Long-Term Total Solar Irradiance Climate Database. SCIENCE ARTICLES Summary of the International Workshop on LAI Product Validation; An Introduction to the Federation of Earth Science Information Partners; Tools and Systems for EOS Data; Status and Plans for HDF-EOS, NASA’s Format for EOS Standard Products. EDITOR’S Michael King EOS Senior Project Scientist I’m pleased to announce that NASA’s Earth Science Enterprise is sponsoring a problem for the Odyssey of the Mind competitions during the 2001-2002 school year. It is a technical problem involving an original performance about environmental preservation. The competitions will involve about 450,000 students from kindergarten through college worldwide, and will culminate in a World Finals next May in Boulder, CO where the “best of the best” will compete for top awards in the international competitions. It is estimated that we reach 1.5 to 2 million students, parents, teachers/administrators, coaches, etc. internationally through participation in this program. The Odyssey of the Mind Program fosters creative thinking and problem-solving skills among participating students from kindergarten through college. Students solve problems in a variety of areas from building mechanical devices such as spring-driven vehicles to giving their own interpretation of literary classics. Through solving problems, students learn lifelong skills such as working with others as a team, evaluating ideas, making decisions, -

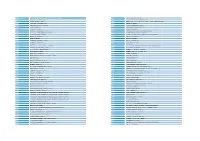

List of Satellite Missions (By Year and Sponsoring

Launch Year, EO Satellite Mission (and sponsoring agency): 1967 Diademe 1&2 (CNES); 1975 STARLETTE (CNES); 1976 LAGEOS-1 (NASA / ASI); 1992 LAGEOS-2 (ASI / NASA); 1993 SCD-1 (INPE); 1993 STELLA (CNES); 1997 DMSP F-14 (NOAA / USAF); 1997 Meteosat-7 (EUMETSAT / ESA); 1997 TRMM (NASA / JAXA); 1998 NOAA-15 (NOAA); 1998 SCD-2 (INPE); 1999 Landsat 7 (USGS / NASA); 1999 QuikSCAT (NASA); 1999 Ikonos-2; 1999 Ørsted (Oersted) (DNSC / CNES); 1999 DMSP F-15 (NOAA / USAF); 1999 Terra (NASA / METI / CSA); 1999 ACRIMSAT (NASA); 2000 NMP EO-1 (NASA); 2001 Odin (SNSB / TEKES / CNES / CSA); 2001 QuickBird-2; 2001 PROBA (ESA); 2002 GRACE (NASA / DLR); 2002 Aqua (NASA / JAXA / INPE); 2002 SPOT-5 (CNES); 2002 Meteosat-8 (EUMETSAT / ESA); 2002 KALPANA-1 (ISRO); 2003 CORIOLIS (DoD (USA)); 2003 SORCE (NASA); 2008 CARTOSAT-2A (ISRO); 2008 FY-3A (NSMC-CMA / NRSCC); 2008 OSTM (Jason-2) (NASA / NOAA / CNES / EUMETSAT); 2008 RapidEye (DLR); 2008 HJ-1A (CRESDA / CAST); 2008 HJ-1B (CRESDA / CAST); 2008 THEOS (GISTDA); 2008 COSMO-SkyMed 3 (ASI / MoD (Italy)); 2008 FY-2E (NSMC-CMA / NRSCC); 2009 GOSAT (JAXA / MOE (Japan) / NIES (Japan)); 2009 NOAA-19 (NOAA); 2009 RISAT-2 (ISRO); 2009 GOES-14 (NOAA); 2009 UK-DMC2 (UKSA); 2009 Deimos-1; 2009 Meteor-M N1 (ROSHYDROMET / ROSKOSMOS); 2009 OCEANSAT-2 (ISRO); 2009 DMSP F-18 (NOAA / USAF); 2009 SMOS (ESA / CDTI / CNES); 2010 GOES-15 (NOAA); 2010 CryoSat-2 (ESA); 2010 TanDEM-X (DLR); 2010 COMS (KARI); 2010 AISSat-1 (NSC); 2010 CARTOSAT-2B (ISRO); 2010 FY-3B (NSMC-CMA / NRSCC); 2010 COSMO-SkyMed 4 (ASI / MoD (Italy)); 2011 Elektro-L N1 (ROSKOSMOS / ROSHYDROMET); 2011 RESOURCESAT-2 (ISRO) -

Trends in Space Commerce

Foreword from the Secretary of Commerce As the United States seeks opportunities to expand our economy, commercial use of space resources continues to increase in importance. The use of space as a platform for increasing the benefits of our technological evolution continues to increase in a way that profoundly affects us all. Whether we use these resources to synchronize communications networks, to improve agriculture through precision farming assisted by imagery and positioning data from satellites, or to receive entertainment from direct-to-home satellite transmissions, commercial space is an increasingly large and important part of our economy and our information infrastructure. Once dominated by government investment, commercial interests play an increasing role in the space industry. As the voice of industry within the U.S. Government, the Department of Commerce plays a critical role in commercial space. Through the National Oceanic and Atmospheric Administration, the Department of Commerce licenses the operation of commercial remote sensing satellites. Through the International Trade Administration, the Department of Commerce seeks to improve U.S. industrial exports in the global space market. Through the National Telecommunications and Information Administration, the Department of Commerce assists in the coordination of the radio spectrum used by satellites. And, through the Technology Administration's Office of Space Commercialization, the Department of Commerce plays a central role in the management of the Global Positioning System and advocates the views of industry within U.S. Government policy making processes. I am pleased to commend for your review the Office of Space Commercialization's most recent publication, Trends in Space Commerce. The report presents a snapshot of U.S. -

Geoeye Corp Overview

GeoEye Corporate Overview Presented to XIII Simposio Brasileiro de Sensoriamento Remoto April 24th, 2007 Revised: April 2007 About GeoEye • GeoEye is a leading producer of satellite, aerial and geospatial information • Core Capabilities – 2 remote-sensing satellites; 3rd this fall – 2 aircraft with digital mapping capability – Advanced geospatial imagery processing capability – World’s largest satellite image archive: > 275 sq km – International network of regional ground stations to directly task, receive and process high resolution imagery • GeoEye delivers high quality satellite imagery and products to better map, measure and monitor the world Milestones 2007 • Scheduled launch for GeoEye-1 March 2006 • GeoEye acquires MJ Harden Sept • GeoEye begins trading on NASDAQ Jan • GeoEye acquires Space Imaging 2004 Sept • GeoEye Wins $500M DoD NextView contract 2003 Jun • Launch of OV-3 1999 Sept • Launch of IKONOS 1997 Aug • Launch of OrbView-2 1992 Nov • Predecessor company founded Company Offerings: Imagery • Extensive Commercial Satellite Imagery Archive – IKONOS and OrbView-3 combined archive: 278 million sq km as of April 2007 – Online search for archive imagery Niagara Falls, NY Frankfurt Airport, Germany Company Offerings: Value Added Applications & Production • Select Imagery Applications – National Security & Intelligence – Online Mapping / Search Engines – Homeland Defense – Oil & Gas and Mining – Air and Marine Transportation – Insurance & Risk Management – Digital Planimetric & Topographic Mapping – Mobile GIS Services 3-D Fly Through • Value-Added Production – Fused images, digital elevation models (DEMs), land-use classification maps – World class facilities in: • St. Louis, MO • Thornton, CO • Dulles, VA • Mission, KS Vector Elevation Image Bundled Product Layers Company Offerings: Capacity • Satellite access • Aerial image acquisition • Ground stations – Infrastructure / Upgrades – Operations, maintenance and training Satellite Imagery can be sold almost anywhere. -

Moderate Resolution Remote Sensing Alternatives: a Review of Landsat-Like Sensors and Their Applications

Journal of Applied Remote Sensing, Vol. 1, 012506 (9 November 2007) Moderate resolution remote sensing alternatives: a review of Landsat-like sensors and their applications Scott L. Powell (a, *), Dirk Pflugmacher (b), Alan A. Kirschbaum (b), Yunsuk Kim (b), and Warren B. Cohen (a) (a) USDA Forest Service, Pacific Northwest Research Station, Corvallis Forestry Sciences Laboratory, Corvallis, OR, 97331, USA (b) Oregon State University, Department of Forest Science, Corvallis, OR, 97331, USA (*) Corresponding author: [email protected] Abstract. Earth observation with Landsat and other moderate resolution sensors is a vital component of a wide variety of applications across disciplines. Despite the widespread success of the Landsat program, recent problems with Landsat 5 and Landsat 7 create uncertainty about the future of moderate resolution remote sensing. Several other Landsat-like sensors have demonstrated applicability in key fields of earth observation research and could potentially complement or replace Landsat. The objective of this paper is to review the range of applications of 5 satellite suites and their Landsat-like sensors: SPOT, IRS, CBERS, ASTER, and ALI. We give a brief overview of each sensor, and review the documented applications in several earth observation domains, including land cover classification, forests and woodlands, agriculture and rangelands, and urban. We conclude with suggestions for further research into the fields of cross-sensor comparison and multi-sensor fusion. This paper is significant because it provides the remote sensing community a concise synthesis of Landsat-like sensors and research demonstrating their capabilities. It is also timely because it provides a framework for evaluating the range of Landsat alternatives, and strategies for minimizing the impact of a possible Landsat data gap. -

10. Spacecraft Configurations MAE 342 2016

2/12/20 Spacecraft Configurations Space System Design, MAE 342, Princeton University Robert Stengel • Angular control approaches • Low-Earth-orbit configurations – Satellite buses – Nanosats/cubesats – Earth resources satellites – Atmospheric science and meteorology satellites – Navigation satellites – Communications satellites – Astronomy satellites – Military satellites – Tethered satellites • Lunar configurations • Deep-space configurations Copyright 2016 by Robert Stengel. All rights reserved. For educational use only. http://www.princeton.edu/~stengel/MAE342.html Angular Attitude of Satellite Configurations • Spinning satellites – Angular attitude maintained by gyroscopic moment • Randomly oriented satellites and magnetic coil – Angular attitude is free to vary – Axisymmetric distribution of mass, solar cells, and instruments Television Infrared Observation (TIROS-7) Orbital Satellite Carrying Amateur Radio (OSCAR-1) ESSA-2 TIROS “Cartwheel” Attitude-Controlled Satellite Configurations • Dual-spin satellites – Angular attitude maintained by gyroscopic moment and thrusters – Axisymmetric distribution of mass and solar cells – Instruments and antennas do not spin • Attitude-controlled satellites – Angular attitude maintained by 3-axis control system – Non-symmetric distribution of mass, solar cells and instruments INTELSAT-IVA NOAA-17 Satellite Buses Standardization of common components for a variety of missions LADEE Bus Modules Modular Common Spacecraft Bus Lander Configuration Hine et al Evolution -

CRS Issue Brief for Congress Received Through the CRS Web

Order Code IB92011 CRS Issue Brief for Congress Received through the CRS Web U.S. Space Programs: Civilian, Military, and Commercial Updated June 13, 2000 Marcia S. Smith Resources, Science, and Industry Division Congressional Research Service The Library of Congress CONTENTS SUMMARY MOST RECENT DEVELOPMENTS BACKGROUND AND ANALYSIS U.S. Government Civilian Space Programs National Aeronautics and Space Administration (NASA) Human Spaceflight Space Science and Applications Other Civilian Government Agencies Commercial Space Programs Military Space Programs Interagency Coordination International Cooperation and Competition NASA and DOD Space Budgets Space Program Issues NASA’s Current Challenges Military Space in the Post-Cold War Era Space Launch Vehicles: 1998-1999 Failures and Developing New Systems Environment Commercial Space and Trade Issues Ballistic Missile Proliferation International Relationships LEGISLATION SUMMARY The 106th Congress is addressing a broad range of civilian, military, and commercial space issues. The National Aeronautics and Space Administration (NASA) conducts the most visible space activities. NASA’s International Space Station (ISS) program is the most controversial because it is over budget, behind schedule, and relies on Russia for some hardware and services. Nevertheless, it has survived 21 termination attempts in NASA … FY2001 request. Civilian and military satellites provide data useful for environmental objectives. NASA’s Earth Observing System has been controversial for several years because of its cost and other issues. A probe proposed by Vice President Gore called Triana to transmit images of the Earth to the Internet is the current focus of controversy, however. Proponents insist that it will provide important scientific data.